
This article covers the Open Camera 1.3 release (Open Camera is an Android camera app) and gathers four related write-ups on the Android camera stack: Android 5.1 Camera architecture (1): Camera initialization; Android Camera internals: camera HAL data structures and classes; Android Camera internals: sessions between camera service and camera provider, and capture request flow; and Android Camera flow notes (1): basic Camera architecture.

Contents:

- Open Camera 1.3 released: an Android camera app

- Android 5.1 Camera architecture (1): Camera initialization

- Android Camera internals: camera HAL data structures and classes

- Android Camera internals: sessions between camera service and camera provider, and capture request flow

- Android Camera flow notes (1): basic Camera architecture

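Open Camera 1.3 released: an Android camera app

Open Camera 1.3 adds a burst mode with a configurable delay, lets you set the video resolution, shows the battery status on screen, and reorganizes the settings screen.

Open Camera is an open-source camera app for Android phones and tablets.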

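Android 5.1 Camera architecture (1): Camera initialization

Android Camera uses a client/server architecture. The client and the server (CameraService) run in separate processes and communicate over Binder.

1. Registering CameraService

1. After the phone boots, init processes init.rc, which starts the mediaserver service.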

service media /system/bin/mediaserver
    class main
    user root
####
#   google default
####
#   user media
####
    group audio camera inet net_bt net_bt_admin net_bw_acct drmrpc mediadrm media sdcard_r system net_bt_stack
####
#   google default
####
#   group audio camera inet net_bt net_bt_admin net_bw_acct drmrpc mediadrm
####
    ioprio rt 4

2. The main function of mediaserver is in frameworks/av/media/mediaserver/main_mediaserver.cpp.

CameraService is registered in the main function of main_mediaserver.cpp:

int main(int argc __unused, char** argv)
{
    signal(SIGPIPE, SIG_IGN);
    char value[PROPERTY_VALUE_MAX];
    bool doLog = (property_get("ro.test_harness", value, "0") > 0) && (atoi(value) == 1);
    pid_t childPid;
    // ......
    sp<ProcessState> proc(ProcessState::self());
    sp<IServiceManager> sm = defaultServiceManager();
    ALOGI("ServiceManager: %p", sm.get());
    AudioFlinger::instantiate();
    MediaPlayerService::instantiate();
#ifdef MTK_AOSP_ENHANCEMENT
    MemoryDumper::instantiate();
#endif
    CameraService::instantiate();
    // ......

3. instantiate() is implemented in CameraService's base class, BinderService:

namespace android {
template<typename SERVICE>
class BinderService
{
public:
    static status_t publish(bool allowIsolated = false) {
        sp<IServiceManager> sm(defaultServiceManager());
        return sm->addService(
                String16(SERVICE::getServiceName()),
                new SERVICE(), allowIsolated);
    }
    static void publishAndJoinThreadPool(bool allowIsolated = false) {
        publish(allowIsolated);
        joinThreadPool();
    }
    static void instantiate() { publish(); }
    static status_t shutdown() { return NO_ERROR; }
private:
    static void joinThreadPool() {
        sp<ProcessState> ps(ProcessState::self());
        ps->startThreadPool();
        ps->giveThreadPoolName();
        IPCThreadState::self()->joinThreadPool();
    }
};
}; // namespace android

The publish() function is where CameraService gets registered. SERVICE is a template parameter; since the service being registered here is CameraService, we can substitute CameraService for it:

return sm->addService(String16(CameraService::getServiceName()), new CameraService());

With that, the camera service is registered with ServiceManager and is available to clients at any time.
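The registration pattern above can be sketched without any Android dependencies. This is only a toy model: ToyServiceManager, ToyService, ToyBinderService and ToyCameraService are hypothetical names invented for illustration, and the registry is a plain std::map rather than a Binder service. The name "media.camera" matches what CameraService::getServiceName() returns in AOSP.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical base type for anything that can be registered.
struct ToyService {
    virtual ~ToyService() = default;
};

// Hypothetical stand-in for ServiceManager: a name -> service registry.
class ToyServiceManager {
public:
    static ToyServiceManager& instance() {
        static ToyServiceManager sm;
        return sm;
    }
    // Plays the role of addService(): returns false if the name is already taken.
    bool addService(const std::string& name, std::shared_ptr<ToyService> service) {
        return mServices.emplace(name, std::move(service)).second;
    }
    // Plays the role of getService(): nullptr when nothing is registered.
    std::shared_ptr<ToyService> getService(const std::string& name) const {
        auto it = mServices.find(name);
        return it == mServices.end() ? nullptr : it->second;
    }
private:
    std::map<std::string, std::shared_ptr<ToyService>> mServices;
};

// Same shape as BinderService<SERVICE>: the template supplies publish() and
// instantiate(); the concrete service only supplies its name.
template <typename SERVICE>
class ToyBinderService {
public:
    static bool publish() {
        return ToyServiceManager::instance().addService(
                SERVICE::getServiceName(), std::make_shared<SERVICE>());
    }
    static void instantiate() { publish(); }
};

class ToyCameraService : public ToyService,
                         public ToyBinderService<ToyCameraService> {
public:
    static const char* getServiceName() { return "media.camera"; }
};
```

Calling ToyCameraService::instantiate() once makes the service discoverable by name, which is the same contract CameraService::instantiate() fulfils in mediaserver.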

2. How the client connects to the server and opens the camera module

We start from Camera.open() and work down into the framework. The call goes to the open method of the Camera class in frameworks/base/core/java/android/hardware/Camera.java.

public static Camera open() {
    // ......
    return new Camera(cameraId);
    // ......
}

This calls the Camera constructor, which in turn calls cameraInitVersion:

private int cameraInitVersion(int cameraId, int halVersion) {
    // ......
    return native_setup(new WeakReference<Camera>(this), cameraId, halVersion, packageName);
}

From there the call enters the JNI layer, android_hardware_Camera.cpp:

// connect to camera service
static jint android_hardware_Camera_native_setup(JNIEnv *env, jobject thiz,
    jobject weak_this, jint cameraId, jint halVersion, jstring clientPackageName)
{
    // ......
    camera = Camera::connect(cameraId, clientName, Camera::USE_CALLING_UID);
    // ......
    sp<JNICameraContext> context = new JNICameraContext(env, weak_this, clazz, camera);
    // ......
    camera->setListener(context);
    // ......
}

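Inside the JNI function we finally meet the client side of the Camera client/server architecture: the native Camera object returned by Camera::connect. Its connect function sends a connection request to the server. JNICameraContext is a listener class that handles the data and messages delivered by the native Camera callbacks.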

sp<Camera> Camera::connect(int cameraId, const String16& clientPackageName,
        int clientUid)
{
    return CameraBaseT::connect(cameraId, clientPackageName, clientUid);
}

template <typename TCam, typename TCamTraits>
sp<TCam> CameraBase<TCam, TCamTraits>::connect(int cameraId,
                                               const String16& clientPackageName,
                                               int clientUid)
{
    ALOGV("%s: connect", __FUNCTION__);
    sp<TCam> c = new TCam(cameraId);
    sp<TCamCallbacks> cl = c;
    status_t status = NO_ERROR;
    const sp<ICameraService>& cs = getCameraService();
    if (cs != 0) {
        TCamConnectService fnConnectService = TCamTraits::fnConnectService;
        status = (cs.get()->*fnConnectService)(cl, cameraId, clientPackageName, clientUid,
                                             /*out*/ c->mCamera);
    }
    if (status == OK && c->mCamera != 0) {
        c->mCamera->asBinder()->linkToDeath(c);
        c->mStatus = NO_ERROR;
    } else {
        ALOGW("An error occurred while connecting to camera: %d", cameraId);
        c.clear();
    }
    return c;
}

// establish binder interface to camera service
template <typename TCam, typename TCamTraits>
const sp<ICameraService>& CameraBase<TCam, TCamTraits>::getCameraService()
{
    Mutex::Autolock _l(gLock);
    if (gCameraService.get() == 0) {
        sp<IServiceManager> sm = defaultServiceManager();
        sp<IBinder> binder;
        do {
            binder = sm->getService(String16(kCameraServiceName));
            if (binder != 0) {
                break;
            }
            ALOGW("CameraService not published, waiting...");
            usleep(kCameraServicePollDelay);
        } while(true);
        if (gDeathNotifier == NULL) {
            gDeathNotifier = new DeathNotifier();
        }
        binder->linkToDeath(gDeathNotifier);
        gCameraService = interface_cast<ICameraService>(binder);
    }
    ALOGE_IF(gCameraService == 0, "no CameraService!?");
    return gCameraService;
}

At this point the client finally holds a handle to CameraService: an ICameraService interface obtained from ServiceManager through Binder.

Next, what is fnConnectService?

Camera.cpp contains:

CameraTraits<Camera>::TCamConnectService CameraTraits<Camera>::fnConnectService = &ICameraService::connect;
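The traits-plus-member-pointer trick used by CameraBase::connect can be tried in isolation. The sketch below is an assumption-laden miniature: ToyCameraServiceApi, ToyCameraTraits and connectVia are hypothetical names, and the connect signature is simplified to two parameters. What it does keep is the mechanism: the generic code never names the method, it only dereferences the pointer stored in the traits.

```cpp
#include <cassert>
#include <string>

// Hypothetical service interface standing in for ICameraService.
struct ToyCameraServiceApi {
    int lastCameraId = -1;
    // Same role as ICameraService::connect(): returns 0 on success.
    int connect(int cameraId, const std::string& packageName) {
        if (packageName.empty()) return -1;
        lastCameraId = cameraId;
        return 0;
    }
};

// Traits struct: names the member-function type and which member to call.
struct ToyCameraTraits {
    typedef int (ToyCameraServiceApi::*TCamConnectService)(int, const std::string&);
    static TCamConnectService fnConnectService;
};

// Counterpart of the definition in Camera.cpp: bind the pointer to connect().
ToyCameraTraits::TCamConnectService ToyCameraTraits::fnConnectService =
        &ToyCameraServiceApi::connect;

// Generic connect: it only knows the traits, not the concrete method name.
template <typename TCamTraits>
int connectVia(ToyCameraServiceApi* cs, int cameraId, const std::string& pkg) {
    typename TCamTraits::TCamConnectService fn = TCamTraits::fnConnectService;
    return (cs->*fn)(cameraId, pkg);  // same call syntax as (cs.get()->*fnConnectService)(...)
}
```

A different traits type could point fnConnectService at another method with the same signature, and the generic connect code would stay untouched.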

fnConnectService therefore points to ICameraService::connect, so the call ends up in CameraService::connect. From here on we are in the server-side flow:

status_t CameraService::connect(
        const sp<ICameraClient>& cameraClient,
        int cameraId,
        const String16& clientPackageName,
        int clientUid,
        /*out*/
        sp<ICamera>& device) {
    // ......
    status_t status = validateConnect(cameraId, /*inout*/clientUid);
    // ......
    if (!canConnectUnsafe(cameraId, clientPackageName,
                          cameraClient->asBinder(),
                          /*out*/clientTmp)) {
        return -EBUSY;
    }
    status = connectHelperLocked(/*out*/client,
                                 cameraClient,
                                 cameraId,
                                 clientPackageName,
                                 clientUid,
                                 callingPid);
    return OK;
}

status_t CameraService::connectHelperLocked(
        /*out*/
        sp<Client>& client,
        /*in*/
        const sp<ICameraClient>& cameraClient,
        int cameraId,
        const String16& clientPackageName,
        int clientUid,
        int callingPid,
        int halVersion,
        bool legacyMode) {
    // ......
    client = new CameraClient(this, cameraClient,
            clientPackageName, cameraId,
            facing, callingPid, clientUid, getpid(), legacyMode);
    // ......
    status_t status = connectFinishUnsafe(client, client->getRemote());
    // ......
}

In connectHelperLocked, CameraService creates a CameraClient, which is one of its own inner classes. (Note that this client is CameraService's internal CameraClient, not the Camera client on the application side.)

In connectFinishUnsafe:

status_t status = client->initialize(mModule);

Now look at the initialize function of the CameraClient class:

status_t CameraClient::initialize(camera_module_t *module) {
    int callingPid = getCallingPid();
    status_t res;
    mHardware = new CameraHardwareInterface(camera_device_name);
    res = mHardware->initialize(&module->common);
}

CameraClient initialization therefore comes down to two steps: instantiate the camera HAL interface class CameraHardwareInterface, then call its initialize() to enter the HAL layer and open the underlying camera driver:

status_t initialize(hw_module_t *module)
{
    ALOGI("Opening camera %s", mName.string());
    camera_module_t *cameraModule = reinterpret_cast<camera_module_t *>(module);
    camera_info info;
    status_t res = cameraModule->get_camera_info(atoi(mName.string()), &info);
    if (res != OK) return res;
    int rc = OK;
    if (module->module_api_version >= CAMERA_MODULE_API_VERSION_2_3 &&
        info.device_version > CAMERA_DEVICE_API_VERSION_1_0) {
        // Open higher version camera device as HAL1.0 device.
        rc = cameraModule->open_legacy(module, mName.string(),
                                       CAMERA_DEVICE_API_VERSION_1_0,
                                       (hw_device_t **)&mDevice);
    } else {
        rc = CameraService::filterOpenErrorCode(module->methods->open(
            module, mName.string(), (hw_device_t **)&mDevice));
    }
    if (rc != OK) {
        ALOGE("Could not open camera %s: %d", mName.string(), rc);
        return rc;
    }
    initHalPreviewWindow();
    return rc;
}
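The branch in initialize() that decides between open_legacy and the normal open can be isolated as a small pure function. This is a sketch under assumptions: makeVersion, kModuleApi2_3, kDeviceApi1_0, OpenPath and chooseOpenPath are illustrative names, and the version encoding used here (major in the high byte, minor in the low byte) is only meant to give ordered values; the real constants come from the HAL headers.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative version encoding; the real macros live in the HAL headers.
constexpr uint32_t makeVersion(uint32_t major, uint32_t minor) {
    return ((major & 0xff) << 8) | (minor & 0xff);
}

constexpr uint32_t kModuleApi2_3 = makeVersion(2, 3);  // stands in for CAMERA_MODULE_API_VERSION_2_3
constexpr uint32_t kDeviceApi1_0 = makeVersion(1, 0);  // stands in for CAMERA_DEVICE_API_VERSION_1_0

enum class OpenPath { Legacy, Normal };

// Mirrors the condition in initialize(): a newer module exposing a newer device
// is opened through open_legacy() so that it behaves like a HAL 1.0 device.
OpenPath chooseOpenPath(uint32_t moduleApiVersion, uint32_t deviceVersion) {
    if (moduleApiVersion >= kModuleApi2_3 && deviceVersion > kDeviceApi1_0) {
        return OpenPath::Legacy;
    }
    return OpenPath::Normal;
}
```

Both conditions must hold for the legacy path: an old module has no open_legacy entry to call, and a device that is already HAL 1.0 does not need it.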

In mHardware->initialize(&module->common), the module passed in is CameraService's mModule, a camera_module_t struct. How is it initialized? CameraService has this function:

void CameraService::onFirstRef()
{
    BnCameraService::onFirstRef();
    if (hw_get_module(CAMERA_HARDWARE_MODULE_ID,
                (const hw_module_t **)&mModule) < 0) {
        LOGE("Could not load camera HAL module");
        mNumberOfCameras = 0;
    }
}

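Anyone familiar with the HAL layer knows that hw_get_module() fetches a module's HAL stub. Here CAMERA_HARDWARE_MODULE_ID is used to obtain the camera HAL stub, which is stored in mModule; from then on the camera module is controlled through mModule. So when is onFirstRef() called?

onFirstRef() comes from the base class RefBase. It is called when a strong pointer (sp) takes the first strong reference to an object, that is, when the object is first wrapped in an sp. So when does that happen for the camera? Look at what happens

when the client initiates a connection: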

sp<Camera> Camera::connect(int cameraId)
{
    LOGV("connect");
    sp<Camera> c = new Camera();
    const sp<ICameraService>& cs = getCameraService();
}

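Here the client obtains CameraService wrapped in an sp. Note that on the client side this sp only wraps a Binder proxy; the CameraService object itself is first wrapped in an sp inside mediaserver, when publish() passes new CameraService() to addService(). Taking that first strong reference is what triggers CameraService's onFirstRef().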
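To make the onFirstRef() timing concrete, here is a toy reference-counting sketch. ToyRefBase, ToySp and RefCountedCameraService are hypothetical stand-ins for RefBase, sp and CameraService; the real classes also handle weak references and thread safety, which are left out here on purpose.

```cpp
#include <cassert>

// Toy reference-counted base: onFirstRef() fires when the strong count goes 0 -> 1.
class ToyRefBase {
public:
    virtual ~ToyRefBase() = default;
    void incStrong() {
        if (mStrong++ == 0) onFirstRef();
    }
    void decStrong() {
        if (--mStrong == 0) delete this;
    }
protected:
    virtual void onFirstRef() {}
private:
    int mStrong = 0;
};

// Toy strong pointer playing the role of sp<T>.
template <typename T>
class ToySp {
public:
    ToySp(T* p) : mPtr(p) { if (mPtr) mPtr->incStrong(); }
    ToySp(const ToySp& o) : mPtr(o.mPtr) { if (mPtr) mPtr->incStrong(); }
    ToySp& operator=(const ToySp&) = delete;
    ~ToySp() { if (mPtr) mPtr->decStrong(); }
    T* operator->() const { return mPtr; }
private:
    T* mPtr;
};

// Stand-in for CameraService: loads its "HAL module" in onFirstRef().
class RefCountedCameraService : public ToyRefBase {
public:
    bool halLoaded = false;
    int firstRefCalls = 0;
protected:
    void onFirstRef() override {
        halLoaded = true;   // the real code calls hw_get_module() here
        ++firstRefCalls;
    }
};
```

Constructing the object with new does nothing special; the hook runs only when the first ToySp takes ownership, and later copies of the pointer do not run it again.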

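CameraService::connect() instantiates a CameraClient and opens the driver. Once the CameraClient is returned, the connection between client and server is established and Camera initialization is complete.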

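Android Camera internals: camera HAL data structures and classes

The camera HAL layer has a great many data structures, and reading the code often means hunting for their definitions. To make this easier, here is a summary of the main structures and where they live:

1. hardware/libhardware/include/hardware/camera_common.h: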

1.1 camera_info_t : camera_info

typedef struct camera_info {
    int facing;
    int orientation;
    uint32_t device_version;
    const camera_metadata_t *static_camera_characteristics;
    int resource_cost;
    char** conflicting_devices;
    size_t conflicting_devices_length;
} camera_info_t;

1.2 camera_device_status_t : camera_device_status

typedef enum camera_device_status {
    CAMERA_DEVICE_STATUS_NOT_PRESENT = 0,
    CAMERA_DEVICE_STATUS_PRESENT = 1,
    CAMERA_DEVICE_STATUS_ENUMERATING = 2,
} camera_device_status_t;

1.3 torch_mode_status_t : torch_mode_status

typedef enum torch_mode_status {
    TORCH_MODE_STATUS_NOT_AVAILABLE = 0,
    TORCH_MODE_STATUS_AVAILABLE_OFF = 1,
    TORCH_MODE_STATUS_AVAILABLE_ON = 2,
} torch_mode_status_t;

1.4 camera_module_callbacks_t : camera_module_callbacks

typedef struct camera_module_callbacks {
    void (*camera_device_status_change)(const struct camera_module_callbacks*,
            int camera_id,
            int new_status);
    void (*torch_mode_status_change)(const struct camera_module_callbacks*,
            const char* camera_id,
            int new_status);
} camera_module_callbacks_t;

1.5 camera_module_t : camera_module

typedef struct camera_module {
    hw_module_t common;
    int (*get_number_of_cameras)(void);
    int (*get_camera_info)(int camera_id, struct camera_info *info);
    int (*set_callbacks)(const camera_module_callbacks_t *callbacks);
    void (*get_vendor_tag_ops)(vendor_tag_ops_t* ops);
    int (*open_legacy)(const struct hw_module_t* module, const char* id,
            uint32_t halVersion, struct hw_device_t** device);
    int (*set_torch_mode)(const char* camera_id, bool enabled);
    int (*init)();
    void* reserved[5];
} camera_module_t;

2. hardware/libhardware/include/hardware/hardware.h:

2.1 hw_module_t : hw_module_t

typedef struct hw_module_t {
    /** tag must be initialized to HARDWARE_MODULE_TAG */
    uint32_t tag;
    uint16_t module_api_version;
#define version_major module_api_version
    uint16_t hal_api_version;
#define version_minor hal_api_version
    /** Identifier of module */
    const char *id;
    /** Name of this module */
    const char *name;
    /** Author/owner/implementor of the module */
    const char *author;
    /** Modules methods */
    struct hw_module_methods_t* methods;
    /** module's dso */
    void* dso;
#ifdef __LP64__
    uint64_t reserved[32-7];
#else
    /** padding to 128 bytes, reserved for future use */
    uint32_t reserved[32-7];
#endif
} hw_module_t;

2.2 hw_module_methods_t : hw_module_methods_t

The hw_module_methods_t struct looks like this:

typedef struct hw_module_methods_t {
    /** Open a specific device */
    int (*open)(const struct hw_module_t* module, const char* id,
            struct hw_device_t** device);
} hw_module_methods_t;

This struct holds a single function pointer. Where is that pointer assigned?

It is assigned at line 167 of hardware/libhardware/modules/camera/3_0/CameraHAL.cpp, where it is set to the open_dev function.

162 static int open_dev(const hw_module_t* mod, const char* name, hw_device_t** dev)
163 {
164     return gCameraHAL.open(mod, name, dev);
165 }
166
167 static hw_module_methods_t gCameraModuleMethods = {
168     .open = open_dev
169 };
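The way a caller reaches open_dev through module->methods->open can be reproduced with a few plain structs. The types below (ToyDevice, ToyModule, ToyModuleMethods) and the helper openThroughModule are simplified, hypothetical versions of the hardware.h structures, written only to show the indirection; the local open_dev is a toy, not the CameraHAL.cpp function.

```cpp
#include <cassert>
#include <cstring>

// Simplified, hypothetical versions of the hardware.h structs.
struct ToyDevice { int id; };
struct ToyModule;
struct ToyModuleMethods {
    int (*open)(const ToyModule* module, const char* id, ToyDevice** device);
};
struct ToyModule {
    const char* name;
    ToyModuleMethods* methods;
};

// The implementation the HAL wants callers to reach, in the role of open_dev().
static int open_dev(const ToyModule* /*module*/, const char* id, ToyDevice** device) {
    if (id == nullptr || device == nullptr) return -1;
    static ToyDevice sDevice;  // a single device is enough for a sketch
    sDevice.id = (std::strcmp(id, "0") == 0) ? 0 : 1;
    *device = &sDevice;
    return 0;
}

// The method table and the module that points at it.
static ToyModuleMethods gMethods = { open_dev };
static ToyModule gModule = { "toy camera module", &gMethods };

// What a framework-side caller does: it only ever sees module->methods->open.
int openThroughModule(const ToyModule* module, const char* id, ToyDevice** device) {
    return module->methods->open(module, id, device);
}
```

The caller never links against open_dev directly; swapping the pointer in the method table is all a different HAL implementation needs to do.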

2.3 hw_device_t : hw_device_t

typedef struct hw_device_t {
    /** tag must be initialized to HARDWARE_DEVICE_TAG */
    uint32_t tag;
    uint32_t version;
    /** reference to the module this device belongs to */
    struct hw_module_t* module;
    /** padding reserved for future use */
#ifdef __LP64__
    uint64_t reserved[12];
#else
    uint32_t reserved[12];
#endif
    /** Close this device */
    int (*close)(struct hw_device_t* device);
} hw_device_t;

3. hardware/libhardware/include/hardware/camera3.h

3.1 camera3_device_t : camera3_device

typedef struct camera3_device {
    hw_device_t common;
    camera3_device_ops_t *ops;
    void *priv;
} camera3_device_t;

3.2 camera3_device_ops_t : camera3_device_ops

typedef struct camera3_device_ops {
    int (*initialize)(const struct camera3_device *,
            const camera3_callback_ops_t *callback_ops);
    int (*configure_streams)(const struct camera3_device *,
            camera3_stream_configuration_t *stream_list);
    int (*register_stream_buffers)(const struct camera3_device *,
            const camera3_stream_buffer_set_t *buffer_set);
    const camera_metadata_t* (*construct_default_request_settings)(
            const struct camera3_device *,
            int type);
    int (*process_capture_request)(const struct camera3_device *,
            camera3_capture_request_t *request);
    void (*get_metadata_vendor_tag_ops)(const struct camera3_device*,
            vendor_tag_query_ops_t* ops);
    void (*dump)(const struct camera3_device *, int fd);
    int (*flush)(const struct camera3_device *);
    /* reserved for future use */
    void *reserved[8];
} camera3_device_ops_t;

The camera3_device_ops_t function pointers are mapped in hardware/libhardware/modules/camera/3_0/Camera.cpp:

const camera3_device_ops_t Camera::sOps = {
    .initialize = default_camera_hal::initialize,
    .configure_streams = default_camera_hal::configure_streams,
    .register_stream_buffers = default_camera_hal::register_stream_buffers,
    .construct_default_request_settings
        = default_camera_hal::construct_default_request_settings,
    .process_capture_request = default_camera_hal::process_capture_request,
    .get_metadata_vendor_tag_ops = NULL,
    .dump = default_camera_hal::dump,
    .flush = default_camera_hal::flush,
    .reserved = {0},
};

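The mapping above is only the default provided by the abstraction layer; in practice each chipset vendor overrides the function pointer mapping.

Taking the Qualcomm 660 chipset as an example, the actual mapping lives in hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp. Other chipsets put it elsewhere, but the differences are small, and the set of function pointers is the same, since this is the common calling interface defined by the Android HAL layer.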

camera3_device_ops_t QCamera3HardwareInterface::mCameraOps = {
    .initialize = QCamera3HardwareInterface::initialize,
    .configure_streams = QCamera3HardwareInterface::configure_streams,
    .register_stream_buffers = NULL,
    .construct_default_request_settings = QCamera3HardwareInterface::construct_default_request_settings,
    .process_capture_request = QCamera3HardwareInterface::process_capture_request,
    .get_metadata_vendor_tag_ops = NULL,
    .dump = QCamera3HardwareInterface::dump,
    .flush = QCamera3HardwareInterface::flush,
    .reserved = {0},
};

This link is established in the constructor in hardware/qcom/camera/QCamera2/HAL3/QCamera3HWI.cpp:

mCameraDevice.ops = &mCameraOps;
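The ops-table wiring can be modelled in a few lines. In this sketch ToyVendorHal, ToyCamera3Device and ToyDeviceOps are hypothetical and the table is cut down to two entries. It also assumes the common pattern of storing the object pointer in priv so that the static entry points can find their instance; treat that as an assumption of the sketch, not as a quote from the vendor code.

```cpp
#include <cassert>

struct ToyCamera3Device;

// Cut-down ops table: two of the function pointers from camera3_device_ops.
struct ToyDeviceOps {
    int (*initialize)(const ToyCamera3Device*);
    int (*flush)(const ToyCamera3Device*);
};

// Cut-down device struct: the ops table plus an opaque pointer for the HAL.
struct ToyCamera3Device {
    ToyDeviceOps* ops;
    void* priv;
};

class ToyVendorHal {
public:
    ToyVendorHal() {
        mDevice.ops = &sOps;   // same wiring as mCameraDevice.ops = &mCameraOps
        mDevice.priv = this;   // lets the static entry points find this object
    }
    ToyCamera3Device* device() { return &mDevice; }
    bool initialized = false;
    int flushCount = 0;
private:
    // Static trampolines: C-style entry points that forward to the instance.
    static int initialize(const ToyCamera3Device* dev) {
        ToyVendorHal* self = static_cast<ToyVendorHal*>(dev->priv);
        self->initialized = true;
        return 0;
    }
    static int flush(const ToyCamera3Device* dev) {
        ToyVendorHal* self = static_cast<ToyVendorHal*>(dev->priv);
        if (!self->initialized) return -1;
        ++self->flushCount;
        return 0;
    }
    static ToyDeviceOps sOps;
    ToyCamera3Device mDevice;
};

// One shared table for all instances, filled with the static entry points.
ToyDeviceOps ToyVendorHal::sOps = { ToyVendorHal::initialize, ToyVendorHal::flush };
```

A caller that only holds a ToyCamera3Device* invokes dev->ops->initialize(dev) and never needs to know which class implements it.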

3.3 camera3_callback_ops_t : camera3_callback_ops

typedef struct camera3_callback_ops {
    void (*process_capture_result)(const struct camera3_callback_ops *,
            const camera3_capture_result_t *result);
    void (*notify)(const struct camera3_callback_ops *,
            const camera3_notify_msg_t *msg);
} camera3_callback_ops_t;

camera3_callback_ops_t is the callback path from the camera provider to the camera service. It corresponds directly to ICameraDeviceCallback.h: calling the methods of that interface makes an IPC call into the camera service process.

3.4 camera3_capture_result_t : camera3_capture_result

typedef struct camera3_capture_result {
    uint32_t frame_number;
    const camera_metadata_t *result;
    uint32_t num_output_buffers;
    const camera3_stream_buffer_t *output_buffers;
    const camera3_stream_buffer_t *input_buffer;
    uint32_t partial_result;
    uint32_t num_physcam_metadata;
    const char **physcam_ids;
    const camera_metadata_t **physcam_metadata;
} camera3_capture_result_t;

3.5 camera3_capture_request_t : camera3_capture_request

typedef struct camera3_capture_request {
    uint32_t frame_number;
    const camera_metadata_t *settings;
    camera3_stream_buffer_t *input_buffer;
    uint32_t num_output_buffers;
    const camera3_stream_buffer_t *output_buffers;
    uint32_t num_physcam_settings;
    const char **physcam_id;
    const camera_metadata_t **physcam_settings;
} camera3_capture_request_t;

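For camera preview, each capture request is sent to the HAL device through the camera service layer's processCaptureRequest() function, either to capture an image or to reprocess an image buffer.

The request contains the settings for this capture and the set of output buffers that the resulting image data is written to. It may optionally contain an input buffer, in which case the request reprocesses that input buffer instead of capturing a new image from the camera sensor. The capture is identified by frame_number.

In response, the camera HAL device must send a camera3_capture_result back to the framework asynchronously, using process_capture_result().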
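The request/result handshake described above can be sketched as follows. Everything here is a simplified assumption: ToyHalDevice, ToyFramework, ToyCaptureRequest and ToyCaptureResult are hypothetical, there is no real threading (completeOne() stands in for the HAL finishing a frame later), and metadata and buffers are reduced to a buffer count. The point is the asynchronous pairing of requests and results by frame number.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <queue>
#include <utility>

struct ToyCaptureRequest { uint32_t frameNumber; int numOutputBuffers; };
struct ToyCaptureResult  { uint32_t frameNumber; int numOutputBuffers; };

class ToyHalDevice {
public:
    explicit ToyHalDevice(std::function<void(const ToyCaptureResult&)> cb)
        : mCallback(std::move(cb)) {}
    // In the role of process_capture_request(): only queues the work and returns.
    int processCaptureRequest(const ToyCaptureRequest& req) {
        if (req.numOutputBuffers <= 0) return -1;
        mPending.push(req);
        return 0;
    }
    // Stands in for the HAL finishing one frame some time later.
    bool completeOne() {
        if (mPending.empty()) return false;
        ToyCaptureRequest req = mPending.front();
        mPending.pop();
        mCallback(ToyCaptureResult{req.frameNumber, req.numOutputBuffers});
        return true;
    }
private:
    std::function<void(const ToyCaptureResult&)> mCallback;
    std::queue<ToyCaptureRequest> mPending;
};

// Framework side: remembers in-flight frames and matches results by frame number.
class ToyFramework {
public:
    ToyFramework()
        : mHal([this](const ToyCaptureResult& r) {
              mInFlight.erase(r.frameNumber);
              ++mCompleted;
          }) {}
    bool submit(uint32_t frameNumber, int buffers) {
        if (mHal.processCaptureRequest({frameNumber, buffers}) != 0) return false;
        mInFlight[frameNumber] = buffers;
        return true;
    }
    bool pump() { return mHal.completeOne(); }
    std::size_t inFlight() const { return mInFlight.size(); }
    int completed() const { return mCompleted; }
private:
    std::map<uint32_t, int> mInFlight;
    int mCompleted = 0;
    ToyHalDevice mHal;
};
```

submit() returns as soon as the request is queued; the in-flight map only shrinks when the result callback arrives, which is why frame_number has to be carried in both structures.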

3.6 camera3_request_template_t : camera3_request_template

typedef enum camera3_request_template {
    /**
     * Standard camera preview operation with 3A on auto.
     */
    CAMERA3_TEMPLATE_PREVIEW = 1,
    /**
     * Standard camera high-quality still capture with 3A and flash on auto.
     */
    CAMERA3_TEMPLATE_STILL_CAPTURE = 2,
    /**
     * Standard video recording plus preview with 3A on auto, torch off.
     */
    CAMERA3_TEMPLATE_VIDEO_RECORD = 3,
    /**
     * High-quality still capture while recording video. Application will
     * include preview, video record, and full-resolution YUV or JPEG streams in
     * request. Must not cause stuttering on video stream. 3A on auto.
     */
    CAMERA3_TEMPLATE_VIDEO_SNAPSHOT = 4,
    /**
     * Zero-shutter-lag mode. Application will request preview and
     * full-resolution data for each frame, and reprocess it to JPEG when a
     * still image is requested by user. Settings should provide highest-quality
     * full-resolution images without compromising preview frame rate. 3A on
     * auto.
     */
    CAMERA3_TEMPLATE_ZERO_SHUTTER_LAG = 5,
    /**
     * A basic template for direct application control of capture
     * parameters. All automatic control is disabled (auto-exposure, auto-white
     * balance, auto-focus), and post-processing parameters are set to preview
     * quality. The manual capture parameters (exposure, sensitivity, etc.)
     * are set to reasonable defaults, but should be overridden by the
     * application depending on the intended use case.
     */
    CAMERA3_TEMPLATE_MANUAL = 6,
    /* Total number of templates */
    CAMERA3_TEMPLATE_COUNT,
    /**
     * First value for vendor-defined request templates
     */
    CAMERA3_VENDOR_TEMPLATE_START = 0x40000000
} camera3_request_template_t;

3.7 camera3_notify_msg_t : camera3_notify_msg

typedef struct camera3_notify_msg {
    int type;
    union {
        camera3_error_msg_t error;
        camera3_shutter_msg_t shutter;
        uint8_t generic[32];
    } message;
} camera3_notify_msg_t;

3.8 camera3_shutter_msg_t : camera3_shutter_msg

typedef struct camera3_shutter_msg {
    uint32_t frame_number;
    uint64_t timestamp;
} camera3_shutter_msg_t;

3.9 camera3_error_msg_t : camera3_error_msg

typedef struct camera3_error_msg {
    uint32_t frame_number;
    camera3_stream_t *error_stream;
    int error_code;
} camera3_error_msg_t;

3.10 camera3_error_msg_code_t : camera3_error_msg_code

typedef enum camera3_error_msg_code {
    CAMERA3_MSG_ERROR_DEVICE = 1,
    CAMERA3_MSG_ERROR_REQUEST = 2,
    CAMERA3_MSG_ERROR_RESULT = 3,
    CAMERA3_MSG_ERROR_BUFFER = 4,
    CAMERA3_MSG_NUM_ERRORS
} camera3_error_msg_code_t;

3.11 camera3_msg_type_t : camera3_msg_type

typedef enum camera3_msg_type {
    CAMERA3_MSG_ERROR = 1,
    CAMERA3_MSG_SHUTTER = 2,
    CAMERA3_NUM_MESSAGES
} camera3_msg_type_t;

3.12 camera3_jpeg_blob_t : camera3_jpeg_blob

typedef struct camera3_jpeg_blob {
    uint16_t jpeg_blob_id;
    uint32_t jpeg_size;
} camera3_jpeg_blob_t;

3.13 camera3_stream_buffer_set_t : camera3_stream_buffer_set

typedef struct camera3_stream_buffer_set {
    camera3_stream_t *stream;
    uint32_t num_buffers;
    buffer_handle_t **buffers;
} camera3_stream_buffer_set_t;

3.14 camera3_stream_buffer_t : camera3_stream_buffer

typedef struct camera3_stream_buffer {
    camera3_stream_t *stream;
    buffer_handle_t *buffer;
    int status;
    int acquire_fence;
    int release_fence;
} camera3_stream_buffer_t;

3.15 camera3_buffer_status_t : camera3_buffer_status

typedef enum camera3_buffer_status {
    CAMERA3_BUFFER_STATUS_OK = 0,
    CAMERA3_BUFFER_STATUS_ERROR = 1
} camera3_buffer_status_t;

3.16 camera3_stream_configuration_t : camera3_stream_configuration

typedef struct camera3_stream_configuration {
    uint32_t num_streams;
    camera3_stream_t **streams;
    uint32_t operation_mode;
    const camera_metadata_t *session_parameters;
} camera3_stream_configuration_t;

3.17 camera3_stream_t : camera3_stream

typedef struct camera3_stream {
    int stream_type;
    uint32_t width;
    uint32_t height;
    int format;
    uint32_t usage;
    uint32_t max_buffers;
    void *priv;
    android_dataspace_t data_space;
    int rotation;
    const char* physical_camera_id;
    void *reserved[6];
} camera3_stream_t;

3.18 camera3_stream_configuration_mode_t : camera3_stream_configuration_mode

typedef enum camera3_stream_configuration_mode {
    CAMERA3_STREAM_CONFIGURATION_NORMAL_MODE = 0,
    CAMERA3_STREAM_CONFIGURATION_CONSTRAINED_HIGH_SPEED_MODE = 1,
    CAMERA3_VENDOR_STREAM_CONFIGURATION_MODE_START = 0x8000
} camera3_stream_configuration_mode_t;

3.19 camera3_stream_rotation_t : camera3_stream_rotation

typedef enum camera3_stream_rotation {
    /* No rotation */
    CAMERA3_STREAM_ROTATION_0 = 0,
    /* Rotate by 90 degree counterclockwise */
    CAMERA3_STREAM_ROTATION_90 = 1,
    /* Rotate by 180 degree counterclockwise */
    CAMERA3_STREAM_ROTATION_180 = 2,
    /* Rotate by 270 degree counterclockwise */
    CAMERA3_STREAM_ROTATION_270 = 3
} camera3_stream_rotation_t;

3.20 camera3_stream_type_t : camera3_stream_type

typedef enum camera3_stream_type {
    CAMERA3_STREAM_OUTPUT = 0,
    CAMERA3_STREAM_INPUT = 1,
    CAMERA3_STREAM_BIDIRECTIONAL = 2,
    CAMERA3_NUM_STREAM_TYPES
} camera3_stream_type_t;

To be continued...

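Android Camera internals: sessions between camera service and camera provider, and capture request flow

When the upper layer calls CameraManager.openCamera, it triggers a chain of calls in the lower layers. An earlier article covered the calls from camera framework to camera service, but that alone does not go deep enough. Here we look at what happens between camera service and camera provider during openCamera.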

status_t Camera3Device::initialize(sp<CameraProviderManager> manager, const String8& monitorTags) {
    sp<ICameraDeviceSession> session;
    status_t res = manager->openSession(mId.string(), this,
            /*out*/ &session);
    //......
    res = manager->getCameraCharacteristics(mId.string(), &mDeviceInfo);
    //......
    std::shared_ptr<RequestMetadataQueue> queue;
    auto requestQueueRet = session->getCaptureRequestMetadataQueue(
        [&queue](const auto& descriptor) {
            queue = std::make_shared<RequestMetadataQueue>(descriptor);
            if (!queue->isValid() || queue->availableToWrite() <= 0) {
                ALOGE("HAL returns empty request metadata fmq, not use it");
                queue = nullptr;
                // don't use the queue onwards.
            }
        });
    //......
    std::unique_ptr<ResultMetadataQueue>& resQueue = mResultMetadataQueue;
    auto resultQueueRet = session->getCaptureResultMetadataQueue(
        [&resQueue](const auto& descriptor) {
            resQueue = std::make_unique<ResultMetadataQueue>(descriptor);
            if (!resQueue->isValid() || resQueue->availableToWrite() <= 0) {
                ALOGE("HAL returns empty result metadata fmq, not use it");
                resQueue = nullptr;
                // Don't use the resQueue onwards.
            }
        });
    //......
    mInterface = new HalInterface(session, queue);
    std::string providerType;
    mVendorTagId = manager->getProviderTagIdLocked(mId.string());
    mTagMonitor.initialize(mVendorTagId);
    if (!monitorTags.isEmpty()) {
        mTagMonitor.parseTagsToMonitor(String8(monitorTags));
    }
    return initializeCommonLocked();
}

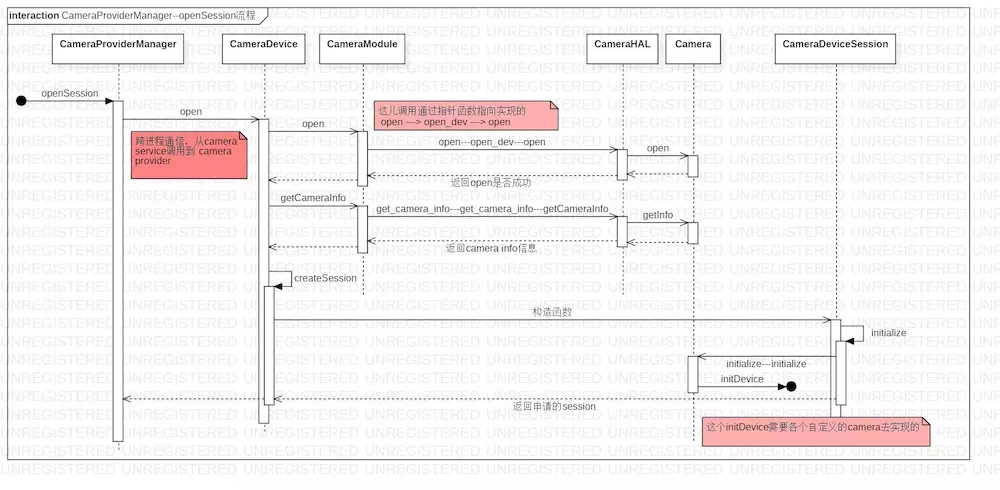

Above are the core steps that camera service runs during openCamera. The first one is manager->openSession(mId.string(), this, /*out*/ &session);

This article reconstructs the interaction between camera service and camera provider by walking through how openSession executes.

[Sequence diagram: CameraProviderManager openSession flow]

For clarity, here is where each file lives:

CameraProviderManager : frameworks/av/services/camera/libcameraservice/common/CameraProviderManager.cpp

CameraDevice : hardware/interfaces/camera/device/3.2/default/CameraDevice.cpp

CameraModule : hardware/interfaces/camera/common/1.0/default/CameraModule.cpp

CameraHAL : hardware/libhardware/modules/camera/3_0/CameraHAL.cpp

Camera : hardware/libhardware/modules/camera/3_0/Camera.cpp

CameraDeviceSession : hardware/interfaces/camera/device/3.2/default/CameraDeviceSession.cpp

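Some function pointer mappings are involved along the way; if they are unclear, see "Android Camera internals: camera HAL data structures and classes". The call flow itself is not repeated here; following the sequence diagram above is enough to understand it.

Some of the callback relationships are worth spelling out. Here is CameraProviderManager::openSession: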

status_t CameraProviderManager::openSession(const std::string &id,
        const sp<hardware::camera::device::V3_2::ICameraDeviceCallback>& callback,
        /*out*/
        sp<hardware::camera::device::V3_2::ICameraDeviceSession> *session) {
    std::lock_guard<std::mutex> lock(mInterfaceMutex);
    auto deviceInfo = findDeviceInfoLocked(id,
            /*minVersion*/ {3,0}, /*maxVersion*/ {4,0});
    if (deviceInfo == nullptr) return NAME_NOT_FOUND;
    auto *deviceInfo3 = static_cast<ProviderInfo::DeviceInfo3*>(deviceInfo);
    Status status;
    hardware::Return<void> ret;
    ret = deviceInfo3->mInterface->open(callback, [&status, &session]
            (Status s, const sp<device::V3_2::ICameraDeviceSession>& cameraSession) {
                status = s;
                if (status == Status::OK) {
                    *session = cameraSession;
                }
            });
    if (!ret.isOk()) {
        ALOGE("%s: Transaction error opening a session for camera device %s: %s",
                __FUNCTION__, id.c_str(), ret.description().c_str());
        return DEAD_OBJECT;
    }
    return mapToStatusT(status);
}

Look at the IPC call itself:

ret = deviceInfo3->mInterface->open(callback, [&status, &session]
        (Status s, const sp<device::V3_2::ICameraDeviceSession>& cameraSession) {
            status = s;
            if (status == Status::OK) {
                *session = cameraSession;
            }
        });

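Two arguments are passed: const sp<hardware::camera::device::V3_2::ICameraDeviceCallback>& callback, and open_cb _hidl_cb.

callback provides the callback path from the camera HAL layer to camera service.

open_cb _hidl_cb is the mechanism the hardware abstraction layer uses to return data across the IPC boundary. In this code two values come back: status, which says whether openSession succeeded, and session, the session object returned once the camera session has been created.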
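Returning several values through a callback, as open_cb does, is easy to imitate with std::function. The names below (ToyStatus, ToySession, ToyOpenCb, toyOpen, toyOpenSession) are hypothetical, and there is no IPC in this sketch; it only shows the control flow in which the callee reports status and session by invoking the caller's lambda exactly once.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>

enum class ToyStatus { OK, INTERNAL_ERROR };
struct ToySession { std::string cameraId; };

// Callback shape modelled on open_cb: the callee "returns" two values by calling it.
using ToyOpenCb = std::function<void(ToyStatus, const std::shared_ptr<ToySession>&)>;

// Callee side, in the role of CameraDevice::open(): invoke the callback exactly once.
void toyOpen(const std::string& cameraId, const ToyOpenCb& hidlCb) {
    if (cameraId.empty()) {
        hidlCb(ToyStatus::INTERNAL_ERROR, nullptr);
        return;
    }
    hidlCb(ToyStatus::OK, std::make_shared<ToySession>(ToySession{cameraId}));
}

// Caller side, in the role of CameraProviderManager::openSession().
ToyStatus toyOpenSession(const std::string& cameraId,
                         std::shared_ptr<ToySession>* session) {
    ToyStatus status = ToyStatus::INTERNAL_ERROR;
    toyOpen(cameraId, [&status, session](ToyStatus s,
                                         const std::shared_ptr<ToySession>& opened) {
        status = s;
        if (status == ToyStatus::OK) {
            *session = opened;  // only publish the session on success
        }
    });
    return status;
}
```

Because the lambda captures status by reference and session as a pointer, the caller can read both results as soon as toyOpen returns, just as openSession reads status after open() completes.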

The CameraDevice::open(...) function:

{
    session = createSession(
            device, info.static_camera_characteristics, callback);
    if (session == nullptr) {
        ALOGE("%s: camera device session allocation failed", __FUNCTION__);
        mLock.unlock();
        _hidl_cb(Status::INTERNAL_ERROR, nullptr);
        return Void();
    }
    if (session->isInitFailed()) {
        ALOGE("%s: camera device session init failed", __FUNCTION__);
        session = nullptr;
        mLock.unlock();
        _hidl_cb(Status::INTERNAL_ERROR, nullptr);
        return Void();
    }
    mSession = session;
    IF_ALOGV() {
        session->getInterface()->interfaceChain([](
            ::android::hardware::hidl_vec<::android::hardware::hidl_string> interfaceChain) {
                ALOGV("Session interface chain:");
                for (auto iface : interfaceChain) {
                    ALOGV("  %s", iface.c_str());
                }
            });
    }
    mLock.unlock();
}
_hidl_cb(status, session->getInterface());

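The last line, _hidl_cb(status, session->getInterface());, calls back into camera service once the session has been created.

Where does const sp<hardware::camera::device::V3_2::ICameraDeviceCallback>& callback end up? This matters a great deal: the upper camera layers depend heavily on callbacks from below, so we need to know where the callback is stored and at what point it is triggered.

The callback is passed in when the CameraDeviceSession constructor runs.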

CameraDeviceSession::CameraDeviceSession(
    camera3_device_t* device,
    const camera_metadata_t* deviceInfo,
    const sp<ICameraDeviceCallback>& callback) :
        camera3_callback_ops({&sProcessCaptureResult, &sNotify}),
        mDevice(device),
        mDeviceVersion(device->common.version),
        mIsAELockAvailable(false),
        mDerivePostRawSensKey(false),
        mNumPartialResults(1),
        mResultBatcher(callback) {
    mDeviceInfo = deviceInfo;
    camera_metadata_entry partialResultsCount =
            mDeviceInfo.find(ANDROID_REQUEST_PARTIAL_RESULT_COUNT);
    if (partialResultsCount.count > 0) {
        mNumPartialResults = partialResultsCount.data.i32[0];
    }
    mResultBatcher.setNumPartialResults(mNumPartialResults);
    camera_metadata_entry aeLockAvailableEntry = mDeviceInfo.find(
            ANDROID_CONTROL_AE_LOCK_AVAILABLE);
    if (aeLockAvailableEntry.count > 0) {
        mIsAELockAvailable = (aeLockAvailableEntry.data.u8[0] ==
                ANDROID_CONTROL_AE_LOCK_AVAILABLE_TRUE);
    }
    // Determine whether we need to derive sensitivity boost values for older devices.
    // If post-RAW sensitivity boost range is listed, so should post-raw sensitivity control
    // be listed (as the default value 100)
    if (mDeviceInfo.exists(ANDROID_CONTROL_POST_RAW_SENSITIVITY_BOOST_RANGE)) {
        mDerivePostRawSensKey = true;
    }
    mInitFail = initialize();
}

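The callback is passed to the constructor of CameraDeviceSession's mResultBatcher member, so CameraDeviceSession::ResultBatcher now holds it. The full ResultBatcher declaration is in CameraDeviceSession.h.

From now on, any callback from the lower layer to the upper layer has to go through the mCallback held by CameraDeviceSession::ResultBatcher.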

class ResultBatcher {

public:

ResultBatcher(const sp<ICameraDeviceCallback>& callback);

void setNumPartialResults(uint32_t n);

void setBatchedStreams(const std::vector<int>& streamsToBatch);

void setResultMetadataQueue(std::shared_ptr<ResultMetadataQueue> q);

void registerBatch(uint32_t frameNumber, uint32_t batchSize);

void notify(NotifyMsg& msg);

void processCaptureResult(CaptureResult& result);

protected:

struct InflightBatch {

// Protect access to entire struct. Acquire this lock before read/write any data or

// calling any methods. processCaptureResult and notify will compete for this lock

// HIDL IPCs might be issued while the lock is held

Mutex mLock;

bool allDelivered() const;

uint32_t mFirstFrame;

uint32_t mLastFrame;

uint32_t mBatchSize;

bool mShutterDelivered = false;

std::vector<NotifyMsg> mShutterMsgs;

struct BufferBatch {

BufferBatch(uint32_t batchSize) {

mBuffers.reserve(batchSize);

}

bool mDelivered = false;

// This currently assumes every batched request will output to the batched stream

// and since HAL must always send buffers in order, no frameNumber tracking is

// needed

std::vector<StreamBuffer> mBuffers;

};

// Stream ID -> VideoBatch

std::unordered_map<int, BufferBatch> mBatchBufs;

struct MetadataBatch {

// (frameNumber, metadata)

std::vector<std::pair<uint32_t, CameraMetadata>> mMds;

};

// Partial result IDs that has been delivered to framework

uint32_t mNumPartialResults;

uint32_t mPartialResultProgress = 0;

// partialResult -> MetadataBatch

std::map<uint32_t, MetadataBatch> mResultMds;

// Set to true when batch is removed from mInflightBatches

// processCaptureResult and notify must check this flag after acquiring mLock to make

// sure this batch isn''t removed while waiting for mLock

bool mRemoved = false;

};

// Get the batch index and pointer to InflightBatch (nullptrt if the frame is not batched)

// Caller must acquire the InflightBatch::mLock before accessing the InflightBatch

// It''s possible that the InflightBatch is removed from mInflightBatches before the

// InflightBatch::mLock is acquired (most likely caused by an error notification), so

// caller must check InflightBatch::mRemoved flag after the lock is acquried.

// This method will hold ResultBatcher::mLock briefly

std::pair<int, std::shared_ptr<InflightBatch>> getBatch(uint32_t frameNumber);

static const int NOT_BATCHED = -1;

// move/push function avoids "hidl_handle& operator=(hidl_handle&)", which clones native

// handle

void moveStreamBuffer(StreamBuffer&& src, StreamBuffer& dst);

void pushStreamBuffer(StreamBuffer&& src, std::vector<StreamBuffer>& dst);

void sendBatchMetadataLocked(

std::shared_ptr<InflightBatch> batch, uint32_t lastPartialResultIdx);

// Check if the first batch in mInflightBatches is ready to be removed, and remove it if so

// This method will hold ResultBatcher::mLock briefly

void checkAndRemoveFirstBatch();

// The following sendXXXX methods must be called while the InflightBatch::mLock is locked

// HIDL IPC methods will be called during these methods.

void sendBatchShutterCbsLocked(std::shared_ptr<InflightBatch> batch);

// send buffers for all batched streams

void sendBatchBuffersLocked(std::shared_ptr<InflightBatch> batch);

// send buffers for specified streams

void sendBatchBuffersLocked(

std::shared_ptr<InflightBatch> batch, const std::vector<int>& streams);

// End of sendXXXX methods

// helper methods

void freeReleaseFences(hidl_vec<CaptureResult>&);

void notifySingleMsg(NotifyMsg& msg);

void processOneCaptureResult(CaptureResult& result);

void invokeProcessCaptureResultCallback(hidl_vec<CaptureResult> &results, bool tryWriteFmq);

// Protect access to mInflightBatches, mNumPartialResults and mStreamsToBatch

// processCaptureRequest, processCaptureResult, notify will compete for this lock

// Do NOT issue HIDL IPCs while holding this lock (except when HAL reports error)

mutable Mutex mLock;

std::deque<std::shared_ptr<InflightBatch>> mInflightBatches;

uint32_t mNumPartialResults;

std::vector<int> mStreamsToBatch;

const sp<ICameraDeviceCallback> mCallback;

std::shared_ptr<ResultMetadataQueue> mResultMetadataQueue;

// Protect against invokeProcessCaptureResultCallback()

Mutex mProcessCaptureResultLock;

} mResultBatcher;
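The locking rule described in the comments above (look up the batch under the batcher lock, then take the batch's own lock and re-check the removed flag) can be sketched in isolation. This is a minimal, simplified model and not the AOSP implementation: `Batch`, `Batcher`, `handleResult` and `kNotBatched` are hypothetical names, `std::mutex` stands in for `Mutex`, and identifying a batch by a frame-number range is an assumption of this sketch.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <memory>
#include <mutex>
#include <utility>

constexpr int kNotBatched = -1;  // mirrors NOT_BATCHED in the excerpt

// Hypothetical stand-in for InflightBatch, reduced to what the rule needs.
struct Batch {
    std::mutex lock;       // plays the role of InflightBatch::mLock
    bool removed = false;  // plays the role of InflightBatch::mRemoved
    std::uint32_t firstFrame = 0;
    std::uint32_t lastFrame = 0;
};

class Batcher {
public:
    void addBatch(std::uint32_t first, std::uint32_t last) {
        assert(first <= last);
        auto batch = std::make_shared<Batch>();
        batch->firstFrame = first;
        batch->lastFrame = last;
        std::lock_guard<std::mutex> guard(mLock);
        mBatches.push_back(std::move(batch));
    }

    // Holds the batcher lock only briefly. Returning a shared_ptr keeps the
    // batch object alive even if it is dropped from the deque afterwards.
    std::pair<int, std::shared_ptr<Batch>> getBatch(std::uint32_t frame) {
        std::lock_guard<std::mutex> guard(mLock);
        for (const auto& batch : mBatches) {
            if (frame >= batch->firstFrame && frame <= batch->lastFrame) {
                return std::make_pair(static_cast<int>(frame - batch->firstFrame), batch);
            }
        }
        return std::make_pair(kNotBatched, std::shared_ptr<Batch>());
    }

    // Marks the oldest batch as removed (under its own lock), then drops it.
    void removeFirstBatch() {
        std::lock_guard<std::mutex> guard(mLock);
        if (mBatches.empty()) return;
        std::shared_ptr<Batch> batch = mBatches.front();
        {
            std::lock_guard<std::mutex> batchGuard(batch->lock);
            batch->removed = true;
        }
        mBatches.pop_front();
    }

private:
    std::mutex mLock;  // plays the role of ResultBatcher::mLock
    std::deque<std::shared_ptr<Batch>> mBatches;
};

// Returns true only if the frame belongs to a batch that is still in flight.
bool handleResult(Batcher& batcher, std::uint32_t frame) {
    auto found = batcher.getBatch(frame);
    if (found.first == kNotBatched) return false;
    std::lock_guard<std::mutex> guard(found.second->lock);
    return !found.second->removed;  // re-check after taking the batch lock
}
```

A caller that obtained the `shared_ptr` before the batch was removed still holds a valid object, which is why the flag check after locking is needed rather than a lookup alone.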

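At this point the openSession work is done. Its main job is to hand the upper layer's callback down to the lower layer and to return a usable camera session from the lower layer back up, so that the two layers can communicate in both directions.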

1. What is the session that is obtained, and why does it matter?

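The session is an ICameraDeviceSession object. It represents the session established between the camera provider and the camera service for operating a camera device, and it is what lets the camera service make IPC calls into code running in the camera provider process.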

1.1. Getting the session's capture request metadata queue

auto requestQueueRet = session->getCaptureRequestMetadataQueue(

[&queue](const auto& descriptor) {

queue = std::make_shared<RequestMetadataQueue>(descriptor);

if (!queue->isValid() || queue->availableToWrite() <= 0) {

ALOGE("HAL returns empty request metadata fmq, not use it");

queue = nullptr;

// don't use the queue onwards.

}

});

The call lands in getCaptureRequestMetadataQueue in the HAL-layer CameraDeviceSession.cpp:

Return<void> CameraDeviceSession::getCaptureRequestMetadataQueue(

ICameraDeviceSession::getCaptureRequestMetadataQueue_cb _hidl_cb) {

_hidl_cb(*mRequestMetadataQueue->getDesc());

return Void();

}
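The two excerpts above form a pair: the HAL side passes a queue descriptor to a synchronous callback, and the framework side builds a queue from it and discards the queue if it is unusable. Below is a minimal sketch of that shape in plain C++, without HIDL. `QueueDesc`, `Queue`, `getRequestQueueDesc` and `openRequestQueue` are hypothetical stand-ins, and modelling validity as "capacity greater than zero" is an assumption made only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <memory>

// Hypothetical stand-in for the FMQ descriptor.
struct QueueDesc {
    std::size_t capacity;
};

// Hypothetical stand-in for RequestMetadataQueue built from a descriptor.
class Queue {
public:
    explicit Queue(const QueueDesc& desc) : mCapacity(desc.capacity) {}
    bool isValid() const { return mCapacity > 0; }
    std::size_t availableToWrite() const { return mCapacity; }

private:
    std::size_t mCapacity;
};

// "HAL side": reports the descriptor through a callback that runs before
// this function returns, like _hidl_cb in the excerpt.
void getRequestQueueDesc(const QueueDesc& halDesc,
                         const std::function<void(const QueueDesc&)>& cb) {
    assert(cb);  // a caller must supply a callback
    cb(halDesc);
}

// "Framework side": builds the queue inside the callback and drops it again
// if it turns out to be unusable, so later code can simply test for nullptr.
std::shared_ptr<Queue> openRequestQueue(const QueueDesc& halDesc) {
    std::shared_ptr<Queue> queue;
    getRequestQueueDesc(halDesc, [&queue](const QueueDesc& desc) {
        queue = std::make_shared<Queue>(desc);
        if (!queue->isValid() || queue->availableToWrite() == 0) {
            queue = nullptr;  // do not use the queue from here on
        }
    });
    return queue;
}
```

Capturing `queue` by reference is safe here only because the callback is invoked synchronously; with an asynchronous callback the local variable could be gone by the time it runs.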

mRequestMetadataQueue is initialized when CameraDeviceSession::initialize runs.

int32_t reqFMQSize = property_get_int32("ro.camera.req.fmq.size", /*default*/-1);

if (reqFMQSize < 0) {

reqFMQSize = CAMERA_REQUEST_METADATA_QUEUE_SIZE;

} else {

ALOGV("%s: request FMQ size overridden to %d", __FUNCTION__, reqFMQSize);

}

mRequestMetadataQueue = std::make_unique<RequestMetadataQueue>(

static_cast<size_t>(reqFMQSize),

false /* non blocking */);

if (!mRequestMetadataQueue->isValid()) {

ALOGE("%s: invalid request fmq", __FUNCTION__);

return true;

}

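The code first reads the ro.camera.req.fmq.size property. If the property is not set (the read returns the default of -1), the request metadata queue is created with the default size CAMERA_REQUEST_METADATA_QUEUE_SIZE, which is 1 MB. This queue matters: subsequent camera capture requests pass their metadata through it.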
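The size selection can be written as a small pure function to make the two branches explicit. This is only a sketch: `resolveRequestFmqSize` and `kDefaultRequestFmqSize` are hypothetical names, and the 1 MiB default is taken from the "1M" stated in the text rather than from the header that defines CAMERA_REQUEST_METADATA_QUEUE_SIZE.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed default of 1 MiB, following the size given in the text.
constexpr std::size_t kDefaultRequestFmqSize = std::size_t{1} << 20;

// propValue is what a property lookup with a default of -1 would return:
// negative means the property was not set, anything else is an override.
std::size_t resolveRequestFmqSize(std::int32_t propValue) {
    if (propValue < 0) {
        return kDefaultRequestFmqSize;
    }
    return static_cast<std::size_t>(propValue);
}

// Exercises both branches.
inline void demoResolveRequestFmqSize() {
    assert(resolveRequestFmqSize(-1) == kDefaultRequestFmqSize);  // property missing
    assert(resolveRequestFmqSize(4096) == 4096u);                 // property set
}
```

Note that an override of 0 is passed through unchanged in this sketch, as in the excerpt, where only the later isValid() check decides whether the queue is usable.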

1.2. Getting the session's capture result metadata queue

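This is similar to the request metadata queue, except that the result metadata queue holds the result data of camera captures. The source is not reproduced here; readers can look it up directly.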

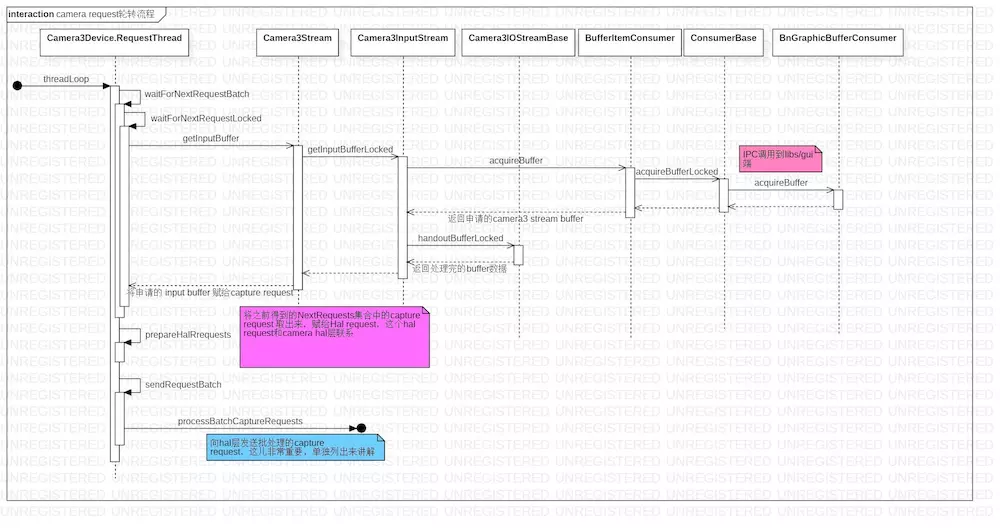

2. Starting the capture request thread

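Once the camera service and the camera provider have established a session, the capture request thread is started. Every capture request sent afterwards is executed on this thread; this is what is commonly called the capture request loop.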

The capture request loop is started in Camera3Device::initializeCommonLocked.

/** Start up request queue thread */

mRequestThread = new RequestThread(this, mStatusTracker, mInterface, sessionParamKeys);

res = mRequestThread->run(String8::format("C3Dev-%s-ReqQueue", mId.string()).string());

if (res != OK) {

SET_ERR_L("Unable to start request queue thread: %s (%d)",

strerror(-res), res);

mInterface->close();

mRequestThread.clear();

return res;

}

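This starts the capture request queue for the current session and runs it on the RequestThread. The thread keeps running: when a new capture request arrives, it is placed in the session's request queue and processing continues. The loop is essential; the camera cannot work without it.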

The main work of the loop is done in Camera3Device::RequestThread::threadLoop, the thread body function defined in native code.

bool Camera3Device::RequestThread::threadLoop() {

ATRACE_CALL();

status_t res;

// Handle paused state.

if (waitIfPaused()) {

return true;

}

// Wait for the next batch of requests.

waitForNextRequestBatch();

if (mNextRequests.size() == 0) {

return true;

}

//......

// Prepare a batch of HAL requests and output buffers.

res = prepareHalRequests();

if (res == TIMED_OUT) {

// Not a fatal error if getting output buffers time out.

cleanUpFailedRequests(/*sendRequestError*/ true);

// Check if any stream is abandoned.

checkAndStopRepeatingRequest();

return true;

} else if (res != OK) {

cleanUpFailedRequests(/*sendRequestError*/ false);

return false;

}

// Inform waitUntilRequestProcessed thread of a new request ID

{

Mutex::Autolock al(mLatestRequestMutex);

mLatestRequestId = latestRequestId;

mLatestRequestSignal.signal();

}

//......

bool submitRequestSuccess = false;

nsecs_t tRequestStart = systemTime(SYSTEM_TIME_MONOTONIC);

if (mInterface->supportBatchRequest()) {

submitRequestSuccess = sendRequestsBatch();

} else {

submitRequestSuccess = sendRequestsOneByOne();

}

//......

return submitRequestSuccess;

}
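The return values in threadLoop follow the convention of Android's Thread class: returning true asks for threadLoop to be called again, returning false ends the thread. The sketch below reproduces only that control flow on the calling thread, without real threads or HAL calls. `RequestLoopSketch`, `Request`, `Status` and `run` are hypothetical names, and the outcome of preparing each request is supplied by the caller instead of coming from prepareHalRequests.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

enum class Status { Ok, TimedOut, Fatal };

// Hypothetical request: an id plus the outcome that "preparing" it will have.
struct Request {
    int id;
    Status prepareResult;
};

class RequestLoopSketch {
public:
    void submit(Request request) {
        assert(!mExitPending);  // submitting after an exit request is a caller bug here
        mQueue.push_back(request);
    }
    void requestExit() { mExitPending = true; }

    // true = call me again, false = stop the loop.
    bool threadLoop() {
        if (mQueue.empty()) {
            return !mExitPending;  // nothing to do this round
        }
        Request request = mQueue.front();
        mQueue.pop_front();
        if (request.prepareResult == Status::TimedOut) {
            mDropped.push_back(request.id);  // not fatal: drop it and keep looping
            return true;
        }
        if (request.prepareResult != Status::Ok) {
            mDropped.push_back(request.id);  // fatal: clean up and stop
            return false;
        }
        mSent.push_back(request.id);  // stands in for submitting to the HAL
        return true;
    }

    // Stand-in for the base class driver; returns how often threadLoop ran.
    int run() {
        int calls = 0;
        bool again = true;
        while (again) {
            again = threadLoop();
            ++calls;
        }
        return calls;
    }

    const std::vector<int>& sent() const { return mSent; }
    const std::vector<int>& dropped() const { return mDropped; }
    std::size_t pending() const { return mQueue.size(); }

private:
    std::deque<Request> mQueue;
    std::vector<int> mSent;
    std::vector<int> mDropped;
    bool mExitPending = false;
};
```

With this shape, a timeout costs one request but leaves the loop alive, while any other failure stops the loop and leaves later requests unprocessed, which matches the two error branches in the excerpt.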

waitForNextRequestBatch() keeps polling the lower layer for InputBuffer data. The input buffers it obtains are stored in the request and consumed later.

The call flow is listed here first; follow it alongside the code. The camera producer/consumer model will be covered in detail later.

[Figure: camera request loop call flow]

To help readers find the code quickly, the corresponding source locations are listed here:

Camera3Device::RequestThread : frameworks/av/services/camera/libcameraservice/device3/Camera3Device.cpp, which contains the inner class RequestThread, a thread class.

Camera3Stream : frameworks/av/services/camera/libcameraservice/device3/Camera3Stream.cpp

Camera3InputStream : frameworks/av/services/camera/libcameraservice/device3/Camera3InputStream.cpp

Camera3IOStreamBase : frameworks/av/services/camera/libcameraservice/device3/Camera3IOStreamBase.cpp

BufferItemConsumer : frameworks/native/libs/gui/BufferItemConsumer.cpp

ConsumerBase : frameworks/native/libs/gui/ConsumerBase.cpp

BnGraphicBufferConsumer : frameworks/native/libs/gui/IGraphicBufferConsumer.cpp

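For a capture request sent down from the upper layer, the first step is to request a consumer buffer from the lower layer. The buffer is stored with the capture request; later these buffers are reused, continually being filled with data and continually being consumed.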

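Once capture requests are running, the camera HAL layer also receives the batched capture requests, so that it can get ready and start interacting with the camera driver layer. HAL-layer request handling is covered in the next chapter.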

小礼物走一走,来简书关注我

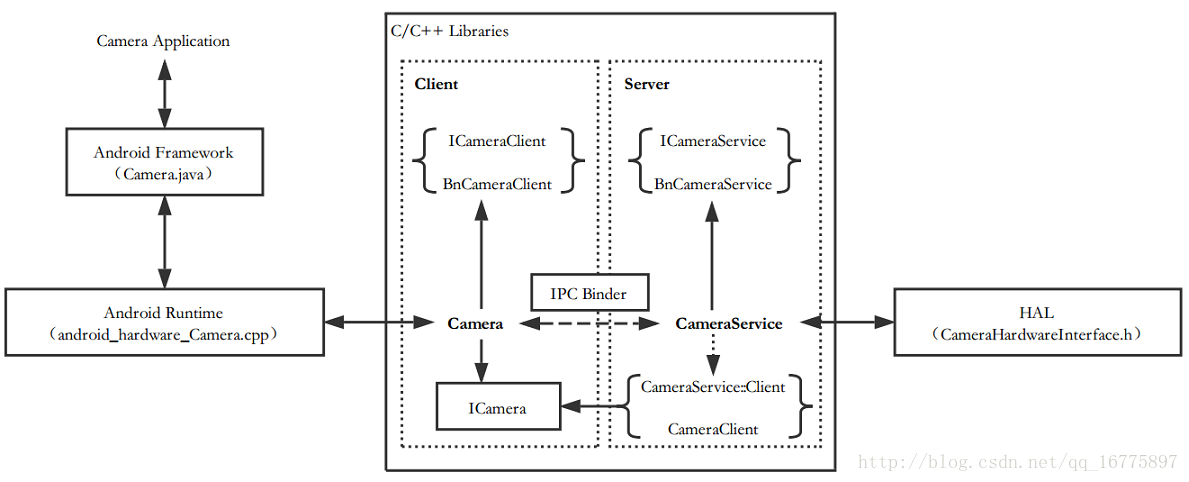

Android Camera Flow Study Notes (1): Camera Basic Architecture

Camera architecture

NOTE: this is the architecture for Android Camera API 1.

The Camera architecture mirrors the overall Android architecture:

Framework : Camera.java

Android Runtime : android_hardware_Camera.cpp

Library :

Client (Camera.cpp, ICameraClient.cpp, etc...)

Server (CameraService.cpp, ICameraService.cpp, etc...)

HAL : CameraHardwareInterface.h

The list above shows how Camera maps onto the layers of the Android architecture.

Architecture diagram

- NOTE: the Device Drivers part below the HAL layer is fairly complex and will take more time to study, so it is left out for now.

File locations (Android 7.1 source)

Application: (not the focus of this study)

packages/apps/Camera2/src/com/android/camera/***

Framework:

frameworks/base/core/java/android/hardware/Camera.java

Android Runtime:

frameworks/base/core/jni/android_hardware_Camera.cpp

C/C++ Libraries:

Client:

frameworks/av/camera/CameraBase.cpp

frameworks/av/camera/Camera.cpp

frameworks/av/camera/ICamera.cpp

frameworks/av/camera/aidl/android/hardware/ICamera.aidl

frameworks/av/camera/aidl/android/hardware/ICameraClient.aidl

Server:

frameworks/av/camera/cameraserver/main_cameraserver.cpp

frameworks/av/services/camera/libcameraservice/CameraService.cpp

frameworks/av/services/camera/libcameraservice/api1/CameraClient.cpp

frameworks/av/camera/aidl/android/hardware/ICameraService.aidl

HAL:

HAL 1:

frameworks/av/services/camera/libcameraservice/device1/CameraHardwareInterface.h

HAL 3: (these notes mainly cover the HAL 1 mechanism; HAL 3 will be added later)

frameworks/av/services/camera/libcameraservice/device3/***

Summary

Following on from the previous post on the basic Android architecture, this one gives a first impression of the Camera architecture.

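As the architecture diagram shows, the Camera architecture maps one-to-one onto the Android architecture. After the application calls a Camera method, the command passes through the framework layer, the runtime, the native libraries, and the hardware abstraction layer in turn, and finally reaches the device. Once the device has acted, the resulting data travels back up through the same layers in reverse order.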

Note that the native library layer involves a client/server (C/S) structure:

Commands and data are passed through the interaction between client and server.

In fact, only the server talks to the HAL layer.

Because the client and the server are different processes, they rely on the Binder IPC mechanism to communicate. (Binder is analyzed in detail in reference book 1.)
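The division of roles in the C/S structure can be illustrated with a small in-process sketch. It does not use Binder: the `IService` interface only marks where the process boundary would be, and `FakeHal`, `Server` and `Client` are hypothetical names that do not correspond to the real CameraService or Camera classes.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical HAL stand-in that records the commands it receives.
class FakeHal {
public:
    std::string execute(const std::string& command) {
        mLog.push_back(command);
        return "data-for-" + command;
    }
    const std::vector<std::string>& log() const { return mLog; }

private:
    std::vector<std::string> mLog;
};

// What the client sees. In the real stack this boundary is crossed with
// Binder IPC; here it is an ordinary virtual call.
class IService {
public:
    virtual ~IService() = default;
    virtual std::string request(const std::string& command) = 0;
};

// Only the server holds a reference to the HAL.
class Server : public IService {
public:
    explicit Server(std::shared_ptr<FakeHal> hal) : mHal(std::move(hal)) {
        assert(mHal != nullptr);
    }
    std::string request(const std::string& command) override {
        return mHal->execute(command);  // command goes down, data comes back up
    }

private:
    std::shared_ptr<FakeHal> mHal;
};

// The client knows the service interface and nothing about the HAL.
class Client {
public:
    explicit Client(std::shared_ptr<IService> service) : mService(std::move(service)) {}
    std::string takePicture() { return mService->request("takePicture"); }

private:
    std::shared_ptr<IService> mService;
};
```

Because `Client` depends only on `IService`, replacing the direct call with a proxy that marshals the request to another process would not change the client code, which is the property the C/S split relies on.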
