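Contents:

- Kubernetes Study Notes 4: Quick Start with Kubernetes Applications (Kubernetes beginner tutorial)

- (3) Kubernetes Quick Start

- Kubernetes as Database: Querying Kubernetes Resources with kubesql

- Kubernetes RBAC in Practice: User and Role Access Control, Generating kubectl Configuration

- Kubernetes Study Notes 5: Introduction to Defining Kubernetes Resource Manifests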

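Kubernetes Study Notes 4: Quick Start with Kubernetes Applications (Kubernetes beginner tutorial)

I. Related commands

1. kubectl

kubectl connects to the API server to create, delete, update, and query Kubernetes resource objects such as Pods, Services, and controllers. The Pod controllers we use most often are ReplicaSet, Deployment, StatefulSet, DaemonSet, Job, and CronJob. Even a node is an object.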

[root@k8smaster ~]# kubectl --help

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner): # commands for beginners

create Create a resource from a file or from stdin. # create

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate): # intermediate basic commands

explain Documentation of resources

get Display one or many resources # read

edit Edit a resource on the server # update

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector # delete

Deploy Commands: # deployment commands

rollout Manage the rollout of a resource # rolling update and rollback

scale Set a new size for a Deployment, ReplicaSet, Replication Controller, or Job # change the scale of an application

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController # scale automatically, i.e. create an HPA

Cluster Management Commands: # cluster management commands

certificate Modify certificate resources. # certificates

cluster-info Display cluster info # cluster information

top Display Resource (CPU/Memory/Storage) usage. # view resource usage

cordon Mark node as unschedulable # no new Pods are scheduled onto the node

uncordon Mark node as schedulable # the node accepts new Pods again

drain Drain node in preparation for maintenance # drain mode

taint Update the taints on one or more nodes # add taints to a node; only Pods that tolerate a taint can be scheduled onto that node. The master is tainted by default, so Pods are not created on it by default, which keeps the master running only system components

Troubleshooting and Debugging Commands: # troubleshooting and debugging commands

describe Show details of a specific resource or group of resources # detailed description of a resource

logs Print the logs for a container in a pod # view logs

attach Attach to a running container # similar to docker attach

exec Execute a command in a container # similar to docker exec

port-forward Forward one or more local ports to a pod # port forwarding

proxy Run a proxy to the Kubernetes API server # proxy

cp Copy files and directories to and from containers. # copy files between the local machine and containers

auth Inspect authorization # test authorization

Advanced Commands: # advanced commands

apply Apply a configuration to a resource by filename or stdin # create or update

patch Update field(s) of a resource using strategic merge patch # patch

replace Replace a resource by filename or stdin # replace

wait Experimental: Wait for one condition on one or many resources # wait

convert Convert config files between different API versions # convert

Settings Commands: # settings commands

label Update the labels on a resource # add labels

annotate Update the annotations on a resource # add an annotation to a resource

completion Output shell completion code for the specified shell (bash or zsh) # command completion

Other Commands: # other commands

alpha Commands for features in alpha

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Runs a command-line plugin

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

2. View kubectl version or cluster information

[root@k8smaster ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.1", GitCommit:"b1b29978270dc22fecc592ac55d903350454310a", GitTreeState:"clean", BuildDate:"2018-07-17T18:53:20Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.1", GitCommit:"b1b29978270dc22fecc592ac55d903350454310a", GitTreeState:"clean", BuildDate:"2018-07-17T18:43:26Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

[root@k8smaster ~]# kubectl cluster-info

Kubernetes master is running at https://192.168.10.10:6443

KubeDNS is running at https://192.168.10.10:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

3. The kubectl run command

[root@k8smaster ~]# kubectl run --help

Create and run a particular image, possibly replicated.

Creates a deployment or job to manage the created container(s). # the containers (i.e. the Pod) are created under one of these two kinds of controller

Examples:

# Start a single instance of nginx.

kubectl run nginx --image=nginx # start a Pod from the nginx image

# Start a single instance of hazelcast and let the container expose port 5701 .

kubectl run hazelcast --image=hazelcast --port=5701

# Start a single instance of hazelcast and set environment variables "DNS_DOMAIN=cluster" and "POD_NAMESPACE=default" in the container.

kubectl run hazelcast --image=hazelcast --env="DNS_DOMAIN=cluster" --env="POD_NAMESPACE=default"

# Start a single instance of hazelcast and set labels "app=hazelcast" and "env=prod" in the container.

kubectl run hazelcast --image=nginx --labels="app=hazelcast,env=prod"

# Start a replicated instance of nginx.

kubectl run nginx --image=nginx --replicas=5 # start 5 Pods

# Dry run. Print the corresponding API objects without creating them.

kubectl run nginx --image=nginx --dry-run # dry-run mode

# Start a single instance of nginx, but overload the spec of the deployment with a partial set of values parsed from JSON.

kubectl run nginx --image=nginx --overrides='{ "apiVersion": "v1", "spec": { ... } }'

# Start a pod of busybox and keep it in the foreground, don't restart it if it exits.

kubectl run -i -t busybox --image=busybox --restart=Never # by default a container that exits is replaced automatically; with --restart=Never it is not

# Start the nginx container using the default command, but use custom arguments (arg1 .. argN) for that command.

kubectl run nginx --image=nginx -- <arg1> <arg2> ... <argN>

# Start the nginx container using a different command and custom arguments.

kubectl run nginx --image=nginx --command -- <cmd> <arg1> ... <argN> # run a custom command

# Start the perl container to compute π to 2000 places and print it out.

kubectl run pi --image=perl --restart=OnFailure -- perl -Mbignum=bpi -wle 'print bpi(2000)'

# Start the cron job to compute π to 2000 places and print it out every 5 minutes.

kubectl run pi --schedule="0/5 * * * ?" --image=perl --restart=OnFailure -- perl -Mbignum=bpi -wle 'print bpi(2000)' # create a scheduled job

Use kubectl run to create an nginx container in dry-run mode

[root@k8smaster ~]# kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=1 --dry-run=true

deployment.apps/nginx-deploy created (dry run) # the application managed by the Deployment controller is named nginx-deploy

Use kubectl run to create an nginx container

[root@k8smaster ~]# kubectl run nginx-deploy --image=nginx:1.14-alpine --port=80 --replicas=1

deployment.apps/nginx-deploy created

[root@k8smaster ~]# kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-deploy 1 1 1 0 23s

Checking again a little later shows that it is now available

[root@k8smaster ~]# kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx-deploy 1 1 1 1 2m

View the Pod that was created

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deploy-5b595999-vw5vt 1/1 Running 0 3m

[root@k8smaster ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-deploy-5b595999-vw5vt 1/1 Running 0 4m 10.244.2.2 k8snode2

Checking the network interfaces on node 2 shows that the Pod's veth interface is attached to the cni0 bridge

[root@k8snode2 ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:b3:80:ea brd ff:ff:ff:ff:ff:ff

inet 192.168.10.12/24 brd 192.168.10.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::df7a:6e6c:357:ba25/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:85:22:4d:73 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN

link/ether 92:d1:5f:6c:71:7b brd ff:ff:ff:ff:ff:ff

inet 10.244.2.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::90d1:5fff:fe6c:717b/64 scope link

valid_lft forever preferred_lft forever

5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP qlen 1000

link/ether 0a:58:0a:f4:02:01 brd ff:ff:ff:ff:ff:ff

inet 10.244.2.1/24 scope global cni0

valid_lft forever preferred_lft forever

inet6 fe80::6429:a3ff:fe46:ac7e/64 scope link

valid_lft forever preferred_lft forever

6: vethadaa4f42@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP

link/ether 3e:4d:5f:db:62:17 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::3c4d:5fff:fedb:6217/64 scope link

valid_lft forever preferred_lft forever

[root@k8snode2 ~]# docker exec -it 706159bf29fc /bin/sh

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 0A:58:0A:F4:02:02

inet addr:10.244.2.2 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:15 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1206 (1.1 KiB) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

/ #

[root@k8snode2 ~]# curl 10.244.2.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8snode2 ~]#

4. kubectl delete: use this command to delete the nginx Pod that was just created. After the deletion, the controller starts a new Pod

[root@k8smaster ~]# kubectl delete pods nginx-deploy-5b595999-vw5vt

pod "nginx-deploy-5b595999-vw5vt" deleted

[root@k8smaster ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-deploy-5b595999-kbj6j 0/1 ContainerCreating 0 17s <none> k8snode1

[root@k8smaster ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

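nginx-deploy-5b595999-kbj6j 1/1 Running 0 2m 10.244.1.2 k8snode1

5. kubectl expose: the Pod's IP has now changed, so we need a Service that provides a fixed access point. We create it with kubectl expose. (By default a Service only serves clients, i.e. Pods, inside the cluster.) In the command below, deployment is the controller type, nginx-deploy is the controller name, --port is the Service port, and --target-port is the Pod port.

[root@k8smaster ~]# kubectl expose deployment nginx-deploy --name=nginx --port=80 --target-port=80 --protocol=TCP

service/nginx exposed

View and test the newly created Service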

[root@k8smaster ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

nginx ClusterIP 10.103.127.92 <none> 80/TCP 17m

[root@k8smaster ~]# kubectl describe service nginx

Name: nginx

Namespace: default

Labels: run=nginx-deploy

Annotations: <none>

Selector: run=nginx-deploy

Type: ClusterIP

IP: 10.103.127.92

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.2:80

Session Affinity: None

Events: <none>

[root@k8smaster ~]# curl 10.103.127.92

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8smaster ~]#

Delete the Pod, wait for it to be recreated automatically, then request the same Service again: it is still reachable

[root@k8smaster ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

client 0/1 Error 0 14m 10.244.2.3 k8snode2

nginx-deploy-5b595999-jdbtn 1/1 Running 0 31s 10.244.1.3 k8snode1

[root@k8smaster ~]# kubectl delete pods nginx-deploy-5b595999-jdbtn

pod "nginx-deploy-5b595999-jdbtn" deleted

[root@k8smaster ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

client 0/1 Error 0 14m 10.244.2.3 k8snode2

nginx-deploy-5b595999-d9lv5 1/1 Running 0 27s 10.244.2.4 k8snode2

[root@k8smaster ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21h

nginx ClusterIP 10.103.127.92 <none> 80/TCP 42m

[root@k8smaster ~]# curl 10.103.127.92

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

6. Cluster DNS: the nameserver of every Pod that is started points to the DNS Service in the cluster's system namespace (kube-system)
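To make that name resolution concrete, here is a minimal shell sketch of how a resolver expands a short name such as nginx using a search list. It assumes glibc-style behaviour and a resolv.conf with the search domains default.svc.cluster.local, svc.cluster.local, cluster.local and ndots:5, as in the transcript in this step. The function name dns_candidates is made up for illustration; it is not part of kubectl or Kubernetes, and other resolver libraries may behave differently.

```shell
#!/usr/bin/env bash
# dns_candidates NAME NDOTS SEARCH_DOMAIN...
# Prints, in order, the names a glibc-style resolver would try for NAME.
# Hypothetical helper for illustration only.
dns_candidates() {
  local name="$1" ndots="$2" dots domain
  shift 2
  # Count the dots in NAME; $(( )) strips any padding that wc adds.
  dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
  if [ "$dots" -lt "$ndots" ]; then
    # Fewer dots than ndots: the search domains are tried first.
    for domain in "$@"; do
      echo "$name.$domain"
    done
  fi
  # Finally the name as given. This sketch leaves out the search-list
  # fallback that applies to names with at least NDOTS dots.
  echo "$name"
}

dns_candidates nginx 5 default.svc.cluster.local svc.cluster.local cluster.local
```

The first candidate, nginx.default.svc.cluster.local, is the full name of the nginx Service in the default namespace, which is why a Pod in that namespace can reach the Service by its short name.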

[root@k8smaster ~]# kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21h

default nginx ClusterIP 10.103.127.92 <none> 80/TCP 29m

kube-system kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 21h

[root@k8smaster ~]# kubectl describe svc kube-dns -n kube-system

Name: kube-dns

Namespace: kube-system

Labels: k8s-app=kube-dns

kubernetes.io/cluster-service=true

kubernetes.io/name=KubeDNS

Annotations: prometheus.io/port=9153

prometheus.io/scrape=true

Selector: k8s-app=kube-dns

Type: ClusterIP

IP: 10.96.0.10

Port: dns 53/UDP

TargetPort: 53/UDP

Endpoints: 10.244.0.2:53,10.244.0.3:53

Port: dns-tcp 53/TCP

TargetPort: 53/TCP

Endpoints: 10.244.0.2:53,10.244.0.3:53

Session Affinity: None

Events: <none>

[root@k8smaster ~]# kubectl run client --image=busybox --replicas=1 -it --restart=Never

If you don't see a command prompt, try pressing enter.

/ # cat /etc/resolv.conf

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

7. Show labels when listing Pods (a Service is associated with its Pods through labels)

[root@k8smaster ~]# kubectl get pods --show-labels -o wide

NAME READY STATUS RESTARTS AGE IP NODE LABELS

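nginx-deploy-5b595999-d9lv5 1/1 Running 0 15m 10.244.2.4 k8snode2 pod-template-hash=16151555,run=nginx-deploy

8. kubectl edit: opens a live resource such as a Service in an editor. Here the editor was closed without saving any changes, so nothing was modified

[root@k8smaster ~]# kubectl edit svc nginx

Edit cancelled, no changes made.

9. A Service also provides load balancing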

[root@k8smaster ~]# kubectl run myapp --image=ikubernetes/myapp:v1 --replicas=2

deployment.apps/myapp created

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-848b5b879b-5hg7h 1/1 Running 0 2m

myapp-848b5b879b-ptqjd 1/1 Running 0 2m

nginx-deploy-5b595999-d9lv5 1/1 Running 0 56m

[root@k8smaster ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

myapp-848b5b879b-5hg7h 1/1 Running 0 3m 10.244.2.5 k8snode2

myapp-848b5b879b-ptqjd 1/1 Running 0 3m 10.244.1.4 k8snode1

nginx-deploy-5b595999-d9lv5 1/1 Running 0 57m 10.244.2.4 k8snode2

^C

[root@k8smaster ~]# kubectl get deployment -o wide -w # -w keeps watching for changes

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

myapp 2 2 2 2 4m myapp ikubernetes/myapp:v1 run=myapp

nginx-deploy 1 1 1 1 4h nginx-deploy nginx:1.14-alpine run=nginx-deploy

Create a Service and see how requests are forwarded

[root@k8smaster ~]# kubectl expose deployment myapp --name=myapp --port=80

service/myapp exposed

[root@k8smaster ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 22h

myapp ClusterIP 10.106.171.207 <none> 80/TCP 12s

nginx ClusterIP 10.103.127.92 <none> 80/TCP 1h

[root@k8smaster ~]# kubectl describe svc myapp

Name: myapp

Namespace: default

Labels: run=myapp

Annotations: <none>

Selector: run=myapp

Type: ClusterIP

IP: 10.106.171.207

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.4:80,10.244.2.5:80

Session Affinity: None

Events: <none>

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-ptqjd

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-ptqjd

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-ptqjd

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-ptqjd

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-ptqjd

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-ptqjd

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-5hg7h

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-5hg7h

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-5hg7h

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

myapp-848b5b879b-5hg7h

[root@k8smaster ~]# curl 10.106.171.207/hostname.html

10. kubectl scale: change the number of replicas dynamically

[root@k8smaster ~]# kubectl scale --replicas=5 deployment myapp

deployment.extensions/myapp scaled

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-848b5b879b-5hg7h 1/1 Running 0 22m

myapp-848b5b879b-6fvr5 1/1 Running 0 30s

myapp-848b5b879b-dpwpj 1/1 Running 0 30s

myapp-848b5b879b-f77xt 1/1 Running 0 30s

myapp-848b5b879b-ptqjd 1/1 Running 0 22m

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

[root@k8smaster ~]# while true; do curl 10.106.171.207/hostname.html;sleep 1; done

myapp-848b5b879b-ptqjd

myapp-848b5b879b-5hg7h

myapp-848b5b879b-dpwpj

myapp-848b5b879b-6fvr5

myapp-848b5b879b-dpwpj

myapp-848b5b879b-f77xt

myapp-848b5b879b-dpwpj

myapp-848b5b879b-5hg7h

myapp-848b5b879b-dpwpj

myapp-848b5b879b-f77xt

myapp-848b5b879b-dpwpj

^C

[root@k8smaster ~]# kubectl scale --replicas=3 deployment myapp

deployment.extensions/myapp scaled

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-848b5b879b-5hg7h 1/1 Running 0 25m

myapp-848b5b879b-dpwpj 1/1 Running 0 3m

myapp-848b5b879b-ptqjd 1/1 Running 0 25m

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

[root@k8smaster ~]# while true; do curl 10.106.171.207/hostname.html;sleep 1; done

myapp-848b5b879b-ptqjd

myapp-848b5b879b-dpwpj

myapp-848b5b879b-ptqjd

myapp-848b5b879b-5hg7h

myapp-848b5b879b-ptqjd

^C

11. kubectl set image: rolling update

First look at the Pods and their image. After the rolling update, both the Pod names and the image have changed

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-848b5b879b-5hg7h 1/1 Running 0 30m

myapp-848b5b879b-dpwpj 1/1 Running 0 8m

myapp-848b5b879b-ptqjd 1/1 Running 0 30m

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

[root@k8smaster ~]# kubectl describe myapp-848b5b879b-5hg7h

error: the server doesn't have a resource type "myapp-848b5b879b-5hg7h"

[root@k8smaster ~]# kubectl describe pod myapp-848b5b879b-5hg7h

Name: myapp-848b5b879b-5hg7h

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: k8snode2/192.168.10.12

Start Time: Wed, 08 May 2019 22:41:52 +0800

Labels: pod-template-hash=4046164356

run=myapp

Annotations: <none>

Status: Running

IP: 10.244.2.5

Controlled By: ReplicaSet/myapp-848b5b879b

Containers:

myapp:

Container ID: docker://54587de57edd701951f1e0492504a17be62c9fa18002f5bfc58b252ed536b029

Image: ikubernetes/myapp:v1

Image ID: docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 08 May 2019 22:42:45 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-jvtl7 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-jvtl7:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-jvtl7

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulling 18h kubelet, k8snode2 pulling image "ikubernetes/myapp:v1"

Normal Pulled 18h kubelet, k8snode2 Successfully pulled image "ikubernetes/myapp:v1"

Normal Created 18h kubelet, k8snode2 Created container

Normal Started 18h kubelet, k8snode2 Started container

Normal Scheduled 30m default-scheduler Successfully assigned default/myapp-848b5b879b-5hg7h to k8snode2

[root@k8smaster ~]# kubectl set image deployment myapp myapp=ikubernetes/myapp:v2

deployment.extensions/myapp image updated

[root@k8smaster ~]# kubectl rollout status deployment myapp # check the status of the rolling update

deployment "myapp" successfully rolled out

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-74c94dcb8c-ccqzs 1/1 Running 0 2m

myapp-74c94dcb8c-jmj4p 1/1 Running 0 2m

myapp-74c94dcb8c-lc2n6 1/1 Running 0 2m

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

In another shell, request the Service in a loop to watch the rolling update take effect

[root@k8smaster ~]# while true; do curl 10.106.171.207;sleep 1; done

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

12. kubectl rollout: version rollback

[root@k8smaster ~]# curl 10.106.171.207

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-74c94dcb8c-8l4n7 1/1 Running 0 11s

myapp-74c94dcb8c-dzlfx 1/1 Running 0 14s

myapp-74c94dcb8c-tsd2s 1/1 Running 0 12s

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

[root@k8smaster ~]# kubectl rollout undo deployment myapp # without --to-revision this rolls back to the previous revision

deployment.extensions/myapp

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-74c94dcb8c-8l4n7 0/1 Terminating 0 22s

myapp-74c94dcb8c-dzlfx 1/1 Terminating 0 25s

myapp-74c94dcb8c-tsd2s 0/1 Terminating 0 23s

myapp-848b5b879b-5k4s4 1/1 Running 0 5s

myapp-848b5b879b-bzblz 1/1 Running 0 3s

myapp-848b5b879b-hzbf5 1/1 Running 0 2s

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-848b5b879b-5k4s4 1/1 Running 0 13s

myapp-848b5b879b-bzblz 1/1 Running 0 11s

myapp-848b5b879b-hzbf5 1/1 Running 0 10s

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1h

[root@k8smaster ~]# curl 10.106.171.207

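Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Automatic scaling is also possible, but it needs resource monitoring to work with.

13. Running iptables -vnL shows the iptables rules, and the Services appear among them (add -t nat to list the NAT rules that forward Service traffic).

14. Changing a Service's type to NodePort makes the corresponding Pods reachable from outside the cluster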

[root@k8smaster ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

myapp ClusterIP 10.106.171.207 <none> 80/TCP 50m

nginx ClusterIP 10.103.127.92 <none> 80/TCP 2h

[root@k8smaster ~]# kubectl edit svc myapp

service/myapp edited

# The editor opens with the following content

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1

kind: Service

metadata:

  creationTimestamp: 2019-05-09T08:28:47Z

  labels:

    run: myapp

  name: myapp

  namespace: default

  resourceVersion: "49560"

  selfLink: /api/v1/namespaces/default/services/myapp

  uid: 776ef2c3-7234-11e9-be24-000c29d142be

spec:

  clusterIP: 10.106.171.207

  externalTrafficPolicy: Cluster

  ports:

  - nodePort: 30935

    port: 80

    protocol: TCP

    targetPort: 80

  selector:

    run: myapp

  sessionAffinity: None

  type: NodePort # changed here from ClusterIP to NodePort

status:

  loadBalancer: {}

[root@k8smaster ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

myapp NodePort 10.106.171.207 <none> 80:30935/TCP 51m

nginx ClusterIP 10.103.127.92 <none> 80/TCP 2h

[root@k8smaster ~]# curl 192.168.10.10:30935

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
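As a small illustration of the PORT(S) column shown above, the following shell sketch pulls the node port out of a value such as 80:30935/TCP and checks it against 30000-32767, which is the default NodePort range of the API server. The helper names node_port_of and nodeport_in_default_range are hypothetical, and a cluster whose administrator changed the range would need different bounds.

```shell
#!/usr/bin/env bash
# node_port_of PORTS
# Prints the node port from a PORT(S) value such as "80:30935/TCP".
# Fails for values without a node port, e.g. "80/TCP" on a ClusterIP Service.
# Hypothetical helper for illustration only.
node_port_of() {
  local rest
  case "$1" in
    *:*)
      rest="${1#*:}"        # drop "80:" and keep "30935/TCP"
      echo "${rest%%/*}"    # drop "/TCP"
      ;;
    *)
      return 1
      ;;
  esac
}

# nodeport_in_default_range PORT
# Succeeds when PORT lies in 30000-32767, the default NodePort range.
nodeport_in_default_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

ports="80:30935/TCP"
if np="$(node_port_of "$ports")" && nodeport_in_default_range "$np"; then
  # Any node IP can be used; 192.168.10.10 is the master from the transcript.
  echo "reachable from outside at http://192.168.10.10:$np"
fi
```

Because the node port is opened on every node, the same port number works with the IP address of any node in the cluster.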

(3) Kubernetes Quick Start

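Core Kubernetes objects

The API Server exposes a RESTful programming interface. A resource that it manages is an endpoint in the Kubernetes API that stores a collection of API objects of a certain kind. For example, the built-in Pod resource is the collection of all Pod objects. Resource objects are the entities that represent the state of the cluster. They typically describe which containerized applications run on which nodes, what resources those applications need, and the policies that govern them, such as restart, upgrade, and fault-tolerance behavior. An object is also a "record of intent": once it is created, Kubernetes works continuously to ensure that it exists. Pod, Deployment, and Service are among the most commonly used core objects.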

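The Pod resource object

A Pod is a logical component that groups one or more application containers, storage resources, a dedicated IP address, and other options that support running the containers, as shown in the figure. A Pod is the unit of deployment and the atomic unit of execution in Kubernetes, that is, a single running instance of an application. It usually consists of one or more closely related application containers that share resources.

The Kubernetes network model requires the IP addresses of all Pods to be in the same network plane (the same IP network), so that Pods can communicate with each other directly by IP address. No matter which worker node they run on, Pods behave like hosts on the same LAN. The processes in a Pod, however, run in containers that are isolated from one another, and those containers share two key resources: the network and storage volumes.

Network: each Pod is assigned an IP address that is unique within the cluster, called the Pod IP. All containers in the same Pod share the Pod's Network and UTS namespaces, including the hostname, IP address, and ports. These containers can therefore talk to each other over the loopback interface lo, while communication with components outside the Pod goes through the ClusterIP and port of a Service resource object.

Storage volumes: a user can configure a set of "volume" resources for a Pod. The volumes can be shared by all containers in the Pod, which lets the containers share data. Volumes also ensure that data is not lost when a container terminates and restarts, or even when it is deleted, which gives the Pod persistent storage for the duration of its lifecycle.

A Pod represents one specific instance of an application. Scaling the application means creating several Pod instances for it at the same time, each of which is one running "replica" of the application. Creating and managing these replicated Pods is usually the job of another group of objects called "controllers", for example the Deployment controller.

When a Pod is created, a Pod Preset object can also be used to inject specific information into it, such as ConfigMaps, Secrets, volumes, volume mounts, and environment variables. With Pod Presets, the author of a Pod template does not have to provide all of this information explicitly in every template, and can therefore define a template without knowing every detail of each application that has to be configured. (PodPreset was an alpha feature and was removed in Kubernetes v1.20.)

Based on the desired state and the resources available on each node, the Master schedules the Pod onto a selected worker node. The worker node downloads the image from the image registry it points to and starts the containers in its local container runtime. The Master stores the state of the whole cluster in etcd and shares it with the cluster components and clients through the API Server.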

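Controller

In the design of a Kubernetes cluster, a Pod is an object with a lifecycle. A Pod that is created manually or directly by a Controller is placed on a worker node by the Scheduler. When the application process in its containers finishes, the Pod terminates normally and is then deleted. A Pod is also reclaimed when its node runs out of resources or fails.

A Pod has no "self-healing" ability of its own. If it fails because of the worker node or even the scheduler itself, it is deleted. Likewise, a reclaim caused by resource exhaustion or node failure deletes the Pods involved. By design, Kubernetes uses controllers to manage these disposable Pods. A controller ensures, for example, that the number of Pod replicas of a deployed application strictly matches the number the user asked for, and it creates Pods from a Pod template. This is what provides scaling, rolling updates, and self-healing for Pods. When a node fails, for instance, the responsible controller recreates the Pods that ran on that node on other nodes.

A controller is itself a resource type and comes in several implementations. The workload-related ones, such as ReplicationController, Deployment, StatefulSet, DaemonSet, and Job, are collectively called Pod controllers.

The definition of a Pod controller usually consists of the desired number of replicas, a Pod template, and a label selector. The controller matches its label selector against the labels of Pod objects. Every Pod that satisfies the selector is managed by that controller and counted toward its replica total, and the controller ensures that this total exactly matches the desired number of replicas.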
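The matching step described above can be sketched in a few lines of shell. This is only an illustration of equality-based selectors (key=value pairs that must all be present); real selectors also support set-based expressions, and the actual matching is done by the controllers, not by a script. The function name matches_selector is hypothetical, and the Pod names and labels are sample data in the style of the output of kubectl get pods --show-labels.

```shell
#!/usr/bin/env bash
# matches_selector SELECTOR LABELS
# Succeeds when every key=value pair in SELECTOR also appears in LABELS.
# Both arguments are comma-separated key=value lists.
# Hypothetical helper for illustration only.
matches_selector() {
  local selector="$1" labels="$2" pair
  local IFS=','
  for pair in $selector; do
    case ",$labels," in
      *",$pair,"*) ;;       # this pair is present, check the next one
      *) return 1 ;;        # a required pair is missing
    esac
  done
  return 0
}

# Count how many Pods a controller with the selector run=myapp would own.
count=0
while read -r pod labels; do
  if matches_selector "run=myapp" "$labels"; then
    count=$((count + 1))
  fi
done <<'EOF'
myapp-848b5b879b-5hg7h pod-template-hash=4046164356,run=myapp
myapp-848b5b879b-ptqjd pod-template-hash=4046164356,run=myapp
nginx-deploy-5b595999-d9lv5 pod-template-hash=16151555,run=nginx-deploy
EOF
echo "matching pods: $count"
```

A controller compares a count like this with its desired replica number and creates or deletes Pods until the two agree.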

Service

尽管

Pod对象可以拥有IP地址,但此地址无法确保在Pod对象重启或被重建后保持不变,这会为集群中的Pod应用间依赖关系的维护带来麻烦:前端Pod应用(依赖方)无法基于固定地址持续跟踪后端Pod应用(被依赖方)。于是,Service资源被用于在被访问的Pod对象中添加一个有这固定IP地址的中间层,客户端向此地址发起访问请求后由相关的Service资源调度并代理至后端的Pod对象。换言之,

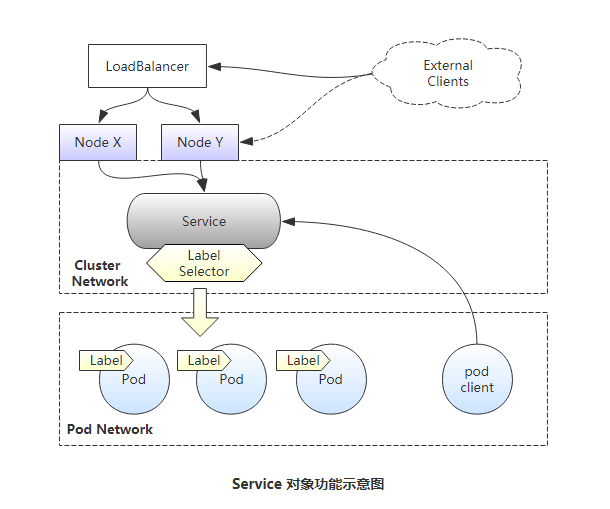

Service是“微服务”的一种实现,事实上它是一种抽象:通过规则定义出由多个Pod对象组合而成的逻辑集合,并附带访问这组Pod对象的策略。Service对象挑选、关联Pod对象的方式同Pod控制器一样,都是要基于Label Selector进行定义,其示意图如下

Service IP是一种虚拟IP,也称为Cluster IP,它专用于集群内通信,通常使用专用的地址段,如“10.96.0.0/12”网络,各Service对象的IP地址在此范围内由系统动态分配。集群内的

Pod对象可直接请求此类的Cluster IP,例如,图中来自Pod client的访问请求即可以Service的Cluster IP作为目标地址,但集群网络属于私有网络地址,它们仅在集群内部可达。将集群外部的访问流量引入集群内部的常用方法是通过节点网络进行,实现方法是通过工作节点的IP地址和某端口(NodePort)接入请求并将其代理至相应的Service对象的Cluster IP上的服务端口,而后由Service对象将请求代理至后端的Pod对象的Pod IP及应用程序监听的端口。因此,图中的External Clients这种来自集群外部的客户端无法直接请求此Service提供的服务,而是需要事先经由某一个工作节点(如NodeY)的IP地址进行,这类请求需要两次转发才能到达目标Pod对象,因此在通信效率上必然存在负面影响。事实上,

NodePort会部署于集群中的每一个节点,这就意味着,集群外部的客户端通过任何一个工作节点的IP地址来访问定义好的NodePort都可以到达相应的Service对象。此种场景下,如果存在集群外部的一个负载均衡器,即可将用户请求负载均衡至集群中的部分或者所有节点。这是一种称为“LoadBalancer”类型的Service,它通常是由Cloud Provider自动创建并提供的软件负载均衡器,不过,也可以是有管理员手工配置的诸如F5一类的硬件设备。简单来说,

Service主要有三种常用类型:第一种是仅用于集群内部通信的ClusterIP类型;第二种是接入集群外部请求的NodePort类型,它工作与每个节点的主机IP之上;第三种是LoadBalancer类型,它可以把外部请求负载均衡至多个Node的主机IP的NodePort之上。此三种类型中,每一种都以其前一种为基础才能实现,而且第三种类型中的LoadBalancer需要协同集群外部的组件才能实现,并且此外部组件并不接受Kubernetes的管理。
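To tell a Cluster IP from a Pod IP at a glance, the range check can be sketched in shell. The code below assumes the example Service range 10.96.0.0/12 from the text and uses sample addresses in the style of the transcripts (Service addresses such as 10.103.127.92, Pod addresses such as 10.244.2.4). The helpers ip_to_int and in_cidr are hypothetical names, and the real range of a cluster depends on how it was set up.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D
# Prints the IPv4 address as a single integer.
# Hypothetical helper for illustration only.
ip_to_int() {
  local IFS=.
  # Split the address on dots into $1..$4.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_cidr IP NETWORK/BITS
# Succeeds when IP lies inside the given IPv4 network.
in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  # Build the netmask; 4294967295 is 2^32 - 1.
  mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

for ip in 10.103.127.92 10.106.171.207 10.244.2.4; do
  if in_cidr "$ip" 10.96.0.0/12; then
    echo "$ip is inside 10.96.0.0/12"
  else
    echo "$ip is outside 10.96.0.0/12"
  fi
done
```

With these sample values the two Service addresses fall inside the range and the Pod address does not, which matches the separation between the Service network and the Pod network described above.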

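Imperative orchestration of containerized applications

Deploying application Pods

When a Pod that runs on its own in a Kubernetes cluster terminates unexpectedly, its lifecycle is over, and the user has to create a similar Pod by hand to keep the application in its containers available. With a large number of Pods, and especially with microservices, keeping up with this quickly becomes exhausting. The workload controllers of Kubernetes automatically ensure that the Pods they manage run the way the user expects, so Pods are mostly created and managed through such controllers, including Deployment, ReplicaSet, and ReplicationController.

1) Create a Deployment controller object

The kubectl run command creates a Deployment controller directly from the command line and runs the Pod's containers from the image given with --image. The --dry-run option tests the command without actually creating any resource objects. (This describes the kubectl version used in this article. Since kubectl v1.18, kubectl run creates only a Pod, and kubectl create deployment is the command that creates a Deployment.)

# Create a Deployment controller named nginx whose Pods use the nginx:1.12 image, expose port 80 of the container, and set the number of replicas to 1. First check the command for errors with --dry-run.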

[root@k8s-master ~]# kubectl run nginx --image=nginx:1.12 --port=80 --replicas=1 --dry-run=true

[root@k8s-master ~]# kubectl run nginx --image=nginx:1.12 --port=80 --replicas=1

deployment.apps/nginx created

[root@k8s-master ~]# kubectl get pods # list all Pod objects

NAME READY STATUS RESTARTS AGE

nginx-685cc95cd4-9z4f4 1/1 Running 0 89s

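### Parameters:

--image     the image to use.

--port      the container port to expose.

--replicas  the number of Pod replicas the controller should create automatically.

2) Print information about resource objects

"kubectl get" retrieves information about resource objects of all kinds. It can show brief, type-specific output, detailed output in YAML or JSON, or attributes selected with a custom Go template.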

[root@k8s-master ~]# kubectl get deployment # list all Deployment controller objects

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 66s

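### Fields:

NAME        name of the resource object

READY       number of ready Pod replicas / number of replicas the controller is expected to manage

UP-TO-DATE  number of Pod replicas that match the latest Pod definition; during a rolling update, the number of replicas that have already been updated

AVAILABLE   number of Pod replicas currently available, that is, able to serve requests

AGE         how long the Deployment has existed

Note: a Deployment controls its Pods through a ReplicaSet controller instance rather than directly. In addition, Pods created this way automatically receive a label of the form "run=<Controller_Name>".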

[root@k8s-master ~]# kubectl get deployment -o wide # show Deployment objects with additional columns

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx 1/1 1 1 69m nginx nginx:1.12 run=nginx

[root@k8s-master ~]# kubectl get pods # list Pod resources

NAME READY STATUS RESTARTS AGE

nginx-685cc95cd4-9z4f4 1/1 Running 0 72m

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-685cc95cd4-9z4f4 1/1 Running 0 73m 10.244.1.12 k8s-node1 <none> <none>

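### Fields:

NAME      name of the Pod object

READY     a container is ready once its process has initialized and can serve requests; this field shows ready containers / total containers

STATUS    current state of the Pod, such as Pending, Running, Succeeded, Failed, or Unknown

RESTARTS  number of times the Pod's containers have restarted

IP        the Pod's IP address, usually assigned automatically by the network plugin

NODE      the node the Pod was scheduled to

3) Access the Pod object

The Pod deployed here runs nginx, so we can check that it responds. A Pod's IP address can be reached directly from any node in the Kubernetes cluster.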

[root@k8s-master ~]# kubectl get pods -o wide # show Pod details

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-685cc95cd4-9z4f4 1/1 Running 0 88m 10.244.1.12 k8s-node1 <none> <none>

[root@k8s-master ~]# curl 10.244.1.12 # access from the master node of the Kubernetes cluster

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8s-node2 ~]# curl 10.244.1.12 # access from a worker node of the Kubernetes cluster

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

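</html>

The requests above work while that single Pod exists. If the Pod dies unexpectedly, or its node goes down, the Deployment controller immediately creates a replacement Pod, and the old IP address stops responding. We cannot log in to a node to look up the new IP every time. The following test demonstrates this: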

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-685cc95cd4-9z4f4 1/1 Running 0 99m 10.244.1.12 k8s-node1 <none> <none>

[root@k8s-master ~]# kubectl delete pods nginx-685cc95cd4-9z4f4 # delete the Pod shown above

pod "nginx-685cc95cd4-9z4f4" deleted

[root@k8s-master ~]# kubectl get pods -o wide # right after the deletion, the Deployment controller created a new Pod, this time on k8s-node2

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-685cc95cd4-z5z9p 1/1 Running 0 89s 10.244.2.14 k8s-node2 <none> <none>

[root@k8s-master ~]# curl 10.244.1.12 # the old Pod IP is no longer reachable

curl: (7) Failed connect to 10.244.1.12:80; No route to host

[root@k8s-master ~]#

[root@k8s-master ~]# curl 10.244.2.14 # the new Pod responds normally

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

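</html>

Deploying a Service Object

Put simply, a Service object can be viewed as the group of Pod objects picked out by its label selector, for which it provides port proxying and scheduling on the sockets those Pods listen on. As the test above shows, without a Service every client has to use each Pod's own address, and that was with a single Pod; with several Pods the problem only grows. A Service solves this.

1) Create a Service object (example: proxying a Service port to a Pod port)

"kubectl expose" creates a Service object that "exposes" an application on the network.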

# Method 1

[root@k8s-master ~]# kubectl expose deployment nginx --name=nginx-svc --port=80 --target-port=80 --protocol=TCP # create a Service named nginx-svc for the nginx Deployment that forwards Service port 80 to container port 80

service/nginx-svc exposed

# Method 2 (equivalent; use one or the other)

[root@k8s-master ~]# kubectl expose deployment/nginx --name=nginx-svc --port=80 --target-port=80 --protocol=TCP

service/nginx-svc exposed

### Parameters:

--name         name of the Service object

--port         port of the Service object

--target-port  port of the container in the Pod

--protocol     protocol to use

[root@k8s-master ~]# kubectl get svc # list Service objects; kubectl get service also works

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25h

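nginx-svc ClusterIP 10.109.54.136 <none> 80/TCP 41s

The Cluster IP of nginx-svc can now be requested from any node in the Kubernetes cluster to reach the Pods of the nginx Deployment. Any other Pod created in the cluster can reach them as well, either through this IP or through the Service name. For example:

# Access through the Service IP from the master node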

[root@k8s-master ~]# curl 10.109.54.136

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Create a client Pod from the busybox image with 1 replica. -it attaches an interactive terminal; --restart=Never means the Pod is never restarted.

[root@k8s-master ~]# kubectl run client --image=busybox --replicas=1 -it --restart=Never

If you don't see a command prompt, try pressing enter.

/ # wget -O - -q 10.109.54.136 # request the IP of the nginx-svc Service created above

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

......

/ #

/ # wget -O - -q nginx-svc # request the Service created above by its name, nginx-svc

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

2) Create a Service object (exposing Pods outside the cluster with a "NodePort" Service)

[root@k8s-master ~]# kubectl run mynginx --image=nginx:1.12 --port=80 --replicas=2 # create a Deployment controller that runs the nginx image

[root@k8s-master ~]# kubectl get pods # list the Pods that were created

NAME READY STATUS RESTARTS AGE

client 1/1 Running 0 15h

mynginx-68676f64-28fm7 1/1 Running 0 24s

mynginx-68676f64-9q8dj 1/1 Running 0 24s

nginx-685cc95cd4-z5z9p 1/1 Running 0 16h

[root@k8s-master ~]#

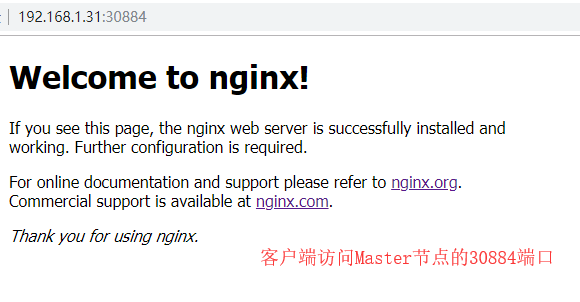

[root@k8s-master ~]# kubectl expose deployments/mynginx --type="NodePort" --port=80 --name=mynginx-svc # create a Service that exposes the Pods created by mynginx outside the cluster as type NodePort

service/mynginx-svc exposed

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get svc # list Services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 41h

mynginx-svc NodePort 10.111.89.58 <none> 80:30884/TCP 10s

nginx-svc ClusterIP 10.109.54.136 <none> 80/TCP 15h

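### Fields:

PORT(S)  For mynginx-svc, 80:30884/TCP means that every worker node captures traffic sent to its local port 30884 and proxies it to port 80 of this Service. Users outside the cluster can therefore request the Service through this port on any node of the cluster.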
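To make the PORT(S) notation concrete, here is a minimal Python sketch that splits a single entry such as "80:30884/TCP" into its parts. The function name `parse_port_spec` is hypothetical, and the sketch assumes one port entry per string rather than a comma-separated list.

```python
def parse_port_spec(spec: str) -> dict:
    """Split a PORT(S) entry like '80:30884/TCP' into service port, node port, and protocol."""
    ports, protocol = spec.split("/")
    service_port, _, node_port = ports.partition(":")
    return {
        "service_port": int(service_port),
        # ClusterIP Services show no node port, e.g. '443/TCP'.
        "node_port": int(node_port) if node_port else None,
        "protocol": protocol,
    }


print(parse_port_spec("80:30884/TCP"))
print(parse_port_spec("443/TCP"))
```

For the NodePort Service above, the first number is the Service port and the second is the port opened on every node.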

[root@k8s-master ~]#

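[root@k8s-master ~]# netstat -nlutp |grep 30884 # check whether the master node listens on port 30884

tcp6 0 0 :::30884 :::* LISTEN 7340/kube-proxy

[root@k8s-node1 ~]#

[root@k8s-node1 ~]# netstat -nlutp |grep 30884 # check whether the worker node listens on port 30884

tcp6 0 0 :::30884 :::* LISTEN 2537/kube-proxy

Clients can now reach the service through port 30884 on the Kubernetes cluster nodes.

3) Describe a Service resource object

"kubectl describe services" prints detailed information about a Service object, typically including its Cluster IP, the label selector used to associate Pods, and the endpoints of the associated Pods. For example: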

[root@k8s-master ~]# kubectl describe service mynginx-svc

Name: mynginx-svc

Namespace: default

Labels: run=mynginx

Annotations: <none>

Selector: run=mynginx

Type: NodePort

IP: 10.111.89.58

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 30884/TCP

Endpoints: 10.244.1.14:80,10.244.2.15:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

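### Fields:

Selector                 the label selector this Service uses to pick its associated Pods

Type                     the Service type, such as ClusterIP, NodePort, or LoadBalancer

IP                       the Service's ClusterIP

Port                     the exposed port, on which the Service receives and answers requests

TargetPort               the target port exposed by the container; the Service port routes requests to it

NodePort                 the Service's NodePort; whether it has a valid value depends on the Type field

Endpoints                the back-end endpoints: the IP and port of every Pod picked by the Service's selector

Session Affinity         whether session stickiness is enabled

External Traffic Policy  the scheduling policy for external traffic

Scaling Up and Down

"Scaling" means changing the number of Pod replicas of a particular controller: "scaling up" increases the replica count and "scaling down" reduces it. Either way, the user has to state the target number explicitly.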

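The load-balancing mechanism built into a Service distributes traffic automatically when it has more than one back-end replica. It also tracks the health of the associated Pods so that requests go only to available back ends. When a Deployment manages several Pod instances, the user can, where needed, use its "rolling update" mechanism to upgrade the container image to a new version or to change those Pod attributes that support dynamic modification.

When a Deployment is created with kubectl run, the "--replicas=" option sets the number of Pod replicas the object creates and manages. The number can be changed at run time and takes effect immediately. "kubectl scale" is the dedicated command for changing the scale of a controller's application; it supports scaling Deployment resources up and down.

The Deployment nginx created in the example above has only one Pod, so the requests it can carry are limited to that single Pod's capacity. Before request traffic approaches or exceeds that capacity, Kubernetes scaling can be used to add Pod replicas and raise the service capacity.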
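The idea behind scaling, comparing a desired replica count with the Pods that currently exist, can be sketched in a few lines of Python. This is a conceptual illustration only: the function name `reconcile` is hypothetical, and removing Pods from the end of the list is an arbitrary choice of this sketch, not the policy a real controller follows.

```python
def reconcile(desired, running):
    """Compare the desired replica count with the running Pods and report what to change."""
    surplus = len(running) - desired
    if surplus > 0:
        # Too many Pods: remove the surplus (which ones is arbitrary in this sketch).
        return {"create": 0, "delete": running[-surplus:]}
    # Too few Pods (or exactly enough): create the missing ones.
    return {"create": -surplus, "delete": []}


print(reconcile(3, ["pod-a"]))                    # scale up from 1 to 3
print(reconcile(2, ["pod-a", "pod-b", "pod-c"]))  # scale down from 3 to 2
```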

Scale-up example

[root@k8s-master ~]# kubectl get pods -l run=nginx # list Pods labeled run=nginx

NAME READY STATUS RESTARTS AGE

nginx-685cc95cd4-z5z9p 1/1 Running 0 17h

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl scale deployments/nginx --replicas=3 # scale up to 3 replicas

deployment.extensions/nginx scaled

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get pods -l run=nginx # check again

NAME READY STATUS RESTARTS AGE

nginx-685cc95cd4-f2cwb 1/1 Running 0 5s

nginx-685cc95cd4-pz9dk 1/1 Running 0 5s

nginx-685cc95cd4-z5z9p 1/1 Running 0 17h

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl describe deployments/nginx # show details of the Deployment nginx

Name: nginx

Namespace: default

CreationTimestamp: Thu, 29 Aug 2019 15:29:31 +0800

Labels: run=nginx

Annotations: deployment.kubernetes.io/revision: 1

Selector: run=nginx

Replicas: 3 desired | 3 updated | 3 total | 3 available | 0 unavailable

StrategyType: RollingUpdate

...

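# All Pods created by the nginx Deployment carry the same label, "run=nginx", so the back-end endpoints of the Service nginx-svc created earlier have been extended automatically, through its label selector, to these 3 Pods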

[root@k8s-master ~]# kubectl describe service/nginx-svc

Name: nginx-svc

Namespace: default

Labels: run=nginx

Annotations: <none>

Selector: run=nginx

Type: ClusterIP

IP: 10.109.54.136

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.15:80,10.244.2.14:80,10.244.2.16:80

Session Affinity: None

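Events: <none>

Scale-down example

Scaling down works like scaling up, except that the replica count is set to a smaller number than before. For example, to reduce the nginx Pods to 2 replicas: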

[root@k8s-master ~]# kubectl scale deployments/nginx --replicas=2

deployment.extensions/nginx scaled

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get pods -l run=nginx

NAME READY STATUS RESTARTS AGE

nginx-685cc95cd4-pz9dk 1/1 Running 0 10m

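nginx-685cc95cd4-z5z9p 1/1 Running 0 17h

Deleting Objects

Active objects that are no longer useful can be removed with "kubectl delete". To delete the Service object nginx-svc, for example, use the commands below:

[root@k8s-master ~]# kubectl get services # list all current Service objects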

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 43h

mynginx-svc NodePort 10.111.89.58 <none> 80:30884/TCP 96m

nginx-svc ClusterIP 10.109.54.136 <none> 80/TCP 17h

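[root@k8s-master ~]# kubectl delete service nginx-svc # delete the Service object nginx-svc

To remove every object of a given type, replace the object name in the command above with the "--all" option. For example, the following command deletes all Deployment controllers in the default namespace:

[root@k8s-master ~]# kubectl delete deployment --all

deployment.extensions "mynginx" deleted

Note: a Pod managed by a controller is re-created after it is deleted, so removing such Pods means deleting their controller. To delete a controller while keeping its Pods, add the "--cascade=false" option to the delete command.

Direct imperative management is powerful and well suited to manipulating Kubernetes resource objects, but its obvious weakness is the lack of a source of truth for the operations performed and the objects to be run. It is also quite limited: the commands offer only modest control over resource attributes, and quite a few resources cannot be created from the command line at all. A user cannot, for example, create a Pod with several containers or define storage volumes for a Pod this way. A more effective way to manage resource objects is therefore to work from manifests that store the objects' configuration.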

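Kubernetes as Database: Querying Kubernetes Resources with kubesql

Preface

kubectl is convenient enough for querying a single Kubernetes resource or a list of them, but it cannot handle joint queries across several resources (Pods and nodes, for example) or post-processing of query results. For a long time I have therefore wanted to query Kubernetes as if it were a database. Last year I developed the second version of kubesql; details are at https://xuxinkun.github.io/2019/03/11/kubesql/ and the code is kept at https://github.com/xuxinkun/kubesql/tree/python. That version improved on my earliest offline Spark approach, but it performed poorly and could not cope with medium-sized or even fairly small clusters. Deployment was cumbersome, it was not stable enough (some bugs made it exit abnormally), and it could not handle fields such as labels, so usability was poor. I was never satisfied with it but had no time to refactor it. Recently, after hearing a talk about Presto, I felt the chance to refactor had come.

This time kubesql drops the old architecture completely and is built on Presto. To quote an introduction to Presto: Presto is an open-source distributed SQL query engine for interactive analytic queries over data volumes from gigabytes to petabytes. It was designed and written to solve the problem of interactive analytics and processing speed for commercial data warehouses at Facebook's scale. Presto has a rich plugin interface that makes connecting external storage systems very easy.

The main reason for choosing Presto is that it keeps SQL query engine logic out of kubesql, while its stability and high performance keep queries efficient. kubesql's own logic can then focus on tracking changes to k8s resources and converting resources into relational data.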

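Using kubesql

The refactored kubesql is open source at https://github.com/xuxinkun/kubesql.

First, how to deploy and use it. Deployment currently uses docker; deployment on k8s will be supported soon.

A kubeconfig is needed before deploying. Assuming it is located at /root/.kube/config, a single command starts kubesql.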

docker run -it -d --name kubesql -v /root/.kube/config:/home/presto/config xuxinkun/kubesql:latest

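If the bridge network cannot reach the k8s API, use the host network by adding the --net=host parameter. Note that Presto uses port 8080, which may conflict with other services.

kubesql is then ready to use. The command is: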

docker exec -it kubesql presto --server localhost:8080 --catalog kubesql --schema kubesql

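This opens an interactive query session. Two resource types, Pods and nodes, are currently supported. They map to three tables: nodes, pods, and containers (containers are split out of pods; the reason is given in the section on how kubesql works below).

The columns of the three tables are listed at https://github.com/xuxinkun/kubesql/blob/master/docs/table.md.

Presto has built-in functions that can enrich queries: https://prestodb.io/docs/current/functions.html.

Here are some example kubesql queries.

For example, to query the CPU resources (requests and limits) of each Pod: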

presto:kubesql> select pods.namespace,pods.name,sum("requests.cpu") as "requests.cpu" ,sum("limits.cpu") as "limits.cpu" from pods,containers where pods.uid = containers.uid group by pods.namespace,pods.name

namespace | name | requests.cpu | limits.cpu

-------------------+--------------------------------------+--------------+------------

rongqi-test-01 | rongqi-test-01-202005151652391759 | 0.8 | 8.0

ljq-nopassword-18 | ljq-nopassword-18-202005211645264618 | 0.1 | 1.0

Or, to query the CPU still available for allocation on each node (the node's allocatable.cpu minus the sum of requests.cpu of all Pods on the node):

presto:kubesql> select nodes.name, nodes."allocatable.cpu" - podnodecpu."requests.cpu" from nodes, (select pods.nodename,sum("requests.cpu") as "requests.cpu" from pods,containers where pods.uid = containers.uid group by pods.nodename) as podnodecpu where nodes.name = podnodecpu.nodename;

name | _col1

-------------+--------------------

10.11.12.29 | 50.918000000000006

10.11.12.30 | 58.788

10.11.12.32 | 57.303000000000004

10.11.12.34 | 33.33799999999999

10.11.12.33 | 43.022999999999996

Or, to query all Pods created after 2020-05-12:

presto:kube> select name, namespace,creationTimestamp from pods where creationTimestamp > date('2020-05-12') order by creationTimestamp desc;

name | namespace | creationTimestamp

------------------------------------------------------+-------------------------+-------------------------

kube-api-webhook-controller-manager-7fd78ddd75-sf5j6 | kube-api-webhook-system | 2020-05-13 07:56:27.000

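Queries can also filter on labels, for example to find, and then count, all Pods whose appid label is springboot and that have not been scheduled successfully.

The appid label appears in the pods table as a column named "labels.appid", which can be used as a condition to filter Pods.

presto:kubesql> select namespace,name,phase from pods where phase = 'Pending' and "labels.appid" = 'springboot';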

namespace | name | phase

--------------------+--------------+---------

springboot-test-rd | v6ynsy3f73jn | Pending

springboot-test-rd | mu4zktenmttp | Pending

springboot-test-rd | n0yvpxxyvk4u | Pending

springboot-test-rd | dd2mh6ovkjll | Pending

springboot-test-rd | hd7b0ffuqrjo | Pending

presto:kubesql> select count(*) from pods where phase = 'Pending' and "labels.appid" = 'springboot';

_col0

-------

5

How kubesql Works

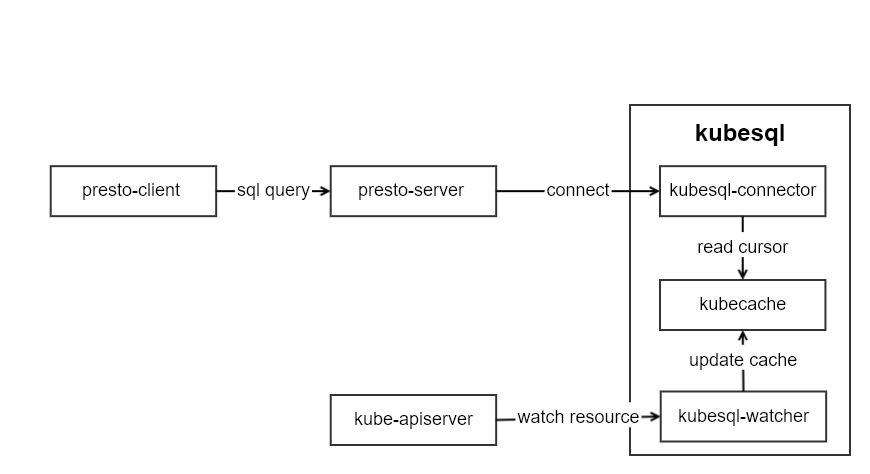

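The architecture of kubesql is shown in the figure.

kubesql consists of three main modules:

- kubesql-watcher: watches the k8s API for changes to Pods and nodes and converts their structured data into relational data (stored as Maps).

- kubecache: caches Pod and node data.

- kubesql-connector: acts as a Presto connector. It receives calls from Presto, looks up column information and the corresponding data in kubecache, and returns both to Presto.

The most important part is kubesql-connector. The Presto plugin development guide is at https://prestodb.io/docs/current/develop.html. Rather than starting from scratch, I based the connector on the existing localfile plugin, https://github.com/prestodb/presto/tree/0.234.2/presto-local-file. I will write a separate article on how to develop Presto plugins.

All data is cached in memory, so there is almost no disk requirement, but memory has to be sized to the cluster.

Taking Pod data as an example, a Pod's data falls into three main parts: metadata, spec, and status.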

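The hard part of metadata is labels and annotations. I flatten the labels map so that each key becomes a column. For example:

labels:
  app: mysql
  owner: xxx

I use labels as a prefix and append each key to form the column name, which yields two entries:

labels.app: mysql

labels.owner: xxx

If Pod A has the app label but Pod B does not, the labels.app column is null for Pod B.

Annotations are handled the same way, so they too can be used as conditions for filtering Pods.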
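The flattening described above can be sketched in Python as follows. This is an illustration under assumptions, not kubesql's actual code: the function name `flatten_labels`, the Pod names, and the dictionary layout are hypothetical.

```python
def flatten_labels(pods, prefix="labels"):
    """Turn each label key into a '<prefix>.<key>' column; missing labels become None."""
    columns = sorted({f"{prefix}.{key}" for pod in pods for key in pod.get(prefix, {})})
    rows = []
    for pod in pods:
        labels = pod.get(prefix, {})
        row = {"name": pod["name"]}
        for column in columns:
            key = column[len(prefix) + 1:]  # strip the 'labels.' prefix
            row[column] = labels.get(key)   # None plays the role of SQL null
        rows.append(row)
    return columns, rows


# Hypothetical Pods: pod-b has no 'app' label.
pods = [
    {"name": "pod-a", "labels": {"app": "mysql", "owner": "xxx"}},
    {"name": "pod-b", "labels": {"owner": "yyy"}},
]

columns, rows = flatten_labels(pods)
print(columns)
print(rows)
```

Calling the same function with prefix="annotations" would treat annotations the same way.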

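For spec, the biggest difficulty is containers. A Pod can hold several containers, so I put containers into a table of their own and add a uid column that records which Pod each row comes from. The fields of a container become columns of the containers table. For the important resource fields such as requests and limits, I use a prefix such as requests. joined with the resource name as the column name, for example requests.cpu and requests.memory. CPU is handled specially as a double in units of cores, so 100m becomes 0.1. Memory and similar quantities are bigint values in bytes.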
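The unit conversion can be illustrated with a short Python sketch. It is a simplified assumption of how such a conversion might look, not kubesql's implementation: the function names are hypothetical and only a handful of common Kubernetes quantity suffixes are handled.

```python
from decimal import Decimal

# Binary and decimal suffixes commonly seen in memory quantities (a partial list).
MEMORY_UNITS = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
}


def cpu_to_cores(quantity: str) -> float:
    """Convert a CPU quantity such as '100m' or '2' to cores as a float."""
    if quantity.endswith("m"):
        return float(Decimal(quantity[:-1]) / 1000)
    return float(quantity)


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '128Mi' or '1G' to bytes as an int."""
    # Check two-letter suffixes before one-letter ones.
    for suffix in sorted(MEMORY_UNITS, key=len, reverse=True):
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-len(suffix)]) * MEMORY_UNITS[suffix])
    return int(quantity)


print(cpu_to_cores("100m"))
print(memory_to_bytes("128Mi"))
```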

In status, the hard parts are conditions and containerStatus. conditions is a list, but each condition has a different type, so I use the type as a prefix when generating the condition's column names. For example:

conditions:
- lastProbeTime: null
  lastTransitionTime: 2020-04-22T09:03:10Z
  status: "True"
  type: Ready
- lastProbeTime: null
  lastTransitionTime: 2020-04-22T09:03:10Z
  status: "True"
  type: ContainersReady

The pods table then gets the following columns:

| Column | Type | Extra | Comment |
|---|---|---|---|
| containersready.lastprobetime | timestamp | | |
| containersready.lasttransitiontime | timestamp | | |
| containersready.message | varchar | | |
| containersready.reason | varchar | | |
| containersready.status | varchar | | |
| ready.lastprobetime | timestamp | | |
| ready.lasttransitiontime | timestamp | | |
| ready.message | varchar | | |
| ready.reason | varchar | | |
| ready.status | varchar | | |

I can then use "ready.status" = 'True' to select Pods whose condition of type Ready has status True.
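A minimal Python sketch of this condition flattening, using the two conditions from the example above. The function name `flatten_conditions` is hypothetical and the code is an illustration of the naming scheme, not kubesql's implementation.

```python
def flatten_conditions(conditions):
    """Build '<type>.<field>' columns (lower-cased) from a list of Pod conditions."""
    row = {}
    for condition in conditions:
        prefix = condition["type"].lower()
        for field, value in condition.items():
            if field == "type":
                continue  # the type is encoded in the column prefix
            row[f"{prefix}.{field.lower()}"] = value
    return row


conditions = [
    {"lastProbeTime": None, "lastTransitionTime": "2020-04-22T09:03:10Z",
     "status": "True", "type": "Ready"},
    {"lastProbeTime": None, "lastTransitionTime": "2020-04-22T09:03:10Z",
     "status": "True", "type": "ContainersReady"},
]

row = flatten_conditions(conditions)
print(sorted(row))
```

Columns such as ready.message and ready.reason would appear only when a condition carries those fields; in the table they are simply null otherwise.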

containerStatus corresponds one-to-one with containers, so I merge it into the containers table, matching rows by container name.

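Afterword

I released the refactored kubesql directly as version 1.0.0 and already use it day to day. Thanks to in-memory data and Presto's performance, queries take milliseconds on a cluster of 50,000 Pods that I tested. No obvious bugs have turned up so far. If you find a bug or want a new feature, please open an issue. I will keep maintaining the project.

Only pods and nodes are supported at present, the tip of the iceberg compared with the huge set of k8s resources. Adding each resource takes a fair amount of code, so I am considering generating the code automatically from the OpenAPI (Swagger) description.

Deployment currently uses docker; a Kubernetes deployment method will be added soon, which will be more convenient.

I am also considering having each Presto worker be responsible for one cluster's cache in the future, so that a single Presto cluster can query all k8s clusters. This still needs design work.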

Kubernetes RBAC in Practice: User and Role Access Control and Generating a kubectl Configuration

Kubernetes RBAC in Practice

Preparing the Environment

First install a Kubernetes cluster with kubeadm ([package available here](https://market.aliyun.com/products/56014009/cmxz022571.html#sku=yuncode1657100000)).

The goal of this article is to restrict a user named devuser to accessing Pods in one specific namespace.

Command-line access with kubectl

Installing cfssl

This tool makes generating certificates very convenient. PEM and CRT certificates use the same encoding, so they can be used interchangeably.

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
chmod +x cfssl_linux-amd64
mv cfssl_linux-amd64 /bin/cfssl
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
chmod +x cfssljson_linux-amd64
mv cfssljson_linux-amd64 /bin/cfssljson
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl-certinfo_linux-amd64
mv cfssl-certinfo_linux-amd64 /bin/cfssl-certinfo

Issuing the client certificate

Issue the user certificate from the CA certificate and key.

The root certificate is already in the /etc/kubernetes/pki directory:

[root@master1 ~]# ls /etc/kubernetes/pki/
apiserver.crt ca-config.json devuser-csr.json front-proxy-ca.key sa.pub
apiserver.key ca.crt devuser-key.pem front-proxy-client.crt
apiserver-kubelet-client.crt ca.key devuser.pem front-proxy-client.key
apiserver-kubelet-client.key devuser.csr front-proxy-ca.crt sa.key

Note the following files: `ca.crt ca.key ca-config.json devuser-csr.json`

Creating the ca-config.json file

cat > ca-config.json < devuser-csr.json <

Verify the certificate:

cfssl-certinfo -cert kubernetes.pem
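The contents of ca-config.json and devuser-csr.json are not preserved in the text above. The following Python sketch prints a layout that is commonly used with cfssl for this purpose; every value in it (the expiry, the profile name "kubernetes", the key size, and the names entries) is an assumption for illustration, not the author's original files. Kubernetes takes the certificate's CN as the user name, which is why the CN is devuser here.

```python
import json

# Assumed signing configuration in the usual cfssl layout.
ca_config = {
    "signing": {
        "default": {"expiry": "87600h"},
        "profiles": {
            "kubernetes": {
                "usages": ["signing", "key encipherment", "server auth", "client auth"],
                "expiry": "87600h",
            }
        },
    }
}

# Assumed certificate signing request for the user devuser.
devuser_csr = {
    "CN": "devuser",  # Kubernetes uses the CN as the user name
    "hosts": [],
    "key": {"algo": "rsa", "size": 2048},
    "names": [{"O": "k8s", "OU": "System"}],
}


def render(document):
    """Serialize a configuration dictionary as indented JSON text."""
    return json.dumps(document, indent=2)


print(render(ca_config))
print(render(devuser_csr))
```

With files like these in place, a typical invocation would be `cfssl gencert -ca=ca.crt -ca-key=ca.key -config=ca-config.json -profile=kubernetes devuser-csr.json | cfssljson -bare devuser`, which produces files named devuser.pem, devuser-key.pem, and devuser.csr, matching the names in the directory listing above.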

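Generating the config file

kubeadm has already generated admin.conf, which we can reuse directly instead of configuring the cluster parameters ourselves.

$ cp /etc/kubernetes/admin.conf devuser.kubeconfig

Set the client authentication parameters:

kubectl config set-credentials devuser \
--client-certificate=/etc/kubernetes/ssl/devuser.pem \
--client-key=/etc/kubernetes/ssl/devuser-key.pem \
--embed-certs=true \
--kubeconfig=devuser.kubeconfig

Set the context parameters:

kubectl config set-context kubernetes \
--cluster=kubernetes \
--user=devuser \
--namespace=kube-system \
--kubeconfig=devuser.kubeconfig

Set the default context:

kubectl config use-context kubernetes --kubeconfig=devuser.kubeconfig

You can inspect devuser.kubeconfig after each step to see what changed. It contains three main things:

- cluster: cluster information, including the cluster address and public key

- user: user information, the client certificate and private key. The real identity is read from the certificate; the name shown in the file is only for human readers.

- context: maintains a triple of namespace, cluster, and user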
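The relationship between the three parts can be sketched in Python with a dictionary that mirrors the shape of a kubeconfig file. The server address and certificate placeholders are hypothetical, and the function name `resolve_context` is an illustration rather than part of kubectl.

```python
# A simplified, hypothetical kubeconfig; real files carry certificate data here.
kubeconfig = {
    "clusters": [
        {"name": "kubernetes", "cluster": {"server": "https://192.0.2.10:6443"}},
    ],
    "users": [
        {"name": "devuser", "user": {"client-certificate-data": "<base64>",
                                     "client-key-data": "<base64>"}},
    ],
    "contexts": [
        {"name": "kubernetes",
         "context": {"cluster": "kubernetes", "user": "devuser",
                     "namespace": "kube-system"}},
    ],
    "current-context": "kubernetes",
}


def resolve_context(config):
    """Return the (cluster, user, namespace) triple selected by current-context."""
    name = config["current-context"]
    context = next(c["context"] for c in config["contexts"] if c["name"] == name)
    return context["cluster"], context["user"], context.get("namespace", "default")


print(resolve_context(kubeconfig))
```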

Creating a role

Create a role named pod-reader:

[root@master1 ~]# cat pod-reader.yaml
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: kube-system
  name: pod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]

kubectl create -f pod-reader.yaml

Binding the user

Create a role binding that binds the pod-reader role to devuser:

[root@master1 ~]# cat devuser-role-bind.yaml
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: read-pods
  namespace: kube-system
subjects:
- kind: User
  name: devuser # target user
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader # role to bind
  apiGroup: rbac.authorization.k8s.io

kubectl create -f devuser-role-bind.yaml
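How the Role and RoleBinding above combine into an access decision can be sketched in Python. This is a deliberately simplified model of namespaced RBAC, covering one Role and one RoleBinding only; the function name `is_allowed` and the dictionary layout are hypothetical, and the real authorizer handles many more cases.

```python
ROLE = {
    "namespace": "kube-system",
    "name": "pod-reader",
    "rules": [{"apiGroups": [""], "resources": ["pods"],
               "verbs": ["get", "watch", "list"]}],
}

BINDING = {
    "namespace": "kube-system",
    "subjects": [{"kind": "User", "name": "devuser"}],
    "roleRef": "pod-reader",
}


def is_allowed(user, verb, resource, namespace, api_group=""):
    """Return True when the bound Role grants the verb on the resource in the namespace."""
    # A RoleBinding only grants access inside its own namespace.
    if namespace != BINDING["namespace"] or BINDING["roleRef"] != ROLE["name"]:
        return False
    if not any(s["kind"] == "User" and s["name"] == user for s in BINDING["subjects"]):
        return False
    return any(
        api_group in rule["apiGroups"]
        and resource in rule["resources"]
        and verb in rule["verbs"]
        for rule in ROLE["rules"]
    )


print(is_allowed("devuser", "list", "pods", "kube-system"))  # granted by pod-reader
print(is_allowed("devuser", "list", "pods", "default"))      # other namespace
print(is_allowed("devuser", "list", "nodes", None))          # cluster-scoped resource
```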

Using the new config file

$ rm .kube/config && cp devuser.kubeconfig .kube/config

Result: devuser has no permissions in other namespaces and cannot read node information either:

[root@master1 ~]# kubectl get node
Error from server (Forbidden): nodes is forbidden: User "devuser" cannot list nodes at the cluster scope
[root@master1 ~]# kubectl get pod -n kube-system
NAME READY STATUS RESTARTS AGE
calico-kube-controllers-55449f8d88-74x8f 1/1 Running 0 8d
calico-node-clpqr 2/2 Running 0 8d
kube-apiserver-master1 1/1 Running 2 8d
kube-controller-manager-master1 1/1 Running 1 8d
kube-dns-545bc4bfd4-p6trj 3/3 Running 0 8d
kube-proxy-tln54 1/1 Running 0 8d
kube-scheduler-master1 1/1 Running 1 8d
[root@master1 ~]# kubectl get pod -n default
Error from server (Forbidden): pods is forbidden: User "devuser" cannot list pods in the namespace "default": role.rbac.authorization.k8s.io "pod-reader" not found

Dashboard access

How service accounts work

k8s has two kinds of accounts: User and service account. Users are for people; service accounts are for processes, giving them the permissions they need.

The dashboard, for instance, is a process, so we can create a service account for it and let it access k8s with that account.

Here is how admin permissions are granted to the dashboard:

╰─➤ cat dashboard-admin.yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

This binds the kubernetes-dashboard ServiceAccount to the cluster-admin ClusterRole, a very powerful cluster role that holds every permission.

[root@master1 ~]# kubectl describe clusterrole cluster-admin -n kube-system
Name: cluster-admin
Labels: kubernetes.io/bootstrapping=rbac-defaults
Annotations: rbac.authorization.kubernetes.io/autoupdate=true
PolicyRule:
  Resources  Non-Resource URLs  Resource Names  Verbs
  ---------  -----------------  --------------  -----
             [*]                []              [*]
  *.*        []                 []              [*]

The service account was created when the dashboard was created:

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

The Deployment then specifies the service account:

      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard

A safer approach

[root@master1 ~]# cat admin-token.yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

[root@master1 ~]# kubectl get secret -n kube-system|grep admin
admin-token-7rdhf kubernetes.io/service-account-token 3 14m

[root@master1 ~]# kubectl describe secret admin-token-7rdhf -n kube-system Name: admin-token-7rdhf Namespace: kube-system Labels: Annotations: kubernetes.io/service-account.name=admin kubernetes.io/service-account.uid=affe82d4-d10b-11e7-ad03-00163e01d684 Type: kubernetes.io/service-account-token Data ==== ca.crt: 1025 bytes namespace: 11 bytes token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi03cmRoZiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImFmZmU4MmQ0LWQxMGItMTFlNy1hZDAzLTAwMTYzZTAxZDY4NCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbiJ9.jSfQhFsY7V0ZmfqxM8lM_UUOoUhI86axDSeyVVtldSUY-BeP2Nw4q-ooKGJTBBsrOWvMiQePcQxJTKR1K4EIfnA2FOnVm4IjMa40pr7-oRVY37YnR_1LMalG9vrWmqFiqIsKe9hjkoFDuCaP7UIuv16RsV7hRlL4IToqmJMyJ1xj2qb1oW4P1pdaRr4Pw02XBz9yBpD1fs-lbwheu1UKcEnbHS_0S3zlmAgCrpwDFl2UYOmgUKQVpJhX4wBRRQbwo1Sn4rEFVI1NIa9l_lM7Mf6YEquLHRu3BCZTdu9YfY9pevQz4OfHE0NOvDIqmGRL8Z9kPADAXbljWzcD1m1xCQ

Use this token to log in to the dashboard UI.

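Kubernetes Study Notes 5: An Introduction to Kubernetes Resource Manifests

I. Kubernetes exposes a RESTful API and manages every object it operates on as a resource. Operations are carried out with the standard HTTP methods GET, PUT, DELETE, POST, and so on, which kubectl surfaces as commands such as kubectl run, get, edit, ...

II. A resource that has been instantiated is called an object. The core Kubernetes resources are as follows.

1. Workload resources: Pod, ReplicaSet, Deployment, StatefulSet, DaemonSet, Job, CronJob ...

2. Service discovery and load balancing: Service, Ingress ...

3. Configuration and storage: Volume. Current Kubernetes versions also support all kinds of storage volumes through CSI, the Container Storage Interface. There are also several special volume types:

a. ConfigMap: a resource used as a configuration center

b. Secret: works like ConfigMap but stores sensitive data

c. DownwardAPI: exposes information from the external environment to containers

4. Cluster-level resources

a. Namespace, Node, Role (a namespace-level resource), ClusterRole, RoleBinding, ClusterRoleBinding

5. Metadata resources

a. HPA

b. PodTemplate: the template a Pod controller uses when it creates Pods

c. LimitRange: defines resource limits

6. The list above is not exhaustive.

III. YAML in detail

1. Output Pod information in YAML format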

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-848b5b879b-5k4s4 1/1 Running 0 22h

myapp-848b5b879b-bzblz 1/1 Running 0 22h

myapp-848b5b879b-hzbf5 1/1 Running 0 22h

nginx-deploy-5b595999-d9lv5 1/1 Running 0 1d

[root@k8smaster ~]# kubectl get pod myapp-848b5b879b-5k4s4 -o yaml # output in YAML format
apiVersion: v1 # API group and version the object belongs to, written as group/version; an omitted group means the core group (the most fundamental resources)
kind: Pod # resource kind; tells Kubernetes which resource type to instantiate into a concrete object
metadata: # metadata; nests many second- and third-level fields
  creationTimestamp: 2019-05-09T09:10:00Z
  generateName: myapp-848b5b879b-
  labels:
    pod-template-hash: "4046164356"
    run: myapp
  name: myapp-848b5b879b-5k4s4
  namespace: default
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: myapp-848b5b879b
    uid: 8f3f5833-7232-11e9-be24-000c29d142be
  resourceVersion: "48605"
  selfLink: /api/v1/namespaces/default/pods/myapp-848b5b879b-5k4s4
  uid: 3977b5e7-723a-11e9-be24-000c29d142be
spec: # specification: the characteristics the object should have and the rules it should satisfy; controllers work to make them hold
  containers:
  - image: ikubernetes/myapp:v1
    imagePullPolicy: IfNotPresent
    name: myapp
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-jvtl7
      readOnly: true
  dnsPolicy: ClusterFirst
  nodeName: k8snode2
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations: # tolerations: which taints the Pod tolerates
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-jvtl7
    secret:
      defaultMode: 420
      secretName: default-token-jvtl7
status: # current state of the resource; read-only and maintained by the system, whereas spec is defined by the user. When the current state differs from the desired state, Kubernetes keeps moving the current state toward the desired one to meet the user's expectations.
  conditions:
  - lastProbeTime: null
    lastTransitionTime: 2019-05-08T15:36:44Z
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: 2019-05-08T15:36:46Z
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: null
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: 2019-05-09T09:10:00Z
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://0eccbcf513dc608277089bfe2a7b92e1639b1d63ec5d76212a65b30fffa78774
    image: ikubernetes/myapp:v1
    imageID: docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513
    lastState: {}
    name: myapp
    ready: true
    restartCount: 0
    state:
      running:
        startedAt: 2019-05-08T15:36:45Z
  hostIP: 192.168.10.12
  phase: Running
  podIP: 10.244.2.14
  qosClass: BestEffort
  startTime: 2019-05-08T15:36:44Z

2. Ways to create resources

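a. The apiserver accepts resource definitions only in JSON format, so when we created a Deployment with run earlier, the run command converted the given arguments to JSON automatically.

b. A manifest can also be supplied in YAML; it is converted to JSON automatically before it is submitted.

3. Most resource manifests consist of five parts:

a. apiVersion (group/version): identifies the API group and version the resource belongs to. Kubernetes divides the APIs served by the api-server into groups. With grouping, a change to one group affects only that group while the others keep working unchanged. Versioning each group also lets different versions of the same group coexist. Pod is the most central resource, so it belongs to the core group v1; controllers such as Deployment are core resources for application management and belong to apps/v1. A cluster generally offers three kinds of version: alpha (internal testing), beta (public testing), and stable.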

[root@k8smaster ~]# kubectl api-versions

admissionregistration.k8s.io/v1beta1

apiextensions.k8s.io/v1beta1

apiregistration.k8s.io/v1

apiregistration.k8s.io/v1beta1

apps/v1

apps/v1beta1

apps/v1beta2

authentication.k8s.io/v1

authentication.k8s.io/v1beta1

authorization.k8s.io/v1

authorization.k8s.io/v1beta1

autoscaling/v1

autoscaling/v2beta1

batch/v1

batch/v1beta1

certificates.k8s.io/v1beta1

events.k8s.io/v1beta1

extensions/v1beta1

networking.k8s.io/v1

policy/v1beta1

rbac.authorization.k8s.io/v1

rbac.authorization.k8s.io/v1beta1

scheduling.k8s.io/v1beta1

storage.k8s.io/v1

storage.k8s.io/v1beta1

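v1

b. kind: the resource kind

c. metadata: metadata, mainly the following fields

1) name: unique among resources of the same kind in the same namespace; the name of this instance of the resource kind.

2) namespace

3) labels: every type of resource can have labels, which are key/value data

4) annotations

5) ownerReferences

6) resourceVersion

7) uid: a unique identifier generated automatically by the system.

8) selfLink: a self-reference, that is, the path of this resource in the API, for example

selfLink: /api/v1/namespaces/default/pods/myapp-848b5b879b-5k4s4 # the Pod named myapp-848b5b879b-5k4s4 in namespace default under API version v1

Every resource can therefore be referenced by a path of fixed form: /api/VERSION/namespaces/NAMESPACE/TYPE/NAME for the core group, and /apis/GROUP/VERSION/namespaces/NAMESPACE/TYPE/NAME for named groups.

d. spec: may nest many second- and third-level fields, and the fields that can be nested differ between resource types. It defines the user's desired state. After a resource is created its state may not match that definition, so the current state is moved toward the desired state. Because there are so many fields, Kubernetes ships built-in field documentation that can be viewed with explain.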

[root@k8smaster ~]# kubectl explain pod # explain: show the documentation for a resource

KIND: Pod

VERSION: v1

DESCRIPTION:

Pod is a collection of containers that can run on a host. This resource is

created by clients and scheduled onto hosts.

FIELDS:

apiVersion <string> # a string

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds

metadata <Object> # an object; nests many second-level fields

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata

spec <Object>

Specification of the desired behavior of the pod. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

status <Object>

Most recently observed status of the pod. This data may not be up to date.

Populated by the system. Read-only. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

Second-level fields can be explored the same way:

[root@k8smaster ~]# kubectl explain pods.metadata

KIND: Pod

VERSION: v1

RESOURCE: metadata <Object>

DESCRIPTION:

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata

ObjectMeta is metadata that all persisted resources must have, which

includes all objects users must create.

FIELDS:

annotations <map[string]string>

Annotations is an unstructured key value map stored with a resource that

may be set by external tools to store and retrieve arbitrary metadata. They

are not queryable and should be preserved when modifying objects. More

info: http://kubernetes.io/docs/user-guide/annotations

clusterName <string>

The name of the cluster which the object belongs to. This is used to

distinguish resources with same name and namespace in different clusters.

This field is not set anywhere right now and apiserver is going to ignore

it if set in create or update request.

...

e. status: the current state. This field is maintained by the Kubernetes cluster; users can neither define it nor delete it.

IV. Defining a YAML file
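Because status is written by the cluster, the practical way to work with it is to read it. The sketch below wraps a kubectl get call with a jsonpath output expression. pod_phase is a hypothetical helper name, and the sketch assumes kubectl is already configured for the cluster.

```shell
# Print the phase recorded in a Pod's status (for example Running or Pending).
# Usage: pod_phase POD [NAMESPACE]   (NAMESPACE defaults to "default")
pod_phase() {
  kubectl get pod "$1" -n "${2:-default}" -o jsonpath='{.status.phase}'
}
```

Calling pod_phase pod-demo would query the pod-demo Pod created later in this article. The nested status fields themselves can be browsed with kubectl explain pods.status.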

[root@k8smaster manifests]# pwd

/root/manifests

[root@k8smaster manifests]# ls

pod-demo.yaml

[root@k8smaster manifests]# cat pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels: #can also be written inline as {app: myapp, tier: frontend} instead of the two lines below
    app: myapp
    tier: frontend
spec:
  containers: #a list, defined as follows
  - name: myapp
    image: ikubernetes/myapp:v1
  - name: busybox
    image: busybox:latest
    command: #can also be written in bracket form, e.g. ["/bin/sh","-c","sleep 3600"]
    - "/bin/sh"
    - "-c"
    - "echo ${date} >> /usr/share/nginx/html/index.html;sleep 5"

[root@k8smaster manifests]# kubectl create -f pod-demo.yaml

Error from server (AlreadyExists): error when creating "pod-demo.yaml": pods "pod-demo" already exists

[root@k8smaster manifests]# kubectl get pods -o wide

NAME                          READY   STATUS             RESTARTS   AGE   IP            NODE
myapp-848b5b879b-5k4s4        1/1     Running            0          3d    10.244.2.14   k8snode2
myapp-848b5b879b-bzblz        1/1     Running            0          3d    10.244.1.21   k8snode1
myapp-848b5b879b-hzbf5        1/1     Running            0          3d    10.244.1.22   k8snode1
nginx-deploy-5b595999-d9lv5   1/1     Running            0          3d    10.244.2.4    k8snode2
pod-demo                      1/2     CrashLoopBackOff   7          17m   10.244.2.15   k8snode2

[root@k8smaster manifests]# kubectl describe pod pod-demo

Name: pod-demo

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: k8snode2/192.168.10.12

Start Time: Thu, 09 May 2019 12:26:59 +0800

Labels: app=myapp

tier=frontend

Annotations: kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"labels":{"app":"myapp","tier":"frontend"},"name":"pod-demo","namespace":"default"},"spec"...

Status: Running

IP: 10.244.2.15

Containers:

myapp:

Container ID: docker://b8e4c51d55ac57796b6f55499d119881ef522bcf43e673440bdf6bfe3cd81aa5

Image: ikubernetes/myapp:v1

Image ID: docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513

Port: <none>

Host Port: <none>

State: Running

Started: Thu, 09 May 2019 12:27:00 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-jvtl7 (ro)

busybox:

Container ID: docker://1d3d2c9ab4768c1d9a9dda875c772e9a3a5a489408ad965b09af4d28ee5d5092

Image: busybox:latest

Image ID: docker-pullable://busybox@sha256:4b6ad3a68d34da29bf7c8ccb5d355ba8b4babcad1f99798204e7abb43e54ee3d

Port: <none>

Host Port: <none>

Command:

/bin/sh

-c

echo ${date} >> /usr/share/nginx/html/index.html;sleep 5

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Thu, 09 May 2019 12:44:14 +0800

Finished: Thu, 09 May 2019 12:44:19 +0800

Ready: False

Restart Count: 8

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-jvtl7 (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-jvtl7:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-jvtl7

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulled 4d kubelet, k8snode2 Container image "ikubernetes/myapp:v1" already present on machine

Normal Created 4d kubelet, k8snode2 Created container

Normal Started 4d kubelet, k8snode2 Started container

Normal Pulling 4d (x4 over 4d) kubelet, k8snode2 pulling image "busybox:latest"

Normal Pulled 4d (x4 over 4d) kubelet, k8snode2 Successfully pulled image "busybox:latest"

Normal Created 4d (x4 over 4d) kubelet, k8snode2 Created container

Normal Started 4d (x4 over 4d) kubelet, k8snode2 Started container

Warning BackOff 4d (x63 over 4d) kubelet, k8snode2 Back-off restarting failed container

Normal Scheduled 17m default-scheduler Successfully assigned default/pod-demo to k8snode2

View the logs:

[root@k8smaster manifests]# curl 10.244.2.15

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8smaster manifests]# kubectl logs pod-demo myapp

10.244.0.0 - - [09/May/2019:04:49:18 +0000] "GET / HTTP/1.1" 200 65 "-" "curl/7.29.0" "-"

[root@k8smaster manifests]# kubectl logs pod-demo busybox

/bin/sh: can't create /usr/share/nginx/html/index.html: nonexistent directory

After changing the startup command of the busybox container, the Pod starts successfully:

[root@k8smaster manifests]# cat pod-demo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-demo
  namespace: default
  labels: #can also be written inline as {app: myapp, tier: frontend} instead of the two lines below
    app: myapp
    tier: frontend
spec:
  containers: #a list, defined as follows
  - name: myapp
    image: ikubernetes/myapp:v1
  - name: busybox
    image: busybox:latest
    command: #can also be written in bracket form, e.g. ["/bin/sh","-c","sleep 3600"]
    - "/bin/sh"
    - "-c"
    - "sleep 3600"
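The long-running sleep 3600 matters more than the failed write. In the earlier describe output the busybox container's last state was Completed with exit code 0, even though the redirect had failed: a shell command list joined with ; returns the status of its last command, so the container exited cleanly after sleep 5 and was restarted again and again (restartPolicy defaults to Always), which is what surfaced as CrashLoopBackOff. The local sketch below reproduces that exit-status behaviour without a cluster. run_like_busybox and the /nonexistent-demo-dir path are made up for illustration, and the directory is assumed not to exist.

```shell
# Run a command line shaped like the failing busybox one on the local machine.
# The redirect fails because the target directory does not exist, but the
# final sleep succeeds, so the overall exit status is still 0.
run_like_busybox() {
  sh -c 'echo "$(date)" >> /nonexistent-demo-dir/index.html; sleep 1' 2>/dev/null
  echo "exit code: $?"
}

run_like_busybox
```

With a command that keeps running, such as sleep 3600, the container stays up and the Pod can reach 2/2 Running.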

[root@k8smaster manifests]# kubectl get pods

NAME                          READY   STATUS    RESTARTS   AGE
myapp-848b5b879b-5k4s4        1/1     Running   0          3d
myapp-848b5b879b-bzblz        1/1     Running   0          3d
myapp-848b5b879b-hzbf5        1/1     Running   0          3d
nginx-deploy-5b595999-d9lv5   1/1     Running   0          3d
pod-demo                      2/2     Running   0          1m

Enter the container:

[root@k8smaster manifests]# kubectl exec -it pod-demo -c busybox /bin/sh

/ # ls

bin dev etc home proc root sys tmp usr var

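/ #

V. Three ways to manage resources with kubectl

1. Imperative commands

2. Imperative object configuration (a manifest used imperatively)

3. Declarative object configuration (a manifest used declaratively), which uses a different command. It makes the resource converge toward the declared state as far as possible, and the manifest can be re-applied at any time.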
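The three styles can be sketched side by side as follows. The function names are hypothetical wrappers chosen for this illustration; the kubectl subcommands inside them (run, create -f, apply -f) are the standard ones, and a configured kubectl is assumed. Note that what kubectl run creates has varied between kubectl versions.

```shell
# 1. Imperative command: everything is passed on the command line.
# Usage: imperative_run NAME IMAGE
imperative_run() {
  kubectl run "$1" --image="$2"
}

# 2. Imperative object configuration: the manifest describes the object,
#    and the verb (create here) says what to do with it. Running create on an
#    object that already exists fails with AlreadyExists, as seen earlier.
# Usage: imperative_create MANIFEST
imperative_create() {
  kubectl create -f "$1"
}

# 3. Declarative object configuration: apply compares the manifest with the
#    live object and creates or updates it as needed, so it can be re-run.
# Usage: declarative_apply MANIFEST
declarative_apply() {
  kubectl apply -f "$1"
}
```

For example, declarative_apply pod-demo.yaml can be repeated after every edit of the manifest, whereas imperative_create pod-demo.yaml only works while the Pod does not exist yet.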
