最近很多小伙伴都在问【云原生】使用Docker快速部署openGauss主备环境和docker部署openstack这两个问题,那么本篇文章就来给大家详细解答一下,同时本文还将给你拓展docker安装

最近很多小伙伴都在问【云原生】使用 Docker 快速部署 openGauss 主备环境和docker部署openstack这两个问题,那么本篇文章就来给大家详细解答一下,同时本文还将给你拓展docker安装opengauss数据库、openGauss1.1.0 主备部署、openGauss分布式安装_搭建_快速部署openGauss3.0.0分布式(openGauss课程)、【云原生 | 37】Docker快速部署编程语言Golang等相关知识,下面开始了哦!

本文目录一览:- 【云原生】使用 Docker 快速部署 openGauss 主备环境(docker部署openstack)

- docker安装opengauss数据库

- openGauss1.1.0 主备部署

- openGauss分布式安装_搭建_快速部署openGauss3.0.0分布式(openGauss课程)

- 【云原生 | 37】Docker快速部署编程语言Golang

【云原生】使用 Docker 快速部署 openGauss 主备环境(docker部署openstack)

目录

-

- 主备部署

- 数据同步验证

- 问题 & 解决方法

上篇使用 Docker 部署 openGauss 中,有小伙伴问

查看官方产品文档确实关于容器安装的内容比较少,但恩墨开源镜像介绍说支持主备部署。本篇记录了基于 Docker 快速部署 openGauss 主备环境及中间遇到的问题,希望对学习 openGauss 的同学有所帮助。

主备部署

1. 关于 Docker 的安装还有 openGauss 镜像的下载请参考上一篇《【云原生】使用 Docker 部署 openGauss》。拉取完 openGauss 镜像后,在 openGauss 官网代码仓下载配置文件 create_master_slave.sh。

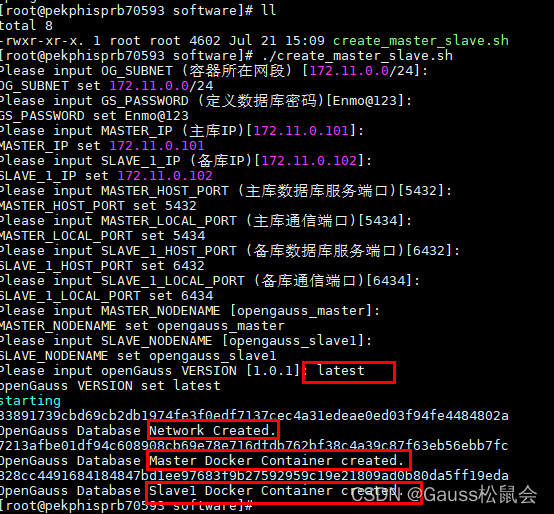

wget https://raw.githubusercontent.com/enmotech/enmotech-docker-opengauss/master/create_master_slave.sh2. 根据实际情况将修改部署参数。我们可以简单看一下这个脚本。前面是收集一些用户指定的安装信息,包括网段、数据库密码、主库和备库 IP 和端口、主库和备库的容器名和安装的版本。注意,这里网络是容器的网络,并不是宿主机的网络,也就是我们可以在一个宿主机上完成主备容器的创建。

Please input OG_SUBNET (容器所在网段) [172.11.0.0/24]:

OG_SUBNET set 172.11.0.0/24

Please input GS_PASSWORD (定义数据库密码)[Enmo@123]:

GS_PASSWORD set Enmo@123

Please input MASTER_IP (主库IP)[172.11.0.101]:

MASTER_IP set 172.11.0.101

Please input SLAVE_1_IP (备库IP)[172.11.0.102]:

SLAVE_1_IP set 172.11.0.102

Please input MASTER_HOST_PORT (主库数据库服务端口)[5432]:

MASTER_HOST_PORT set 5432

Please input MASTER_LOCAL_PORT (主库通信端口)[5434]:

MASTER_LOCAL_PORT set 5434

Please input SLAVE_1_HOST_PORT (备库数据库服务端口)[6432]:

SLAVE_1_HOST_PORT set 6432

Please input SLAVE_1_LOCAL_PORT (备库通信端口)[6434]:

SLAVE_1_LOCAL_PORT set 6434

Please input MASTER_NODENAME [opengauss_master]:

MASTER_NODENAME set opengauss_master

Please input SLAVE_NODENAME [opengauss_slave1]:

SLAVE_NODENAME set opengauss_slave1

Please input openGauss VERSION [1.0.1]: latest

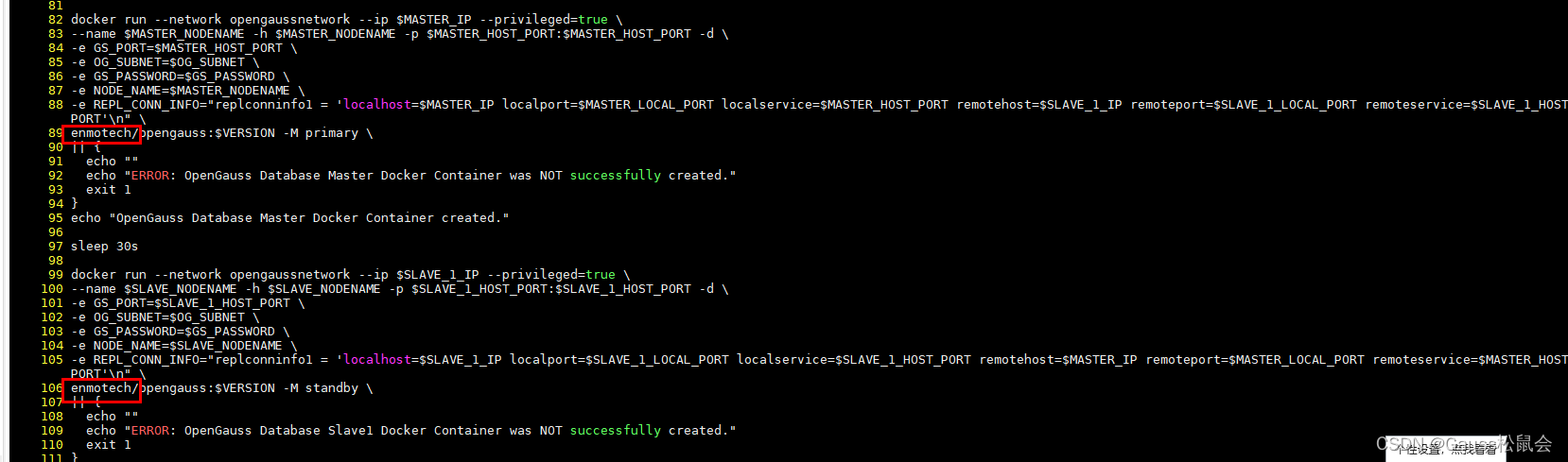

openGauss VERSION set latest脚本里都给了默认值,实际使用的时候可以直接按 Enter 使用默认值。但是要注意,最后版本那里默认值是 1.0.1,跟实际不一样。因为我这里使用的是云和恩墨的镜像,镜像名称是 enmotech/opengauss。所以需要将 89 行和 106 行的 opengauss 前添加 “enmotech/”。当然不想修改脚本的话,也可以按照文章最后问题 & 解决中的方法修改镜像名字。

[root@pekphisprb70593 software]# chmod +x create_master_slave.sh

-rwxr-xr-x. 1 root root 4602 Jul 21 15:09 create_master_slave.sh

[root@pekphisprb70593 software]# ./create_master_slave.shversion 根据自己 pull 的镜像版本,输入 latest 或者 3.0.0。

使用 sh -x 显示详情可以看到具体执行的内容。给好奇宝宝了解下 。整个安装脚本就是根据收集的信息完成主要的三个步骤,第一个是添加 docker 网络,对应的是 “docker network create --subnet=$OG_SUBNET opengaussnetwork” 部分。第二个是创建 master 容器。第三个就是创建 slave 容器。

+ docker network create --subnet=172.11.0.0/24 opengaussnetwork

1992a584d62f408119e85537c1ad461b8720027e7361477b90f4c101feb6bcf6

+ echo ''OpenGauss Database Network Created.''

OpenGauss Database Network Created.

+ docker run --network opengaussnetwork --ip 172.11.0.101 --privileged=true --name opengauss_master -h opengauss_master -p 5432:5432 -v /enmotech/opengauss:/var/lib/opengauss -d -e GS_PORT=5432 -e OG_SUBNET=172.11.0.0/24 -e GS_PASSWORD=Enmo@123 -e NODE_NAME=opengauss_master -e ''REPL_CONN_INFO=replconninfo1 = ''\''''localhost=172.11.0.101 localport=5434 localservice=5432 remotehost=172.11.0.102 remoteport=6434 remoteservice=6432''\''''\n'' enmotech/opengauss:latest -M primary

d2fddba3abc39ea6ad2c7c789d3245d41e0ee840e5e30a68a3f9d3a9ae7f0b64

+ echo ''OpenGauss Database Master Docker Container created.''

OpenGauss Database Master Docker Container created.

+ sleep 30s

+ docker run --network opengaussnetwork --ip 172.11.0.102 --privileged=true --name opengauss_slave1 -h opengauss_slave1 -p 6432:6432 -v /enmotech/opengauss:/var/lib/opengauss -d -e GS_PORT=6432 -e OG_SUBNET=172.11.0.0/24 -e GS_PASSWORD=Enmo@123 -e NODE_NAME=opengauss_slave1 -e ''REPL_CONN_INFO=replconninfo1 = ''\''''localhost=172.11.0.102 localport=6434 localservice=6432 remotehost=172.11.0.101 remoteport=5434 remoteservice=5432''\''''\n'' enmotech/opengauss:latest -M standby

070095ac8b005a06b7aa1dd1b3d9c8652f69aa70394ba496b906aa3b66f2a68b

+ echo ''OpenGauss Database Slave1 Docker Container created.''

OpenGauss Database Slave1 Docker Container created.3. 执行成功后可以使用 docker ps 查询一下容器进程。在该宿主机上启用了两个容器实例,分别为 opengauss 主备实例。PORTS 为 5432 的也就是第二个为主,第一个 6432 的为备。当然我是根据最后的 NAME 区分的。

进入容器查询下数据库状态。

[root@pekphisprb70593 software]# docker exec -it 7213afbe01df /bin/bash

root@opengauss_master:/# su - omm

omm@opengauss_master:~$ gs_ctl query -D /var/lib/opengauss/data/

[2022-07-25 02:17:55.086][581][][gs_ctl]: gs_ctl query ,datadir is /var/lib/opengauss/data

状态 Normal。

数据同步验证

在主库建个用户和表。

[root@pekphisprb70593 software]# docker exec -it opengauss_master /bin/bash

root@opengauss_master:/# su - omm

omm@opengauss_master:~$ gsql -r

gsql ((openGauss 3.0.0 build 02c14696) compiled at 2022-04-01 18:12:34 commit 0 last mr ) Non-SSL connection (SSL connection is recommended when requiring high-security) Type "help" for help. omm=# create user wxx with password "hello@1234"; CREATE ROLE omm=# create table test1(id int,name varchar(20));

CREATE TABLE

omm=# INSERT INTO test1 VALUES (1, ''oligei'');

INSERT 0 1

omm=# \q 去从库验证数据同步:

[root@pekphisprb70593 software]# docker exec -it opengauss_slave1 /bin/bash

root@opengauss_slave1:/# su - omm

omm@opengauss_slave1:~$ gsql -r -p6432

gsql ((openGauss 3.0.0 build 02c14696) compiled at 2022-04-01 18:12:34 commit 0 last mr )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

omm=# \d

List of relations

Schema | Name | Type | Owner | Storage

--------+-------+-------+-------+----------------------------------

public | test1 | table | omm | {

orientation=row,compression=no}

(1 row)

omm=# select * from test1;

id | name

----+--------

1 | oligei

(1 row)

omm=# select * from pg_user; 执行结果如下图。

从上面也可以看出,在主容器上建的表和数据还有用户,均可以在备容器查询到,同步成功。

不过,基于 Docker 的主备部署这个特性官方并没有太多相关介绍,后续使用过程中有什么问题也可以在社区进行提问和自行改进。

问题 & 解决方法

可能遇到的问题

- 执行失败后重新执行脚本报错:

Error response from daemon: network with name opengaussnetwork already exists

ERROR: OpenGauss Database Network was NOT successfully created.

HINT: opengaussnetwork Maybe Already Exsist Please Execute ‘docker network rm opengaussnetwork’

问题原因就是这个 create_master_slave.sh 脚本执行失败没有回滚,需要手动回滚已执行成功的操作。所以,这里需要把已经创建的 docker 网络删掉。

[root@pekphisprb70593 software]# docker network rm opengaussnetwork

opengaussnetwork2. 不想修改原有的脚本,还有其他版本能将镜像适配吗?

可以通过修改宿主机上镜像 tag 的方式修改 openGaus 的镜像标签。docker tag 就相当于镜像的 “另存为” 功能。

[root@pekphisprb70594 ~]# docker tag --help

Usage: docker tag SOURCE_IMAGE[:TAG] TARGET_IMAGE[:TAG]

Create a tag TARGET_IMAGE that refers to SOURCE_IMAGE

[root@pekphisprb70594 ~]# docker tag enmotech/opengauss:latest opengauss:latest

[root@pekphisprb70594 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

enmotech/opengauss latest e6b31cb4b7b9 3 months ago 245MB

opengauss latest e6b31cb4b7b9 3 months ago 245MB这样宿主机上的 openGauss 镜像就换了个名字,原 create_master_slave.sh 无需修改。在执行脚本的时候 version 还是输入 latest(或者自己 pull 的其他版本比如 3.0.0)。

如果您觉得博主的文章还不错或者有帮助的话,请关注一下博主,如果三连收藏支持就更好啦!谢谢各位大佬给予的鼓励!

docker安装opengauss数据库

opengauss官网:https://opengauss.org/

opengauss镜像:https://hub.docker.com/r/enmotech/opengauss

一:镜像拉取并运行

docker run --name opengauss --privileged=true -d -e GS_USERNAME=gaussdb -e GS_PASSWORD=openGauss@123 -p 5432:5432 enmotech/opengauss:3.0.0二:挂载本地目录

docker cp opengauss:/var/lib/opengauss/data C:\opengauss【本地挂载目录】 #将容器指定目录拷贝到本机指定目录

docker stop opengauss #停止opengauss运行容器

docker rm opengauss #删除opengauss容器

docker run --name opengauss --privileged=true -d -e GS_USERNAME=gaussdb -e GS_PASSWORD=openGauss@123 -v C:\opengauss\data:/var/lib/opengauss/data -p 5432:5432 enmotech/opengauss:3.0.0 #运行容器并挂载目录三:设置远程连接opengauss

进入opengauss容器中配置opengauss

docker exec -it opengauss bash1:修改pg_hba.conf配置信息

vim /var/lib/opengauss/data/pg_hba.conf修改内容如下

host all all 127.0.0.1/32 md5#修改为md5

host all all 0.0.0.0/0 md5#新增配置

# IPv6 local connections:

host all all ::1/128 md5#修改md52:修改postgresql.conf配置信息

vim /var/lib/opengauss/data/postgresql.conf修改内容如下

listen_addresses = ''*''

local_bind_address = ''0.0.0.0''

password_encryption_type = 03:重新加载配置信息

su - omm #切换omm 用户

gs_ctl stop -D /var/lib/opengauss/data -Z /var/lib/opengauss/data #停止运行重新加载配置4:重新启动opengauss

docker start opengauss

docker exec -it opengauss bash

su - omm

gs_ctl start -D /var/lib/opengauss/data -Z /var/lib/opengauss/data5:navicat连接成功

6:创建用户

gsql -d postgres #进入数据库

CREATE USER test PASSWORD ''test@123456''; #创建用户并设置密码

ALTER ROLE test SYSADMIN; #设置权限

openGauss1.1.0 主备部署

openGauss1.1.0 主备部署 #

openGauss 1.1.0 版本介绍:

openGauss 1.1.0 定位为 Update 版本,与原来的 1.0.1 版本特性功能保持兼容。主要提供如下功能:

-

继承功能:

-

SQL 标准语法、UPSERT、数据类型、XML 类型、表、临时表、全局临时表、外部表、视图、物化视图、索引、外键、Gin 索引、序列、函数、触发器、ROWNUM、聚合函数 median 等基础功能;

-

存储过程、存储过程内 commit/rollback、参数的存储过程/函数调用省略()、存储过程调试、自治事务;

-

认证、权限管理、网络通信安全和数据库审计等安全特性;

-

主备双机、逻辑复制、极致 RTO、备机扩容高可用功能;

-

范围分区、全局分区索引、基于范围分区的自动扩展分区;

-

全量物理备份、逻辑备份、增量备份和恢复、恢复到指定时间点(PITR);

-

MOT 内存表、NUMA 化优化高性能能力,并行查询;

-

容器化部署、IPv6 协议、postgis 插件等;

-

AI 能力:参数自调优、慢 SQL 发现。

-

-

新增特性:

LIST 分区和 HASH 分区、DDL 权限授权和回收、级联备机、字符数据类型长度兼容 PG、自动索引推荐、慢 SQL 发现更新、秒级参数推荐、AI 时序预测与异常发现、deepSQL、plpython、备机备份、自治事务重构,并行查询重构、数据类型支持 sysdate、支持增删备机节点、支持多版本 python、在线添加索引、升级工具、安装与 om 工具解耦。

-

处于 beta 测试阶段的特性: MOT 内存表、并行查询和容器化等特性。

-

该版本的维护周期为半年。

当下载并打开 openGauss 1.1.0 的 CHM 官方文档时发现,在安装概述中关于备机数量的描述存在些许错误,这里需要纠正一下:openGauss 1.1.0 版本目前可以支持 8 台备机+4 台级联备机,至于是否支持 8 台级联备机目前没有看到确切的测试信息。

环境准备 #

1.1 IP 地址规划

| IP地址规划 |

||||

|---|---|---|---|---|

| 主机名 |

prod.opengauss.com |

stb1.opengauss.com |

stb2.opengauss.com |

casstb.opengauss.com |

| 角色 |

Primary |

Standby1 |

Standby2 |

Cascade Standby |

| IP地址 |

192.168.0.11 |

192.168.0.12 |

192.168.0.13 |

192.168.0.14 |

1.2 测试环境硬件配置

| 组件 |

配置 |

|---|---|

| CPU |

4c |

| 内存 |

8GB |

| 硬盘 |

40GB |

| 网卡速率 |

1000Mb |

## 检查CPU核数

cat /proc/cpuinfo |grep processor

## 检查内存大小

free -m

## 检查系统版本

cat /etc/redhat-release

1.3 软件依赖包需求

| 条目 |

要求 |

|---|---|

| Linux操作系统 |

openEuler 20.3LTS/CentOS 7.6 |

| Linux文件系统 |

剩余inode个数>15亿 |

| 工具 |

java-1.8.0 bzip2 |

| Python |

openEuler: 支持Python 3.7.x CentOS 7.6:支持Python 3.6.x |

| 其他依赖软件包 |

libaio-devel >= 0.3.109-13 flex >= 2.5.31 bison >= 2.7.4 ncurses-devel >= 5.9-13.20130511 glibc-devel >= 2.17-111 patch >= 2.7.1-10 lsb_release >= 4.1 readline-devel >= 7.0-13 |

1.4 配置 YUM 源并安装依赖软件包

## 配置华为YUM源

mkdir /etc/yum.repos.d/bak

mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/

wget -O /etc/yum.repos.d/CentOS-Base.repo https://repo.huaweicloud.com/repository/conf/CentOS-7-reg.repo

## curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.myhuaweicloud.com/repo/CentOS-Base-7.repo 华为云内网可以使用该YUM源

yum clean all

## 使用华为YUM源安装依赖的软件包

yum install -y lksctp*

yum install -y java-1.8.0-openjdk* psmisc bzip2 python3

yum install -y libaio-devel flex bison ncurses-devel glibc-devel patch redhat-lsb-core

## 检查软件包的安装情况

rpm -qa java-1.8.0-openjdk psmisc bzip2 libaio-devel flex bison ncurses-devel glibc-devel patch redhat-lsb-core lksctp-tools* python3

## 检查SCTP驱动是否支持

checksctp

操作系统配置(所有节点操作) #

2.1 编辑 Hosts 文件

## 检查主机名和IP地址

hostname && ifconfig |grep broadcast|awk ''{print $2}''

## 添加Hosts解析

cat >> /etc/hosts <<EOF

192.168.0.11 prod.opengauss.com #Gauss OM IP Hosts Mapping

192.168.0.12 stb1.opengauss.com #Gauss OM IP Hosts Mapping

192.168.0.13 stb2.opengauss.com #Gauss OM IP Hosts Mapping

192.168.0.14 casstb.opengauss.com #Gauss OM IP Hosts Mapping

EOF

cat /etc/hosts

2.2 关闭防火墙

systemctl status firewalld

systemctl disable firewalld.service

systemctl stop firewalld.service

2.3 关闭 SELinux(重启操作系统生效)

sed -i ''/SELINUX=/d'' /etc/selinux/config

echo "SELINUX=disabled" >> /etc/selinux/config

cat /etc/selinux/config|grep -v ^#|grep -v ''^$''

2.4 修改字符集参数

echo "export LANG=en_US.UTF-8" >> ~/.bash_profile

source ~/.bash_profile

env|grep LANG

2.5 设置时区并统一主机时间(建议生产环境开启 NTP 服务同步时间)

rm -fr /etc/localtime

ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

ll /etc/localtime

2.6 关闭 SWAP(可选)

关闭 swap 操作在 1.0.0 文档中是必选项,目的是为了尽量减少 swap 分区对 openGauss 数据库的性能影响,让数据库自己判断数据的冷热程度,对内存数据进行换入换出操作。 但由于后期大家做实验时的物理机内存都比较小,很容易出现因为内存不足而导致数据库 crash 的现象,在交流群里进行一番争议和讨论后,在 1.0.1 版本的文档中已经将关闭 swap 的操作变为可选项,建议在大内存(128GB 以上)的操作系统中关闭 swap 选项,小内存的操作系统建议打开 swap 选项,以保证数据库的稳定性。

## 修改分区表文件,删除SWAP挂载信息

cp /etc/fstab /etc/fstab.bak

sed -i ''/swap/s/^/#/'' /etc/fstab

cat /etc/fstab|grep -v ^#|grep -v ''^$''

## 关闭swap

swapoff -a

2.7 配置 SSH 服务(允许 root 登录,关闭 Banner)

# 允许root登录,关闭登录Banner,配置文件需要重启生效

sed -i ''/Banner/s/^/#/'' /etc/ssh/sshd_config

sed -i ''/PermitRootLogin/s/^/#/'' /etc/ssh/sshd_config

echo -e "\n" >> /etc/ssh/sshd_config

echo "Banner none " >> /etc/ssh/sshd_config

echo "PermitRootLogin yes" >> /etc/ssh/sshd_config

cat /etc/ssh/sshd_config |grep -v ^#|grep -E ''PermitRootLogin|Banner''

2.8 关闭 RemoveIPC(CentOS 7.6 已经默认关闭,该步骤可以跳过)

## 关闭RemoveIPC,CentOS 7.6已经默认关闭,该步骤可以跳过

## sed -i ''/^RemoveIPC/d'' /etc/systemd/logind.conf

## sed -i ''/^RemoveIPC/d'' /usr/lib/systemd/system/systemd-logind.service

## echo "RemoveIPC=no" >> /etc/systemd/logind.conf

## echo "RemoveIPC=no" >> /usr/lib/systemd/system/systemd-logind.service

## systemctl daemon-reload

## systemctl restart systemd-logind

## loginctl show-session | grep RemoveIPC

## systemctl show systemd-logind | grep RemoveIPC

2.9 关闭透明大页 transparent_hugepage(官方文档中未提及)

Linux 的透明大页功能会降低操作系统的 IO 性能,而且需要一定的 CPU 资源对大页碎片进行整理,在实际生产中也遇到很多案例是因为透明大页的问题导致数据库 Hang 或彻底宕机。所以,有经验的 DBA 在部署数据库时一般都会选择关闭操作系统的透明大页(THP)。

在 openGauss 1.1.0 版本的安装部署中,gs_preinstall 脚本会关闭透明大页,但个人认为做的不够彻底,如下:

[root@prod ~]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

[root@prod ~]# cat /sys/kernel/mm/transparent_hugepage/defrag

[always] madvise never

此处,按照以前常用的关闭透明页操作,手动在 rc.local 中添加了关闭 THP 的操作:

[root@prod ~]# vi /etc/rc.d/rc.local

----------------------------------------------------------

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

if test -f /sys/kernel/mm/transparent_hugepage/defrag; then

echo never > /sys/kernel/mm/transparent_hugepage/defrag

fi

----------------------------------------------------------

[root@prod ~]# chmod +x /etc/rc.d/rc.local

重启系统后,验证 THP 的关闭状态:

[root@prod ~]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

[root@prod ~]# cat /sys/kernel/mm/transparent_hugepage/defrag

always madvise [never]

2.10 重启操作系统

reboot

Tips:

- 用户、用户组、安装目录建议不要提前创建和指定,最好通过 gs_preinstall 脚本自动创建;

- 操作系统资源限制操作不需要手动提前配置,使用 gs_preinstall 脚本自动配置;

- 操作系统内核参数配置操作不需要提前配置,使用 gs_preinstall 脚本自动配置,但有 4 条网络方面的内核参数依然需要手动配置(详见下文记录)。

预安装 openGauss 软件(在 Primary 主机执行) #

3.1 配置 cluster_config.xml 文件

mkdir -p /soft/openGauss

chmod 775 -R /soft

cat >> /soft/openGauss/cluster_config.xml << EOF

<?xml version="1.0" encoding="UTF-8"?>

<ROOT>

<!-- openGauss整体信息 -->

<CLUSTER>

<!-- 数据库名称 -->

<PARAM name="clusterName" value="gsCluster" />

<!-- 数据库节点名称(hostname) -->

<PARAM name="nodeNames" value="prod.opengauss.com,stb1.opengauss.com,stb2.opengauss.com,casstb.opengauss.com" />

<!-- 数据库安装目录 与其他路径相互独立-->

<PARAM name="gaussdbAppPath" value="/gauss/app" />

<!-- 日志目录 -->

<PARAM name="gaussdbLogPath" value="/gauss/log" />

<!-- 临时文件目录 -->

<PARAM name="tmpMppdbPath" value="/gauss/tmp" />

<!--数据库工具目录 -->

<PARAM name="gaussdbToolPath" value="/gauss/om" />

<!--数据库core文件目录-->

<PARAM name="corePath" value="/gauss/corefile" />

<!-- 节点后端通讯IP,与nodeNames对应 -->

<PARAM name="backIp1s" value="192.168.0.11,192.168.0.12,192.168.0.13,192.168.0.14"/>

</CLUSTER>

<!-- 每台服务器上的节点部署信息 -->

<DEVICELIST>

<!-- node1上的节点部署信息 -->

<DEVICE sn="prod.opengauss.com">

<!-- node1的hostname -->

<PARAM name="name" value="prod.opengauss.com"/>

<!-- 节点所在的AZ及AZ优先级 -->

<PARAM name="azName" value="AZ1"/>

<PARAM name="azPriority" value="1"/>

<!-- 若服务器仅有一个网卡,将backIP1和sshIP1配置成同一个IP -->

<PARAM name="backIp1" value="192.168.0.11"/>

<PARAM name="sshIp1" value="192.168.0.11"/>

<!--dbnode-->

<!--当前主机上需要部署的数据库节点个数-->

<PARAM name="dataNum" value="1"/>

<!--DBnode端口号-->

<PARAM name="dataPortBase" value="26000"/>

<!--DBnode侦听IP-->

<PARAM name="dataListenIp1" value="192.168.0.11,192.168.0.12,192.168.0.13,192.168.0.14"/>

<!--DBnode主节点上数据目录,及备机数据目录-->

<PARAM name="dataNode1" value="/gauss/data/db1,stb1.opengauss.com,/gauss/data/db1,stb2.opengauss.com,/gauss/data/db1,casstb.opengauss.com,/gauss/data/db1"/>

<!--DBnode节点上设定同步模式的节点数-->

<PARAM name="dataNode1_syncNum" value="0"/>

</DEVICE>

<DEVICE sn="stb1.opengauss.com">

<!-- node2的hostname -->

<PARAM name="name" value="stb1.opengauss.com"/>

<!-- 节点所在的AZ及AZ优先级 -->

<PARAM name="azName" value="AZ1"/>

<PARAM name="azPriority" value="1"/>

<!-- 若服务器仅有一个网卡,将backIP1和sshIP1配置成同一个IP -->

<PARAM name="backIp1" value="192.168.0.12"/>

<PARAM name="sshIp1" value="192.168.0.12"/>

</DEVICE>

<DEVICE sn="stb2.opengauss.com">

<!-- node3的hostname -->

<PARAM name="name" value="stb2.opengauss.com"/>

<!-- 节点所在的AZ及AZ优先级 -->

<PARAM name="azName" value="AZ1"/>

<PARAM name="azPriority" value="1"/>

<!-- 若服务器仅有一个网卡,将backIP1和sshIP1配置成同一个IP -->

<PARAM name="backIp1" value="192.168.0.13"/>

<PARAM name="sshIp1" value="192.168.0.13"/>

</DEVICE>

<DEVICE sn="casstb.opengauss.com">

<!-- node4的hostname -->

<PARAM name="name" value="casstb.opengauss.com"/>

<!-- 节点所在的AZ及AZ优先级 -->

<PARAM name="azName" value="AZ1"/>

<PARAM name="azPriority" value="1"/>

<!-- 若服务器仅有一个网卡,将backIP1和sshIP1配置成同一个IP -->

<PARAM name="backIp1" value="192.168.0.14"/>

<PARAM name="sshIp1" value="192.168.0.14"/>

<!-- node1是否为级联备, on表示该实例为级联备,另外级联备机在相同的AZ里需要配有备机 -->

<PARAM name="cascadeRole" value="on"/>

</DEVICE>

</DEVICELIST>

</ROOT>

EOF

cat /soft/openGauss/cluster_config.xml

Tips:

- 安装目录和数据目录必须为空或者不存在,否则可能导致安装失败;

- 部分的配置取消了 openGauss 的类型参数配置:name=“clusterType” value=“single-inst”;

- 部分在官方文档的示例中,将节点唯一识别号 sn 由原来的纯数字示例改为主机名示例,即 sn=hostname;

- 部分增加了级联参数 cascadeRole;

- 部分的 dataListenIp1 参数,在 openGauss 1.0.0 时会报错,官方文档示例中也已经删除该参数,但在 1.1.0 版本中,该参数可以正确无误地运行。

3.2 上传并解压安装包

openGauss 软件下载地址 https://opengauss.org/zh/download.html

使用远程终端 MobaXterm 将软件包上传至 Primary 服务器:

解压数据库安装包:

cd /soft/openGauss

tar -zxvf openGauss-1.1.0-CentOS-64bit-all.tar.gz

3.3 交互式安装

由于 openGauss 1.1.0 的软件包将 om 工具和 openGauss-server 分离,此处的安装步骤稍微有一点差别,多一步解压 om 工具压缩包的操作。

gs_preinstall 脚本会自动创建用户、用户组和安装目录,并授予目录正确的权限。

解压 om 软件包

cd /soft/openGauss/

tar -xvf openGauss-1.1.0-CentOS-64bit-om.tar.gz

加载环境变量

export LD_LIBRARY_PATH=/soft/openGauss/script/gspylib/clib:$LD_LIBRARY_PATH

执行交互式安装脚本

cd /soft/openGauss/script

[root@prod script]# ./gs_preinstall -U omm -G dbgrp -X /soft/openGauss/cluster_config.xml

Parsing the configuration file.

Successfully parsed the configuration file.

Installing the tools on the local node.

Successfully installed the tools on the local node.

Are you sure you want to create trust for root (yes/no)? yes

Please enter password for root.

Password:

Creating SSH trust for the root permission user.

Checking network information.

All nodes in the network are Normal.

Successfully checked network information.

Creating SSH trust.

Creating the local key file.

Successfully created the local key files.

Appending local ID to authorized_keys.

Successfully appended local ID to authorized_keys.

Updating the known_hosts file.

Successfully updated the known_hosts file.

Appending authorized_key on the remote node.

Successfully appended authorized_key on all remote node.

Checking common authentication file content.

Successfully checked common authentication content.

Distributing SSH trust file to all node.

Successfully distributed SSH trust file to all node.

Verifying SSH trust on all hosts.

Successfully verified SSH trust on all hosts.

Successfully created SSH trust.

Successfully created SSH trust for the root permission user.

Setting pssh path

Successfully set core path.

Distributing package.

Begin to distribute package to tool path.

Successfully distribute package to tool path.

Begin to distribute package to package path.

Successfully distribute package to package path.

Successfully distributed package.

Are you sure you want to create the user[omm] and create trust for it (yes/no)? yes

Please enter password for cluster user.

Password:

Please enter password for cluster user again.

Password:

Successfully created [omm] user on all nodes.

Preparing SSH service.

Successfully prepared SSH service.

Installing the tools in the cluster.

Successfully installed the tools in the cluster.

Checking hostname mapping.

Successfully checked hostname mapping.

Creating SSH trust for [omm] user.

Checking network information.

All nodes in the network are Normal.

Successfully checked network information.

Creating SSH trust.

Creating the local key file.

Successfully created the local key files.

Appending local ID to authorized_keys.

Successfully appended local ID to authorized_keys.

Updating the known_hosts file.

Successfully updated the known_hosts file.

Appending authorized_key on the remote node.

Successfully appended authorized_key on all remote node.

Checking common authentication file content.

Successfully checked common authentication content.

Distributing SSH trust file to all node.

Successfully distributed SSH trust file to all node.

Verifying SSH trust on all hosts.

Successfully verified SSH trust on all hosts.

Successfully created SSH trust.

Successfully created SSH trust for [omm] user.

Checking OS software.

Successfully check os software.

Checking OS version.

Successfully checked OS version.

Creating cluster''s path.

Successfully created cluster''s path.

Setting SCTP service.

Successfully set SCTP service.

Set and check OS parameter.

Setting OS parameters.

Successfully set OS parameters.

Warning: Installation environment contains some warning messages.

Please get more details by "/soft/openGauss/script/gs_checkos -i A -h prod.opengauss.com,stb1.opengauss.com,stb2.opengauss.com,casstb.opengauss.com --detail".

Set and check OS parameter completed.

Preparing CRON service.

Successfully prepared CRON service.

Setting user environmental variables.

Successfully set user environmental variables.

Setting the dynamic link library.

Successfully set the dynamic link library.

Setting Core file

Successfully set core path.

Setting pssh path

Successfully set pssh path.

Set ARM Optimization.

No need to set ARM Optimization.

Fixing server package owner.

Setting finish flag.

Successfully set finish flag.

Preinstallation succeeded.

Tips:

- 在使用 gs_preinstall 脚本后,limits 资源虽然已经设置,但仍然存在无法动态生效的参数,需要重启系统使之生效;

- 若是共用的环境,需要加入–sep-env-file=ENVFILE 参数分离环境变量,避免与其他用户相互影响。

3.4 添加 4 条网络内核参数

由于 pre_install 脚本执行完毕后,再次检查会发现以下网络参数不满足需求,这里提前设置

[root@prod ~]# cat >>/etc/sysctl.conf <<EOF

net.ipv4.tcp_retries1 = 5

net.ipv4.tcp_syn_retries = 5

net.sctp.path_max_retrans = 10

net.sctp.max_init_retransmits = 10

EOF

3.5 重启所有节点,使内核参数和资源限制参数生效

reboot

安装验证 #

以 root 用户执行脚本,检查操作系统参数:

[root@prod ~]# /soft/openGauss/script/gs_checkos -i A -h prod.opengauss.com,stb1.opengauss.com,stb2.opengauss.com,casstb.opengauss.com --detail

Checking items:

A1. [ OS version status ] : Normal

[casstb.opengauss.com]

centos_7.6_64bit

[stb2.opengauss.com]

centos_7.6_64bit

[stb1.opengauss.com]

centos_7.6_64bit

[prod.opengauss.com]

centos_7.6_64bit

A2. [ Kernel version status ] : Normal

The names about all kernel versions are same. The value is "3.10.0-1160.6.1.el7.x86_64".

A3. [ Unicode status ] : Normal

The values of all unicode are same. The value is "LANG=en_US.UTF-8".

A4. [ Time zone status ] : Normal

The informations about all timezones are same. The value is "+0800".

A5. [ Swap memory status ] : Normal

The value about swap memory is correct.

A6. [ System control parameters status ] : Normal

All values about system control parameters are correct.

A7. [ File system configuration status ] : Normal

Both soft nofile and hard nofile are correct.

A8. [ Disk configuration status ] : Normal

The value about XFS mount parameters is correct.

A9. [ Pre-read block size status ] : Normal

The value about Logical block size is correct.

A10.[ IO scheduler status ] : Normal

The value of IO scheduler is correct.

A11.[ Network card configuration status ] : Warning

[stb2.opengauss.com]

BondMode Null

Warning reason: Failed to obtain the network card speed value. Commands for obtain the network card speed: /sbin/ethtool eth0 | grep ''Speed:''. Error:

[stb1.opengauss.com]

BondMode Null

Warning reason: Failed to obtain the network card speed value. Commands for obtain the network card speed: /sbin/ethtool eth0 | grep ''Speed:''. Error:

[casstb.opengauss.com]

BondMode Null

Warning reason: Failed to obtain the network card speed value. Commands for obtain the network card speed: /sbin/ethtool eth0 | grep ''Speed:''. Error:

[prod.opengauss.com]

BondMode Null

Warning reason: Failed to obtain the network card speed value. Commands for obtain the network card speed: /sbin/ethtool eth0 | grep ''Speed:''. Error:

A12.[ Time consistency status ] : Warning

[casstb.opengauss.com]

The NTPD not detected on machine and local time is "2021-01-06 16:29:20".

[stb1.opengauss.com]

The NTPD not detected on machine and local time is "2021-01-06 16:29:20".

[prod.opengauss.com]

The NTPD not detected on machine and local time is "2021-01-06 16:29:20".

[stb2.opengauss.com]

The NTPD not detected on machine and local time is "2021-01-06 16:29:20".

A13.[ Firewall service status ] : Normal

The firewall service is stopped.

A14.[ THP service status ] : Normal

The THP service is stopped.

Total numbers:14. Abnormal numbers:0. Warning numbers:2.

Warning 说明:

- 网卡告警:由于测试环境使用的华为云实例对网络做了优化,ethtool 工具无法获取网卡速率信息,该告警可以忽略。

- 时间同步告警:测试环境没有配置 NTP 服务器导致该告警,此处的告警可以忽略,但建议在生产环境下最好配置 NTP 服务器。

部署安装 openGauss 软件 #

执行 openGauss 安装脚本:

chmod -R 775 /soft/

chown omm:dbgrp /soft -R

su - omm

gs_install -X /soft/openGauss/cluster_config.xml \

--gsinit-parameter="--encoding=UTF8" \ ## 建议生产环境使用UTF-8编码,否则默认是SQL_ASCII编码

--dn-guc="max_connections=1000" \ ## 以下5条内存参数仅仅是为了使得openGauss能够在4GB内存的机器运行,生产环境请根据实际情况做优化

--dn-guc="max_process_memory=3GB" \

--dn-guc="shared_buffers=128MB" \

--dn-guc="bulk_write_ring_size=128MB" \

--dn-guc="cstore_buffers=16MB"

输出内容:

Parsing the configuration file.

Check preinstall on every node.

Successfully checked preinstall on every node.

Creating the backup directory.

Successfully created the backup directory.

begin deploy..

Installing the cluster.

begin prepare Install Cluster..

Checking the installation environment on all nodes.

begin install Cluster..

Installing applications on all nodes.

Successfully installed APP.

begin init Instance..

encrypt cipher and rand files for database.

Please enter password for database: ## 系统初始用户omm的密码复杂度要求:大写+小写+数字+特殊字符(任选3类,至少8位)

Please repeat for database:

begin to create CA cert files

The sslcert will be generated in /gauss/app/share/sslcert/om

Cluster installation is completed.

Configuring.

Deleting instances from all nodes.

Successfully deleted instances from all nodes.

Checking node configuration on all nodes.

Initializing instances on all nodes.

Updating instance configuration on all nodes.

Check consistence of memCheck and coresCheck on database nodes.

Successful check consistence of memCheck and coresCheck on all nodes.

Configuring pg_hba on all nodes.

Configuration is completed.

Successfully started cluster.

Successfully installed application.

end deploy..

Tips:

- 安装过程中会生成 ssl 证书,证书存放路径为{gaussdbAppPath}/share/sslcert/om,其中{gaussdbAppPath}为集群 openGauss 配置文件中指定的程序安装目录;

- 日志文件路径下会生成两个日志文件:“gs_install-YYYY-MMDD_HHMMSS.log”和“gs_local-YYYY-MM-DD_HHMMSS.log”。

检查数据库基本信息 #

-- 使用omm用户连接数据库

gsql -d postgres -p 26000 -r

-- 修改初始用户密码

postgres=# alter role omm identified by ''gauss@123'' replace ''gauss@2020''; -- 修改初始用户omm密码为gauss@123

-- 查询数据库信息

postgres=# select version(); -- 检查数据库版本

version

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------

PostgreSQL 9.2.4 (openGauss 1.1.0 build 392c0438) compiled at 2020-12-31 20:07:42 commit 0 last mr on x86_64-unknown-linux-gnu, compiled by g++ (GCC) 7.3.0, 64-bit

(1 row)

postgres=# \l -- 检查数据库清单

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+-------+----------+---------+-------+-------------------

postgres | omm | UTF8 | C | C |

template0 | omm | UTF8 | C | C | =c/omm +

| | | | | omm=CTc/omm

template1 | omm | UTF8 | C | C | =c/omm +

| | | | | omm=CTc/omm

(3 rows)

postgres=# \q -- 退出gsql客户端

检查主备状态 #

7.1 主备状态检查

[omm@prod ~]$ gs_om -t status --detail

[ Cluster State ]

cluster_state : Normal

redistributing : No

current_az : AZ_ALL

[ Datanode State ]

node node_ip instance state

----------------------------------------------------------------------------------

1 prod.opengauss.com 192.168.0.11 6001 /gauss/data/db1 P Primary Normal

2 stb1.opengauss.com 192.168.0.12 6002 /gauss/data/db1 S Standby Normal

3 stb2.opengauss.com 192.168.0.13 6003 /gauss/data/db1 S Standby Normal

4 casstb.opengauss.com 192.168.0.14 6004 /gauss/data/db1 C Cascade Normal

7.2 复制连接配置检查

postgres=# show replconninfo1;

replconninfo1

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------

localhost=192.168.0.11 localport=26001 localheartbeatport=26005 localservice=26004 remotehost=192.168.0.12 remoteport=26001 remoteheartbeatport=26005 remoteservice=26004

postgres=# show replconninfo2;

replconninfo2

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------

localhost=192.168.0.11 localport=26001 localheartbeatport=26005 localservice=26004 remotehost=192.168.0.13 remoteport=26001 remoteheartbeatport=26005 remoteservice=26004

postgres=# show replconninfo3;

replconninfo3

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------

localhost=192.168.0.11 localport=26001 localheartbeatport=26005 localservice=26004 remotehost=192.168.0.14 remoteport=26001 remoteheartbeatport=26005 remoteservice=26004

postgres=# show max_wal_senders;

max_wal_senders

-----------------

16

7.3 检查日志传送线程状态

-- 在Primary机器检查日志发送线程状态

postgres=# \pset expanded

Expanded display is on.

postgres=# select * from pg_stat_get_wal_senders();

-[ RECORD 1 ]--------------+----------------------------------------

pid | 140097998878464

sender_pid | 21609

local_role | Primary

peer_role | Standby

peer_state | Normal

state | Streaming

catchup_start | 2021-01-06 16:40:33.679871+08

catchup_end | 2021-01-06 16:40:33.707802+08

sender_sent_location | 0/7002380

sender_write_location | 0/7002380

sender_flush_location | 0/7002380

sender_replay_location | 0/7002380

receiver_received_location | 0/7002380

receiver_write_location | 0/7002380

receiver_flush_location | 0/7002380

receiver_replay_location | 0/7002380

sync_percent | 100%

sync_state | Async

sync_priority | 0

sync_most_available | Off

channel | 192.168.0.11:26001-->192.168.0.12:54854

-[ RECORD 2 ]--------------+----------------------------------------

pid | 140097703044864

sender_pid | 21718

local_role | Primary

peer_role | Standby

peer_state | Normal

state | Streaming

catchup_start | 2021-01-06 16:40:31.364624+08

catchup_end | 2021-01-06 16:40:31.364668+08

sender_sent_location | 0/7002380

sender_write_location | 0/7002380

sender_flush_location | 0/7002380

sender_replay_location | 0/7002380

receiver_received_location | 0/7002380

receiver_write_location | 0/7002380

receiver_flush_location | 0/7002380

receiver_replay_location | 0/7002380

sync_percent | 100%

sync_state | Async

sync_priority | 0

sync_most_available | Off

channel | 192.168.0.11:26001-->192.168.0.13:48502

-- 在cascade备机(级联备机)检查日志接收线程

postgres=# \pset x

Expanded display is on.

postgres=# select * from pg_stat_get_wal_receiver();

-[ RECORD 1 ]--------------+----------------------------------------

receiver_pid | 15522

local_role | Cascade Standby

peer_role | Standby

peer_state | Normal

state | Normal

sender_sent_location | 0/7002F88

sender_write_location | 0/7002F88

sender_flush_location | 0/7002F88

sender_replay_location | 0/7002E70

receiver_received_location | 0/7002F88

receiver_write_location | 0/7002F88

receiver_flush_location | 0/7002F88

receiver_replay_location | 0/7002F88

sync_percent | 100%

channel | 192.168.0.14:46818<--192.168.0.12:26001

Tips: 级联备机会在同一个 AZ 内寻找一台备机获取需要同步的数据,但至于选择同 AZ 内的哪一台备机,具架构师的反馈,这个是随机选择。

7.4 数据同步测试

-- Primary节点创建测试数据

postgres=# create table test(name varchar(20));

CREATE TABLE

postgres=# insert into test values(''This is a test.'');

INSERT 0 1

postgres=# select * from test;

name

-----------------

This is a test.

-- Cascade Standby节点检查数据

postgres=# select * from test;

name

-----------------

This is a test.

实验环境清理 #

8.1 以 omm 用户卸载数据库

gs_uninstall --delete-data

Tips: 如果卸载失败请根据“$GAUSSLOG/om/gs_uninstall-YYYY-MM-DD_HHMMSS.log”中的日志信息排查错误。

8.2 以 root 用户清理环境

su - root

cd /soft/openGauss/script

./gs_postuninstall -U omm -X /soft/openGauss/cluster_config.xml --delete-user --delete-group

8.3 手动清理残留信息

## 手动删除残留的安装目录

rm -fr /gauss

Tips: 若为环境变量分离的模式安装的集群需删除之前 source 的环境变量分离的 env 参数,清理时需要加入–sep-env-file 参数。

openGauss分布式安装_搭建_快速部署openGauss3.0.0分布式(openGauss课程)

一、opengauss 的背景和行业现状

2022 年,七大 openGauss 商业版发布,是基于 openGauss3.0 推出商业发行版

目前海量数据库 Vastbase 表现最佳,一直是 TOP 1

众所周知,opengauss 起源于 postgresql,在此基础上做了改良

海量数据库从 postgresql 就已经开始做商业版,一直做到 opengauss,经验最丰富,其他产品例如 MongoDB 是紧随其后 (在此不讨论其存在的意义),目前产品百花齐放,更显锦上添花

Vastbase G100 采用业界证明稳定高效的日志流复制技术实现集群内主备库的数据和状态传输,

并通过一主多备的部署和配置架构实现了多点可读、数据不丢失、异地灾备等关键高可用场景。

二、部署openGauss 3.0.0分布式

4台ECS服务器,配置4vCPUs | 16GiB | c7.xlarge.4 CentOS 7.9 64bit

2.1服务器/etc/hosts配置成一样的

echo momo488m > /etc/hostname

echo momo488s1 > /etc/hostname

echo momo488s2 > /etc/hostname

echo momo488s3 > /etc/hostname

[root@momo488s1 ~]# cat /etc/hosts

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

192.168.0.250 momo488m

192.168.0.213 momo488s1

192.168.0.89 momo488s2

192.168.0.24 momo488s3

scp /etc/hosts momo488s1:/etc/hosts

scp /etc/hosts momo488s2:/etc/hosts

scp /etc/hosts momo488s3:/etc/hosts

2.2配置互信

ssh-keygen -t rsa

cat .ssh/id_rsa.pub >>~/.ssh/authorized_keys

ssh-keyscan -t rsa momo488m >>~/.ssh/known_hosts

ssh-keyscan -t rsa momo488s1 >>~/.ssh/known_hosts

ssh-keyscan -t rsa momo488s2 >>~/.ssh/known_hosts

ssh-keyscan -t rsa momo488s3 >>~/.ssh/known_hosts

scp -r ~/.ssh momo488s1:~

scp -r ~/.ssh momo488s2:~

scp -r ~/.ssh momo488s3:~

测试

ssh momo488m date

ssh momo488s1 date

ssh momo488s2 date

ssh momo488s3 date

2.3下载软件

1>下载openGauss-distributed-solutions-master.zip并上传

https://gitee.com/opengauss/openGauss-distributed-solutions

2>下载openGauss分布式镜像

wget https://opengauss.obs.cn-south-1.myhuaweicloud.com/3.0.0/distributed/openGauss-distributed-CentOS-x86_64.tar.gz

3>创建目录/data,将软解压到/data

mkdir /data

unzip openGauss-distributed-solutions-master.zip

tar -zxvf openGauss-distributed-CentOS-x86_64.tar.gz

[root@momo488m data]# scp *tar* momo488s1:~

[root@momo488m data]# scp *tar* momo488s2:~

[root@momo488m data]# scp *tar* momo488s3:~

2.4创建配置文件profile

[root@momo488m simple_install]# pwd

/root/openGauss-distributed-solutions-master/simple_install

[root@momo488m simple_install]# ll

total 44

drwxr-xr-x 4 root root 4096 Mar 31 2022 addons

-rw-r--r-- 1 root root 415 Mar 31 2022 env_clean.sh

-rw-r--r-- 1 root root 1201 Mar 31 2022 install-addons.sh

-rw-r--r-- 1 root root 652 Mar 31 2022 install-docker.sh

-rw-r--r-- 1 root root 956 Mar 31 2022 install-k8s.sh

-rw-r--r-- 1 root root 4998 Mar 31 2022 install-master.sh

-rw-r--r-- 1 root root 957 Mar 31 2022 node-template.sh

-rw-r--r-- 1 root root 60 Mar 31 2022 profile

-rw-r--r-- 1 root root 1573 Mar 31 2022 pull-docker.sh

-rw-r--r-- 1 root root 1026 Mar 31 2022 setupconfig.sh

[root@momo488m simple_install]# vi profile

[root@momo488m simple_install]# cat profile

master=192.168.0.250

node1=192.168.0.213

node2=192.168.0.89

node3=192.168.0.24

[root@momo488m simple_install]# chmod +x *.sh

2.5执行安装脚本

清理

[root@momo488m simple_install]# sh env_clean.sh

安装

[root@momo488m simple_install]# sh install-master.sh

查看

[root@momo488m simple_install]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

momo488m NotReady control-plane,master 10m v1.21.1 192.168.0.108 <none> CentOS Linux 7 (Core) 3.10.0-1160.53.1.el7.x86_64 docker://18.6.1

momo488s1 NotReady <none> 6m24s v1.21.1 192.168.0.70 <none> CentOS Linux 7 (Core) 3.10.0-1160.53.1.el7.x86_64 docker://18.6.1

momo488s2 NotReady <none> 3m24s v1.21.1 192.168.0.137 <none> CentOS Linux 7 (Core) 3.10.0-1160.53.1.el7.x86_64 docker://18.6.1

momo488s3 NotReady <none> 5s v1.21.1 192.168.0.173 <none> CentOS Linux 7 (Core) 3.10.0-1160.53.1.el7.x86_64 docker://18.6.1

[root@momo488m simple_install]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-558bd4d5db-58c92 0/1 Pending 0 12m <none> <none> <none> <none>

kube-system coredns-558bd4d5db-zgsd7 0/1 Pending 0 12m <none> <none> <none> <none>

2.6修复coredns不启动故障

[root@momo488m ~]# docker pull quay.io/coreos/flannel:v0.15.0

[root@momo488m ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

2.7导入镜像

所有节点都执行

# docker load < haproxy100.tar.gz

# docker load < opengauss-3.0.0.tar

# docker load < shardingsphere-proxy.tar

2.8创建shardingphere集群分片

[root@momo488m ~]# cd /data/openGauss-distributed-solutions-master/distributed/

[root@momo488m distributed]# sh sharding.sh opengauss 3 3 "shared_buffers=2GB#max_connections=300#allow_concurrent_tuple_update=true"

设置密码 MoMo@488

查状态

kubectl get pod --all-namespaces -o wide

kubectl get svc --all-namespaces -o wide

查日志

kubectl logs -f --tail 200 opengauss-1-1 -n opengauss-1

2.9生成shardingphere配置文件

1>修改main_sharding_config.py

修改脚本

@classmethod

def load(cls):

with open(PathConf.get_src_yaml(), "rt", encoding=''utf-8'') as f:

count = f.read()

return yaml.safe_load(count)

@classmethod

def load_user_input(cls):

with open(PathConf.get_user_input_yaml(), "rt", encoding=''utf-8'') as f:

count = f.read()

return yaml.safe_load(count)

2>编辑user_input.yaml

dataSources:

- 10.244.1.4 5000 postgres admin MoMo@488

- 10.244.1.2 5000 postgres admin MoMo@488

- 10.244.1.3 5000 postgres admin MoMo@488

tables:

- table3 col1 2 col2 3

3>执行main_sharding_config.py

[root@momo488m distributed]# python3 main_sharding_config.py

{''schemaName'': ''sharding_db'', ''dataSources'': {''ds_0'': {''url'': ''jdbc:opengauss://10.244.2.8:5000/postgres?serverTimezone=UTC&useSSL=false'', ''username'': ''admin'', ''password'': ''MoMo@488'', ''connectionTimeoutMilliseconds'': 30000, ''idleTimeoutMilliseconds'': 60000, ''maxLifetimeMilliseconds'': 1800000, ''maxPoolSize'': 4096, ''minPoolSize'': 10}, ''ds_1'': {''url'': ''jdbc:opengauss://10.244.2.9:5000/postgres?serverTimezone=UTC&useSSL=false'', ''username'': ''admin'', ''password'': ''MoMo@488'', ''connectionTimeoutMilliseconds'': 30000, ''idleTimeoutMilliseconds'': 60000, ''maxLifetimeMilliseconds'': 1800000, ''maxPoolSize'': 4096, ''minPoolSize'': 10}}, ''rules'': {''tables'': {''test_order'': {''actualDataNodes'': ''ds_0.test_order''}, ''table3'': {''actualDataNodes'': ''ds_${0..1}.table3_${0..2}'', ''databaseStrategy'': {''standard'': {''shardingColumn'': ''col1'', ''shardingAlgorithmName'': ''ds_table3_inline''}}, ''tableStrategy'': {''standard'': {''shardingColumn'': ''col2'', ''shardingAlgorithmName'': ''tb_table3_inline''}}}}, ''defaultDatabaseStrategy'': {''none'': None}, ''defaultTableStrategy'': {''none'': None}, ''shardingAlgorithms'': {''database_inline'': {''props'': {''algorithm-expression'': ''ds_${user_id % 2}''}, ''type'': ''INLINE''}, ''ds_table3_inline'': {''props'': {''algorithm-expression'': ''ds_${col1 % 2}''}, ''type'': ''INLINE''}, ''tb_table3_inline'': {''props'': {''algorithm-expression'': ''table3_${col2 % 3}''}, ''type'': ''INLINE''}}, ''keyGenerators'': {''snowflake'': {''type'': ''SNOWFLAKE'', ''props'': {''worker-id'': 123}}}}}

2.10检查复制状态

[root@momo488m distributed]# kubectl exec -ti opengauss-1-1 -n opengauss-1 -- bash

[root@opengauss-1-1 /]# patronictl -c /home/omm/patroni.yaml topology

+-----------------+------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (12571552110593558695) -------+----+-----------+-----------------+

| opengauss-1-1 | 10.244.2.5 | Leader | running | 1 | | * |

| + opengauss-1-2 | 10.244.2.4 | Replica | running | 1 | 0 | * |

| + opengauss-1-3 | 10.244.3.6 | Replica | running | 1 | 0 | * |

+-----------------+------------+---------+---------+----+-----------+-----------------+

[root@momo488m distributed]# kubectl exec -ti opengauss-2-1 -n opengauss-2 -- bash

[root@opengauss-2-1 /]# patronictl -c /home/omm/patroni.yaml topology

+-----------------+------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (1022351682206865095) --------+----+-----------+-----------------+

| opengauss-2-1 | 10.244.2.3 | Leader | running | 1 | | * |

| + opengauss-2-2 | 10.244.3.3 | Replica | running | 1 | 0 | * |

| + opengauss-2-3 | 10.244.3.5 | Replica | running | 1 | 0 | * |

+-----------------+------------+---------+---------+----+-----------+-----------------+

[root@momo488m distributed]# kubectl exec -ti opengauss-3-1 -n opengauss-3 -- bash

[root@opengauss-3-1 /]# patronictl -c /home/omm/patroni.yaml topology

+-----------------+------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (17450237737194323553) -------+----+-----------+-----------------+

| opengauss-3-1 | 10.244.2.6 | Leader | running | 1 | | * |

| + opengauss-3-2 | 10.244.3.4 | Replica | running | 1 | 0 | * |

| + opengauss-3-3 | 10.244.3.7 | Replica | running | 1 | 0 | * |

+-----------------+------------+---------+---------+----+-----------+-----------------+

2.11部署shardingphere服务

[root@momo488m distributed]# mkdir /etc/k8s/

[root@momo488m distributed]# cp server.yaml /etc/k8s/

[root@momo488m distributed]# cp logback.xml /etc/k8s/

如果部署到其他节点使用

scp server.yaml momo488s1:/etc/k8s/

scp logback.xml momo488s1:/etc/k8s/

[root@momo488m distributed]# sh delpoy_sharding.sh test /etc/k8s/ momo488m

pod "test-sha" deleted

service "test-service-sha" deleted

config-sharding_tmp.yaml 100% 1338 8.4MB/s 00:00

service/test-service-sha created

pod/test-sha created

2.12测试连接之前

cd /var/lib/docker/overlay2/51faaa1be0f81f56017b2bba8b2ee81123931a64814b4a20c0a0661cbcdcfc78/diff/usr/local/opengauss/lib/

把所有文件都拷贝到/usr/lib64/

2.13登录sharding_db

[root@momo488m lib64]# /var/lib/docker/overlay2/e3fb4661acf841646ac29cb23832779edd0880ab5afe2ff1f39f8f1b9a686037/diff/usr/local/opengauss/bin/gsql -d sharding_db -p 30400 -r -h momo488m -U root

Password for user root:密码是root

gsql ((openGauss 3.0.0 build ee30df52) compiled at 2022-03-30 20:04:30 commit 0 last mr )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

sharding_db=>

2.14创建table插入数据

sharding_db=> create table table3 (col1 int primary key,col2 int);

CREATE TABLE

insert into table3 values (1,1);

insert into table3 values (2,2);

insert into table3 values (3,3);

insert into table3 values (4,4);

insert into table3 values (5,5);

insert into table3 values (6,6);

insert into table3 values (7,7);

insert into table3 values (8,8);

insert into table3 values (9,9);

2.15根据分库分表规则,分库数量2,分表数量3

sharding_db=> select * from table3;

col1 | col2

------+------

6 | 6

4 | 4

2 | 2

8 | 8

3 | 3

9 | 9

1 | 1

7 | 7

5 | 5

(9 rows)

table3将根据col1被分到2个数据库里,其中一个4行,一个5行

table3将在每个库里,以3个表的形式体现,根据col2被分到3个表里

2.16登录验证

[root@momo488m distributed]# kubectl exec -ti opengauss-1-1 -n opengauss-1 -- bash

[root@opengauss-1-1 /]# su omm

bash-4.2$ gsql -d postgres -p 5432 -r -U admin

Password for user admin:

gsql ((openGauss 3.0.0 build ee30df52) compiled at 2022-03-30 20:04:30 commit 0 last mr )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

openGauss=> \dt

No relations found.

[root@momo488m ~]# kubectl exec -ti opengauss-2-1 -n opengauss-2 -- bash

[root@opengauss-2-1 /]# su omm

bash-4.2$ gsql -d postgres -p 5432 -r -U admin

Password for user admin:

gsql ((openGauss 3.0.0 build ee30df52) compiled at 2022-03-30 20:04:30 commit 0 last mr )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

openGauss=> \dt

List of relations

Schema | Name | Type | Owner | Storage

--------+----------+-------+-------+----------------------------------

admin | table3_0 | table | admin | {orientation=row,compression=no}

admin | table3_1 | table | admin | {orientation=row,compression=no}

admin | table3_2 | table | admin | {orientation=row,compression=no}

(3 rows)

openGauss=> select * from table3_0;

col1 | col2

------+------

6 | 6

(1 row)

openGauss=> select * from table3_1;

col1 | col2

------+------

4 | 4

(1 row)

openGauss=> select * from table3_2;

col1 | col2

------+------

2 | 2

8 | 8

(2 rows)

[root@momo488m ~]# kubectl exec -ti opengauss-3-1 -n opengauss-3 -- bash

[root@opengauss-3-1 /]# su omm

bash-4.2$ gsql -d postgres -p 5432 -r -U admin

Password for user admin:

gsql ((openGauss 3.0.0 build ee30df52) compiled at 2022-03-30 20:04:30 commit 0 last mr )

Non-SSL connection (SSL connection is recommended when requiring high-security)

Type "help" for help.

openGauss=> \dt

List of relations

Schema | Name | Type | Owner | Storage

--------+----------+-------+-------+----------------------------------

admin | table3_0 | table | admin | {orientation=row,compression=no}

admin | table3_1 | table | admin | {orientation=row,compression=no}

admin | table3_2 | table | admin | {orientation=row,compression=no}

(3 rows)

openGauss=> select * from table3_0;

col1 | col2

------+------

3 | 3

9 | 9

(2 rows)

openGauss=> select * from table3_1;

col1 | col2

------+------

1 | 1

7 | 7

(2 rows)

openGauss=> select * from table3_2;

col1 | col2

------+------

5 | 5

(1 row)

本文参考官方文档

https://docs.opengauss.org/zh/

https://docs.vastdata.com.cn/zh/

【云原生 | 37】Docker快速部署编程语言Golang

今天关于【云原生】使用 Docker 快速部署 openGauss 主备环境和docker部署openstack的分享就到这里,希望大家有所收获,若想了解更多关于docker安装opengauss数据库、openGauss1.1.0 主备部署、openGauss分布式安装_搭建_快速部署openGauss3.0.0分布式(openGauss课程)、【云原生 | 37】Docker快速部署编程语言Golang等相关知识,可以在本站进行查询。

本文标签: