
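This article walks through building a simple DNS + LVS (Keepalived) + OpenResty server cluster in Docker (using Docker to build proxy servers). It also covers related topics: 003. Building a highly available LVS cluster with Keepalived; 014. A highly available Docker Harbor + Keepalived + LVS + shared storage architecture; a brief tutorial on building a highly available nginx server with keepalived on CentOS 7; and installing LVS load balancing in CentOS Docker containers (part 2): keepalived. We hope you find it useful.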

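Contents:

- Building a simple DNS + LVS (Keepalived) + OpenResty server cluster in Docker (using Docker to build proxy servers)

- 003. Building a highly available LVS cluster with Keepalived

- 014. Docker Harbor + Keepalived + LVS + shared storage high-availability architecture

- CentOS 7: a brief tutorial on building a highly available nginx server with keepalived

- Installing LVS load balancing in a CentOS Docker container (part 2): keepalived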

Building a simple DNS + LVS (Keepalived) + OpenResty server cluster in Docker (using Docker to build proxy servers)

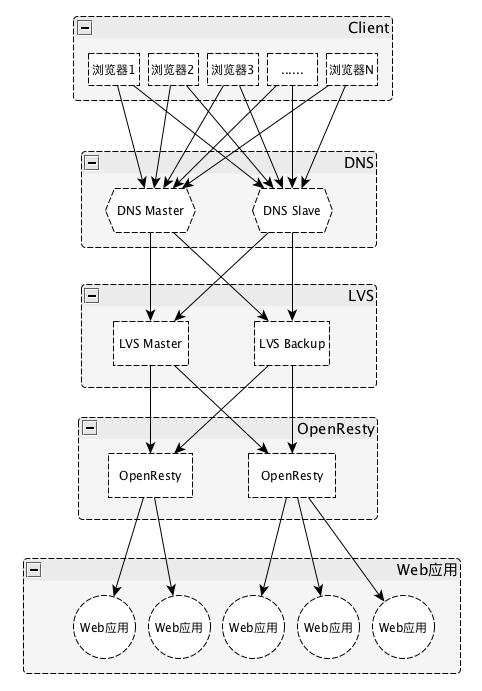

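Using the internet is an everyday skill: open a browser, type a URL, press Enter, and a nicely rendered page appears. But what happens between sending the request and getting the page back? To explore this, I build a simple cluster modeled on the layered architecture commonly used in companies. The goal is to type a domain name into the browser and open the page, with every layer highly available: as long as at least one host in each layer is alive, the whole service keeps working.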

Environment

- CentOS 7

- Docker

Architecture diagram

Docker

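Installing Docker

I started by installing Docker on macOS (from the official download page), but macOS cannot reach Docker container IPs directly (the official documentation notes this; if you know a way around it, please let me know). In the end I installed Docker on CentOS 7, using the CE edition (see the official download and installation guide).

Installing docker-compose

- Download it with curl

- Make the downloaded file executable

- Move docker-compose into /usr/bin so it can be run directly from the terminal

For details, see the official installation documentation.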

Writing the Dockerfile

Once Docker is installed, write a Dockerfile based on the centos:7 image. Because we will use systemctl inside the containers later, systemd needs special handling:

```dockerfile
FROM centos:7
ENV container docker
RUN (cd /lib/systemd/system/sysinit.target.wants/; for i in *; do [ $i == \
systemd-tmpfiles-setup.service ] || rm -f $i; done); \
rm -f /lib/systemd/system/multi-user.target.wants/*;\
rm -f /etc/systemd/system/*.wants/*;\
rm -f /lib/systemd/system/local-fs.target.wants/*; \
rm -f /lib/systemd/system/sockets.target.wants/*udev*; \
rm -f /lib/systemd/system/sockets.target.wants/*initctl*; \
rm -f /lib/systemd/system/basic.target.wants/*;\
rm -f /lib/systemd/system/anaconda.target.wants/*;
VOLUME [ "/sys/fs/cgroup" ]
CMD ["/usr/sbin/init"]
```

For details, see the official documentation of the centos image on Docker Hub.

DNS

I plan to set up two DNS servers, one master and one slave. The master holds the forward and reverse mappings between IP addresses and domain names, and the slave synchronizes them from the master.

Writing docker-compose.yml

Assume the image built from the Dockerfile above is named centos with the tag latest (for example, built with `docker build -t centos:latest .` in the Dockerfile's directory).

```yaml
version: "3"
services:
  dns_master:
    image: centos:latest
    container_name: dns_master
    hostname: dns_master
    privileged: true
    dns: 192.168.254.10
    networks:
      br0:
        ipv4_address: 192.168.254.10
  dns_slave:
    image: centos:latest
    container_name: dns_slave
    hostname: dns_slave
    privileged: true
    dns:
      - 192.168.254.10
      - 192.168.254.11
    networks:
      br0:
        ipv4_address: 192.168.254.11
networks:
  br0:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 192.168.254.0/24
```

As the file shows, I use a bridge network and assign a fixed IP to each of dns_master and dns_slave. Run `docker-compose up` in the directory containing docker-compose.yml to create the two containers, dns_master and dns_slave.

Configuring the DNS master server

1. Enter the dns_master container:

```
docker exec -it dns_master /bin/bash
```

2. Install the BIND DNS packages:

```
yum install bind bind-utils -y
```

3. Edit the configuration file named.conf:

```
vim /etc/named.conf
```

Compared with the default file, the changes are the extra addresses in listen-on, allow-query and allow-transfer, and the two zone blocks for yanggy.com (highlighted in the original post). The zone name must match the domain used in the zone files. The elided parts stay as shipped:

```
options {
    listen-on port 53 { 127.0.0.1; 192.168.254.10; };   // master DNS IP
    listen-on-v6 port 53 { ::1; };
    directory "/var/named";
    dump-file "/var/named/data/cache_dump.db";
    statistics-file "/var/named/data/named_stats.txt";
    memstatistics-file "/var/named/data/named_mem_stats.txt";
    recursing-file "/var/named/data/named.recursing";
    secroots-file "/var/named/data/named.secroots";
    allow-query { localhost; 192.168.254.0/24; };       // allowed client range
    allow-transfer { localhost; 192.168.254.11; };      // slave IP
    ......
....

zone "." IN {
    type hint;
    file "named.ca";
};

zone "yanggy.com" IN {
    type master;
    file "forward.yanggy";        // forward zone file
    allow-update { none; };
};

zone "254.168.192.in-addr.arpa" IN {
    type master;
    file "reverse.yanggy";        // reverse zone file
    allow-update { none; };
};

include "/etc/named.rfc1912.zones";
include "/etc/named.root.key";
```
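The reverse zone name is not arbitrary: it is the network part of the subnet written octet by octet in reverse under in-addr.arpa, and each PTR record's owner name is the full address reversed the same way. A minimal Python sketch of that mapping using the standard ipaddress module (the helper names reverse_zone and ptr_owner are illustrative, not part of BIND):

```python
import ipaddress


def reverse_zone(network: str) -> str:
    """Return the in-addr.arpa zone for an IPv4 network with a /8, /16 or /24 prefix."""
    net = ipaddress.ip_network(network)
    if net.version != 4 or net.prefixlen not in (8, 16, 24):
        raise ValueError("only /8, /16 or /24 IPv4 networks map to a simple in-addr.arpa zone")
    # Keep only the octets covered by the prefix, then reverse them
    octets = str(net.network_address).split(".")[: net.prefixlen // 8]
    return ".".join(reversed(octets)) + ".in-addr.arpa"


def ptr_owner(ip: str) -> str:
    """Fully qualified owner name of the PTR record for one address (note the trailing dot)."""
    return ipaddress.ip_address(ip).reverse_pointer + "."


if __name__ == "__main__":
    print(reverse_zone("192.168.254.0/24"))  # 254.168.192.in-addr.arpa
    print(ptr_owner("192.168.254.10"))       # 10.254.168.192.in-addr.arpa.
```

Inside the reverse zone, a relative owner such as `10` expands to exactly the name ptr_owner prints for 192.168.254.10.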

- Configure the forward zone file forward.yanggy:

```
vim /var/named/forward.yanggy
```

```
$TTL 86400
@   IN  SOA     masterdns.yanggy.com. root.yanggy.com. (
        2019011201  ;Serial
        3600        ;Refresh
        1800        ;Retry
        64800       ;Expire
        86400       ;Minimum TTL
)
@           IN  NS  masterdns.yanggy.com.
@           IN  NS  slavedns.yanggy.com.
@           IN  A   192.168.254.10
@           IN  A   192.168.254.11
masterdns   IN  A   192.168.254.10
slavedns    IN  A   192.168.254.11
```

4. Configure the reverse zone file:

```
vim /var/named/reverse.yanggy
```

```
$TTL 86400
@   IN  SOA     masterdns.yanggy.com. root.yanggy.com. (
        2019011301  ;Serial
        3600        ;Refresh
        1800        ;Retry
        604800      ;Expire
        86400       ;Minimum TTL
)
@           IN  NS  masterdns.yanggy.com.
@           IN  NS  slavedns.yanggy.com.
@           IN  PTR yanggy.com.
masterdns   IN  A   192.168.254.10
slavedns    IN  A   192.168.254.11
10          IN  PTR masterdns.yanggy.com.
11          IN  PTR slavedns.yanggy.com.
```

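5. Check that the configuration files are valid (named-checkzone takes the zone name, so the reverse file is checked against 254.168.192.in-addr.arpa):

```
named-checkconf /etc/named.conf
named-checkzone yanggy.com /var/named/forward.yanggy
named-checkzone 254.168.192.in-addr.arpa /var/named/reverse.yanggy
```

If there are no errors, the first command prints nothing and the other two print output containing OK.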

6. Start the named service:

```
systemctl enable named
systemctl start named
```

7. Set the owner and group of the related files:

```
chgrp named -R /var/named
chown -v root:named /etc/named.conf
restorecon -rv /var/named
restorecon /etc/named.conf
```

8. Installation and configuration are complete; test it:

```
dig masterdns.yanggy.com

; <<>> DiG 9.9.4-RedHat-9.9.4-72.el7 <<>> masterdns.yanggy.com
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 65011
;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 2, ADDITIONAL: 2

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;masterdns.yanggy.com.          IN      A

;; ANSWER SECTION:
masterdns.yanggy.com.   86400   IN      A       192.168.254.10

;; AUTHORITY SECTION:
yanggy.com.             86400   IN      NS      masterdns.yanggy.com.
yanggy.com.             86400   IN      NS      slavedns.yanggy.com.

;; ADDITIONAL SECTION:
slavedns.yanggy.com.    86400   IN      A       192.168.254.11

;; Query time: 19 msec
;; SERVER: 127.0.0.11#53(127.0.0.11)
;; WHEN: Mon Jan 14 09:56:22 UTC 2019
;; MSG SIZE  rcvd: 117
```

- After leaving the container, commit it as the image dns_master; from now on dns_master uses this image:

```
docker commit dns_master dns_master
```

Configuring the DNS slave server

1. Enter the container and install BIND:

```
docker exec -it dns_slave /bin/bash
yum install bind bind-utils -y
```

2. Configure named.conf:

```
options {
    listen-on port 53 { 127.0.0.1; 192.168.254.11; };
    listen-on-v6 port 53 { ::1; };
    directory "/var/named";
    dump-file "/var/named/data/cache_dump.db";
    statistics-file "/var/named/data/named_stats.txt";
    memstatistics-file "/var/named/data/named_mem_stats.txt";
    recursing-file "/var/named/data/named.recursing";
    secroots-file "/var/named/data/named.secroots";
    allow-query { localhost; 192.168.254.0/24; };
    ....
....

zone "yanggy.com" IN {
    type slave;
    file "slaves/yanggy.fwd";
    masters { 192.168.254.10; };
};

zone "254.168.192.in-addr.arpa" IN {
    type slave;
    file "slaves/yanggy.rev";
    masters { 192.168.254.10; };
};

include "/etc/named.rfc1912.zones";
include "/etc/named.root.key";
```

3. Start the DNS service:

```
systemctl enable named
systemctl start named
```

4. Once named has started, yanggy.fwd and yanggy.rev appear under /var/named/slaves/ automatically; they do not need to be written by hand.

5. Set the owner and group of the related files:

```
chgrp named -R /var/named
chown -v root:named /etc/named.conf
restorecon -rv /var/named
restorecon /etc/named.conf
```

6. When you are done, test it the same way as the master to confirm it works.

7. After leaving the container, commit it as the image dns_slave (`docker commit dns_slave dns_slave`); from now on dns_slave uses this image.
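The slave files appear on their own because the slave compares its copy of each zone with the master's SOA record: it checks the master every Refresh seconds, transfers the zone only when the master's serial is larger, retries every Retry seconds while the master is unreachable, and stops serving the zone after Expire seconds without a successful check. A simplified Python model of that decision, assuming the timer values from the reverse zone file above (3600/1800/604800); the function name is hypothetical, NOTIFY messages are ignored, and serials are compared as plain integers rather than with RFC 1982 serial arithmetic:

```python
def slave_action(local_serial: int, master_serial: int, master_reachable: bool,
                 seconds_since_last_success: int,
                 refresh: int = 3600, retry: int = 1800, expire: int = 604800) -> str:
    """Decide what a slave does at a refresh check (simplified model of the SOA timers)."""
    if master_reachable:
        if master_serial > local_serial:
            return "transfer zone from master"
        # Same (or older) serial on the master: nothing to copy
        return f"up to date, check again in {refresh}s"
    if seconds_since_last_success >= expire:
        # Master unreachable for too long: the slave's copy is considered stale
        return "expired: stop answering for the zone"
    return f"retry in {retry}s"


print(slave_action(2019011201, 2019011401, True, 0))    # transfer zone from master
print(slave_action(2019011401, 2019011401, True, 0))    # up to date, check again in 3600s
print(slave_action(2019011401, 2019011401, False, 60))  # retry in 1800s
```

This is also why editing a zone on the master without increasing its serial never reaches the slave.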

LVS + Keepalived

1. Add the following services under `services:` in the DNS docker-compose.yml to create the LVS and OpenResty containers:

```yaml
  lvs01:
    image: centos:latest
    container_name: lvs01
    hostname: lvs01
    privileged: true
    dns:
      - 192.168.254.10
      - 192.168.254.11
    volumes:
      - /home/yanggy/docker/lvs01/:/home/yanggy/
      - /home/yanggy/docker/lvs01/etc/:/etc/keepalived/
    networks:
      br0:
        ipv4_address: 192.168.254.13
  lvs02:
    image: centos:latest
    container_name: lvs02
    hostname: lvs02
    privileged: true
    dns:
      - 192.168.254.10
      - 192.168.254.11
    volumes:
      - /home/yanggy/docker/lvs02/:/home/yanggy/
      - /home/yanggy/docker/lvs02/etc/:/etc/keepalived/
    networks:
      br0:
        ipv4_address: 192.168.254.14
  resty01:
    image: centos:latest
    container_name: resty01
    hostname: resty01
    privileged: true
    expose:
      - "80"
    dns:
      - 192.168.254.10
      - 192.168.254.11
    volumes:
      - /home/yanggy/docker/web/resty01/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.15
  resty02:
    image: centos:latest
    container_name: resty02
    hostname: resty02
    privileged: true
    expose:
      - "80"
    dns:
      - 192.168.254.10
      - 192.168.254.11
    volumes:
      - /home/yanggy/docker/web/resty02/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.16
```

2. Create the lvs01 and lvs02 containers (this also creates resty01 and resty02):

```
docker-compose up
```

3. Enter the lvs01 container and install ipvsadm and keepalived:

```
yum install ipvsadm -y
yum install keepalived -y
```

4. Configure keepalived:

```
$ vim /etc/keepalived/keepalived.conf
```

```
! Configuration File for keepalived

global_defs {
    router_id LVS_01            # identifier of the server running keepalived
}

vrrp_instance VI_1 {
    state MASTER                # role: MASTER = primary server, BACKUP = standby server
    interface eth0              # interface monitored for HA
    virtual_router_id 51        # virtual router ID; must be identical on MASTER and BACKUP of the same vrrp_instance
    priority 110                # larger number = higher priority; MASTER must be higher than BACKUP
    advert_int 1                # interval, in seconds, between checks (advertisements) between MASTER and BACKUP
    authentication {            # authentication type and password
        auth_type PASS          # PASS or AH
        auth_pass 1111          # MASTER and BACKUP of the same vrrp_instance must use the same password
    }
    virtual_ipaddress {         # virtual IPs; several are allowed, one per line
        192.168.254.100
    }
}

virtual_server 192.168.254.100 80 {
    delay_loop 6                # health-check interval, in seconds
    lb_algo rr                  # scheduling algorithm; rr = round robin
    lb_kind DR                  # LVS forwarding method: NAT, TUN or DR
    persistence_timeout 0       # session persistence, in seconds; 0 disables it.
                                # With a value such as 50, a client's requests keep going to the same
                                # real server until the client has been idle for more than 50 s; a client
                                # that keeps working is not cut off by the timeout. Useful for dynamic
                                # pages whose sessions are not shared across the cluster.
    protocol TCP                # TCP or UDP

    real_server 192.168.254.15 80 {
        weight 1                # node weight: higher = more traffic, so stronger servers can take more load
        TCP_CHECK {
            connect_port 80
            connect_timeout 3       # time out after 3 s without a response
            nb_get_retry 3          # number of retries
            delay_before_retry 3    # seconds between retries
        }
    }

    real_server 192.168.254.16 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

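The configuration above shows that the real servers (RS) are 192.168.254.15 and 192.168.254.16.

Configure the other LVS container the same way, but set router_id to LVS_02, state to BACKUP, and priority slightly lower than the MASTER's, for example 100.

Run the following on both LVS containers to start keepalived:

```
systemctl enable keepalived
systemctl start keepalived
```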
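Which LVS container holds the VIP is decided by VRRP: the live router with the highest priority becomes MASTER, and a BACKUP takes over once it has gone a Master_Down_Interval without hearing an advertisement, which RFC 3768 (VRRPv2) defines as 3 * advert_int + (256 - priority) / 256 seconds. A simplified Python sketch using the priorities above (110 and 100); the class and function names are illustrative, and tie-breaking by IP address, preemption settings and keepalived's own timers are not modelled:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VrrpNode:
    name: str
    priority: int
    alive: bool = True


def elect_master(nodes: List[VrrpNode]) -> Optional[str]:
    """Return the name of the live node with the highest priority, or None if all are down."""
    live = [n for n in nodes if n.alive]
    if not live:
        return None
    return max(live, key=lambda n: n.priority).name


def master_down_interval(advert_int: float, backup_priority: int) -> float:
    """Seconds a BACKUP waits without advertisements before taking over (RFC 3768)."""
    skew_time = (256 - backup_priority) / 256
    return 3 * advert_int + skew_time


lvs01 = VrrpNode("lvs01", 110)
lvs02 = VrrpNode("lvs02", 100)
print(elect_master([lvs01, lvs02]))   # lvs01
lvs01.alive = False
print(elect_master([lvs01, lvs02]))   # lvs02
print(master_down_interval(1, 100))   # 3.609375
```

With advert_int 1, lvs02 therefore takes over the VIP roughly 3.6 seconds after lvs01 stops advertising.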

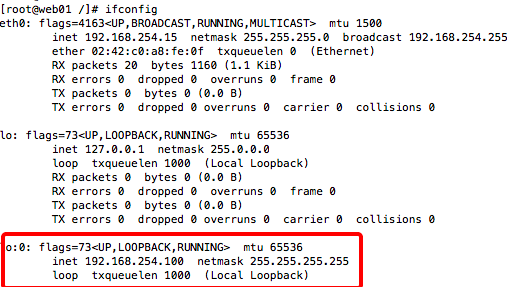

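5. Log in to the two RS containers above and create the following script, say rs.sh, which binds the VIP to lo:0 and suppresses ARP replies for it:

```bash
#!/bin/bash
# Bind the VIP to a loopback alias so the RS accepts packets addressed to it (LVS DR mode)
ifconfig lo:0 192.168.254.100 broadcast 192.168.254.100 netmask 255.255.255.255 up
route add -host 192.168.254.100 dev lo:0
# Reply to ARP only for addresses configured on the receiving interface
echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore
# Always announce the best local address, never the VIP, as the ARP source
echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce
echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore
echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce
sysctl -p &>/dev/null
```

After writing the script, make it executable and run it, then check the result with ifconfig.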

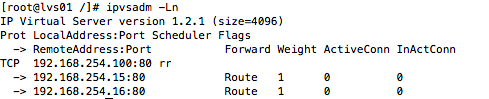
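Why the ARP settings matter: in DR mode every real server also owns the VIP (on lo:0), so with the kernel default arp_ignore=0 it would answer ARP requests for 192.168.254.100 arriving on eth0 and could steal traffic meant for the director. With arp_ignore=1 the kernel replies only when the requested address is configured on the interface the request arrived on. A small Python model of that rule (a sketch of the documented semantics, not kernel code; the function name and interface table are illustrative):

```python
from typing import Dict, Set


def replies_to_arp(target_ip: str, incoming_iface: str,
                   iface_addrs: Dict[str, Set[str]], arp_ignore: int) -> bool:
    """Model whether a host answers an ARP request, for arp_ignore modes 0 and 1 only."""
    if arp_ignore == 0:
        # Default: reply for any local address, whichever interface it is configured on
        return any(target_ip in addrs for addrs in iface_addrs.values())
    if arp_ignore == 1:
        # Reply only if the target address is configured on the receiving interface
        return target_ip in iface_addrs.get(incoming_iface, set())
    raise ValueError("only arp_ignore 0 and 1 are modelled here")


# Real server 192.168.254.15: own address on eth0, VIP on the loopback alias
rs = {"eth0": {"192.168.254.15"}, "lo": {"192.168.254.100"}}

print(replies_to_arp("192.168.254.100", "eth0", rs, 0))  # True: the RS would claim the VIP
print(replies_to_arp("192.168.254.100", "eth0", rs, 1))  # False: only the director answers
print(replies_to_arp("192.168.254.15", "eth0", rs, 1))   # True: normal traffic still works
```

The script writes both conf/all and conf/lo because the kernel applies the larger of the "all" value and the per-interface value; since ARP requests arrive on eth0, it is the conf/all setting that takes effect there.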

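6. Log in to an LVS container and check the mapping with ipvsadm (to get the output below, OpenResty must also be installed and running in the 192.168.254.15 and 192.168.254.16 containers; see the OpenResty section):

```
ipvsadm -Ln
```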

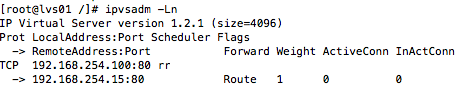

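7. Test keepalived's health checking by stopping the service at 192.168.254.16:80 (the resty02 container):

```
docker stop resty02
```

Run ipvsadm again: 192.168.254.16:80 has been removed, and all subsequent requests are forwarded to 192.168.254.15:80.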
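The behaviour ipvsadm shows here can be modelled as round-robin scheduling over only those real servers that currently pass the health check. A simplified Python sketch (real LVS keeps its scheduler state in the kernel; the function name is illustrative):

```python
from itertools import cycle, islice
from typing import Iterable, List


def round_robin(real_servers: List[str], healthy: Iterable[str], requests: int) -> List[str]:
    """Assign `requests` new connections in turn to the servers that passed the health check."""
    healthy_set = set(healthy)
    pool = [rs for rs in real_servers if rs in healthy_set]  # keep the configured order
    if not pool:
        return []  # no real server available: nothing can be scheduled
    return list(islice(cycle(pool), requests))


servers = ["192.168.254.15:80", "192.168.254.16:80"]
print(round_robin(servers, servers, 4))
# ['192.168.254.15:80', '192.168.254.16:80', '192.168.254.15:80', '192.168.254.16:80']
print(round_robin(servers, ["192.168.254.15:80"], 3))
# ['192.168.254.15:80', '192.168.254.15:80', '192.168.254.15:80']
```

When the stopped server passes the TCP check again, keepalived adds it back and it rejoins the rotation.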

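8. Leave the container and run `docker commit lvs01 lvs` to save it as the lvs image, then change the image of lvs01 and lvs02 in docker-compose.yml to lvs:latest.

From now on, each time resty01 and resty02 are started, run the rs.sh script in them manually: the loopback VIP and ARP settings are not stored in the image.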

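OpenResty

1. Create and start the resty01 container, then enter it. Installation is not covered here; follow the instructions on the OpenResty website. After installing, run the following in the user's home directory:

```
mkdir ~/work
cd ~/work
mkdir logs/ conf/
```

Then create nginx.conf in the conf directory with the following content (here it is edited from the host, through the directory mounted into resty01):

```
[root@centos7 docker]# vim web/resty01/work/conf/nginx.conf
```

```nginx
worker_processes 1;
error_log logs/error.log;

events {
    worker_connections 1024;
}

http {
    upstream web-group1 {
        server 192.168.254.17:80 weight=1;
        server 192.168.254.18:80 weight=1;
    }

    server {
        listen 80;

        location / {
            default_type text/html;
            proxy_pass http://web-group1;
        }
    }
}
```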

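192.168.254.17 and 192.168.254.18 are the IPs of the upstream web servers. Besides weighted round robin as configured here, an nginx upstream can also balance by hash (for example with ip_hash or hash).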
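The weight parameter in the upstream block is applied by nginx's weighted round-robin balancer, which interleaves picks smoothly instead of sending bursts to the heaviest server. A Python sketch of the smooth weighted round-robin idea (an illustration of the algorithm, not OpenResty's code; failure handling such as max_fails is left out):

```python
from typing import Dict, List


def smooth_wrr(weights: Dict[str, int], picks: int) -> List[str]:
    """Smooth weighted round robin: every round each peer gains its weight,
    the peer with the highest running total is chosen and pays back the total weight."""
    current = {peer: 0 for peer in weights}
    total = sum(weights.values())
    order: List[str] = []
    for _ in range(picks):
        for peer, weight in weights.items():
            current[peer] += weight
        chosen = max(current, key=current.get)  # first peer wins ties
        current[chosen] -= total
        order.append(chosen)
    return order


print(smooth_wrr({"192.168.254.17:80": 1, "192.168.254.18:80": 1}, 4))
print(smooth_wrr({"a": 5, "b": 1, "c": 1}, 7))  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

With the equal weights used in this article the result is plain alternation; unequal weights such as 5:1:1 still spread the lighter peers between the heavy one's picks.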

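Leave the container and run `docker commit resty01 openresty` to save it as the openresty image.

2. In docker-compose.yml, change the image of both resty01 and resty02 to openresty and run docker-compose up. When it succeeds, enter the resty02 container: OpenResty is already installed there, and as before you need to create work, work/conf and work/logs under the user's home directory.

Both resty01 and resty02 map directories of the host filesystem (see the volumes settings in docker-compose.yml), so before configuring resty02 you can simply copy resty01's configuration over to it.

Modify nginx.conf:

```nginx
worker_processes 1;
error_log logs/error.log;

events {
    worker_connections 1024;
}

http {
    upstream web-group2 {
        server 192.168.254.19:80 weight=1;
        server 192.168.254.20:80 weight=1;
        server 192.168.254.21:80 weight=1;
    }

    server {
        listen 80;
        server_name 192.168.254.16;

        location / {
            proxy_pass http://web-group2;
        }
    }
}
```

After configuring OpenResty, start nginx:

```
# -p sets the prefix, so the relative paths conf/nginx.conf and logs/error.log resolve under ~/work
nginx -p /home/yanggy/work/ -c conf/nginx.conf
```

Use netstat -nltp to check that port 80 is listening. You can also register nginx as a system service so that it starts automatically with the container.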

Web application

1. Add the following to docker-compose.yml:

```yaml
  web01:
    image: centos:latest
    container_name: web01
    hostname: web01
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web01/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web01/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.17
```

2. Create and start the web01 container; after entering it, install httpd:

```
yum install -y httpd
```

3. Edit the main configuration file:

```
vim /etc/httpd/conf/httpd.conf
```

Remove the # in front of ServerName and set the server name to Web01.

4. Create index.html:

```
cd /var/www/html/
echo "<h1>Web01</h1>" > index.html
```

5. Start the service:

```
systemctl enable httpd
systemctl start httpd
```

6. Leave the container and save it as the image web (`docker commit web01 web`).

7. Add the following to docker-compose.yml:

```yaml
  web02:
    image: web:latest
    container_name: web02
    hostname: web02
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web02/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web02/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.18
  web03:
    image: web:latest
    container_name: web03
    hostname: web03
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web03/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web03/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.19
  web04:
    image: web:latest
    container_name: web04
    hostname: web04
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web04/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web04/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.20
  web05:
    image: web:latest
    container_name: web05
    hostname: web05
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web05/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web05/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.21
```

8. Make four copies of web01's host-mapped directory, named web02, web03, web04 and web05, and change httpd.conf and index.html in each to the corresponding server name.

9. Run docker-compose up to create the web02 to web05 containers; the web service is already running inside them.

Connecting DNS and LVS

In the LVS section we configured the virtual IP 192.168.254.100. Now add it to the DNS server so that the domain name resolves to this VIP.

1. Enter the dns_master container and edit the forward zone file:

```
[root@dns_master /]# vim /var/named/forward.yanggy
```

Add the forward mapping www.yanggy.com -> 192.168.254.100 through the alias webserver, and increase the serial so that the slave picks up the change:

```
$TTL 86400
@   IN  SOA     masterdns.yanggy.com. root.yanggy.com. (
        2019011401  ;Serial
        3600        ;Refresh
        1800        ;Retry
        64800       ;Expire
        86400       ;Minimum TTL
)
@           IN  NS  masterdns.yanggy.com.
@           IN  NS  slavedns.yanggy.com.
@           IN  A   192.168.254.10
@           IN  A   192.168.254.11
@           IN  A   192.168.254.100
masterdns   IN  A   192.168.254.10
slavedns    IN  A   192.168.254.11
webserver   IN  A   192.168.254.100
www         CNAME   webserver
```
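With these records, a lookup of www.yanggy.com follows the CNAME to webserver.yanggy.com and then returns its A record. A toy Python resolver over the records above (a sketch only: names are written fully qualified, there is no TTL or class handling, and the dictionary layout is an assumption made for illustration):

```python
from typing import Dict, List, Tuple

# (name, type) -> values, transcribed from forward.yanggy
RECORDS: Dict[Tuple[str, str], List[str]] = {
    ("yanggy.com.", "A"): ["192.168.254.10", "192.168.254.11", "192.168.254.100"],
    ("masterdns.yanggy.com.", "A"): ["192.168.254.10"],
    ("slavedns.yanggy.com.", "A"): ["192.168.254.11"],
    ("webserver.yanggy.com.", "A"): ["192.168.254.100"],
    ("www.yanggy.com.", "CNAME"): ["webserver.yanggy.com."],
}


def resolve_a(name: str, max_hops: int = 8) -> List[str]:
    """Follow CNAME records until an A record set is found (empty list if the name has none)."""
    for _ in range(max_hops):
        if (name, "A") in RECORDS:
            return RECORDS[(name, "A")]
        targets = RECORDS.get((name, "CNAME"))
        if not targets:
            return []
        name = targets[0]  # a name may carry only one CNAME
    raise RuntimeError("CNAME chain too long")


print(resolve_a("www.yanggy.com."))  # ['192.168.254.100']
```

On a client, `dig www.yanggy.com` should show the same chain: the CNAME record followed by the A record for webserver.yanggy.com.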

2. Edit the reverse zone file:

```
vim /var/named/reverse.yanggy
```

Add the mapping 192.168.254.100 -> webserver.yanggy.com, again with a larger serial:

```
$TTL 86400
@   IN  SOA     masterdns.yanggy.com. root.yanggy.com. (
        2019011401  ;Serial
        3600        ;Refresh
        1800        ;Retry
        604800      ;Expire
        86400       ;Minimum TTL
)
@           IN  NS  masterdns.yanggy.com.
@           IN  NS  slavedns.yanggy.com.
@           IN  PTR yanggy.com.
masterdns   IN  A   192.168.254.10
slavedns    IN  A   192.168.254.11
webserver   IN  A   192.168.254.100
10          IN  PTR masterdns.yanggy.com.
11          IN  PTR slavedns.yanggy.com.
100         IN  PTR webserver.yanggy.com.
```

Then restart named on the master (`systemctl restart named`) so that it loads the new zones.
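Because the slave only transfers a zone whose serial has increased, every edit to forward.yanggy or reverse.yanggy needs a larger serial. With the YYYYMMDDnn convention used in these files, a helper like the following picks the next value (hypothetical; any scheme works as long as the number keeps growing):

```python
import datetime


def next_serial(current: int, today: datetime.date) -> int:
    """Next SOA serial in YYYYMMDDnn form, always strictly larger than `current`."""
    first_of_today = int(today.strftime("%Y%m%d")) * 100 + 1  # YYYYMMDD01
    # If the zone was already edited today (or carries a future date), just add one
    return max(first_of_today, current + 1)


print(next_serial(2019011201, datetime.date(2019, 1, 14)))  # 2019011401
print(next_serial(2019011401, datetime.date(2019, 1, 14)))  # 2019011402
```

named-checkzone prints the serial it loaded, which is a quick way to confirm the new value before restarting named.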

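Summary

Add the DNS server IPs to /etc/resolv.conf on the host:

```
# Generated by NetworkManager
nameserver 192.168.254.10
nameserver 192.168.254.11
```

When you enter www.yanggy.com in the browser, the DNS server first resolves it to 192.168.254.100; keepalived (LVS) then forwards the request to one of the OpenResty nginx servers, which in turn proxies it to one of its upstream web application servers. DNS and LVS are both highly available: if one server in either pair goes down, browser requests are still answered. OpenResty is highly available too: as long as the DNS and LVS layers are up, requests are distributed to the available OpenResty servers; LVS removes an OpenResty server when it becomes unavailable and adds it back when it recovers. Likewise, the web servers are highly available: OpenResty detects the availability of its upstream web servers and removes and restores them dynamically.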

The complete docker-compose.yml:

```yaml
version: "3.7"
services:
  dns_master:
    image: dns_master:latest
    container_name: dns_master
    hostname: dns_master
    privileged: true
    dns:
      - 192.168.254.10
    volumes:
      - /home/yanggy/docker/dns/master/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.10
  dns_slave:
    image: dns_slave:latest
    container_name: dns_slave
    hostname: dns_slave
    privileged: true
    dns:
      - 192.168.254.10
      - 192.168.254.11
    volumes:
      - /home/yanggy/docker/dns/slave/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.11
  client:
    image: centos:latest
    container_name: client
    hostname: client
    privileged: true
    dns:
      - 192.168.254.10
      - 192.168.254.11
    volumes:
      - /home/yanggy/docker/client/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.12
  lvs01:
    image: lvs:latest
    container_name: lvs01
    hostname: lvs01
    privileged: true
    volumes:
      - /home/yanggy/docker/lvs01/:/home/yanggy/
      - /home/yanggy/docker/lvs01/etc/:/etc/keepalived/
    networks:
      br0:
        ipv4_address: 192.168.254.13
  lvs02:
    image: lvs:latest
    container_name: lvs02
    hostname: lvs02
    privileged: true
    volumes:
      - /home/yanggy/docker/lvs02/:/home/yanggy/
      - /home/yanggy/docker/lvs02/etc/:/etc/keepalived/
    networks:
      br0:
        ipv4_address: 192.168.254.14
  resty01:
    image: openresty:latest
    container_name: resty01
    hostname: resty01
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/resty01/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.15
  resty02:
    image: openresty:latest
    container_name: resty02
    hostname: resty02
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/resty02/:/home/yanggy/
    networks:
      br0:
        ipv4_address: 192.168.254.16
  web01:
    image: web:latest
    container_name: web01
    hostname: web01
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web01/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web01/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.17
  web02:
    image: web:latest
    container_name: web02
    hostname: web02
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web02/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web02/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.18
  web03:
    image: web:latest
    container_name: web03
    hostname: web03
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web03/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web03/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.19
  web04:
    image: web:latest
    container_name: web04
    hostname: web04
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web04/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web04/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.20
  web05:
    image: web:latest
    container_name: web05
    hostname: web05
    privileged: true
    expose:
      - "80"
    volumes:
      - /home/yanggy/docker/web/web05/conf/:/etc/httpd/conf/
      - /home/yanggy/docker/web/web05/www/:/var/www/
    networks:
      br0:
        ipv4_address: 192.168.254.21
networks:
  br0:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 192.168.254.0/24
```
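With a dozen containers on one bridge network, a typo in an ipv4_address is easy to make. A small Python sketch that checks the address plan above: every address unique, inside 192.168.254.0/24, not equal to the VIP, and not the first host address, which Docker's default IPAM normally gives to the bridge gateway (that gateway rule is an assumption about the default behaviour). The mapping is transcribed by hand from the file; no YAML parser is used:

```python
import ipaddress
from collections import Counter
from typing import Dict, List

PLAN: Dict[str, str] = {
    "dns_master": "192.168.254.10", "dns_slave": "192.168.254.11",
    "client": "192.168.254.12", "lvs01": "192.168.254.13", "lvs02": "192.168.254.14",
    "resty01": "192.168.254.15", "resty02": "192.168.254.16",
    "web01": "192.168.254.17", "web02": "192.168.254.18", "web03": "192.168.254.19",
    "web04": "192.168.254.20", "web05": "192.168.254.21",
}


def check_plan(plan: Dict[str, str], subnet: str, vip: str = "") -> List[str]:
    """Return a list of problems found in a container -> IPv4 address plan."""
    net = ipaddress.ip_network(subnet)
    gateway = next(net.hosts())  # first usable address, assumed to be the bridge gateway
    problems = []
    for name, addr in plan.items():
        ip = ipaddress.ip_address(addr)
        if ip not in net:
            problems.append(f"{name}: {addr} is outside {subnet}")
        elif ip == gateway:
            problems.append(f"{name}: {addr} collides with the gateway")
    for addr, count in Counter(plan.values()).items():
        if count > 1:
            problems.append(f"{addr} is assigned {count} times")
    if vip and vip in plan.values():
        problems.append(f"VIP {vip} is also assigned to a container")
    return problems


print(check_plan(PLAN, "192.168.254.0/24", vip="192.168.254.100"))  # []
```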

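Demo

Access with curl from the command line

Access from a browser

Other scenarios, such as stopping one OpenResty server or one web application server, are left for you to verify; the point is to check whether failed nodes are removed and restored automatically.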

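Problems

1. macOS cannot access containers directly. As the official documentation explains, on a Mac you cannot reach containers directly by IP from the Mac itself. If you know how to do this, please let me know.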

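2. ipvsadm -Ln reports that the ip_vs module does not exist. Either the host's Linux kernel does not support it, or the host has not loaded the ip_vs module (containers use the host's kernel).

Check the host kernel; 3.10 or later generally works:

```
uname -a
```

Check whether the host has loaded the ip_vs module:

```
lsmod | grep ip_vs
```

If nothing is printed, load it with modprobe and check again with lsmod:

```
modprobe ip_vs
```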

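3. A weakness of this architecture. The web tier at the bottom is split into groups, each behind one OpenResty server, and LVS only checks that OpenResty accepts TCP connections on port 80. So if every web server in one group goes down, the OpenResty server in front of that group must also be shut down; otherwise LVS keeps sending traffic to a group that has no working web servers left.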
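One way to avoid shutting OpenResty down by hand would be to have keepalived check at the HTTP level instead of with TCP_CHECK: an OpenResty node whose whole upstream group is down still accepts TCP connections on port 80 but answers with an error status such as 502. keepalived's built-in HTTP_GET check can express this, as can a MISC_CHECK script whose exit status 0 means healthy and 1 means failed. A minimal Python sketch of such a script, assuming it is saved as a hypothetical /usr/local/bin/http_check.py and referenced with misc_path "/usr/local/bin/http_check.py http://192.168.254.15/"; the name and path are illustrative and this is not part of the original setup:

```python
#!/usr/bin/env python3
"""Hypothetical keepalived MISC_CHECK helper: exit 0 if the URL answers HTTP 200, else 1."""
import http.client
import sys
import urllib.request
from typing import List


def http_healthy(url: str, timeout: float = 3.0) -> bool:
    """True only for a 200 response; errors, timeouts and other statuses count as down."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException, ValueError):
        # urllib.error.HTTPError (e.g. a 502 from nginx) and URLError are OSError subclasses,
        # as are refused connections and socket timeouts; ValueError covers malformed URLs
        return False


def main(argv: List[str]) -> int:
    if len(argv) != 1:
        print("usage: http_check.py URL", file=sys.stderr)
        return 2
    return 0 if http_healthy(argv[0]) else 1


# keepalived always passes the URL; without arguments the module only defines the helpers
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1:]))
```

With such a check, LVS would drop an OpenResty node as soon as its whole upstream group fails, instead of waiting for someone to stop it.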

References:

- https://docs.docker.com/compose/compose-file/

- https://www.unixmen.com/setting-dns-server-centos-7/

- https://blog.csdn.net/u012852986/article/details/52412174

- http://blog.51cto.com/12227558/2096280

- https://hub.docker.com/_/centos/

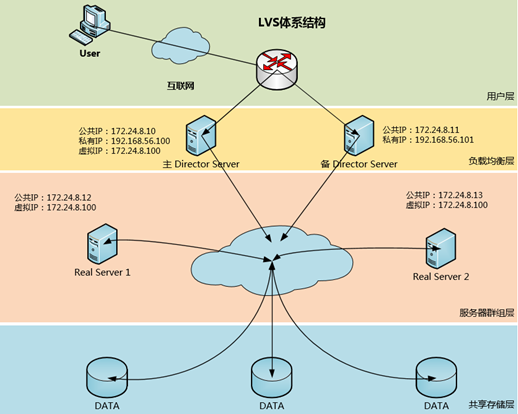

003. Building a highly available LVS cluster with Keepalived

1 Basic environment

1.1 IP plan

| Node role | IP address | Hostname | Type |
| --- | --- | --- | --- |
| Primary Director Server | eth0: 172.24.8.10 | DR1 | Public IP |
| | eth1: 192.168.56.100 | | Private IP (heartbeat) |
| | eth0:0: 172.24.8.100 | None | Virtual IP |
| Backup Director Server | eth0: 172.24.8.11 | DR2 | Public IP |
| | eth1: 192.168.56.101 | | Private IP (heartbeat) |
| Real Server 1 | eth0: 172.24.8.12 | rs1 | Public IP |
| | lo:0: 172.24.8.100 | None | Virtual IP |
| Real Server 2 | eth0: 172.24.8.13 | rs2 | Public IP |
| | lo:0: 172.24.8.100 | None | Virtual IP |

1.2 Architecture plan

2 Deploying the highly available LVS load-balancing cluster

2.1 Deploying NTP

2.2 Deploying the httpd cluster

```
[root@RServer01 ~]# yum -y install httpd
[root@RServer01 ~]# service iptables stop
[root@RServer01 ~]# chkconfig iptables off
[root@RServer01 ~]# vi /etc/selinux/config
SELINUX=disabled
[root@master ~]# setenforce 0    # turn off SELinux and the firewall
```

On hosts that use firewalld instead:

```
firewall-cmd --permanent --add-service=keepalived
firewall-cmd --reload
```

2.3 Installing Keepalived

```
[root@lvsmaster ~]# yum -y install gcc gcc-c++ make kernel-devel kernel-tools kernel-tools-libs kernel libnl libnl-devel libnfnetlink-devel openssl-devel wget openssh-clients    # build environment and dependencies
[root@lvsmaster ~]# ln -s /usr/src/kernels/`uname -r` /usr/src/linux
[root@lvsmaster ~]# wget http://www.keepalived.org/software/keepalived-1.3.6.tar.gz
[root@lvsmaster ~]# tar -zxvf keepalived-1.3.6.tar.gz    # build and install Keepalived from source
[root@lvsmaster ~]# cd keepalived-1.3.6/
[root@lvsmaster keepalived-1.3.6]# ./configure --prefix=/usr/local/keepalived
[root@lvsmaster keepalived-1.3.6]# make && make install
```

2.4 Adding the startup service

```
[root@lvsmaster ~]# mkdir /etc/keepalived
[root@lvsmaster ~]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/
[root@lvsmaster ~]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
[root@lvsmaster ~]# cp /usr/local/keepalived/sbin/keepalived /usr/sbin/
[root@lvsmaster ~]# vi /etc/init.d/keepalived    # create the Keepalived init script below
```

```bash
#!/bin/sh
#
# keepalived   High Availability monitor built upon LVS and VRRP
#
# chkconfig:   - 86 14
# description: Robust keepalive facility to the Linux Virtual Server project \
#              with multilayer TCP/IP stack checks.

### BEGIN INIT INFO
# Provides: keepalived
# Required-Start: $local_fs $network $named $syslog
# Required-Stop: $local_fs $network $named $syslog
# Should-Start: smtpdaemon httpd
# Should-Stop: smtpdaemon httpd
# Default-Start:
# Default-Stop: 0 1 2 3 4 5 6
# Short-Description: High Availability monitor built upon LVS and VRRP
# Description:       Robust keepalive facility to the Linux Virtual Server
#                    project with multilayer TCP/IP stack checks.
### END INIT INFO

# Source function library.
. /etc/rc.d/init.d/functions

exec="/usr/sbin/keepalived"
prog="keepalived"
config="/etc/keepalived/keepalived.conf"

[ -e /etc/sysconfig/$prog ] && . /etc/sysconfig/$prog

lockfile=/var/lock/subsys/keepalived

start() {
    [ -x $exec ] || exit 5
    [ -e $config ] || exit 6
    echo -n $"Starting $prog: "
    daemon $exec $KEEPALIVED_OPTIONS
    retval=$?
    echo
    [ $retval -eq 0 ] && touch $lockfile
    return $retval
}

stop() {
    echo -n $"Stopping $prog: "
    killproc $prog
    retval=$?
    echo
    [ $retval -eq 0 ] && rm -f $lockfile
    return $retval
}

restart() {
    stop
    start
}

reload() {
    echo -n $"Reloading $prog: "
    killproc $prog -1
    retval=$?
    echo
    return $retval
}

force_reload() {
    restart
}

rh_status() {
    status $prog
}

rh_status_q() {
    rh_status &>/dev/null
}

case "$1" in
    start)
        rh_status_q && exit 0
        $1
        ;;
    stop)
        rh_status_q || exit 0
        $1
        ;;
    restart)
        $1
        ;;
    reload)
        rh_status_q || exit 7
        $1
        ;;
    force-reload)
        force_reload
        ;;
    status)
        rh_status
        ;;
    condrestart|try-restart)
        rh_status_q || exit 0
        restart
        ;;
    *)
        echo $"Usage: $0 {start|stop|status|restart|condrestart|try-restart|reload|force-reload}"
        exit 2
esac
exit $?
```

```
[root@lvsmaster ~]# chmod u+x /etc/rc.d/init.d/keepalived
[root@lvsmaster ~]# vi /etc/keepalived/keepalived.conf
```

```
! Configuration File for keepalived
……
   smtp_connect_timeout 30
   router_id LVS_Master        # identifier of the server running Keepalived
}

vrrp_instance VI_1 {
    state MASTER               # Keepalived role
    interface eth0             # network interface monitored for HA
    virtual_router_id 51       # must be the same on Master and Backup within one vrrp_instance
    priority 100               # the larger the value, the higher the priority
    advert_int 1               # interval between sync checks (advertisements) of Master and Backup
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.24.8.100           # virtual IP address
    }
}

virtual_server 172.24.8.100 80 {
    delay_loop 6               # health-check interval
    lb_algo rr                 # load-balancing algorithm
    lb_kind DR                 # LVS forwarding method: NAT, DR or TUN
    persistence_timeout 50     # session persistence time
    protocol TCP               # forwarding protocol

    real_server 172.24.8.12 80 {
        weight 1               # node weight; the larger the value, the higher the weight
        TCP_CHECK {
            connect_timeout 5      # timeout without a response, in seconds
            nb_get_retry 3         # number of retries
            delay_before_retry 3   # interval between retries
        }
    }
    real_server 172.24.8.13 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 5
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

Copy the file to the backup director and change its role and priority there:

```
[root@lvsmaster ~]# scp /etc/keepalived/keepalived.conf 172.24.8.11:/etc/keepalived/keepalived.conf
[root@lvsbackup ~]# vi /etc/keepalived/keepalived.conf
state BACKUP
priority 80
```

2.5 Installing the IPVS management tool

```
[root@lvsmaster ~]# yum -y install ipvsadm
```

2.6 Configuring the Real Server nodes

```
[root@RServer01 ~]# vi /etc/init.d/lvsrs
[root@RServer01 ~]# chmod u+x /etc/init.d/lvsrs
```

2.7 Starting the cluster

1 [root@RServer01 ~]# service httpd start

2 [root@RServer01 ~]# chkconfig httpd on

3 [root@RServer02 ~]# service httpd start

4 [root@RServer02 ~]# chkconfig httpd on

5

6 [root@lvsmaster ~]# service keepalived start

7 [root@lvsmaster ~]# chkconfig keepalived on

8 [root@lvsbackup ~]# service keepalived start

9 [root@lvsbackup ~]# chkconfig keepalived on

10

11 [root@RServer01 ~]# service lvsrs start

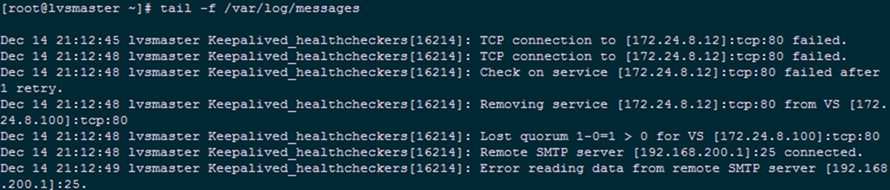

12 [root@RServer02 ~]# service lvsrs start三 测试集群

3.1 High availability test

3.2 Load balancing test

```
[root@RServer01 ~]# echo 'This is Real Server01!' >> /var/www/html/index.html
[root@RServer02 ~]# echo 'This is Real Server02!' >> /var/www/html/index.html
```

3.3 Failover test

```
[root@RServer01 ~]# service httpd stop
```

When one Real Server is shut down, accessing the VIP only shows the web node that is still in the cluster.

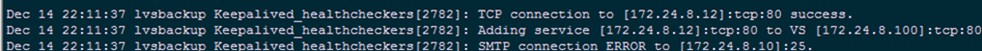

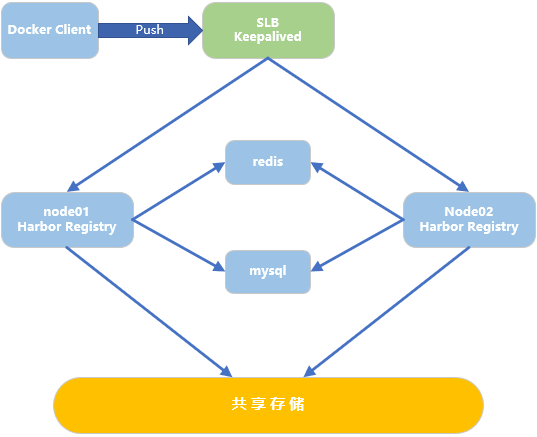

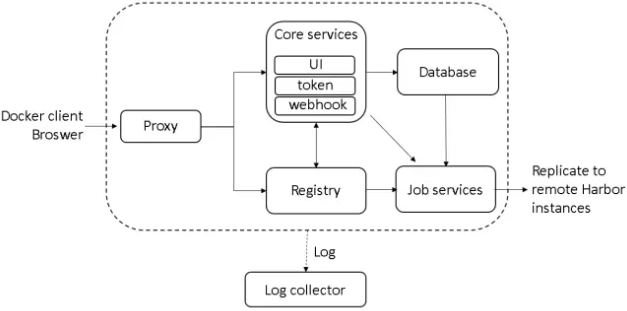
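Note that this configuration sets persistence_timeout 50, so during the load-balancing test a browser that keeps refreshing will keep landing on the same Real Server; the alternation roughly only shows up after the client has been idle longer than the timeout, or with persistence_timeout 0 as in the Docker example earlier. A simplified Python model of that behaviour (the class is illustrative; real IPVS tracks persistence with connection templates in the kernel, which this only approximates):

```python
from typing import Dict, List, Tuple


class PersistentRR:
    """Round robin with per-client persistence: a client idle for no longer than
    `timeout` seconds is sent back to the server it used last."""

    def __init__(self, servers: List[str], timeout: int) -> None:
        self.servers = servers
        self.timeout = timeout
        self._next = 0
        self._table: Dict[str, Tuple[str, float]] = {}  # client -> (server, last seen)

    def pick(self, client: str, now: float) -> str:
        entry = self._table.get(client)
        if entry is not None and self.timeout > 0 and now - entry[1] <= self.timeout:
            server = entry[0]  # still within the persistence window
        else:
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
        self._table[client] = (server, now)
        return server


lb = PersistentRR(["172.24.8.12", "172.24.8.13"], timeout=50)
print([lb.pick("browser", t) for t in (0, 10, 20)])  # the same server three times
print(lb.pick("browser", 100))                       # idle for 80 s > 50 s: next server
```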

014. Docker Harbor + Keepalived + LVS + shared storage high-availability architecture

1 Introduction to multi-Harbor high availability

2 Deployment

2.1 Preparation

| Node | IP address | Notes |
| --- | --- | --- |
| docker01 | 172.24.8.111 | Docker Harbor node01 |
| docker02 | 172.24.8.112 | Docker Harbor node02 |
| docker03 | 172.24.8.113 | MySQL + Redis node |
| docker04 | 172.24.8.114 | Docker client, used to test the registry |
| nfsslb | 172.24.8.71 | Shared NFS storage node; Keepalived node, VIP 172.24.8.200/32 |
| slb02 | 172.24.8.72 | Keepalived node, VIP 172.24.8.200/32 |

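- Install docker and docker-compose (see "009. Docker Compose basics");

- NTP time synchronization (recommended);

- Open the required ports in, or disable, the firewall and SELinux;

- Add a hosts entry on nfsslb and slb02: echo "172.24.8.200 reg.harbor.com" >> /etc/hosts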

2.2 Creating the NFS shares

```
[root@nfsslb ~]# yum -y install nfs-utils*
[root@nfsslb ~]# mkdir /myimages      # shared image storage
[root@nfsslb ~]# mkdir /mydatabase    # database storage
[root@nfsslb ~]# echo -e "/dev/vg01/lv01 /myimages ext4 defaults 0 0\n/dev/vg01/lv02 /mydatabase ext4 defaults 0 0" >> /etc/fstab
[root@nfsslb ~]# mount -a
[root@nfsslb ~]# vi /etc/exports
/myimages 172.24.8.0/24(rw,no_root_squash)
/mydatabase 172.24.8.0/24(rw,no_root_squash)
[root@nfsslb ~]# systemctl start nfs.service
[root@nfsslb ~]# systemctl enable nfs.service
```

2.3 Mounting NFS

```
root@docker01:~# apt-get -y install nfs-common
root@docker02:~# apt-get -y install nfs-common
root@docker03:~# apt-get -y install nfs-common

root@docker01:~# mkdir /data
root@docker02:~# mkdir /data
root@docker03:~# mkdir /database

root@docker01:~# echo "172.24.8.71:/myimages /data nfs defaults,_netdev 0 0" >> /etc/fstab
root@docker02:~# echo "172.24.8.71:/myimages /data nfs defaults,_netdev 0 0" >> /etc/fstab
root@docker03:~# echo "172.24.8.71:/mydatabase /database nfs defaults,_netdev 0 0" >> /etc/fstab

root@docker01:~# mount -a
root@docker02:~# mount -a
root@docker03:~# mount -a

root@docker03:~# mkdir -p /database/mysql
root@docker03:~# mkdir -p /database/redis
```

2.4 Deploying the external MySQL and Redis

1 root@docker03:~# mkdir docker_compose/

2 root@docker03:~# cd docker_compose/

3 root@docker03:~/docker_compose# vi docker-compose.yml

4 version: ''3''

5 services:

6 mysql-server:

7 hostname: mysql-server

8 restart: always

9 container_name: mysql-server

10 image: mysql:5.7

11 volumes:

12 - /database/mysql:/var/lib/mysql

13 command: --character-set-server=utf8

14 ports:

15 - ''3306:3306''

16 environment:

17 MYSQL_ROOT_PASSWORD: x19901123

18 # logging:

19 # driver: "syslog"

20 # options:

21 # syslog-address: "tcp://172.24.8.112:1514"

22 # tag: "mysql"

23 redis:

24 hostname: redis-server

25 container_name: redis-server

26 restart: always

27 image: redis:3

28 volumes:

29 - /database/redis:/data

30 ports:

31 - ''6379:6379''

32 # logging:

33 # driver: "syslog"

34 # options:

35 # syslog-address: "tcp://172.24.8.112:1514"

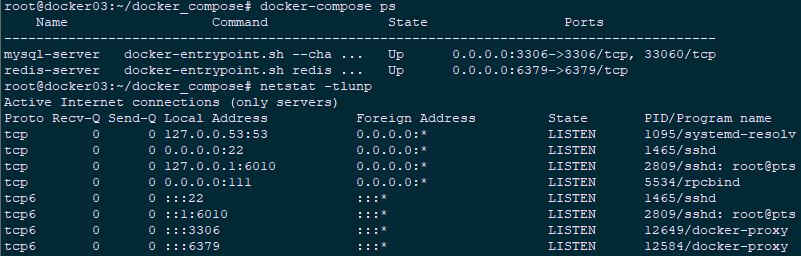

36 # tag: "redis" 1 root@docker03:~/docker_compose# docker-compose up -d

2 root@docker03:~/docker_compose# docker-compose ps #确认docker是否up

3 root@docker03:~/docker_compose# netstat -tlunp #确认相关端口是否启动

2.5 下载harbor

1 root@docker01:~# wget https://storage.googleapis.com/harbor-releases/harbor-offline-installer-v1.5.4.tgz

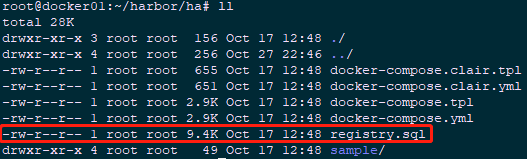

2 root@docker01:~# tar xvf harbor-offline-installer-v1.5.4.tgz2.6 导入registry表

```
root@docker01:~# apt-get -y install mysql-client
root@docker01:~# cd harbor/ha/
root@docker01:~/harbor/ha# ll
```

```
root@docker01:~/harbor/ha# mysql -h172.24.8.113 -uroot -p
mysql> set session sql_mode='STRICT_TRANS_TABLES,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION';   # sql_mode must be changed
mysql> source ./registry.sql    # import the registry tables into the external database
mysql> exit
```

2.7 Modifying the Harbor configuration

```
root@docker01:~/harbor/ha# cd /root/harbor/
root@docker01:~/harbor# vi harbor.cfg      # edit the Harbor configuration file
hostname = 172.24.8.111
db_host = 172.24.8.113
db_password = x19901123
db_port = 3306
db_user = root
redis_url = 172.24.8.113:6379
root@docker01:~/harbor# vi prepare
```

In prepare, find:

```
empty_subj = "/C=/ST=/L=/O=/CN=/"
```

and change it to:

```
empty_subj = "/C=US/ST=California/L=Palo Alto/O=VMware, Inc./OU=Harbor/CN=notarysigner"
```

```
root@docker01:~/harbor# ./prepare      # apply the configuration
root@docker01:~/harbor# cat ./common/config/ui/env      # verify
_REDIS_URL=172.24.8.113:6379
root@docker01:~/harbor# cat ./common/config/adminserver/env | grep MYSQL      # verify
MYSQL_HOST=172.24.8.113
MYSQL_PORT=3306
MYSQL_USR=root
MYSQL_PWD=x19901123
MYSQL_DATABASE=registry
```

2.8 Deploying with docker-compose

```
root@docker01:~/harbor# cp docker-compose.yml docker-compose.yml.bak
root@docker01:~/harbor# cp ha/docker-compose.yml .
root@docker01:~/harbor# vi docker-compose.yml
  log:
    ports:
      - 1514:10514    # the log service must also serve the external Redis and MySQL, so only this needs to change
root@docker01:~/harbor# ./install.sh
```

2.9 Rebuilding the external Redis and MySQL

```
root@docker03:~/docker_compose# docker-compose up -d
root@docker03:~/docker_compose# docker-compose ps     # check that the containers are up
root@docker03:~/docker_compose# netstat -tlunp        # check that the ports are listening
```

2.10 Installing Keepalived

The steps below assume the keepalived-2.0.8 source tarball has already been downloaded to /tmp.

```
[root@nfsslb ~]# yum -y install gcc gcc-c++ make kernel-devel kernel-tools kernel-tools-libs kernel libnl libnl-devel libnfnetlink-devel openssl-devel
[root@nfsslb ~]# cd /tmp/
[root@nfsslb tmp]# tar -zxvf keepalived-2.0.8.tar.gz
[root@nfsslb tmp]# cd keepalived-2.0.8/
[root@nfsslb keepalived-2.0.8]# ./configure --sysconf=/etc --prefix=/usr/local/keepalived
[root@nfsslb keepalived-2.0.8]# make && make install
```

2.11 Configuring Keepalived

[root@nfsslb ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

root@docker01:~# scp harbor/ha/sample/active_active/keepalived_active_active.conf root@172.24.8.71:/etc/keepalived/keepalived.conf

root@docker01:~# scp harbor/ha/sample/active_active/check.sh root@172.24.8.71:/usr/local/bin/check.sh

root@docker01:~# scp harbor/ha/sample/active_active/check.sh root@172.24.8.72:/usr/local/bin/check.sh

[root@nfsslb ~]# chmod u+x /usr/local/bin/check.sh

[root@slb02 ~]# chmod u+x /usr/local/bin/check.sh

[root@nfsslb ~]# vi /etc/keepalived/keepalived.conf

global_defs {

router_id haborlb

}

vrrp_sync_group VG1 {    # keepalived's keyword is vrrp_sync_group (singular)

group {

VI_1

}

}

vrrp_instance VI_1 {

interface eth0

track_interface {

eth0

}

state MASTER

virtual_router_id 51

priority 10

virtual_ipaddress {

172.24.8.200

}

advert_int 1

authentication {

auth_type PASS

auth_pass d0cker

}

}

virtual_server 172.24.8.200 80 {

delay_loop 15

lb_algo rr

lb_kind DR

protocol TCP

nat_mask 255.255.255.0

persistence_timeout 10

real_server 172.24.8.111 80 {

weight 10

MISC_CHECK {

misc_path "/usr/local/bin/check.sh 172.24.8.111"

misc_timeout 5

}

}

real_server 172.24.8.112 80 {

weight 10

MISC_CHECK {

misc_path "/usr/local/bin/check.sh 172.24.8.112"

misc_timeout 5

}

}

}
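On slb02 the file should differ from the MASTER copy only in `state` and `priority`, which is what the copy-and-edit steps that follow change by hand. That edit can also be scripted with sed. In this sketch, `to_backup` is a hypothetical helper and 8 is the BACKUP priority used in this article:

```sh
#!/bin/sh
# Sketch: derive the BACKUP keepalived.conf from the MASTER copy.
# to_backup is a hypothetical helper; only two settings are rewritten.

# to_backup PRIORITY < master.conf > backup.conf
to_backup() {
    # Keep any leading whitespace; rewrite "state MASTER" and the priority value.
    sed -e 's/^\([[:space:]]*\)state[[:space:]][[:space:]]*MASTER/\1state BACKUP/' \
        -e "s/^\([[:space:]]*\)priority[[:space:]][[:space:]]*[0-9][0-9]*/\1priority $1/"
}
```

For example, on nfsslb you could run `to_backup 8 < /etc/keepalived/keepalived.conf | ssh root@172.24.8.72 'cat > /etc/keepalived/keepalived.conf'`. This would replace the scp-and-vi steps and stop the two files from drifting apart whenever the MASTER config changes.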

[root@nfsslb ~]# scp /etc/keepalived/keepalived.conf root@172.24.8.72:/etc/keepalived/keepalived.conf    # copy the Keepalived configuration to slb02

[root@slb02 ~]# vi /etc/keepalived/keepalived.conf    # on slb02, change only these two settings:

state BACKUP

priority 8

2.12 Configure LVS on the SLB nodes

[root@nfsslb ~]# yum -y install ipvsadm

[root@nfsslb ~]# vi ipvsadm.sh

#!/bin/sh

#****************************************************************#

# ScriptName: ipvsadm.sh

# Author: xhy

# Create Date: 2018-10-28 02:40

# Modify Author: xhy

# Modify Date: 2018-10-28 02:40

# Version:

#***************************************************************#

sudo ifconfig eth0:0 172.24.8.200 broadcast 172.24.8.200 netmask 255.255.255.255 up

sudo route add -host 172.24.8.200 dev eth0:0

echo "1" | sudo tee /proc/sys/net/ipv4/ip_forward > /dev/null    # sudo does not cover a shell redirect, so write through tee

sudo ipvsadm -C

sudo ipvsadm -A -t 172.24.8.200:80 -s rr

sudo ipvsadm -a -t 172.24.8.200:80 -r 172.24.8.111:80 -g

sudo ipvsadm -a -t 172.24.8.200:80 -r 172.24.8.112:80 -g

sudo ipvsadm

sudo sysctl -p
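ipvsadm.sh hard-codes one VIP and two real servers. The same rules can be generated for any list of real servers. The sketch below only prints the commands (a dry run), so they can be reviewed before being piped to a root shell. `ipvs_rules` is a hypothetical name. The flags are the ones the script above uses:

- `-C` clears the table.
- `-A` adds a virtual service.
- `-t` gives the TCP address.
- `-s rr` selects round robin.
- `-a` adds a real server.
- `-r` gives the real server.
- `-g` selects direct routing, i.e. LVS-DR.

```sh
#!/bin/sh
# Sketch: print (dry run) the ipvsadm commands that ipvsadm.sh runs, for any
# VIP, port and list of real servers. ipvs_rules is a hypothetical name.

# ipvs_rules VIP PORT RS1 [RS2 ...]
ipvs_rules() {
    vip=$1
    port=$2
    shift 2
    echo "ipvsadm -C"                          # clear the existing IPVS table
    echo "ipvsadm -A -t $vip:$port -s rr"      # TCP virtual service, round robin
    for rs in "$@"; do
        # -g = gatewaying (LVS-DR): replies go straight from the real server
        echo "ipvsadm -a -t $vip:$port -r $rs:$port -g"
    done
}
```

`ipvs_rules 172.24.8.200 80 172.24.8.111 172.24.8.112` prints the same rule set as the script. Appending `| sh` (as root) applies it. Keep in mind that keepalived's virtual_server block already programs a comparable IPVS table when keepalived starts, so the two mechanisms overlap.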

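[root@nfsslb ~]# chmod u+x ipvsadm.sh

[root@nfsslb ~]# chmod +x /etc/rc.d/rc.local    # on CentOS 7, rc.local only runs at boot if it is executable

[root@nfsslb ~]# echo "source /root/ipvsadm.sh" >> /etc/rc.local    # run at boot

[root@nfsslb ~]# ./ipvsadm.sh

2.13 Configure the VIP on the Harbor nodes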

root@docker01:~# vi /etc/init.d/lvsrs

#!/bin/bash

# description: Script to start LVS DR real server.

#

[ -f /etc/rc.d/init.d/functions ] && . /etc/rc.d/init.d/functions    # RHEL helper library; nothing below needs it

VIP=172.24.8.200

# change this to your VIP

case "$1" in

start)

# Start LVS-DR mode for the real server on this machine; suppress ARP replies for the VIP to avoid ARP conflicts.

echo "Start LVS of Real Server!"

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

sudo sysctl -p

;;

stop)

# Stop the LVS-DR real server loopback device(s).

echo "Stop LVS of Real Server!"

/sbin/ifconfig lo:0 down

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

sudo sysctl -p

;;

status)

# Status of LVS-DR real server.

islothere=`/sbin/ifconfig lo:0 | grep $VIP`

isrothere=`netstat -rn | grep "$VIP" | grep -w "lo"`    # the routing table lists the base device (lo), not the alias label

if [ ! "$islothere" -o ! "$isrothere" ];then

# Either the route or the lo:0 device

# not found.

echo "LVS-DR real server Stopped!"

else

echo "LVS-DR real server Running..."

fi

;;

*)

# Invalid entry.

echo "$0: Usage: $0 {start|status|stop}"

exit 1

;;

esac
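The four `echo` lines in the start and stop branches set two ARP parameters on `lo` and `all`:

- `arp_ignore=1` makes the node answer ARP only for addresses configured on the receiving interface, so the VIP on lo:0 is never advertised on eth0.
- `arp_announce=2` keeps the VIP out of the source field of outgoing ARP requests.

Together they leave the director as the only host answering ARP for 172.24.8.200. The sketch below writes the same settings from a function. Taking the /proc/sys root as a parameter is an assumption made so it can be dry-run against a scratch directory, and `set_rs_arp` is a hypothetical name:

```sh
#!/bin/sh
# Sketch: the ARP settings written by lvsrs start/stop.
# ROOT is normally /proc/sys; set_rs_arp is a hypothetical name.

# set_rs_arp ROOT on|off
set_rs_arp() {
    root=$1
    if [ "$2" = on ]; then
        ign=1; ann=2      # real-server values used by "lvsrs start"
    else
        ign=0; ann=0      # kernel defaults restored by "lvsrs stop"
    fi
    for ifc in lo all; do
        echo "$ign" > "$root/net/ipv4/conf/$ifc/arp_ignore"   || return 1
        echo "$ann" > "$root/net/ipv4/conf/$ifc/arp_announce" || return 1
    done
}
```

Run as root, `set_rs_arp /proc/sys on` corresponds to the start branch and `set_rs_arp /proc/sys off` to the stop branch.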

root@docker01:~# chmod u+x /etc/init.d/lvsrs

root@docker02:~# chmod u+x /etc/init.d/lvsrs

2.14 Start the services

root@docker01:~# service lvsrs start

root@docker02:~# service lvsrs start

[root@nfsslb ~]# systemctl start keepalived.service

[root@nfsslb ~]# systemctl enable keepalived.service

[root@slb02 ~]# systemctl start keepalived.service

[root@slb02 ~]# systemctl enable keepalived.service

2.15 Verify

root@docker01:~# ip addr    # check that the VIP is up on docker01/02 and on the SLB

3 Testing and Verification

1 root@docker04:~# vi /etc/hosts

2 172.24.8.200 reg.harbor.com

3 root@docker04:~# vi /etc/docker/daemon.json

4 {

5 "insecure-registries": ["http://reg.harbor.com"]

6 }

7 root@docker04:~# systemctl restart docker.service

8 若是信任CA机构颁发的证书,相应关闭daemon.json中的配置。

9 root@docker04:~# docker login reg.harbor.com #登录registry

10 Username: admin

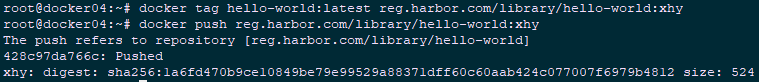

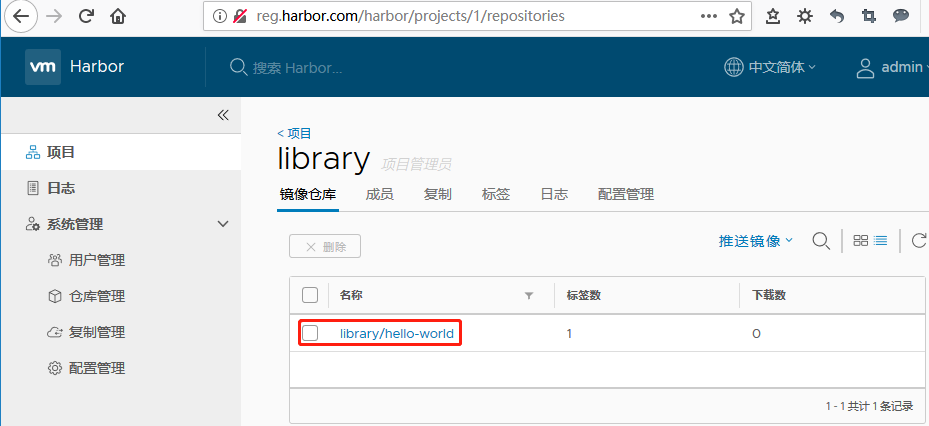

11 Password: Harbor12345 1 root@docker04:~# docker pull hello-world

2 root@docker04:~# docker tag hello-world:latest reg.harbor.com/library/hello-world:xhy

3 root@docker04:~# docker push reg.harbor.com/library/hello-world:xhy
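The tag-and-push steps can be wrapped in a small helper. This sketch assumes the registry name reg.harbor.com and Harbor's default `library` project from this test. `harbor_ref`, `harbor_push`, `run` and the `DRY_RUN` switch are hypothetical names:

```sh
#!/bin/sh
# Sketch: retag a local image for Harbor and push it. REGISTRY defaults to
# this article's reg.harbor.com; all function names here are hypothetical.
# Run "docker login" against the registry first.

REGISTRY=${REGISTRY:-reg.harbor.com}

# harbor_ref IMAGE PROJECT TAG: build REGISTRY/PROJECT/NAME:TAG
harbor_ref() {
    name=${1##*/}       # last path component, e.g. hello-world:latest
    name=${name%%:*}    # drop the source tag -> hello-world
    echo "$REGISTRY/$2/$name:$3"
}

# run CMD...: execute the command, or only print it when DRY_RUN=1
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# harbor_push IMAGE PROJECT TAG
harbor_push() {
    ref=$(harbor_ref "$1" "$2" "$3")
    run docker tag "$1" "$ref" && run docker push "$ref"
}
```

`DRY_RUN=1 harbor_push hello-world:latest library xhy` prints the same two docker commands used above. Without `DRY_RUN`, it runs them.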

Original source: https://www.cnblogs.com/itzgr/p/10166760.html

CentOS 7: A Brief Guide to Building a Highly Available nginx Server with keepalived

For installing nginx, see the separate tutorial.

Test environment: CentOS 7. Master server 192.168.126.130, slave server 192.168.126.129, VIP 192.168.126.188.

Install keepalived on both servers:

[root@promote ~]# yum install -y keepalived

[root@promote ~]# cd /etc/keepalived/

# On the master: change the test page (optional)

[root@promote keepalived]# echo "master nginx web server." > /usr/local/nginx/html/index.html

# On the slave: change the test page (optional)

[root@promote keepalived]# echo "slave nginx web server." > /usr/local/nginx/html/index.html

[root@promote keepalived]# vim keepalived.conf

The master's configuration:

[root@promote ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.126.188

}

}

[root@promote ~]#

The vrrp_instance block again, with the key settings annotated:

vrrp_instance VI_1 {

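state MASTER #initial role (MASTER/BACKUP); when the master goes down, the backup takes over

interface ens33 #network interface name

virtual_router_id 51 #must be identical on master and slave

priority 100 #priority; the node with the higher value becomes MASTER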

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.126.188 #VIP address

}

}
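The priority setting above decides the VRRP election. Among the nodes still sending advertisements, the one with the highest priority holds the VIP: 100 for the master and 90 for the slave here. This is a simplified sketch of that rule. Real VRRP also breaks ties in favour of the higher IP address and honours preemption settings, which this ignores. `vrrp_master` and the node labels are hypothetical:

```sh
#!/bin/sh
# Simplified sketch of the VRRP election: the highest priority among live
# nodes wins. Ties (decided by IP address in real VRRP) are not modelled.

# vrrp_master NAME:PRIORITY ...: print the NAME that would own the VIP
vrrp_master() {
    best=""
    best_prio=-1
    for node in "$@"; do
        name=${node%%:*}
        prio=${node##*:}
        if [ "$prio" -gt "$best_prio" ]; then
            best=$name
            best_prio=$prio
        fi
    done
    echo "$best"
}
```

`vrrp_master master:100 slave:90` prints `master`. Once the master stops advertising, `vrrp_master slave:90` prints `slave`, which is the failover the rest of this guide demonstrates.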

The slave's configuration:

[root@promote sbin]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.126.188

}

}

[root@promote sbin]#

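Careful readers will notice the notification_email and smtp_server (192.168.200.1) settings, which come from keepalived's sample configuration. Mail notification is not used in this guide, so they can be removed or adjusted. Also note vrrp_strict: with it enabled, keepalived may install firewall rules that drop traffic to the VIP. If the VIP does not answer ping or HTTP requests, try commenting out vrrp_strict on both nodes.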

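Start keepalived on both the master and the slave.

[root@promote sbin]# service keepalived start

Open 192.168.126.130 in a browser, or check from the command line. To exercise failover, request the VIP 192.168.126.188 instead of a node's own address.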

[root@promote ~]# curl -i 192.168.126.130

HTTP/1.1 200 OK

Server: nginx/1.14.2

Date: Mon, 08 Apr 2019 04:23:24 GMT

Content-Type: text/html

Content-Length: 25

Last-Modified: Mon, 08 Apr 2019 04:19:05 GMT

Connection: keep-alive

ETag: "5caacbb9-19"

Accept-Ranges: bytes

master nginx web server.

[root@promote ~]#

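The slave's response is essentially the same. With the master shut down, the page is still reachable in the browser through the VIP. First, with both nodes running, check the IP addresses; the VIP 192.168.126.188 is bound only on the master: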

#master

[root@promote ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:f0:04:39 brd ff:ff:ff:ff:ff:ff

inet 192.168.126.130/24 brd 192.168.126.255 scope global noprefixroute dynamic ens33

valid_lft 1796sec preferred_lft 1796sec

inet 192.168.126.188/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::ccc2:d1b:1fc4:8ce2/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::c354:a1e1:869f:7ae1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@promote ~]#

#slave

[root@promote ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c3:f7:cb brd ff:ff:ff:ff:ff:ff

inet 192.168.126.129/24 brd 192.168.126.255 scope global noprefixroute dynamic ens33

valid_lft 1673sec preferred_lft 1673sec

inet6 fe80::ccc2:d1b:1fc4:8ce2/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@promote ~]#
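Instead of scanning the `ip addr` listings by eye, each node can check whether it currently holds the VIP. This sketch reads `ip addr` output on stdin. `has_vip` is a hypothetical name, and 192.168.126.188 is this article's VIP:

```sh
#!/bin/sh
# Sketch: tell from "ip addr" output (stdin) whether this node holds the VIP.
# has_vip is a hypothetical name; pass your own VIP.

# has_vip VIP: succeed if a line containing "inet VIP/" is present
has_vip() {
    # -F matches literally (dots are not wildcards); the trailing "/" keeps
    # 192.168.126.18 from matching 192.168.126.188.
    grep -qF "inet $1/"
}
```

On either node, `ip addr show ens33 | has_vip 192.168.126.188 && echo "holds VIP" || echo "no VIP"` gives the same answer as the listings above.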

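Now stop keepalived on the master to simulate a failure, then check the IP addresses again.

# simulate the master going down (run on the master)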

[root@promote ~]# service keepalived stop

Redirecting to /bin/systemctl stop keepalived.service

[root@promote ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:f0:04:39 brd ff:ff:ff:ff:ff:ff

inet 192.168.126.130/24 brd 192.168.126.255 scope global noprefixroute dynamic ens33

valid_lft 1717sec preferred_lft 1717sec

inet6 fe80::ccc2:d1b:1fc4:8ce2/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::c354:a1e1:869f:7ae1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@promote ~]#

# on the slave: it has automatically taken over as MASTER and now holds the VIP

[root@promote ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:c3:f7:cb brd ff:ff:ff:ff:ff:ff

inet 192.168.126.129/24 brd 192.168.126.255 scope global noprefixroute dynamic ens33

valid_lft 1564sec preferred_lft 1564sec

inet 192.168.126.188/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::ccc2:d1b:1fc4:8ce2/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@promote ~]#

The experiment shows the nginx web service failing over automatically.
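In this configuration keepalived only reacts when the whole node, or keepalived itself, stops. If nginx crashes while keepalived keeps running, the VIP stays on a node that cannot serve pages. keepalived's usual remedy has two parts:

- A `vrrp_script` block, for example `vrrp_script chk_nginx { script "/etc/keepalived/check_nginx.sh" interval 2 }`.
- A `track_script { chk_nginx }` reference inside `vrrp_instance VI_1`.

With no weight set, a failing tracked script puts the instance into the FAULT state, so the slave takes over. Below is a sketch of such a check script. The path /etc/keepalived/check_nginx.sh and the function names are assumptions. So is the pid file location, which assumes nginx was built with the prefix /usr/local/nginx, as the html path above suggests:

```sh
#!/bin/sh
# Sketch of a hypothetical /etc/keepalived/check_nginx.sh: succeed while nginx
# runs, fail otherwise. Override the pid file path with NGINX_PID.

# pid_alive PIDFILE: 0 if the file holds the pid of a running process
pid_alive() {
    [ -r "$1" ] || return 1
    pid=$(cat "$1")
    case $pid in
        ''|*[!0-9]*) return 1 ;;   # empty or not a number
    esac
    kill -0 "$pid" 2>/dev/null     # signal 0: existence check only
}

check_nginx() {
    pid_alive "${NGINX_PID:-/usr/local/nginx/logs/nginx.pid}"
}
```

To use it, save the script as /etc/keepalived/check_nginx.sh, add a final line `check_nginx` so that its exit status becomes the script's, and make it executable. The vrrp_script block described above can then reference it on both nodes.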

Installing LVS Load Balancing in a CentOS Docker Container (Part 2): keepalived

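Environment:

Both the Docker container and the host run CentOS 7.3.

The previous post, Installing LVS Load Balancing in a CentOS Docker Container (Part 1): ipvsadm, described a problem encountered when installing ipvsadm in a container.

This post covers a similar problem: to use Keepalived inside the container, Keepalived must also be installed on the host.

Otherwise, running ipvsadm inside the container fails with an error.

1. Install keepalived inside the Docker container

yum install -y keepalived

2. Install keepalived on the Docker host

yum install -y keepalived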
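The host needs the software too because a container shares the host's kernel. IPVS lives in the host kernel's ip_vs module, which an ordinary container cannot load by itself. Presumably this is why installing Keepalived on the host helps: running Keepalived or ipvsadm there loads the module. Running `modprobe ip_vs` on the host is an alternative we assume here; it is not a step from the original post. This sketch checks whether a module is loaded. `module_loaded` is a hypothetical name:

```sh
#!/bin/sh
# Sketch: check whether a kernel module (e.g. ip_vs) is loaded on the host.
# module_loaded is a hypothetical name; the file argument exists for testing.

# module_loaded NAME [MODULES_FILE]
module_loaded() {
    # Each /proc/modules line starts with the module name, then size, refcount...
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}
```

On the host, `module_loaded ip_vs || modprobe ip_vs` makes sure IPVS is available before ipvsadm is run inside the container.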

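That concludes our walkthrough of building a simple DNS + LVS (keepAlived) + OpenResty cluster under Docker (building a proxy server with Docker). Thanks for reading. For more on 003. Building an LVS High-Availability Cluster with Keepalived, 014. Docker Harbor + Keepalived + LVS + Shared Storage High-Availability Architecture, CentOS 7: A Brief Guide to Building a Highly Available nginx Server with keepalived, and Installing LVS Load Balancing in a CentOS Docker Container (Part 2): keepalived, search this site.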
