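This article explains why Sonar Server fails to run when Elasticsearch refuses to run as the root user (Elasticsearch will not start). It also collects related material: integrating Docker, Spring Boot, Elasticsearch, Kibana, and elasticsearch-head (a detailed walkthrough); Dockerized Elasticsearch and FSCrawler failing to create an Elasticsearch client, with the crawler disabled and the connection refused; the root user in Elasticsearch 2.4.0 inside a Docker container; and running Elasticsearch in Docker together with a Chinese-localized Kibana.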

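Contents:

- Sonar Server fails to run because Elasticsearch cannot run as the root user (Elasticsearch will not start)

- Integrating Docker + Spring Boot + Elasticsearch + Kibana + elasticsearch-head (a detailed walkthrough)

- Dockerized Elasticsearch and FSCrawler: failed to create Elasticsearch client, disabling crawler… Connection refused

- The root user in Elasticsearch 2.4.0 inside a Docker container

- Running Elasticsearch in Docker and running a Chinese-localized Kibana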

Sonar Server fails to run because Elasticsearch cannot run as the root user (Elasticsearch will not start)

I am trying to install SonarQube and followed the steps from a SonarQube setup tutorial.

To summarize:

- Download Sonar and move it to /opt/sonar.

- Add these settings to /opt/sonar/conf/sonar.properties:

sonar.jdbc.username=sonar
sonar.jdbc.password=sonar
sonar.jdbc.url=jdbc:mysql://localhost:3306/sonar?useUnicode=true&characterEncoding=utf8&rewriteBatchedStatements=true&useConfigs=maxPerformance

and

sonar.web.host=127.0.0.1
sonar.web.context=/sonar
sonar.web.port=9000

- Set up Sonar as a service:

sudo cp /opt/sonar/bin/linux-x86-64/sonar.sh /etc/init.d/sonar
sudo gedit /etc/init.d/sonar

Insert two lines:

SONAR_HOME=/opt/sonar
PLATFORM=linux-x86-64

Modify the following lines:

WRAPPER_CMD="${SONAR_HOME}/bin/${PLATFORM}/wrapper"
WRAPPER_CONF="${SONAR_HOME}/conf/wrapper.conf"
…
PIDDIR="/var/run"

Register as a Linux service:

sudo update-rc.d -f sonar remove
sudo chmod 755 /etc/init.d/sonar
sudo update-rc.d sonar defaults

After completing these steps, I ran sudo /etc/init.d/sonar start and tried to open Sonar at localhost:9000/sonar.

Strangely, it does not run.

When I run sudo /etc/init.d/sonar status, I find that Sonar stays up for only a few seconds, and the log files show errors like the following:

es.log:

2017.12.09 18:05:14 ERROR es[][o.e.b.Bootstrap] Exception
java.lang.RuntimeException: can not run elasticsearch as root
    at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:106) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:195) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:342) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:132) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:123) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:70) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:134) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.cli.Command.main(Command.java:90) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:91) [elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:84) [elasticsearch-5.6.3.jar:5.6.3]
2017.12.09 18:05:14 WARN es[][o.e.b.ElasticsearchUncaughtExceptionHandler] uncaught exception in thread [main]
org.elasticsearch.bootstrap.StartupException: java.lang.RuntimeException: can not run elasticsearch as root
    at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:136) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:123) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:70) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:134) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:91) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:84) ~[elasticsearch-5.6.3.jar:5.6.3]
Caused by: java.lang.RuntimeException: can not run elasticsearch as root
    at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:106) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:195) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:342) ~[elasticsearch-5.6.3.jar:5.6.3]
    at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:132) ~[elasticsearch-5.6.3.jar:5.6.3]
    ... 6 more

Any suggestions?

Answer 1

Take the Sonar files out of root ownership and try running Sonar with regular user permissions.

chown <another user>:<user group> sonar.sh
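The answer can be sketched as a small script. This is a minimal sketch, not the answerer's exact procedure: the account name sonar is an assumption (any unprivileged account would do), while /opt/sonar and the linux-x86-64 wrapper path come from the question. It changes ownership of the whole installation rather than only sonar.sh, on the assumption that the embedded Elasticsearch also needs to write to the data, logs, and temp directories.

```shell
#!/bin/sh
# SONAR_USER is a hypothetical account name; SONAR_HOME is the path from the question.
SONAR_USER="${SONAR_USER:-sonar}"
SONAR_HOME="${SONAR_HOME:-/opt/sonar}"

# Succeeds (exit status 0) when the given numeric uid is root's.
is_root_uid() {
    [ "$1" -eq 0 ]
}

# Starts SonarQube as the unprivileged account; the caller needs sudo rights.
start_sonar_unprivileged() {
    # Create the account only if it does not exist yet.
    id "$SONAR_USER" >/dev/null 2>&1 || sudo useradd --system --no-create-home "$SONAR_USER"
    # "user:" (trailing colon) also sets the group to that user's login group.
    sudo chown -R "$SONAR_USER": "$SONAR_HOME"
    # Launch the wrapper script as that account instead of root.
    sudo -u "$SONAR_USER" "$SONAR_HOME/bin/linux-x86-64/sonar.sh" start
}

# Act only when called with "start", so sourcing this file has no side effects.
if [ "${1:-}" = "start" ]; then
    if is_root_uid "$(id -u)"; then
        echo "invoked as root: Elasticsearch would refuse to start, switching user"
    fi
    start_sonar_unprivileged
fi
```

Saved under a name of your choice, the script would be invoked with the single argument start; afterwards es.log should no longer contain the "can not run elasticsearch as root" exception.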

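Integrating Docker + Spring Boot + Elasticsearch + Kibana + elasticsearch-head (a detailed walkthrough)

I did not originally plan to write this post, but many people keep asking about Elasticsearch pitfalls, so I decided to write detailed installation instructions with a simple test demo. If you follow my steps one at a time, the test should succeed.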

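Part 1. Installing Docker

I am using CentOS 6; the installation commands are as follows.

1. First install Docker from the EPEL repository:

yum install -y http://mirrors.yun-idc.com/epel/6/i386/epel-release-6-8.noarch.rpm
yum install -y docker-io

After installation, start Docker with service docker start.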

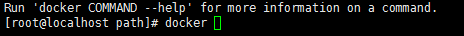

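Type docker in the console; if the usage screen shown in the screenshot appears, the installation succeeded.

If the package check fails, the cause is that docker was installed before docker-io. Remove it with yum remove docker, then run yum install -y docker-io again.

If yum still reports no package available..., install Docker from an RPM with rpm -ivh, or go through the Docker repository with yum install. Downloads: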

Ubuntu/Debian: curl -sSL https://get.docker.com | sh

Linux 64bit binary: https://get.docker.com/builds/Linux/x86_64/docker-1.7.1

Darwin/OSX 64bit client binary: https://get.docker.com/builds/Darwin/x86_64/docker-1.7.1

Darwin/OSX 32bit client binary: https://get.docker.com/builds/Darwin/i386/docker-1.7.1

Linux 64bit tgz: https://get.docker.com/builds/Linux/x86_64/docker-1.7.1.tgz

Windows 64bit client binary: https://get.docker.com/builds/Windows/x86_64/docker-1.7.1.exe

Windows 32bit client binary: https://get.docker.com/builds/Windows/i386/docker-1.7.1.exe

Centos 6/RHEL 6: https://get.docker.com/rpm/1.7.1/centos-6/RPMS/x86_64/docker-engine-1.7.1-1.el6.x86_64.rpm

Centos 7/RHEL 7: https://get.docker.com/rpm/1.7.1/centos-7/RPMS/x86_64/docker-engine-1.7.1-1.el7.centos.x86_64.rpm

Fedora 20: https://get.docker.com/rpm/1.7.1/fedora-20/RPMS/x86_64/docker-engine-1.7.1-1.fc20.x86_64.rpm

Fedora 21: https://get.docker.com/rpm/1.7.1/fedora-21/RPMS/x86_64/docker-engine-1.7.1-1.fc21.x86_64.rpm

Fedora 22: https://get.docker.com/rpm/1.7.1/fedora-22/RPMS/x86_64/docker-engine-1.7.1-1.fc22.x86_64.rpm

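2. Download the Elasticsearch image

Before pulling the elasticsearch image, you can configure a Docker registry mirror to speed up downloads. I use the Alibaba Cloud mirror: log in to the Alibaba Cloud website, go to Products --> Cloud Computing Basics --> Container Registry, open the management console, and copy the accelerator address.

On the virtual machine, run vim /etc/sysconfig/docker and add the following line:

other_args="--registry-mirror=https://xxxx.aliyuncs.com"

Save and quit with :wq.

Restart the Docker service with service docker restart.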

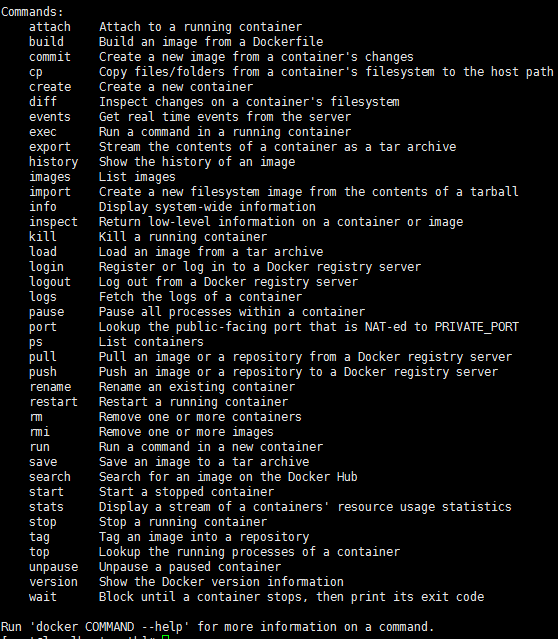

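Run ps -aux|grep docker to inspect the Docker process; if the output shows the --registry-mirror argument as in the screenshot, the mirror is configured.

Images can now be pulled quickly: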

docker pull elasticsearch

docker pull kibana

docker pull mobz/elasticsearch-head:5

That pulls the three images we need. Next, create the data directory and the configuration file, then start the container:

mkdir -p /opt/elasticsearch/data
vim /opt/elasticsearch/elasticsearch.yml
docker run --name elasticsearch -p 9200:9200 -p 9300:9300 -p 5601:5601 -e "discovery.type=single-node" -v /opt/elasticsearch/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /opt/elasticsearch/data:/usr/share/elasticsearch/data -d elasticsearch

Contents of elasticsearch.yml:

cluster.name: elasticsearch_cluster

node.name: node-master

node.master: true

node.data: true

http.port: 9200

network.host: 0.0.0.0

network.publish_host: 192.168.6.77

discovery.zen.ping.unicast.hosts: ["192.168.6.77","192.168.6.78"]

http.host: 0.0.0.0

http.cors.enabled: true

http.cors.allow-origin: "*"

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

# Uncomment the following lines for a production cluster deployment

#transport.host: 0.0.0.0

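discovery.zen.minimum_master_nodes: 1

Note that my Elasticsearch nodes run on two separate servers, so the port can be omitted from the unicast host list because both use 9300. If your cluster nodes share a single OS, each node needs its own port number.

cluster.name: elasticsearch_cluster is the cluster name and must be identical on every node of the cluster.

node.name: node-master is the node name and must be different on every node.

http.cors.enabled: true and http.cors.allow-origin: "*" enable cross-origin requests, which elasticsearch-head needs in order to query the cluster from the browser.

discovery.zen.minimum_master_nodes: 1 is the minimum number of master-eligible nodes that must be visible before a master can be elected.

network.publish_host: 192.168.6.77 is the real IP address this node advertises to the other nodes.

network.host: 0.0.0.0 is acceptable in a development environment; a real production environment should specify a concrete IP address.

The elasticsearch container was started with one extra port mapping, -p 5601:5601, so that Kibana can use that container's port mapping and needs no mapping of its own.

Start Kibana:

docker run --name kibana -e ELASTICSEARCH_URL=http://127.0.0.1:9200 --net=container:elasticsearch -d kibana

--net=container:elasticsearch makes Kibana share the network stack of the elasticsearch container, and with it the port mappings.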

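At this point Elasticsearch usually fails to start, and some Linux settings need to be changed, such as the file descriptor limit.

Two files need to be modified. Switch to the root user first.

1. vi /etc/security/limits.conf and set the following:

* soft nofile 65536

* hard nofile 131072

2. vi /etc/sysctl.conf and add:

vm.max_map_count=655360

Run sysctl -p to apply the change. Once the configuration is done, run the following commands: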

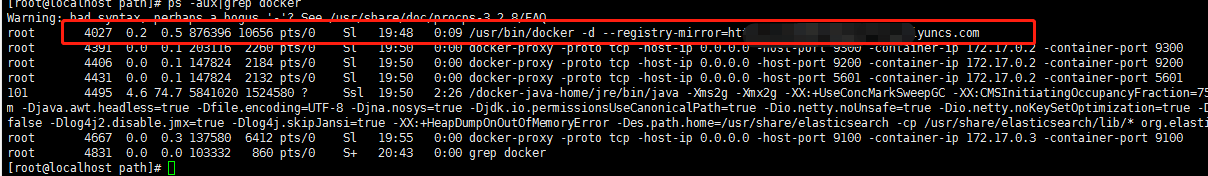

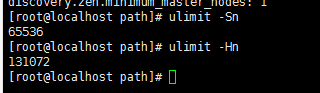

ulimit -Sn

ulimit -Hn

If the configured values are printed (65536 and 131072), the configuration succeeded.
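The same verification can be scripted. The sketch below is an illustration under stated assumptions: the helper names are hypothetical, 65536 is the nofile value set in limits.conf above, and 262144 is the vm.max_map_count minimum that Elasticsearch's bootstrap check reports (the 655360 configured above exceeds it).

```shell
#!/bin/sh
# Thresholds: nofile from the limits.conf edit; max_map_count is the
# minimum Elasticsearch's bootstrap check asks for.
MIN_NOFILE=65536
MIN_MAP_COUNT=262144

# Succeeds when the first value is at least the second; "unlimited" always passes.
meets_minimum() {
    [ "$1" = "unlimited" ] && return 0
    [ "$1" -ge "$2" ]
}

# Prints one PASS/FAIL line for a named setting.
report() {
    if meets_minimum "$2" "$3"; then
        echo "PASS $1=$2 (minimum $3)"
    else
        echo "FAIL $1=$2 (minimum $3)"
    fi
}

# Reads the live values from the current shell and the kernel.
check_host_limits() {
    report "nofile-soft" "$(ulimit -Sn)" "$MIN_NOFILE"
    report "nofile-hard" "$(ulimit -Hn)" "$MIN_NOFILE"
    report "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" "$MIN_MAP_COUNT"
}
```

Calling check_host_limits in a fresh login shell prints one line per setting; limits.conf changes only take effect for new sessions, so a FAIL in the old shell is expected.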

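Restarting the container at this point may still fail (if it does not, you are lucky); restarting Docker fixes it.

service docker restart

Then restart the elasticsearch container:

docker restart elasticsearch

Open ip:9200 in a browser.

If a response like the following appears, Elasticsearch started successfully: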

{

"name" : "node-master",

"cluster_name" : "elasticsearch_cluster",

"cluster_uuid" : "QzD1rYUOSqmpoXPYQqolqQ",

"version" : {

"number" : "5.6.12",

"build_hash" : "cfe3d9f",

"build_date" : "2018-09-10T20:12:43.732Z",

"build_snapshot" : false,

"lucene_version" : "6.6.1"

},

"tagline" : "You Know, for Search"

}
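The browser check can also be done from a shell. This is a minimal sketch, assuming curl and sed are available on the host; es_version is a hypothetical helper name, and 192.168.6.77 is the first node's address from the configuration above.

```shell
#!/bin/sh
# Reads the JSON root response of an Elasticsearch node on stdin and
# prints the value of the "number" field (the node's version).
es_version() {
    sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage against a live node (address taken from the configuration above):
#   curl -s http://192.168.6.77:9200 | es_version
```

An empty result means the node did not answer with the expected JSON, which usually points back at the container logs.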

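Next, repeat the same steps on the other OS to start Elasticsearch there. Two things differ on the second host: first, the -p 5601:5601 port mapping is not needed; second, elasticsearch.yml differs in a few settings:

cluster.name: elasticsearch_cluster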

node.name: node-slave

node.master: false

node.data: true

http.port: 9200

network.host: 0.0.0.0

network.publish_host: 192.168.6.78

discovery.zen.ping.unicast.hosts: ["192.168.6.77:9300","192.168.6.78:9300"]

http.host: 0.0.0.0

http.cors.enabled: true

http.cors.allow-origin: "*"

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

# Uncomment the following lines for a production cluster deployment

# #transport.host: 0.0.0.0

# #discovery.zen.minimum_master_nodes: 1

Once Elasticsearch is running on both hosts, Kibana and head can be started.

Restart Kibana:

docker restart kibana

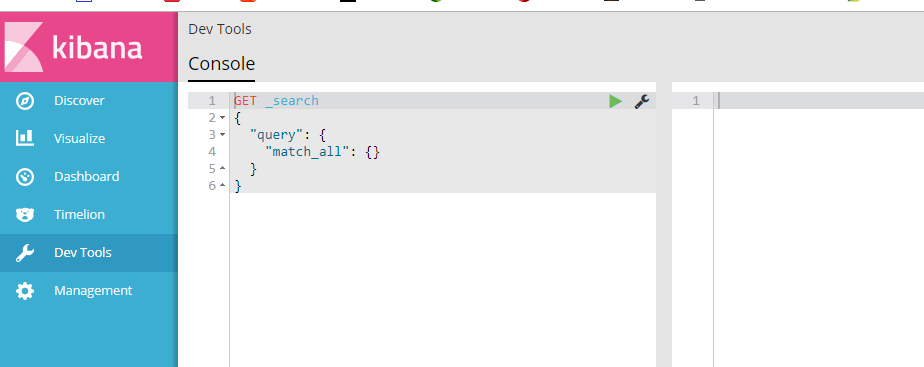

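Open http://ip:5601.

If a page like the one in the screenshot appears, Kibana started successfully.

Start elasticsearch-head to monitor the Elasticsearch service:

docker run -d -p 9100:9100 mobz/elasticsearch-head:5

Open ip:9100.

If the head interface appears, head started successfully.

That completes the preparation. Next, create a Spring Boot project and add this dependency to the pom: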

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-elasticsearch</artifactId>

</dependency>

Other MVC and MyBatis configuration is not repeated here. application.yml is configured as follows:

spring:
  data:
    elasticsearch:
      cluster-nodes: 192.168.6.77:9300
      repositories.enabled: true
      cluster-name: elasticsearch_cluster

Write the repository class UserRepository:

package com.smkj.user.repository;

import org.springframework.data.elasticsearch.repository.ElasticsearchRepository;

import org.springframework.stereotype.Component;

import com.smkj.user.entity.XymApiUser;

/**

* @author dalaoyang

* @Description

* @project springboot_learn

* @package com.dalaoyang.repository

* @email yangyang@dalaoyang.cn

* @date 2018/5/4

*/

@Component

public interface UserRepository extends ElasticsearchRepository<XymApiUser,String> {

}

Add the following annotations to the entity class:

@SuppressWarnings("serial")

@AllArgsConstructor

@NoArgsConstructor

@Data

@Accessors(chain=true)

@Document(indexName = "testuser",type = "XymApiUser")

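The last annotation is required for Elasticsearch; it works like a database and table mapping.

The annotations above it are there to recommend Lombok, a handy little tool; look up its usage if you are interested, or simply delete them if you do not like it.

Then write the controller: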

package com.smkj.user.controller;

import java.util.List;

import org.elasticsearch.action.get.GetResponse;
import org.elasticsearch.client.transport.TransportClient;
import org.elasticsearch.index.query.QueryBuilder;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.index.query.functionscore.FunctionScoreQueryBuilder;
import org.elasticsearch.index.query.functionscore.ScoreFunctionBuilders;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.domain.Page;
import org.springframework.data.domain.PageRequest;
import org.springframework.data.domain.Pageable;
import org.springframework.data.elasticsearch.core.query.NativeSearchQueryBuilder;
import org.springframework.data.elasticsearch.core.query.SearchQuery;
import org.springframework.data.web.PageableDefault;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RestController;

import com.google.common.collect.Lists;
import com.smkj.user.entity.XymApiUser;
import com.smkj.user.repository.UserRepository;
import com.smkj.user.service.UserService;

@RestController
public class UserController {

    @Autowired
    private UserService userService;

    @Autowired
    private UserRepository userRepository;

    @Autowired
    private TransportClient client;

    /**
     * Loads a user from the database by primary key and indexes it into Elasticsearch.
     */
    @GetMapping("user/{id}")
    public XymApiUser getUserById(@PathVariable String id) {
        XymApiUser apiuser = userService.selectByPrimaryKey(id);
        userRepository.save(apiuser);
        System.out.println(apiuser.toString());
        return apiuser;
    }

    /**
     * Fetches a single document from Elasticsearch by id.
     *
     * @param id       document id
     * @param pageable page = page number, value = page size (declared but unused by this lookup)
     */
    @GetMapping("get/{id}")
    public ResponseEntity search(@PathVariable String id, @PageableDefault(page = 1, value = 10) Pageable pageable) {
        if (id.isEmpty()) {
            return new ResponseEntity(HttpStatus.NOT_FOUND);
        }
        // Query Elasticsearch by index, type, and id.
        // The type name is case-sensitive and must match the one declared in @Document.
        GetResponse response = client.prepareGet("testuser", "XymApiUser", id).get();
        if (!response.isExists()) {
            return new ResponseEntity(HttpStatus.NOT_FOUND);
        }
        // Return the document source that was found.
        return new ResponseEntity(response.getSource(), HttpStatus.OK);
    }

    /**
     * Paged search: prefix query on the cardNum field.
     *
     * @param page zero-based page index
     * @param size page size
     * @param q    prefix to match against cardNum
     * @return the matching page of users
     */
    @GetMapping("/{page}/{size}/{q}")
    public Page<XymApiUser> searchCity(@PathVariable Integer page, @PathVariable Integer size, @PathVariable String q) {
        SearchQuery searchQuery = new NativeSearchQueryBuilder()
                .withQuery(QueryBuilders.prefixQuery("cardNum", q))
                .withPageable(PageRequest.of(page, size))
                .build();
        Page<XymApiUser> pagea = userRepository.search(searchQuery);
        System.out.println(pagea.getTotalPages());
        return pagea;
    }
}

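Visit http://localhost:8080/0/10/6212263602070571344

That is all; leave a comment if anything is unclear.

Dockerized Elasticsearch and FSCrawler: failed to create Elasticsearch client, disabling crawler… Connection refused

The first time fscrawler runs (i.e. docker-compose run fscrawler), it creates /config/{fscrawer_job}/_settings.yml with the following default setting: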

elasticsearch:
  nodes:
  - url: "http://127.0.0.1:9200"

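This makes fscrawler try to connect to localhost (i.e. 127.0.0.1). When fscrawler runs inside a Docker container, that fails, because it is trying to connect to the CONTAINER's localhost. In my case this was especially confusing because Elasticsearch WAS reachable at localhost, but on the localhost of my physical machine, not the container's. After I changed the URL, fscrawler could connect to the network address where Elasticsearch actually lives.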

elasticsearch:
  nodes:
  - url: "http://elasticsearch:9200"
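The URL change can also be applied without opening an editor. A minimal sketch, assuming the generated file still contains the default http://127.0.0.1:9200 URL; point_fscrawler_at_service is a hypothetical helper name, and the default host elasticsearch is the compose service name used in this answer.

```shell
#!/bin/sh
# Rewrites the default loopback URL in an FSCrawler _settings.yml so that it
# points at the Elasticsearch service name on the shared Docker network.
point_fscrawler_at_service() {
    settings_file="$1"
    service_host="${2:-elasticsearch}"
    # Keep a .bak copy, then replace only the host part of the URL.
    sed -i.bak "s#http://127\.0\.0\.1:9200#http://${service_host}:9200#" "$settings_file"
}

# Usage (path follows the ${PWD}/config volume and the job name job_name):
#   point_fscrawler_at_service ./config/job_name/_settings.yml
```

Inside the esnet network the service name resolves to the Elasticsearch container, which is why the host name works where 127.0.0.1 does not.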

I used the following Docker image: https://hub.docker.com/r/toto1310/fscrawler

# FILE: docker-compose.yml
version: '2.2'
services:
  # FSCrawler
  fscrawler:
    image: toto1310/fscrawler
    container_name: fscrawler
    volumes:
      - ${PWD}/config:/root/.fscrawler
      - ${PWD}/data:/tmp/es
    networks:
      - esnet
    command: fscrawler job_name

  # Elasticsearch Cluster
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.3.2
    container_name: elasticsearch
    environment:
      - node.name=elasticsearch
      - discovery.seed_hosts=elasticsearch2
      - cluster.initial_master_nodes=elasticsearch,elasticsearch2
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - esnet

  elasticsearch2:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.3.2
    container_name: elasticsearch2
    environment:
      - node.name=elasticsearch2
      - discovery.seed_hosts=elasticsearch
      - cluster.initial_master_nodes=elasticsearch,elasticsearch2
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata02:/usr/share/elasticsearch/data
    networks:
      - esnet

volumes:
  esdata01:
    driver: local
  esdata02:
    driver: local

networks:
  esnet:

Ran docker-compose up elasticsearch elasticsearch2 to bring up the Elasticsearch nodes.

Ran docker-compose run fscrawler to create _settings.yml.

Edited _settings.yml to:

elasticsearch:
  nodes:
  - url: "http://elasticsearch:9200"

Started fscrawler with docker-compose up fscrawler.

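The root user in Elasticsearch 2.4.0 inside a Docker container

I am running the ELK stack with Docker for log management; the current setup is ES 1.7, Logstash 1.5.4, and Kibana 4.1.4. Now I am trying to upgrade Elasticsearch to 2.4.0, using the tar.gz file from https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/tar/elasticsearch/2.4.0/elasticsearch-2.4.0.tar.gz. Since ES 2.x does not allow running as the root user, I used the

-Des.insecure.allow.root=true

option when starting the elasticsearch service, but my container does not start. The logs do not mention any problem.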

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 874 100 874 0 0 874k 0 --:--:-- --:--:-- --:--:-- 853k

//opt//log-management//elasticsearch/bin/elasticsearch: line 134: hostname: command not found

Scheduler@0.0.0 start /opt/log-management/Scheduler

node scheduler-app.js

ESExportWrapper@0.0.0 start /opt/log-management/ESExportWrapper

node app.js

Jobs are registered

[2016-09-28 09:04:24,646][INFO ][bootstrap ] max_open_files [1048576]

[2016-09-28 09:04:24,686][WARN ][bootstrap ] running as ROOT user. this is a bad idea!

Native thread-sleep not available.

This will result in much slower performance,but it will still work.

You should re-install spawn-sync or upgrade to the lastest version of node if possible.

Check /opt/log-management/ESExportWrapper/node_modules/sync-request/node_modules/spawn-sync/error.log for more details

[2016-09-28 09:04:24,874][INFO ][node ] [Kismet Deadly] version[2.4.0],pid[1],build[ce9f0c7/2016-08-29T09:14:17Z]

[2016-09-28 09:04:24,874][INFO ][node ] [Kismet Deadly] initializing ...

Wed,28 Sep 2016 09:04:24 GMT express deprecated app.configure: Check app.get('env') in an if statement at lib/express/index.js:60:5

Wed,28 Sep 2016 09:04:24 GMT connect deprecated multipart: use parser (multiparty,busboy,formidable) npm module instead at node_modules/express/node_modules/connect/lib/middleware/bodyParser.js:56:20

Wed,28 Sep 2016 09:04:24 GMT connect deprecated limit: Restrict request size at location of read at node_modules/express/node_modules/connect/lib/middleware/multipart.js:86:15

[2016-09-28 09:04:25,399][INFO ][plugins ] [Kismet Deadly] modules [reindex,lang-expression,lang-groovy],plugins [],sites []

[2016-09-28 09:04:25,423][INFO ][env ] [Kismet Deadly] using [1] data paths,mounts [[/data (/dev/mapper/platform-data)]],net usable_space [1tb],net total_space [1tb],spins? [possibly],types [xfs]

[2016-09-28 09:04:25,423][INFO ][env ] [Kismet Deadly] heap size [7.8gb],compressed ordinary object pointers [true]

[2016-09-28 09:04:25,455][WARN ][threadpool ] [Kismet Deadly] requested thread pool size [60] for [index] is too large; setting to maximum [24] instead

[2016-09-28 09:04:27,575][INFO ][node ] [Kismet Deadly] initialized

[2016-09-28 09:04:27,575][INFO ][node ] [Kismet Deadly] starting ...

[2016-09-28 09:04:27,695][INFO ][transport ] [Kismet Deadly] publish_address {10.240.118.68:9300},bound_addresses {[::1]:9300},{127.0.0.1:9300}

[2016-09-28 09:04:27,700][INFO ][discovery ] [Kismet Deadly] ccs-elasticsearch/q2Sv4FUFROGIdIWJrNENVA

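Any clues would be appreciated.

Edit 1: Since //opt//log-management//elasticsearch/bin/elasticsearch: line 134: hostname: command not found is an error and the Docker image has no hostname utility, I tried using the uname -n command to obtain the HOSTNAME for ES. It no longer throws the hostname error, but the problem remains: it does not start.

Is that the right way to use it?

One more doubt: with ES 1.7, which is currently running, the hostname utility is also absent, yet it runs without any problem. Very confusing.

Logs after using uname -n: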

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1083 100 1083 0 0 1093k 0 --:--:-- --:--:-- --:--:-- 1057k

> ESExportWrapper@0.0.0 start /opt/log-management/ESExportWrapper

> node app.js

> Scheduler@0.0.0 start /opt/log-management/Scheduler

> node scheduler-app.js

Jobs are registered

[2016-09-30 10:10:37,785][INFO ][bootstrap ] max_open_files [1048576]

[2016-09-30 10:10:37,822][WARN ][bootstrap ] running as ROOT user. this is a bad idea!

Native thread-sleep not available.

This will result in much slower performance,but it will still work.

You should re-install spawn-sync or upgrade to the lastest version of node if possible.

Check /opt/log-management/ESExportWrapper/node_modules/sync-request/node_modules/spawn-sync/error.log for more details

[2016-09-30 10:10:37,993][INFO ][node ] [Helleyes] version[2.4.0],build[ce9f0c7/2016-08-29T09:14:17Z]

[2016-09-30 10:10:37,993][INFO ][node ] [Helleyes] initializing ...

Fri,30 Sep 2016 10:10:38 GMT express deprecated app.configure: Check app.get('env') in an if statement at lib/express/index.js:60:5

Fri,30 Sep 2016 10:10:38 GMT connect deprecated multipart: use parser (multiparty,formidable) npm module instead at node_modules/express/node_modules/connect/lib/middleware/bodyParser.js:56:20

Fri,30 Sep 2016 10:10:38 GMT connect deprecated limit: Restrict request size at location of read at node_modules/express/node_modules/connect/lib/middleware/multipart.js:86:15

[2016-09-30 10:10:38,435][INFO ][plugins ] [Helleyes] modules [reindex,sites []

[2016-09-30 10:10:38,455][INFO ][env ] [Helleyes] using [1] data paths,types [xfs]

[2016-09-30 10:10:38,456][INFO ][env ] [Helleyes] heap size [7.8gb],compressed ordinary object pointers [true]

[2016-09-30 10:10:38,483][WARN ][threadpool ] [Helleyes] requested thread pool size [60] for [index] is too large; setting to maximum [24] instead

[2016-09-30 10:10:40,151][INFO ][node ] [Helleyes] initialized

[2016-09-30 10:10:40,152][INFO ][node ] [Helleyes] starting ...

[2016-09-30 10:10:40,278][INFO ][transport ] [Helleyes] publish_address {10.240.118.68:9300},{127.0.0.1:9300}

[2016-09-30 10:10:40,283][INFO ][discovery ] [Helleyes] ccs-elasticsearch/wvVGkhxnTqaa_wS5GGjZBQ

[2016-09-30 10:10:40,360][WARN ][transport.netty ] [Helleyes] exception caught on transport layer [[id: 0x329b2977,/172.17.0.15:53388 => /10.240.118.69:9300]],closing connection

java.lang.NullPointerException

at org.elasticsearch.transport.netty.MessageChannelHandler.handleException(MessageChannelHandler.java:179)

at org.elasticsearch.transport.netty.MessageChannelHandler.handlerResponseError(MessageChannelHandler.java:174)

at org.elasticsearch.transport.netty.MessageChannelHandler.messageReceived(MessageChannelHandler.java:122)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:791)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:462)

at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:443)

at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:559)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255)

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108)

at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:337)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89)

at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178)

at org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:108)

at org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2016-09-30 10:10:40,360][WARN ][transport.netty ] [Helleyes] exception caught on transport layer [[id: 0xdf31e5e6,/172.17.0.15:46846 => /10.240.118.70:9300]],closing connection

java.lang.NullPointerException

at org.elasticsearch.transport.netty.MessageChannelHandler.handleException(MessageChannelHandler.java:179)

at org.elasticsearch.transport.netty.MessageChannelHandler.handlerResponseError(MessageChannelHandler.java:174)

at org.elasticsearch.transport.netty.MessageChannelHandler.messageReceived(MessageChannelHandler.java:122)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:791)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:462)

at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:443)

at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:559)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255)

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108)

at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:337)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89)

at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178)

at org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:108)

at org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2016-09-30 10:10:41,798][WARN ][transport.netty ] [Helleyes] exception caught on transport layer [[id: 0xcff0b2b6,/172.17.0.15:46958 => /10.240.118.70:9300]],800][WARN ][transport.netty ] [Helleyes] exception caught on transport layer [[id: 0xb47caaf6,/172.17.0.15:53501 => /10.240.118.69:9300]],closing connection

java.lang.NullPointerException

at org.elasticsearch.transport.netty.MessageChannelHandler.handleException(MessageChannelHandler.java:179)

at org.elasticsearch.transport.netty.MessageChannelHandler.handlerResponseError(MessageChannelHandler.java:174)

at org.elasticsearch.transport.netty.MessageChannelHandler.messageReceived(MessageChannelHandler.java:122)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:791)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:462)

at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:443)

at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:559)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255)

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108)

at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:337)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89)

at org.jboss.netty.channel.socket.nio.NioWorker.run(NioWorker.java:178)

at org.jboss.netty.util.ThreadRenamingRunnable.run(ThreadRenamingRunnable.java:108)

at org.jboss.netty.util.internal.DeadLockProofWorker$1.run(DeadLockProofWorker.java:42)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

[2016-09-30 10:10:43,302][WARN ][transport.netty ] [Helleyes] exception caught on transport layer [[id: 0x6247aa3f,/172.17.0.15:47057 => /10.240.118.70:9300]],303][WARN ][transport.netty ] [Helleyes] exception caught on transport layer [[id: 0x1d266aa0,/172.17.0.15:53598 => /10.240.118.69:9300]],closing connection

java.lang.NullPointerException

at org.elasticsearch.transport.netty.MessageChannelHandler.handleException(MessageChannelHandler.java:179)

at org.elasticsearch.transport.netty.MessageChannelHandler.handlerResponseError(MessageChannelHandler.java:174)

at org.elasticsearch.transport.netty.MessageChannelHandler.messageReceived(MessageChannelHandler.java:122)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline$DefaultChannelHandlerContext.sendUpstream(DefaultChannelPipeline.java:791)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:296)

at org.jboss.netty.handler.codec.frame.FrameDecoder.unfoldAndFireMessageReceived(FrameDecoder.java:462)

at org.jboss.netty.handler.codec.frame.FrameDecoder.callDecode(FrameDecoder.java:443)

at org.jboss.netty.handler.codec.frame.FrameDecoder.messageReceived(FrameDecoder.java:303)

at org.jboss.netty.channel.SimpleChannelUpstreamHandler.handleUpstream(SimpleChannelUpstreamHandler.java:70)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:564)

at org.jboss.netty.channel.DefaultChannelPipeline.sendUpstream(DefaultChannelPipeline.java:559)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:268)

at org.jboss.netty.channel.Channels.fireMessageReceived(Channels.java:255)

at org.jboss.netty.channel.socket.nio.NioWorker.read(NioWorker.java:88)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.process(AbstractNioWorker.java:108)

at org.jboss.netty.channel.socket.nio.AbstractNioSelector.run(AbstractNioSelector.java:337)

at org.jboss.netty.channel.socket.nio.AbstractNioWorker.run(AbstractNioWorker.java:89)

java.util.concurrent.ThreadPoolExecutor中的$Worker.run(ThreadPoolExecutor.java:617)

在java.lang.Thread.run(Thread.java:745)

[2016-09-30 10:10:44,807] [INFO] [cluster.service] [Helleyes] new_master {Helleyes}{wvVGkhxnTqaa_wS5GGjZBQ}{10.240.118.68}{10.240.118.68:9300}, reason: zen-disco-join(elected_as_master, [0] joins received)

[2016-09-30 10:10:44,852] [INFO] [http] [Helleyes] publish_address {10.240.118.68:9200}, bound_addresses {[::1]:9200}, {127.0.0.1:9200}

[2016-09-30 10:10:44,852] [INFO] [node] [Helleyes] started

[2016-09-30 10:10:44,984] [INFO] [gateway] [Helleyes] recovered [32] indices into cluster_state

Error reported after the failed deployment:

failed: [10.240.118.68] (item={u'url': u'http://10.240.118.68:9200'}) => {"content": "", "failed": true, "item": {"url": "http://10.240.118.68:9200"}, "msg": "Status code was not [200]: Request failed:

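Edit 2: Even with the hostname utility installed and working, the container does not start. The logs are the same as in Edit 1.

Edit 3: The container does start, but it is not reachable at http://nodeip:9200. Of the 3 nodes, only 1 runs 2.4; the other 2 still run 1.7, and the 2.4 node is not part of the cluster. Inside the container running 2.4, curl to localhost:9200 returns the response of a running elasticsearch, but it cannot be reached from outside.

Edit 4: I tried running a basic installation of ES 2.4 on the cluster; with the same setup, ES 1.7 works fine. I also ran the ES migration plugin to check whether the cluster can run ES 2.4, and it gave me green. The details of the basic installation are as follows.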

Dockerfile

#Pulling SLES12 thin base image

FROM private-registry-1

#Author

MAINTAINER XYZ

# Pre-requisite - Adding repositories

RUN zypper ar private-registry-2

RUN zypper --no-gpg-checks -n refresh

#Install required packages and dependencies

RUN zypper -n in net-tools-1.60-764.185 wget-1.14-7.1 python-2.7.9-14.1 python-base-2.7.9-14.1 tar-1.27.1-7.1

#Downloading elasticsearch executable

ENV ES_VERSION=2.4.0

ENV ES_DIR="//opt//log-management//elasticsearch"

ENV ES_CONfig_PATH="${ES_DIR}//config"

ENV ES_REST_PORT=9200

ENV ES_INTERNAL_COM_PORT=9300

WORKDIR /opt/log-management

RUN wget private-registry-3/elasticsearch/elasticsearch/${ES_VERSION}.tar/elasticsearch-${ES_VERSION}.tar.gz --no-check-certificate

RUN tar -xzvf ${ES_DIR}-${ES_VERSION}.tar.gz \
&& rm ${ES_DIR}-${ES_VERSION}.tar.gz \
&& mv ${ES_DIR}-${ES_VERSION} ${ES_DIR}

#Exposing elasticsearch server container port to the HOST

EXPOSE ${ES_REST_PORT} ${ES_INTERNAL_COM_PORT}

#Removing binary files which are not needed

RUN zypper -n rm wget

# Removing zypper repos

RUN zypper rr caspiancs_common

#Running elasticsearch executable

WORKDIR ${ES_DIR}

ENTRYPOINT ${ES_DIR}/bin/elasticsearch -Des.insecure.allow.root=true

Built with:

docker build -t es-test .

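1) When I run it with docker run -d --name elasticsearch --net=host -p 9200:9200 -p 9300:9300 es-test, as suggested in one of the comments, and curl localhost:9200 from inside the container or from the node running the container, I get the correct response. I still cannot reach the other nodes of the cluster on port 9200.

2) When I run it with docker run -d --name elasticsearch -p 9200:9200 -p 9300:9300 es-test and curl localhost:9200 inside the container, it works fine, but from the node it gives me this error: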

curl: (56) Recv failure: Connection reset by peer

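I still cannot reach the other nodes of the cluster on port 9200.

Edit 5: Using this answer on this question, I got all three of the three containers running ES 2.4. But ES cannot form a cluster out of these three containers. The network configuration is as follows:

network.host: 0.0.0.0, http.port: 9200,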

#configure elasticsearch.yml for clustering

echo 'discovery.zen.ping.unicast.hosts: [ELASTICSEARCH_IPS] ' >> ${ES_CONfig_PATH}/elasticsearch.yml
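The ELASTICSEARCH_IPS placeholder in the line above has to expand to a list of hosts. As a minimal sketch, assuming the node addresses arrive as one comma-separated string, a helper along these lines could build that list. The function name build_unicast_hosts, the sample addresses, and the scratch file are hypothetical and not part of the original setup; the transport port 9300 is the one exposed in the Dockerfile.

```shell
#!/bin/bash
# Hypothetical helper (not from the original post): turn "ip1,ip2" into
# a quoted host:port list for discovery.zen.ping.unicast.hosts.
build_unicast_hosts() {
  local ips="$1"
  local port="${2:-9300}"   # transport port; 9300 as exposed in the Dockerfile
  local out=""
  local ip
  local IFS=','             # split the input on commas only
  for ip in $ips; do
    out="${out:+$out, }\"${ip}:${port}\""
  done
  printf '%s' "$out"
}

# Example with made-up addresses, written to a scratch file instead of
# the real elasticsearch.yml.
scratch_yml="$(mktemp)"
hosts="$(build_unicast_hosts "10.0.0.1,10.0.0.2,10.0.0.3")"
echo "discovery.zen.ping.unicast.hosts: [${hosts}]" >> "$scratch_yml"
cat "$scratch_yml"
```

Listing each host with an explicit port keeps the entry unambiguous when the transport port is remapped on the host side.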

The logs obtained with docker logs contain the following:

[2016-10-06 12:31:28,887][WARN ][bootstrap ] running as ROOT user. this is a bad idea!

[2016-10-06 12:31:29,080][INFO ][node ] [Screech] version[2.4.0],build[ce9f0c7/2016-08-29T09:14:17Z]

[2016-10-06 12:31:29,081][INFO ][node ] [Screech] initializing ...

[2016-10-06 12:31:29,652][INFO ][plugins ] [Screech] modules [reindex,sites []

[2016-10-06 12:31:29,684][INFO ][env ] [Screech] using [1] data paths,mounts [[/ (rootfs)]],net usable_space [8.7gb],net total_space [9.7gb],spins? [unknown],types [rootfs]

[2016-10-06 12:31:29,684][INFO ][env ] [Screech] heap size [989.8mb],compressed ordinary object pointers [true]

[2016-10-06 12:31:29,720][WARN ][threadpool ] [Screech] requested thread pool size [60] for [index] is too large; setting to maximum [5] instead

[2016-10-06 12:31:31,387][INFO ][node ] [Screech] initialized

[2016-10-06 12:31:31,387][INFO ][node ] [Screech] starting ...

[2016-10-06 12:31:31,456][INFO ][transport ] [Screech] publish_address {172.17.0.16:9300},bound_addresses {[::]:9300}

[2016-10-06 12:31:31,465][INFO ][discovery ] [Screech] ccs-elasticsearch/YeO41MBIR3uqzZzISwalmw

[2016-10-06 12:31:34,500][WARN ][discovery.zen ] [Screech] failed to connect to master [{Bobster}{Gh-6yBggRIypr7OuW1tXhA}{172.17.0.15}{172.17.0.15:9300}],retrying...

ConnectTransportException[[Bobster][172.17.0.15:9300] connect_timeout[30s]]; nested: ConnectException[Connection refused: /172.17.0.15:9300];

at org.elasticsearch.transport.netty.NettyTransport.connectToChannels(NettyTransport.java:1002)

at org.elasticsearch.transport.netty.NettyTransport.connectToNode(NettyTransport.java:937)

at org.elasticsearch.transport.netty.NettyTransport.connectToNode(NettyTransport.java:911)

at org.elasticsearch.transport.TransportService.connectToNode(TransportService.java:260)

at org.elasticsearch.discovery.zen.ZenDiscovery.joinElectedMaster(ZenDiscovery.java:444)

at org.elasticsearch.discovery.zen.ZenDiscovery.innerJoinCluster(ZenDiscovery.java:396)

at org.elasticsearch.discovery.zen.ZenDiscovery.access$4400(ZenDiscovery.java:96)

at org.elasticsearch.discovery.zen.ZenDiscovery$JoinThreadControl$1.run(ZenDiscovery.java:1296)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.ConnectException: Connection refused: /172.17.0.15:9300

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

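Whenever I set network.host to the IP address of the host running the container, I end up back in the old situation: only one container runs ES 2.4 and the other two run 1.7.

I just noticed that docker-proxy is listening on 9300, or at least I think it is.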

elasticsearch-server/src/main/docker # netstat -nlp | grep 9300

tcp 0 0 :::9300 :::* LISTEN 6656/docker-proxy

Any clues about this?

Best answer

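I was able to form the cluster with the following settings:

network.publish_host = CONTAINER_HOST_ADDRESS, i.e. the address of the node on which the container is running.

network.bind_host = 0.0.0.0

transport.publish_port = 9300

transport.publish_host = CONTAINER_HOST_ADDRESS

transport.publish_port is very important when you run ES behind a proxy or load balancer such as Nginx or HAProxy.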
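The answer lists its settings in key = value form, while elasticsearch.yml expects YAML. As a minimal sketch, assuming the settings are appended to the config file in the same echo-into-file style the question uses, they could be written as shown below. The function name write_publish_settings, the sample address, and the scratch file are hypothetical; substitute the real elasticsearch.yml path and node address.

```shell
#!/bin/bash
# Hypothetical helper (not from the original answer): append the
# bind/publish settings from the answer to an elasticsearch.yml file.
write_publish_settings() {
  local config_file="$1"    # path to elasticsearch.yml
  local host_address="$2"   # address of the node running the container
  cat >> "$config_file" <<EOF
network.bind_host: 0.0.0.0
network.publish_host: ${host_address}
transport.publish_host: ${host_address}
transport.publish_port: 9300
EOF
}

# Example run against a scratch file so that no real config is modified.
demo_file="$(mktemp)"
write_publish_settings "$demo_file" "10.240.118.68"
cat "$demo_file"
```

The intent is that the node binds on every interface inside the container but advertises the host's address, so that peers on other hosts try to connect to an address they can actually route to rather than to the container's bridge IP (172.17.0.x in the logs).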

Running elasticsearch in Docker and running kibana with the Chinese locale

#!/bin/bash
docker run \
--name jm-es \
--restart=always \
-e "discovery.type=single-node" \
-e "cluster.name=jm-es" \
-p 9200:9200 \
-p 9300:9300 \
-d \
elasticsearch:7.6.1

#!/bin/bash
docker run \
--name jm-kbn \
--restart=always \
--link jm-es:elasticsearch \
-e "SERVER_NAME=jm-kbn" \
-e "I18N_LOCALE=zh-CN" \
-p 5601:5601 \
-d \
kibana:7.6.1
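Both containers are started in the background with -d, so the scripts return before the services are ready. As a rough sketch, assuming you want to confirm that elasticsearch answers on port 9200 before opening Kibana, a small retry helper could be used. The name wait_for and the retry counts are hypothetical; the curl call in the comment is only a usage suggestion and is not executed here.

```shell
#!/bin/bash
# Hypothetical helper (not from the original scripts): retry a command
# until it succeeds or the attempts run out.
wait_for() {
  local attempts="$1"   # maximum number of tries
  local delay="$2"      # seconds to sleep between tries
  shift 2
  local i
  for ((i = 1; i <= attempts; i++)); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Suggested usage (assumes curl is installed and port 9200 is published):
#   wait_for 30 2 curl -sf http://localhost:9200 && echo "elasticsearch is up"

# Harmless demonstration with a command that always succeeds.
wait_for 3 0 true && echo "probe succeeded"
```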
