想了解TiDBBringsDistributedScalabilitytoSQL的新动态吗?本文将为您提供详细的信息,此外,我们还将为您介绍关于1804.03235-Largescaledistrib

想了解TiDB Brings Distributed Scalability to SQL的新动态吗?本文将为您提供详细的信息,此外,我们还将为您介绍关于1804.03235-Large scale distributed neural network training through online distillation.md、A DISTRIBUTED SYSTEMS READING LIST、A revolutionary architecture for building a distributed graph、Build self-healing distributed systems with Spring的新知识。

本文目录一览:- TiDB Brings Distributed Scalability to SQL

- 1804.03235-Large scale distributed neural network training through online distillation.md

- A DISTRIBUTED SYSTEMS READING LIST

- A revolutionary architecture for building a distributed graph

- Build self-healing distributed systems with Spring

TiDB Brings Distributed Scalability to SQL

A rash of new databases have emerged, such as Google Spanner, FaunaDB, Cockroach and TimeScaleDB, that are focused on solving the problem of scale that plagues standard SQL. Now another entrant, the Beijing, China-based PingCap’s open source TiDB project, aims to make it as scalable as NoSQL systems while maintaining ACID transactions.

Its support for the MySQL protocol means users can reuse many MySQL tools and greatly reduce migration costs, according to PingCap co-founder and CEO Qi (Max) Liu. You can use it to replace MySQL for applications without changing any code in most cases. And it scales horizontally; increase the capacity simply by adding more machines.

Liu presented TiDB at the Percona Live conference in Amsterdam last October. The project was in beta then; it’s since evolved to release candidate. On Thursday, PingCap co-founder and Chief Technology Officer Edward Huang will be speaking about TiDB at the Percona Live event in Santa Clara, Calif.

They tout that TiDB offers the best of both the SQL and NoSQL worlds. They focused on making it:

easy to use;

ensuring that no data is ever lost; it is self-healing from failures;

cross-platform and can run in any environment;

and open source.

It also allows online schema changes, so the schema can evolve with your requirements. You can add new columns and indices without stopping or affecting operations in progress.

As an open source project, it has more than 100 contributors, Liu said in an email interview.

PingCap drew inspiration for TiDB from Google F1 distributed database and Spanner. Google built Spanner atop its own proprietary systems and it’s not open source, considered a downside to some.

“With Spanner, you’re making a commitment to running the service in Google Compute Engine (GCE) and probably running it there for the service’s lifetime. You’re not going to have an off-ramp if you choose to run your own stack,” Spencer Kimball, CEO of Cockroach Labs, told The New Stack previously. Keeping Track of All the Bikes

TiDB takes a loose coupling approach. It consists of a MySQL Server layer and the SQL layer. Its foundation is the open source distributed transactional key-value database TiKV, another PingCap project, which uses the programming language Rust and the distributed protocol Raft. TiDB is written in Go. Inside TiKV are MVCC (multi-version concurrency control), Raft, and for local key-value storage, it uses RocksDB. It also uses the Spark Connector.

TiDB makes two distinctions from Spanner, Liu said:

While the bottom layer of Spanner relies on Google’s Colossus distributed file system, TiDB ensures that the log is safely stored in the Raft layer. TiDB does not depend on any distributed file system, which greatly lowers write latency.

“We also see great potential in SQL optimizer, but Google didn’t seem to go deep into this aspect in its F1 paper. When designing our project, we aimed to explore the optimizer’s capability,” he said.

Spanner gained attention for its use of atomic clocks to gain time synchronization among geographically distributed data centers. TiDB does not use atomic and GPS clocks. Instead, it relies on Timestamp Allocator introduced in Percolator, a paper published by Google in 2006.

It supports the popular containers such as Docker. And the team is working to make it work with Kubernetes, though, for this work, Liu pointed out difficulties there to the Amsterdam audience.

The biggest problem they’re working on now is latency, especially between geographically distributed data centers, he said, one he hopes to have resolved in the near future.

PingCAP was founded in April 2015 by Huang, a senior distributed system engineer; Cui Qiu, a senior system engineer; and Liu, also an infrastructure engineer. It has 48 engineers working in Beijing and others working remotely from elsewhere in China.

Its clients include mobile gaming provider GAEA, which uses TiDB to support its cross-platform real-time advertising system, which requires high-volume data capacity and experiences peak loads during certain periods. TiDB supports automatic sharding and the bottom layer, TiKV, automatically distributes data among the cluster, which helps GAEA cut the cost of operation and maintenance, Liu said.

Another customer is the cashless and station-free bike sharing platform Mobike which uses TiDB for data analysis and to replace a MySQL database for online orders, which now number more than 400 million.

1804.03235-Large scale distributed neural network training through online distillation.md

现有分布式模型训练的模式

- 分布式SGD

- 并行SGD: 大规模训练中,一次的最长时间取决于最慢的机器

- 异步SGD: 不同步的数据,有可能导致权重更新向着未知方向

- 并行多模型 :多个集群训练不同的模型,再组合最终模型,但是会消耗inference运行时

- 蒸馏:流程复杂

- student训练数据集的选择

- unlabeled的数据

- 原始数据

- 留出来的数据

- student训练数据集的选择

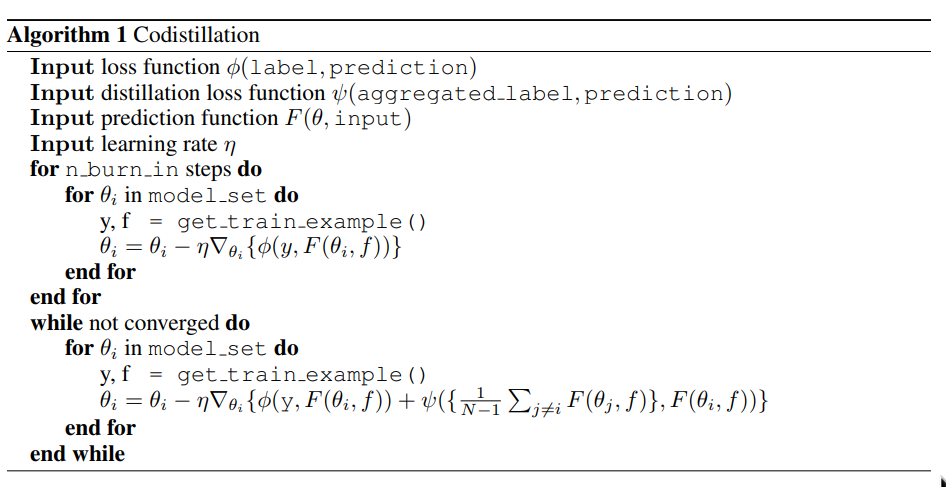

协同蒸馏

- using the same architecture for all the models;

- using the same dataset to train all the models; and

- using the distillation loss during training before any model has fully converged.

特点 - 就算thacher和student是完全相同的模型设置,只要其内容足够不同,也是能够获得有效的提升的 - 即是模型未收敛,收益也是有的 - 丢掉teacher和student的区分,互相训练,也是有好处的 - 不是同步的模型也是可以的。

算法简单易懂,而且步骤看上去不是很复杂。

使用out of state模型权重的解释:

- every change in weights leads to a change in gradients, but as training progresses towards convergence, weight updates should substantially change only the predictions on a small subset of the training data;

- weights (and gradients) are not statistically identifiable as different copies of the weights might have arbitrary scaling differences, permuted hidden units, or otherwise rotated or transformed hidden layer feature space so that averaging gradients does not make sense unless models are extremely similar;

- sufficiently out-of-sync copies of the weights will have completely arbitrary differences that change the meaning of individual directions in feature space that are not distinguishable by measuring the loss on the training set;

- in contrast, output units have a clear and consistent meaning enforced by the loss function and the training data.

所以这里似乎是说,随机性的好处?

一种指导性的实用框架设计:

- Each worker trains an independent version of the model on a locally available subset of the training data.

- Occasionally, workers checkpoint their parameters.

- Once this happens, other workers can load the freshest available checkpoints into memory and perform codistillation. 再加上,可以在小一些的集群上使用分布式SGD。

另外论文中提到,这种方式,比起每次直接发送梯度和权重,只需要偶尔载入checkpoint,而且各个模型集群在运算上是完全相互独立的。这个倒是确实能减少一些问题。 但是,如果某个模型垮掉了,完全没收敛呢?

另外,没看出来这种框架哪里简单了,管理模型和checkpoint不是一个简单的事情。

实验结论

20TB的数据,有钱任性

论文中提到,并不是机器越多,最终模型效果越好,似乎32-128是比较合适的,更多了,模型收敛速度和性能不会更好,有时反而会有下降。

论文中的实验结果2a,最好的还是双模型并行,其次是协同蒸馏,最差的是unigram的smooth0.9,label smooth 0.99跟直接训练表现差不多,毕竟只是一个随机噪声。 另外,通过对比相同数据的协同蒸馏2b,和随机数据的协同整理,实验发现,随机数据实际上让模型有更好的表现 3在imagenet上的实验,出现了跟2a差不多的结果。 4中虽然不用非得用最新的模型,但是,协同蒸馏,使用太久远的checkpoint还是会显著降低训练效率的。

欠拟合的模型是有用的,但是过拟合的模型在蒸馏中可能不太有价值。 协同蒸馏比双步蒸馏能更快的收敛,而且更有效率。

3.5中介绍的,也是很多时候面临的问题,因为初始化,训练过程的参数不一样等问题,可能导致两次训练出来的模型的输出有很大区别。例如分类模型,可能上次训练的在某些分类上准确,而这次训练的,在这些分类上就不准确了。模型平均或者蒸馏法能有效避免这个问题。

总结

balabalabala 实验只尝试了两个模型,多个模型的多种拓扑结构也值得尝试。

很值得一读的一个论文。

A DISTRIBUTED SYSTEMS READING LIST

『MARK』A DISTRIBUTED SYSTEMS READING LIST

原文:http://dancres.org/reading_list.html

Introduction

I often argue that the toughest thing about distributed systems is changing the way you think. The below is a collection of material I''ve found useful for motivating these changes.

Thought Provokers

Ramblings that make you think about the way you design. Not everything can be solved with big servers, databases and transactions.

- Harvest, Yield and Scalable Tolerant Systems - Real world applications of CAP from Brewer et al

- On Designing and Deploying Internet Scale Services - James Hamilton

- Latency Exists, Cope! - Commentary on coping with latency and it''s architectural impacts

- Latency - the new web performance bottleneck - not at all new (see Patterson), but noteworthy

- The Perils of Good Abstractions - Building the perfect API/interface is difficult

- Chaotic Perspectives - Large scale systems are everything developers dislike - unpredictable, unordered and parallel

- Website Architecture - A collection of scalable architecture papers from various of the large websites

- Data on the Outside versus Data on the Inside - Pat Helland

- Memories, Guesses and Apologies - Pat Helland

- SOA and Newton''s Universe - Pat Helland

- Building on Quicksand - Pat Helland

- Why Distributed Computing? - Jim Waldo

- A Note on Distributed Computing - Waldo, Wollrath et al

- Stevey''s Google Platforms Rant - Yegge''s SOA platform experience

Amazon

Somewhat about the technology but more interesting is the culture and organization they''ve created to work with it.

- A Conversation with Werner Vogels - Coverage of Amazon''s transition to a service-based architecture

- Discipline and Focus - Additional coverage of Amazon''s transition to a service-based architecture

- Vogels on Scalability

- SOA creates order out of chaos @ Amazon

Current "rocket science" in distributed systems.

- MapReduce

- Chubby Lock Manager

- Google File System

- BigTable

- Data Management for Internet-Scale Single-Sign-On

- Dremel: Interactive Analysis of Web-Scale Datasets

- Large-scale Incremental Processing Using Distributed Transactions and Notifications

- Megastore: Providing Scalable, Highly Available Storage for Interactive Services - Smart design for low latency Paxos implementation across datacentres.

Consistency Models

Key to building systems that suit their environments is finding the right tradeoff between consistency and availability.

- CAP Conjecture - Consistency, Availability, Parition Tolerance cannot all be satisfied at once

- Consistency, Availability, and Convergence - Proves the upper bound for consistency possible in a typical system

- CAP Twelve Years Later: How the "Rules" Have Changed - Eric Brewer expands on the original tradeoff description

- Consistency and Availability - Vogels

- Eventual Consistency - Vogels

- Avoiding Two-Phase Commit - Two phase commit avoidance approaches

- 2PC or not 2PC, Wherefore Art Thou XA? - Two phase commit isn''t a silver bullet

- Life Beyond Distributed Transactions - Helland

- If you have too much data, then ''good enough'' is good enough - NoSQL, Future of data theory - Pat Helland

- Starbucks doesn''t do two phase commit - Asynchronous mechanisms at work

- You Can''t Sacrifice Partition Tolerance - Additional CAP commentary

- Optimistic Replication - Relaxed consistency approaches for data replication

Theory

Papers that describe various important elements of distributed systems design.

- Distributed Computing Economics - Jim Gray

- Rules of Thumb in Data Engineering - Jim Gray and Prashant Shenoy

- Fallacies of Distributed Computing - Peter Deutsch

- Impossibility of distributed consensus with one faulty process - also known as FLP [access requires account and/or payment, a free version can be found here]

- Unreliable Failure Detectors for Reliable Distributed Systems. A method for handling the challenges of FLP

- Lamport Clocks - How do you establish a global view of time when each computer''s clock is independent

- The Byzantine Generals Problem

- Lazy Replication: Exploiting the Semantics of Distributed Services

- Scalable Agreement - Towards Ordering as a Service

Languages and Tools

Issues of distributed systems construction with specific technologies.

- Programming Distributed Erlang Applications: Pitfalls and Recipes - Building reliable distributed applications isn''t as simple as merely choosing Erlang and OTP.

Infrastructure

- Principles of Robust Timing over the Internet - Managing clocks is essential for even basics such as debugging

Storage

- Consistent Hashing and Random Trees

- Amazon''s Dynamo Storage Service

Paxos Consensus

Understanding this algorithm is the challenge. I would suggest reading "Paxos Made Simple" before the other papers and again afterward.

- The Part-Time Parliament - Leslie Lamport

- Paxos Made Simple - Leslie Lamport

- Paxos Made Live - An Engineering Perspective - Chandra et al

- Revisiting the Paxos Algorithm - Lynch et al

- How to build a highly available system with consensus - Butler Lampson

- Reconfiguring a State Machine - Lamport et al - changing cluster membership

- Implementing Fault-Tolerant Services Using the State Machine Approach: a Tutorial - Fred Schneider

Other Consensus Papers

- Mencius: Building Efficient Replicated State Machines for WANs - consensus algorithm for wide-area network

Gossip Protocols (Epidemic Behaviours)

- How robust are gossip-based communication protocols?

- Astrolabe: A Robust and Scalable Technology For Distributed Systems Monitoring, Management, and Data Mining

- Epidemic Computing at Cornell

- Fighting Fire With Fire: Using Randomized Gossip To Combat Stochastic Scalability Limits

- Bi-Modal Multicast

Experience at MySpace

One of the larger websites out there with a high write load which is not the norm (most are read dominated).

- Inside MySpace

- Mix06 - Running a Megasite on Microsoft Technologies - Mix 06 Sessions/

- MySpace Storage Challenges

eBay

Interesting they dumped most of J2EE and use a lot of db partitioning. Check out their site upgrade tool as well.

- SD Forum 2006

A revolutionary architecture for building a distributed graph

转自:https://blog.apollographql.com/apollo-federation-f260cf525d21

What if you could access all of your organization’s data by typing a single GraphQL query, even if that data lived in separate places? Up until now, this goal has been difficult to achieve without committing to a monolithic architecture or writing fragile schema stitching code.

Ideally, we want to expose one graph for all of our organization’s data without experiencing the pitfalls of a monolith. What if we could have the best of both worlds: a complete schema to connect all of our data with a distributed architecture so teams can own their portion of the graph?

That dream is finally a reality with the launch of Apollo Federation, an architecture for building a distributed graph. Best of all, it’s open source! Try it out now or keep reading to learn more.

Introducing Apollo Federation

Apollo Federation is our answer for implementing GraphQL in a microservice architecture. It’s designed to replace schema stitching and solve pain points such as coordination, separation of concerns, and brittle gateway code.

Federation is based on these core principles:

- Building a graph should be declarative. With federation, you compose a graph declaratively from within your schema instead of writing imperative schema stitching code.

- Code should be separated by concern, not by types. Often no single team controls every aspect of an important type like a User or Product, so the definition of these types should be distributed across teams and codebases, rather than centralized.

- The graph should be simple for clients to consume. Together, federated services can form a complete, product-focused graph that accurately reflects how it’s being consumed on the client.

- It’s just GraphQL, using only spec-compliant features of the language.Any language, not just JavaScript, can implement federation.

Apollo Federation is inspired by years of conversations with teams that use GraphQL to power their most important projects. Time and time again, we’ve heard from developers that they need an efficient way to connect data across services. Let’s learn how in the next section!

Core concepts

Connecting data between multiple services is where federation truly shines. The two essential techniques for connecting data are type references and type extensions. A type that can be connected to a different service in the graph is called an entity, and we specify directives on it to indicate how both services should connect.

Type references

With Apollo Federation, we can declaratively reference types that live in different schemas. Let’s look at an example where we have two separate services for accounts and reviews. We want to create a User type that is referenced by both services.

In the accounts service, we use the @key directive to convert the User type to an entity. With only one line of code, we’ve indicated that the User type can be connected from other services in the graph through its id field.

In the reviews service, we can return the User type even though it’s defined in a different service. Thanks to type references, we can seamlessly connect the accounts and reviews service in one complete graph.

type Review {

author: User

}

On the client, you can query the data as if both types were defined in the same schema. You no longer have to create extra field names like authorId or make separate round trips to a different service.

query GetReviews {

reviews {

author {

username

}

}

}

Type extensions

To enable organizing services by concern, a service can extend a type defined in a different service to add new fields.

In the reviews service, we extend the User entity from the accounts service. First, we use the @key directive to specify that these two services should connect together on the id field defined in the @external accounts service. Then, we add the functionality for a user to fetch their reviews.

extend type User @key(fields: "id") {

id: ID! @external

reviews: [Review]

}

The client only sees the final User type, which includes all of the fields merged together. Type extensions for Apollo Federation will feel familiar if you’ve used Apollo Client for local state management because they share the same programming model.

Production-ready features

We designed Apollo Federation to be approachable to start with, yet capable of handling the most complex real-world use cases:

- Multiple primary keys: It’s not always practical to reference an entity with a single primary key. With Apollo Federation, we can reference types with multiple primary keys by adding several

@keydirectives. - Compound primary keys: Keys aren’t limited to a single field. Rather, they can be any valid selection set, including those with nested fields.

- Shortcuts for faster data fetching: In large organizations, it’s common to denormalize data across multiple services. With the

@providesdirective, services can use that denormalization to their advantage to provide shortcuts for fetching data faster.

Architecture

To implement Apollo Federation, you need two components:

- Federated services, which are standalone GraphQL APIs. You can connect data between them by extending types and creating references with the

@keydirective. - A gateway to compose the complete graph and execute federated queries

Setting up federation with Apollo Server doesn’t require any manual stitching code. Once you have your services, you can spin up a gateway simply by passing them as a list:

const { ApolloGateway } = require("@apollo/gateway");

const gateway = new ApolloGateway({

serviceList: [

{ name: "accounts", url: "https://pw678w138q.sse.codesandbox.io/graphql" },

{ name: "reviews", url: "https://0yo165yq9v.sse.codesandbox.io/graphql" },

{ name: "products", url: "https://x7jn4y20pp.sse.codesandbox.io/graphql" },

{ name: "inventory", url: "https://o5oxqmn7j9.sse.codesandbox.io/graphql" }

]

});

The gateway crunches all of this into a single schema, indistinguishable from a hand-written monolith. To any client consuming a federated graph, it’s just regular GraphQL.

We didn’t want the gateway to be a black box where federated schemas and queries magically turn into data. To give teams full visibility into what’s happening with their composed graph, Apollo Server provides a query plan inspector out of the box:

Try Apollo Federation today!

We’re excited to open source Apollo Federation and get feedback from the community. To get started, we’ve created an example repo that demonstrates how to build a federated graph with four services and a gateway. If you want to play with the example in the browser, check out this CodeSandbox.

Along with the example app, we added a new section to the Apollo Server docs about federation. Read the docs to get up to speed quickly and migrate over from schema stitching.

What’s next

This release is the first chapter of distributed GraphQL. We can’t wait to hear about the many exciting ideas that stem from federation and see the amazing things you build. In the future, we’re eager to work with the GraphQL community to support new features and tools such as:

- Additional languages (e.g. Java, Scala, Python) for federated services. If you’re a server maintainer, please read our federation spec to learn about adding support and reach out to us with any questions on Spectrum.

- More federation-aware tooling, such as adding federation directive support to the Apollo VS Code extension

- Service extensions for passing additional metadata back to the gateway

- Support for subscriptions and

@defer

We’d love to hear your feedback on Apollo Federation. Join the conversation on Spectrum and let us know what you think!

Build self-healing distributed systems with Spring

原文链接:http://www.infoworld.com/article/2925047/application-development/build-self-healing-distributed-systems-with-spring-cloud.html

这篇文章介绍了如何 Spring Cloud 是如何帮助构建一个高可用的分布式系统的。在同类文章算是介绍的很不错的,感觉比 Spring Blog 里面的文章还要更全面易懂。

文章开始先介绍了构建分布式系统时会遇到的各种需要解决的问题,然后分别由这些问题引出 Spring Cloud 中的各种技术。

Spring Cloud Config Server

Spring Cloud Config Server 以 Git、SVN 等 VCS 系统存储 properties、yml 等格式的配置文件,构建了一个可横向伸缩的配置服务器。且不说使用效果怎么样,这个思想还是很有启发性的。配置文件通常是文本文件,用 VCS 存储是顺理成章的,同时还具备了版本控制功能,使得配置的变化被记录了下来。再在这个基础之上,加上横向伸缩的能力,便成为了一个很不错的配置服务器。

Spring Cloud Bus

Spring Cloud Bus 为 Spring 应用提供了 Message Broker 的功能。目前的唯一实现是基于 AMQP 的(用 RabbitMQ 为消息中间件)。目前,Cloud Bus 的一个应用是通过消息中间件,将配置改变的事件通知给业务组件。被 @RefreshScope 表示的 Bean 便具有了感知配置变化的能力。

Spring Cloud Netflix

Spring Cloud Netflix 是 Spring Cloud 中被介绍最多的部分。基于 Netflix 一套经过实战的解决方案,Spring Cloud Netflix 为分布式系统提供了很多易用又强大的功能。

Hystrix

对于 Eureka 和 Ribbon,我不多介绍,因为这两个是被提到比较多的技术。多介绍一下 Hystrix。Hystrix 会对提高分布式系统在远程调用方面的可用性和鲁棒性非常有帮助的一个组件。它提供了熔断器等模式(服务降级)。同时,它还提供了一个很不错的远程调用监控的功能(如原文图6所示的 Hystrix Dashboard)。Spring Cloud Netflix 使得 Hystrix 提供的这些功能(当然也包括 Eureka 和 Ribbon 所提供的功能)可以很透明地、非侵入式地被 Spring 应用所使用。

目前常见的应用监控解决方案,如国内厂商比较广泛使用的大众点评的 CAT,其使用方式还是一种侵入是的。这种方式是业务代码和监控系统相耦合,同时也使代码的可读性降低。所以最好的方式是非侵入式的监控(某些场合实现起来很困难,比如异步 API)。在这方面,Spring Cloud Netflix 在 REST API 调用方面给我们提供了一个不错的可选择的方案。

Zuul

Zuul 是 Netflix 所用的反向代理模块。不同于 Nginx 和 HAProxy,Zuul 使用的是动态加载 Groovy 缩写的 Filter 的方式来定义路由规则。因为采用了编程的方式,所以其自定义能力大大强于 Nginx 和 HAProxy(此处是凭经验推断)。

Spring Cloud Netflix 进一步地在 Zuul 的基础上提供了 OAuth2 SSO 的能力。

总结

大致读了一下这篇文章,发现了 Spring Cloud 更多的亮点和可以为项目之所用的地方。我相信深入了解 Spring Cloud 以及其设计思想会给我们的分布式系统带来质的提高。

今天关于TiDB Brings Distributed Scalability to SQL的介绍到此结束,谢谢您的阅读,有关1804.03235-Large scale distributed neural network training through online distillation.md、A DISTRIBUTED SYSTEMS READING LIST、A revolutionary architecture for building a distributed graph、Build self-healing distributed systems with Spring等更多相关知识的信息可以在本站进行查询。

本文标签: