
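This article covers running kubectl with a YAML configuration file and explains related YAML knowledge. It also provides practical information on: bash – disabling network logs on Kubernetes when running kubectl exec; installing docker-1.13.1 and kubelet/kubectl/kubeadm-1.9.5 on CentOS 7; creating and managing Pods with Docker Kubernetes YAML; and common directives in Docker Kubernetes YAML files.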

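Contents of this article:

- docker - Running kubectl with a YAML configuration file (yaml file docker)
- bash – Disabling network logs on Kubernetes when running kubectl exec
- Installing docker-1.13.1 and kubelet/kubectl/kubeadm-1.9.5 on CentOS 7
- Docker Kubernetes: creating and managing Pods with YAML
- Docker Kubernetes: common YAML file directives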

docker - Running kubectl with a YAML configuration file (yaml file docker)

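There is a similar question here, but I think I want something different.

For those familiar with docker-compose, there is a great command for running a one-off command in a container, which is very handy for kicking off migrations before each deployment:

docker-compose -f docker-compose.prod.yml run web npm run migrate

Also, because it is a one-liner, it is easy to automate, for example with a Makefile or Ansible / Chef / SaltStack.

The only thing I found is kubectl run, which is closer to docker run. But docker-compose run lets us use a configuration file, whereas docker run does not:

kubectl run rp2migrate --command -- npm run migrate

This might work, but I would need to list 20 environment variables, and I really do not want to do that on the command line. Instead, I would like to pass a flag that points to a YAML config, like this:

kubectl run rp2migrate -f k8s/rp2/rp2-deployment.yaml --command -- npm run migrate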

Kubernetes also has init containers as a beta feature (as of this writing): http://kubernetes.io/docs/user-guide/production-pods/#handling-initialization
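In current Kubernetes releases, init containers are declared in the pod's spec.initContainers field (the beta referred to above was configured through a pod annotation instead). A minimal sketch, assuming a hypothetical image rp2:latest that contains the app and its npm migrate script, and a hypothetical port 3000:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rp2-web            # hypothetical name
spec:
  initContainers:
    # Runs to completion before any container under "containers" starts;
    # if it fails, it is retried according to the pod's restartPolicy.
    - name: migrate
      image: rp2:latest    # hypothetical image
      command: ["npm", "run", "migrate"]
  containers:
    - name: web
      image: rp2:latest
      ports:
        - containerPort: 3000   # hypothetical app port
```

Every pod created from such a spec runs the migration, so a Deployment with several replicas would run it several times; the migration itself has to tolerate that.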

You should probably take advantage of the Kubernetes PostStart hook, like this:

lifecycle:
  postStart:
    exec:
      command:
        - "npm"
        - "run"
        - "migrate"
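The lifecycle block goes inside a container definition. A sketch showing where it sits in a Deployment's pod template; the names, labels and the image rp2:latest are hypothetical, and apps/v1 assumes Kubernetes 1.9 or newer:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rp2-web                 # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rp2-web
  template:
    metadata:
      labels:
        app: rp2-web            # must match the selector above
    spec:
      containers:
        - name: web
          image: rp2:latest     # hypothetical image
          lifecycle:
            postStart:
              exec:
                # Executed inside the container right after it is created
                command: ["npm", "run", "migrate"]
```

Two caveats from the Kubernetes documentation on container hooks: there is no guarantee that the postStart hook runs before the container's ENTRYPOINT, and if the hook fails the container is killed. The hook also runs in every container that starts, so with several replicas the migration runs more than once.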

http://kubernetes.io/docs/user-guide/container-environment/

Environment variables specified for your Pod will also be available:

Additionally, user-defined environment variables from the pod definition are also available to the container, as are any environment variables specified statically in the Docker image.
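Closer to the docker-compose run workflow in the question, the one-off migration can also be written as a Kubernetes Job, with the environment variables kept in a ConfigMap and loaded in one go through envFrom (available since Kubernetes 1.6). A sketch under those assumptions; the names rp2-config and rp2-migrate, the image rp2:latest and the variable values are hypothetical:

```yaml
# Hypothetical ConfigMap holding the app's environment variables
apiVersion: v1
kind: ConfigMap
metadata:
  name: rp2-config
data:
  NODE_ENV: "production"
  DATABASE_URL: "postgres://db:5432/rp2"
---
# One-off Job: runs the migration once, then stops
apiVersion: batch/v1
kind: Job
metadata:
  name: rp2-migrate
spec:
  backoffLimit: 2              # retry a failed migration at most twice
  template:
    spec:
      restartPolicy: Never     # Job pods must use Never or OnFailure
      containers:
        - name: migrate
          image: rp2:latest    # hypothetical image
          command: ["npm", "run", "migrate"]
          envFrom:
            - configMapRef:
                name: rp2-config   # every key becomes an environment variable
```

Apply it with kubectl apply -f migrate-job.yaml (the file name is hypothetical) and read the output with kubectl logs job/rp2-migrate. A finished Job is not re-run by applying the same file again; delete it first with kubectl delete job rp2-migrate.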

bash – Disabling network logs on Kubernetes when running kubectl exec

) Data frame handling
I0331 17:46:15.486652 3807 logs.go:41] (0xc4201158c0) Data frame received for 5
I0331 17:46:15.486671 3807 logs.go:41] (0xc42094a000) (5) Data frame handling
I0331 17:46:15.486682 3807 logs.go:41] (0xc42094a000) (5) Data frame sent
root@hello-node-2399519400-6q6s3:/# I0331 17:46:16.667823 3807 logs.go:41] (0xc420687680) (3) Writing data frame
I0331 17:46:16.669223 3807 logs.go:41] (0xc4201158c0) Data frame received for 5
I0331 17:46:16.669244 3807 logs.go:41] (0xc42094a000) (5) Data frame handling
I0331 17:46:16.669254 3807 logs.go:41] (0xc42094a000) (5) Data frame sent
root@hello-node-2399519400-6q6s3:/# I0331 17:46:17.331753 3807 logs.go:41] (0xc420687680) (3) Writing data frame
I0331 17:46:17.333338 3807 logs.go:41] (0xc4201158c0) Data frame received for 5
I0331 17:46:17.333358 3807 logs.go:41] (0xc42094a000) (5) Data frame handling
I0331 17:46:17.333369 3807 logs.go:41] (0xc42094a000) (5) Data frame sent
I0331 17:46:17.333922 3807 logs.go:41] (0xc4201158c0) Data frame received for 5
I0331 17:46:17.333943 3807 logs.go:41] (0xc42094a000) (5) Data frame handling
I0331 17:46:17.333956 3807 logs.go:41] (0xc42094a000) (5) Data frame sent
root@hello-node-2399519400-6q6s3:/# I0331 17:46:17.738444 3807 logs.go:41] (0xc420687680) (3) Writing data frame
I0331 17:46:17.740563 3807 logs.go:41] (0xc4201158c0) Data frame received for 5
I0331 17:46:17.740591 3807 logs.go:41] (0xc42094a000) (5) Data frame handling
I0331 17:46:17.740606 3807 logs.go:41] (0xc42094a000) (5) Data frame sent

It is somewhat better without the -t option:

kubectl exec -i hello-4103519535-hcdm6 -- /bin/bash
I0331 18:29:06.918584 4992 logs.go:41] (0xc4200878c0) (0xc4204c5900) Create stream
I0331 18:29:06.918714 4992 logs.go:41] (0xc4200878c0) (0xc4204c5900) Stream added, broadcasting: 1
I0331 18:29:06.928571 4992 logs.go:41] (0xc4200878c0) Reply frame received for 1
I0331 18:29:06.928605 4992 logs.go:41] (0xc4200878c0) (0xc4203ffc20) Create stream
I0331 18:29:06.928614 4992 logs.go:41] (0xc4200878c0) (0xc4203ffc20) Stream added, broadcasting: 3
I0331 18:29:06.930565 4992 logs.go:41] (0xc4200878c0) Reply frame received for 3
I0331 18:29:06.930603 4992 logs.go:41] (0xc4200878c0) (0xc4204c59a0) Create stream
I0331 18:29:06.930615 4992 logs.go:41] (0xc4200878c0) (0xc4204c59a0) Stream added, broadcasting: 5
I0331 18:29:06.932455 4992 logs.go:41] (0xc4200878c0) Reply frame received for 5
I0331 18:29:06.932499 4992 logs.go:41] (0xc4200878c0) (0xc420646000) Create stream
I0331 18:29:06.932511 4992 logs.go:41] (0xc4200878c0) (0xc420646000) Stream added, broadcasting: 7
I0331 18:29:06.935363 4992 logs.go:41] (0xc4200878c0) Reply frame received for 7
echo toto
I0331 18:29:08.943066 4992 logs.go:41] (0xc4203ffc20) (3) Writing data frame
I0331 18:29:08.947811 4992 logs.go:41] (0xc4200878c0) Data frame received for 5
I0331 18:29:08.947837 4992 logs.go:41] (0xc4204c59a0) (5) Data frame handling
I0331 18:29:08.947851 4992 logs.go:41] (0xc4204c59a0) (5) Data frame sent
toto

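Is there a way to disable this?

Could it be coming from my environment?

I am still not sure whether it comes from Kubernetes or from my environment.

Solution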

Installing docker-1.13.1 and kubelet/kubectl/kubeadm-1.9.5 on CentOS 7

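When I used a CentOS 7 server set up on Amazon Web Services, I found that its Docker version was too old, and Kubernetes did not support docker-ce at the time.

This post mainly covers installing docker-1.13.1 and the configuration files that need to be changed to get it to start.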

Installing docker-1.13.1 on CentOS 7

Remove old versions of Docker:

sudo yum remove -y docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine

Install Docker:

sudo yum install -y yum-utils device-mapper-persistent-data lvm2
sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
sudo yum install docker -y

Start Docker

Edit the /etc/sysconfig/docker file:

# Set --selinux-enabled to false; otherwise Docker may fail to start because the SELinux service is unavailable
OPTIONS='--selinux-enabled=false --log-driver=json-file --signature-verification=false'

Change the systemd startup parameters:

# This startup parameter was needed by the docker 1.11.1 that came with this CentOS 7 system, and it makes docker 1.13.1 fail to start
mv /etc/systemd/system/docker.service.d/execstart.conf /etc/systemd/system/docker.service.d/execstart.conf.cp
systemctl daemon-reload
systemctl enable docker && systemctl start docker

Installing kubelet/kubectl/kubeadm-1.9.5 on CentOS 7

Install kubeadm, kubelet, and kubectl:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

yum install -y kubelet-1.9.5 kubeadm-1.9.5 kubectl-1.9.5

Edit k8s.conf

The official documentation notes that some users on RHEL/CentOS 7 have reported traffic being routed incorrectly because iptables was bypassed, so net.bridge.bridge-nf-call-iptables must be set to 1 in the sysctl configuration:

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system

Start kubelet:

systemctl enable kubelet && systemctl start kubelet

Docker Kubernetes: creating and managing Pods with YAML


Environment:

- OS: CentOS 7.4 x64
- Docker version: 18.09.0
- Kubernetes version: v1.8
- Master node: 192.168.1.79
- Worker node: 192.168.1.78
- Worker node: 192.168.1.77

On the master node: create the pod YAML file

vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-test
  labels:
    os: centos
spec:
  containers:
    - name: hello
      image: centos:6
      env:
        - name: Test
          value: "123456"
      command: ["bash","-c","while true;do date;sleep 1;done"]

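# API version
apiVersion: v1
# Type of resource object to create
kind: Pod
# Metadata: the object's name, namespace, and labels
metadata:
  # Pod name
  name: pod-test
  # Labels
  labels:
    # Label key: value
    os: centos
# Pod specification
spec:
  # Containers in the pod
  containers:
    # Container name
    - name: hello
      # Container image
      image: centos:6
      # Environment variables
      env:
        # Variable name (key)
        - name: Test
          # Variable value
          value: "123456"
      # Command executed when the container starts
      command: ["bash","-c","while true;do date;sleep 1;done"]

Note: a pod can specify multiple containers. The command runs a never-ending loop so that the container does not exit.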
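To illustrate the note, a sketch of a pod with two containers that share an emptyDir volume: one appends the date to a file every second, as in the example above, and the other follows that file. The pod name, volume name and file path are hypothetical:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-test-multi        # hypothetical name
  labels:
    os: centos
spec:
  volumes:
    - name: shared            # emptyDir lives as long as the pod does
      emptyDir: {}
  containers:
    - name: writer
      image: centos:6
      command: ["bash", "-c", "while true; do date >> /shared/date.log; sleep 1; done"]
      volumeMounts:
        - name: shared
          mountPath: /shared
    - name: reader
      image: centos:6
      # touch first so tail does not fail if it starts before the writer
      command: ["bash", "-c", "touch /shared/date.log; tail -f /shared/date.log"]
      volumeMounts:
        - name: shared
          mountPath: /shared
```

kubectl logs pod-test-multi -c reader then shows the dates written by the other container; with more than one container in a pod, kubectl logs needs -c to pick one.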

On the master node: create the pod

kubectl create -f pod.yaml

On the master node: basic management operations

Basic management:

# Create the pod resource
kubectl create -f pod.yaml
# Show the pod
kubectl get pods pod-test
# Show the pod's details
kubectl describe pod pod-test
# Replace the resource (delete and re-create it)
kubectl replace -f pod.yaml --force
# Delete the resource
kubectl delete pod pod-test

Docker Kubernetes: common YAML file directives

Common YAML directives

Notes on configuration files:

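- When defining a configuration, specify the latest stable API version (currently v1 for core objects such as Pod and Service).
- Store configuration files in a version-control repository outside the cluster, so that configurations can be rolled back, re-created, and restored quickly when needed.
- Write configuration files in YAML rather than JSON. Both formats work, but YAML is more user-friendly.
- Group related objects into a single file; this is usually easier to manage.
- Do not specify default values unnecessarily: simple, minimal configuration makes errors less likely.
- Put object descriptions in annotations to make objects easier to maintain.
- YAML is an intuitive, highly readable data-serialization language. Like XML it describes data, but its syntax is much simpler than XML's.
- YAML expresses structure through indentation: each item of a sequence starts with a hyphen, keys and values are separated by a colon, and in the compact (flow) style sequences are enclosed in square brackets and hashes (mappings) in curly braces.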
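A small, hypothetical fragment that puts these rules side by side: indentation for nesting, a hyphen for each item of a block sequence, a colon between key and value, and square brackets and curly braces for the flow forms of a sequence and a mapping. It is not a complete manifest:

```yaml
# Nesting is expressed purely through indentation (spaces, never tabs)
spec:
  replicas: 3                                   # key: value pair
  containers:                                   # block sequence: one "-" per item
    - name: nginx
      image: nginx:1.9
      command: ["nginx", "-g", "daemon off;"]   # flow sequence in [ ]
      resources:
        limits: {cpu: "500m", memory: "128Mi"}  # flow mapping in { }
```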

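YAML formatting rules:

- 1. Tab characters cannot be used for indentation; indent with spaces.
- 2. By convention, each level is indented by 2 spaces.
- 3. Put 1 space after a colon or hyphen, e.g. key: value.
- 4. “---” marks the start of a YAML document; it also separates multiple documents in one file.
- 5. “#” starts a comment.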
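Putting several of these recommendations together: related objects kept in one file and separated by ---, comments introduced with #, two-space indentation, and a description stored as an annotation. The annotation key description, the object names and the image tag are hypothetical; apps/v1 is the stable Deployment API (the apps/v1beta2 version used in the examples below was removed in Kubernetes 1.16):

```yaml
---
# Deployment running three nginx replicas
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  annotations:
    description: "Front-end web servers"   # hypothetical annotation
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.9
          ports:
            - containerPort: 80
---
# Service routing traffic to the pods labelled app=nginx
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
```

A single kubectl apply -f on such a file creates or updates both objects.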

- `apiVersion`: the API version
- `kind`: the type of resource object to create
- `metadata`: metadata such as the object's name, namespace, and labels
- `name`: the object's name

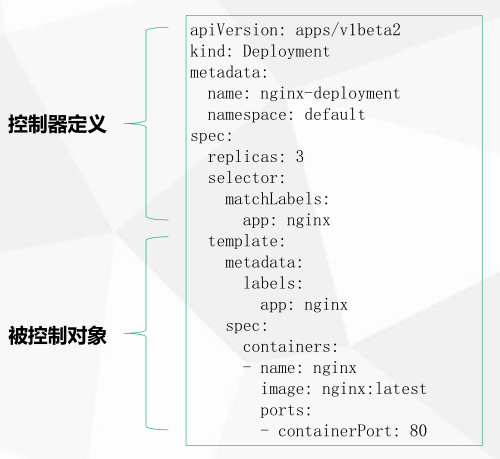

apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: nginx-deployment

- `spec`: the specification (desired state) of the resource
- `replicas`: the number of pod replicas; defaults to 1

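apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3

- `selector`: the label selector for the pods the resource manages
- `template`: the pod template describing the pods to create
- `matchLabels`: the labels a pod must carry to match the selector
- `labels`: the pod's labels, written as key: value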

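apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx

- `containers`: the list of containers in the pod
- `name`: the container name (each container is a list item starting with `- name:`)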

- `image`: the container image
- `ports`: the container ports to expose
- `containerPort`: the port number to expose (each port is a list item starting with `- containerPort:`)

apiVersion: apps/v1beta2
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.9
          ports:
            - containerPort: 80

- `env`: environment variables to add

apiVersion: v1
kind: Pod
metadata:
  name: pod-test
  labels:
    os: centos
spec:
  containers:
    - name: hello
      image: centos:6
      env:
        # Variable name (key)
        - name: Test
          # Variable value
          value: "123456"

- `command`: the command executed when the container starts

apiVersion: v1
kind: Pod
metadata:
  name: pod-test
  labels:
    os: centos
spec:
  containers:
    - name: hello
      image: centos:6
      command: ["bash","-c","while true;do date;sleep 1;done"]

- `restartPolicy`: the restart policy; one of Always (the default), OnFailure, or Never

apiVersion: v1
kind: Pod
metadata:
  name: pod-test
  labels:
    os: centos
spec:
  containers:
    - name: hello
      image: centos:6
  restartPolicy: OnFailure

- `livenessProbe`: a health check; the probe type can be httpGet, exec, or tcpSocket
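Besides httpGet, used in the example that follows, a probe can run a command (exec) or open a TCP connection (tcpSocket). Two alternative fragments, each meant to sit inside a container definition in place of the httpGet probe; the file /tmp/healthy, the port and the timing values are hypothetical:

```yaml
# exec: the probe succeeds when the command exits with status 0
livenessProbe:
  exec:
    command: ["cat", "/tmp/healthy"]
  initialDelaySeconds: 5
  periodSeconds: 10
---
# tcpSocket: the probe succeeds when a TCP connection to the port can be opened
livenessProbe:
  tcpSocket:
    port: 80
  initialDelaySeconds: 15
  periodSeconds: 20
```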

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.10
      ports:
        - containerPort: 80
      livenessProbe:
        # Probe type
        httpGet:
          # Path to request
          path: /index.html
          # Port to connect to
          port: 80

- `volumeMounts`: volumes mounted inside the container

- `volumes`: the volumes defined at the pod level; here a directory on the host (hostPath)

apiVersion: v1
kind: Pod
metadata:
  name: pod-test
  labels:
    test: centos
spec:
  containers:
    - name: hello-read
      image: centos:6
      # Volumes mounted inside the container
      volumeMounts:
        # Volume name
        - name: data
          # Mount path inside the container
          mountPath: /data
  # Pod-level volumes
  volumes:
    # Volume name
    - name: data
      # Back the volume with a directory on the host
      hostPath:
        # Path on the host
        path: /data

- `hostIP`: the host IP address the port listens on, i.e. which host addresses the port can be reached through

- `hostPort`: the port exposed on the host, mapped to the container's containerPort
- `protocol`: the protocol of the exposed port

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod2
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.10
      # Port configuration, including hostPort
      ports:
        # Port name
        - name: http
          # Container port
          containerPort: 80
          # Host IP to listen on, i.e. which host addresses the port is reachable through
          hostIP: 0.0.0.0
          # Port exposed on the host, mapped to containerPort
          hostPort: 89
          # Protocol
          protocol: TCP

- `clusterIP`: a fixed cluster IP address for a Service; it must lie within the cluster's service IP range

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - name: http
      protocol: TCP
      port: 888
      targetPort: 80
  clusterIP: "10.10.10.11"

- `type`: the Service type, e.g. ClusterIP (the default) or NodePort

- `nodePort`: the port opened on every node for a NodePort Service; by default it must be in the range 30000-32767

apiVersion: v1
kind: Service
metadata:
  name: nginx-service2
  labels:
    app: nginx
spec:
  selector:
    app: nginx
  ports:
    - name: http
      port: 8080
      targetPort: 80
  # Service type
  type: NodePort
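The NodePort example above does not set nodePort, so Kubernetes assigns one from the node-port range automatically. To choose the port yourself, set nodePort on the port entry; the value must fall inside that range (30000-32767 by default). The name nginx-service3 and the port 30080 are hypothetical:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service3        # hypothetical name
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - name: http
      port: 8080              # port on the Service's cluster IP
      targetPort: 80          # containerPort on the selected pods
      nodePort: 30080         # port opened on every node
```

The Service is then reachable from outside the cluster at http://<node-ip>:30080 on any node.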
