
This article walks through installing Kubernetes 1.13 on CentOS 7.6 with kubeadm. It also collects the following related guides: Deploying a Kubernetes 1.13 cluster on CentOS, part 1 (installing K8S with kubeadm); Installing Kubernetes 1.13.3 on CentOS 7 with kubeadm; Installing and deploying Kubernetes 1.14 on CentOS 7 with kubeadm; and Building a Kubernetes cluster on CentOS 7 with kubeadm.

Contents:

- Installing Kubernetes 1.13 on CentOS 7.6 with kubeadm
- Deploying a Kubernetes 1.13 cluster on CentOS, part 1 (installing K8S with kubeadm)
- Installing Kubernetes 1.13.3 on CentOS 7 with kubeadm
- Installing and deploying Kubernetes 1.14 on CentOS 7 with kubeadm
- Building a Kubernetes cluster on CentOS 7 with kubeadm

Installing Kubernetes 1.13 on CentOS 7.6 with kubeadm

Lab environment: VMware Fusion 11.0.2

Operating system: CentOS 7.6

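The VMs were given static IPs during OS installation, with English as the language and Shanghai as the time zone. Because Kubernetes does not support swap by default, no swap partition was created when partitioning the disk. You can ignore these details for now.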

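If a swap partition was created during installation, turn it off temporarily with swapoff -a; to turn it off permanently, comment out the swap entry in /etc/fstab. (This step was missing from the first version of this post and was added later.)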
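A minimal sketch of the permanent part, assuming GNU sed and the usual whitespace-separated fstab layout: the helper below comments out every active entry whose filesystem type is swap. The name comment_out_swap is hypothetical, and the fstab path is a parameter so you can try it on a copy before touching /etc/fstab. Run swapoff -a separately for the immediate effect.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Usage: comment_out_swap [file]   (defaults to /etc/fstab; needs root for the real file)
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Match lines that are not already comments and whose 3rd field (fs type) is "swap",
  # then prefix them with '#'. Commented lines are left alone, so re-running is safe.
  sed -Ei 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]])/#\1/' "$fstab"
}
```

For example, cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test && diff /etc/fstab /tmp/fstab.test shows the change before you apply it for real.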

Unless stated otherwise, run the following commands on both k8s2m and k8s2n.

1. Configure passwordless SSH login

Add the following entries to the hosts file on your local machine:

172.16.183.151 k8s2m
172.16.183.161 k8s2n

Then check whether the local machine already has an id_rsa.pub file; if not, generate one with ssh-keygen:

if [ -f "$HOME/.ssh/id_rsa.pub" ];then echo "File exists"; else ssh-keygen; fi

Install the local public key into the root account on each VM:

ssh-copy-id root@k8s2m
ssh-copy-id root@k8s2n

Passwordless login is now configured.

2. Fix the setlocale warning

Next, open two terminals and SSH into the VMs. The terminal prints the following warning:

-bash: warning: setlocale: LC_CTYPE: cannot change locale (UTF-8): No such file or directory

Run the following command in the terminal, then log out of SSH and log back in (or simply reboot the VM):

cat <<EOF > /etc/environment
LANG=en_US.UTF-8
LC_ALL=C
EOF

3. Set SELinux to permissive mode

setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

4. Stop and disable the firewall (firewalld)

systemctl disable firewalld && systemctl stop firewalld

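5. Add kubernetes.repo and docker-ce.repo

I originally created both files with cat <<EOF, but when I ran that over SSH from my Mac the repositories did not take effect, even though the file contents looked the same and even after yum clean all. So here I simply paste the contents in with an editor such as vi or vim.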

vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

vi /etc/yum.repos.d/docker-ce.repo

[docker-ce-stable]
name=Docker CE Stable - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]
name=Docker CE Stable - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/stable
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]
name=Docker CE Stable - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/stable
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge]
name=Docker CE Edge - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/edge
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge-debuginfo]
name=Docker CE Edge - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/edge
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge-source]
name=Docker CE Edge - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/edge
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]
name=Docker CE Test - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/test
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]
name=Docker CE Test - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/test
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]
name=Docker CE Test - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/test
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]
name=Docker CE Nightly - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/nightly
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]
name=Docker CE Nightly - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/debug-$basearch/nightly
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]
name=Docker CE Nightly - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/source/nightly
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
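One plausible cause of the cat <<EOF problem described above (an assumption; the post does not diagnose it): docker-ce.repo contains $basearch, and in an unquoted heredoc the shell substitutes its own, normally empty, $basearch variable, so yum would see URLs like .../centos/7//stable. Quoting the delimiter ('EOF') keeps the text literal. A sketch with a hypothetical helper that writes only the enabled [docker-ce-stable] section:

```shell
# Hypothetical helper: write the [docker-ce-stable] repo section to $1
# (default /etc/yum.repos.d/docker-ce.repo).
write_docker_ce_repo() {
  local target="${1:-/etc/yum.repos.d/docker-ce.repo}"
  # Quoted 'EOF': $basearch is written literally so that yum, not the shell, expands it.
  cat > "$target" <<'EOF'
[docker-ce-stable]
name=Docker CE Stable - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/7/$basearch/stable
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
EOF
}
```

The same quoting applies if you prefer to write the full file shown above with cat instead of an editor.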

6. Install docker-ce

The kubeadm version here is 1.13.1, and the newest Docker version it supports should be 18.06.x. Run yum list docker-ce --showduplicates | sort -r to list the available Docker versions:

docker-ce.x86_64 3:18.09.0-3.el7 docker-ce-stable
docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable
docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable
docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable
docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable
docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

Here we choose 18.06.1.ce-3.el7.

Install docker-ce:

yum install -y docker-ce-18.06.1.ce-3.el7

Start the docker service and enable it at boot:

systemctl enable docker && systemctl start docker

7. Install kubelet, kubeadm and kubectl

Install the tools Kubernetes needs:

yum install -y kubelet kubeadm kubectl

Start the kubelet service and enable it at boot (docker was already enabled in step 6):

systemctl enable kubelet && systemctl start kubelet

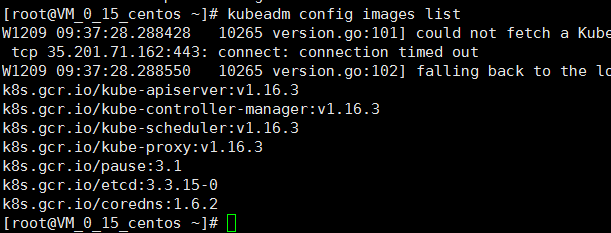

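8. Pull the images

List the images kubeadm needs, use sed to turn the list into docker pull commands against mirror repositories, and execute them:

kubeadm config images list | sed -e 's#k8s.gcr.io\/coredns#coredns\/coredns#g' | sed -e 's/^/docker pull /g' -e 's#k8s.gcr.io#mirrorgooglecontainers#g' | sh -x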

The pulled images are tagged differently from the images kubeadm would pull itself, so retag them:

docker images | grep -E 'mirrorgooglecontainers|coredns/coredns' | awk '{print "docker tag ",$1":"$2,$1":"$2}' | sed -e 's#mirrorgooglecontainers#k8s.gcr.io#2' | sed -e 's#coredns#k8s.gcr.io#3' | sh -x

Finally, remove the now-redundant tags:

docker images | grep -E 'mirrorgooglecontainers|coredns/coredns' | awk '{print "docker rmi ",$1":"$2}' | sh -x
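The three pipelines above can also be sketched as one loop that pulls, retags and untags each image. This assumes, as the commands above do, that the mirrorgooglecontainers and coredns/coredns repositories on Docker Hub carry the same tags as k8s.gcr.io; mirror_for and pull_via_mirror are hypothetical helper names.

```shell
# Hypothetical helper: map an image name kubeadm expects to the mirror used in this step.
mirror_for() {
  local image="$1"
  case "$image" in
    k8s.gcr.io/coredns:*) echo "coredns/coredns:${image#k8s.gcr.io/coredns:}" ;;
    k8s.gcr.io/*)         echo "mirrorgooglecontainers/${image#k8s.gcr.io/}" ;;
    *)                    echo "$image" ;;  # not a k8s.gcr.io image: pull as-is
  esac
}

# Hypothetical helper: pull each required image from its mirror, retag it with the name
# kubeadm expects, then drop the mirror tag (docker rmi on an extra tag only untags).
pull_via_mirror() {
  local image mirror
  for image in $(kubeadm config images list); do
    mirror=$(mirror_for "$image")
    if [ "$mirror" = "$image" ]; then
      docker pull "$image"
      continue
    fi
    docker pull "$mirror" && docker tag "$mirror" "$image" && docker rmi "$mirror"
  done
}
```

Doing the mapping per image avoids the sed occurrence counting (#2, #3) used above, which depends on the exact column layout of docker images.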

9. Configure net.bridge.bridge-nf-call-iptables

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

Then restart kubelet:

systemctl daemon-reload

systemctl restart kubelet
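The same drop-in file can be written by a small function, sketched here under a hypothetical name. It also adds net.ipv4.ip_forward = 1, which the second guide below includes. Loading br_netfilter and applying the settings are left to the caller because both need root; on these kernels the net.bridge.* keys generally only appear once the br_netfilter module is loaded, which is why the second guide runs modprobe br_netfilter first.

```shell
# Hypothetical helper: write the sysctl drop-in used by this guide.
# $1 is the target file (default /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local target="${1:-/etc/sysctl.d/k8s.conf}"
  # Quoted 'EOF': the body is written literally, with no shell expansion.
  cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

As root, a typical sequence would be: modprobe br_netfilter && write_k8s_sysctl && sysctl --system.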

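10. Create the Kubernetes master (run this only on the master node, k8s2m)

On the master, run the following command to create the Kubernetes master. The reason for passing --pod-network-cidr=10.244.0.0/16 is given in the flannel note quoted further down.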

kubeadm init --pod-network-cidr=10.244.0.0/16

When the command finishes, run the following as its output instructs. If you are already root, the third command is not actually needed:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

The output also contains a command of the following form. Copy it, because it has to be run on the worker node (k8s2n):

kubeadm join 172.16.183.151:6443 --token ############## --discovery-token-ca-cert-hash #############################

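Finally, install a pod network add-on; flannel is used here. The kubeadm documentation has the following note before the installation step, which is also why --pod-network-cidr was passed above:

Link: https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/#tabs-pod-install-4

For flannel to work correctly, you must pass --pod-network-cidr=10.244.0.0/16 to kubeadm init.

Install flannel: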

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

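To view pods or nodes, run the commands below. Pods run on Docker, so their containers can also be seen with docker ps. Nodes are not namespaced, so --all-namespaces is only needed for pods:

kubectl get pods --all-namespaces
kubectl get nodes

To watch the status in real time, use watch; -n2 refreshes the output every 2 seconds:

watch -n2 kubectl get pods --all-namespaces

watch -n2 kubectl get nodes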

11. Join the worker node (k8s2n) to the master (k8s2m)

Finally, run the following on the worker node (k8s2n). It is the command saved at the end of kubeadm init on the master:

kubeadm join 172.16.183.151:6443 --token ############## --discovery-token-ca-cert-hash #############################

Once kubectl get nodes on the master (k8s2m) lists both nodes with status Ready, the job is done for now.
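Instead of watching the output by eye, the check can be scripted. This is a convenience sketch, not part of the original steps: count_ready_nodes (a hypothetical helper) counts rows whose STATUS column is exactly Ready in kubectl get nodes --no-headers output, and wait_for_ready_nodes polls every 2 seconds until at least the given number of nodes (2 here: k8s2m and k8s2n) are Ready.

```shell
# Hypothetical helper: count nodes whose STATUS (2nd column) is exactly "Ready"
# in `kubectl get nodes --no-headers` output read from stdin.
# Rows such as "NotReady" or "Ready,SchedulingDisabled" are not counted.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical helper: block until at least $1 nodes (default 2) are Ready, polling every 2 s.
wait_for_ready_nodes() {
  local want="${1:-2}"
  until [ "$(kubectl get nodes --no-headers 2>/dev/null | count_ready_nodes)" -ge "$want" ]; do
    sleep 2
  done
  kubectl get nodes
}
```

On the master, wait_for_ready_nodes 2 returns once both nodes report Ready.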

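Deploying a Kubernetes 1.13 cluster on CentOS, part 1 (installing K8S with kubeadm)

Reference: https://www.kubernetes.org.cn/4956.html

1. Preparation

Note: the preparation steps must be run on every host in the cluster.

1.1 System configuration

Before installing, make the following preparations. The three CentOS hosts are: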

cat /etc/hosts

192.168.0.19 tf-01

192.168.0.20 tf-02

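192.168.0.21 tf-03

If a firewall is enabled on the hosts, open the ports required by each Kubernetes component; see the "Check required ports" section of Installing kubeadm. For simplicity, the firewall is disabled on every node here: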

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux:

setenforce 0

vi /etc/selinux/config

SELINUX=disabled

Create the file /etc/sysctl.d/k8s.conf with the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

Run the following commands to apply the changes:

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

1.2 Prerequisites for enabling ipvs in kube-proxy

Since ipvs has been merged into the mainline kernel, enabling ipvs for kube-proxy requires loading the following kernel modules:

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

Run the following script on all Kubernetes nodes:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

The script above creates /etc/sysconfig/modules/ipvs.modules, which makes sure the required modules are loaded automatically after a node reboots.

Use lsmod | grep -e ip_vs -e nf_conntrack_ipv4 to check that the required kernel modules are loaded:

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Next, make sure the ipset package is installed on every node. To make the ipvs proxy rules easier to inspect, it is also a good idea to install the management tool ipvsadm:

yum install ipset

yum install ipvsadm

If these prerequisites are not met, kube-proxy falls back to iptables mode even when its configuration enables ipvs mode.
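To check the result more mechanically than by reading lsmod output, here is a sketch with a hypothetical helper name. One assumption worth flagging: on kernels 4.19 and later nf_conntrack_ipv4 was folded into nf_conntrack, so with the 4.20 kernel installed in the third guide below the module to look for is nf_conntrack. The helper picks the conntrack module name from the kernel release and prints each required module that is not loaded.

```shell
# Hypothetical helper: read `lsmod` output on stdin and print each ipvs-related module
# that is NOT loaded. $1 is the kernel release (default: the running kernel); from 4.19
# on, nf_conntrack_ipv4 no longer exists and nf_conntrack is checked instead.
missing_ipvs_modules() {
  local release="${1:-$(uname -r)}" major minor conntrack loaded mod
  major=${release%%.*}
  minor=${release#*.}
  minor=${minor%%.*}
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    conntrack=nf_conntrack
  else
    conntrack=nf_conntrack_ipv4
  fi
  loaded=$(awk '{ print $1 }')   # first column of lsmod = module name
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$conntrack"; do
    printf '%s\n' "$loaded" | grep -qxF "$mod" || echo "$mod"
  done
}
```

Usage: lsmod | missing_ipvs_modules prints nothing when everything is loaded; any module it prints can be loaded with modprobe -- <name>, as in the script above.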

1.3 Install Docker

Since 1.6, Kubernetes uses the CRI (Container Runtime Interface). The default container runtime is still Docker, through the dockershim CRI implementation built into the kubelet.

Install the Docker yum repository:

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

List the available Docker versions:

yum list docker-ce.x86_64 --showduplicates |sort -r

docker-ce.x86_64 3:18.09.0-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

Kubernetes 1.12 has been validated against Docker 1.11.1, 1.12.1, 1.13.1, 17.03, 17.06, 17.09 and 18.06; note that the minimum Docker version supported by Kubernetes 1.12 is 1.11.1. Kubernetes 1.13 does not change the Docker version requirements. Here we install Docker 18.06.1 on every node.

yum makecache fast

yum install -y --setopt=obsoletes=0 docker-ce-18.06.1.ce-3.el7

systemctl start docker

systemctl enable docker

Confirm that the default policy of the FORWARD chain in the iptables filter table is ACCEPT:

iptables -nvL

Chain INPUT (policy ACCEPT 263 packets, 19209 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

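0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

Starting with version 1.13, Docker changed its default firewall rules and set the default policy of the FORWARD chain in the iptables filter table to DROP, which breaks communication between Pods on different Nodes of a Kubernetes cluster. With Docker 18.06 installed here, however, the default policy is ACCEPT again. I don't know in which version this was changed back; the 17.06 we run in production still needs the policy adjusted by hand.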
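For the Docker versions that still need the manual adjustment, the check and fix can be sketched as below (hypothetical helper names). forward_policy reads iptables -nvL output on stdin and prints the FORWARD chain's default policy; ensure_forward_accept switches it to ACCEPT with iptables -P. That change does not survive a reboot and, as far as I know, Docker may set DROP again when it restarts, so treat this as a check rather than a permanent fix.

```shell
# Hypothetical helper: print the default policy of the FORWARD chain from `iptables -nvL` output on stdin.
forward_policy() {
  # The chain header looks like: Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)
  awk '$1 == "Chain" && $2 == "FORWARD" { print $4 }'
}

# Hypothetical helper (run as root): set the FORWARD policy to ACCEPT if it is anything else.
ensure_forward_accept() {
  if [ "$(iptables -nvL | forward_policy)" != "ACCEPT" ]; then
    iptables -P FORWARD ACCEPT
  fi
}
```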

2. Deploying Kubernetes with kubeadm

2.1 Install kubeadm and kubelet

Install kubeadm and kubelet on every node:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

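Note: this differs from the referenced article, which uses Google's repository. Because Google cannot be reached here, the Aliyun mirror is used instead, with GPG checking disabled (gpgcheck=0).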

yum makecache fast

yum install -y kubelet kubeadm kubectl

...

Installed:

kubeadm.x86_64 0:1.13.0-0 kubectl.x86_64 0:1.13.0-0 kubelet.x86_64 0:1.13.0-0

Dependency Installed:

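cri-tools.x86_64 0:1.12.0-0 kubernetes-cni.x86_64 0:0.6.0-0 socat.x86_64 0:1.7.3.2-2.el7

The output shows that three dependencies were installed as well: cri-tools, kubernetes-cni and socat.

- Upstream upgraded the cni dependency to 0.6.0 in Kubernetes 1.9, and it is still that version in the current 1.12.
- socat is a dependency of kubelet.
- cri-tools is the command-line tool for the CRI (Container Runtime Interface).

Running kubelet --help shows that most of kubelet's former command-line flags are now DEPRECATED, for example: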

......

--address 0.0.0.0 The IP address for the Kubelet to serve on (set to 0.0.0.0 for all IPv4 interfaces and `::` for all IPv6 interfaces) (default 0.0.0.0) (DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ for more information.)

......

Instead, upstream recommends passing a configuration file with --config and setting in that file what these flags used to configure; see Set Kubelet parameters via a config file. Kubernetes does this to support Dynamic Kubelet Configuration; see Reconfigure a Node's Kubelet in a Live Cluster.

The kubelet configuration file must be in JSON or YAML format; see the config-file documentation linked above for details.

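Since Kubernetes 1.8, system swap must be turned off; otherwise kubelet will not start with its default configuration. (If swap cannot be turned off, the kubelet configuration has to be changed instead; see below.)

To turn off swap:

swapoff -a

Then edit /etc/fstab to comment out the automatic swap mount, and use free -m to confirm that swap is off: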

vim /etc/fstab

free -m

Tune swappiness by adding the following line to /etc/sysctl.d/k8s.conf:

vm.swappiness=0

Run sysctl -p /etc/sysctl.d/k8s.conf to apply the change:

sysctl -p /etc/sysctl.d/k8s.conf

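If the cluster hosts also run other services that turning off swap might affect, change the kubelet configuration to lift this restriction instead:

The kubelet startup flag --fail-swap-on=false removes the requirement that swap be off. Edit /etc/sysconfig/kubelet and add KUBELET_EXTRA_ARGS=--fail-swap-on=false: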

KUBELET_EXTRA_ARGS=--fail-swap-on=false
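Because kubelet flags are being deprecated in favour of a config file (as noted above), the same setting can also be expressed there as failSwapOn: false. A minimal sketch, assuming the kubelet.config.k8s.io/v1beta1 KubeletConfiguration API used by kubelets of this era; the helper name and output path are hypothetical, and how the file reaches the kubelet (its --config flag, or a kubeadm configuration) is left open. Keep in mind that kubeadm init writes its own /var/lib/kubelet/config.yaml.

```shell
# Hypothetical helper: write a KubeletConfiguration that tolerates swap,
# i.e. the config-file form of --fail-swap-on=false. $1 is the output file (required).
write_kubelet_swap_config() {
  local target="${1:?usage: write_kubelet_swap_config <file>}"
  cat > "$target" <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
failSwapOn: false
EOF
}
```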

2.2 Initialize the cluster with kubeadm init

Enable the kubelet service at boot on every node:

Installing Kubernetes 1.13.3 on CentOS 7 with kubeadm


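What is kubeadm?

Most engineers who work with Kubernetes should know how to use kubeadm. It is an important tool for managing the cluster lifecycle, from creation through configuration to upgrades. kubeadm bootstraps production clusters on existing hardware and configures the core Kubernetes components following best practices, providing a secure and simple join flow for new nodes and supporting easy upgrades.

The Kubernetes documentation Creating a single master cluster with kubeadm states that kubeadm's main features are in Beta and were expected to reach general availability (GA) in 2018, which shows that kubeadm is getting ever closer to production use. (kubeadm did reach GA in Kubernetes 1.13.)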

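What is the Container Storage Interface (CSI)?

The Container Storage Interface was introduced as an alpha feature in 1.9, moved to beta in 1.10, and has now (in 1.13) finally reached GA. With CSI, the Kubernetes volume layer becomes truly extensible: third-party storage vendors can write code that interoperates with Kubernetes directly, without touching any core Kubernetes code. The CSI specification itself has also reached 1.0.

What is CoreDNS?

In 1.11, CoreDNS reached general availability for DNS-based service discovery. In 1.13, CoreDNS replaces kube-dns as the default DNS server for Kubernetes. CoreDNS is a general-purpose, authoritative DNS server that provides a backwards-compatible yet extensible integration with Kubernetes. It has fewer moving parts than the previous DNS server, because it is a single executable running as a single process, and it supports flexible use cases through custom DNS entries. It is also written in Go, which makes it memory-safe.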

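1. Environment preparation

The example in this article uses four machines; their hostnames and IP addresses are:

| IP address | Hostname |
|---|---|
| 10.0.0.100 | c0 (master) |
| 10.0.0.101 | c1 |
| 10.0.0.102 | c2 |
| 10.0.0.103 | c3 |

The hosts file on every machine, using c0 as an example: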

[root@c0 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.100 c0

10.0.0.101 c1

10.0.0.102 c2

10.0.0.103 c3

1.1 Network configuration

Do this on every machine; c0 is shown below:

[root@c0 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth0

UUID=6d8d9ad6-37b5-431a-ab16-47d0aa00d01f

DEVICE=eth0

ONBOOT=yes

IPADDR0=10.0.0.100

PREFIX0=24

GATEWAY0=10.0.0.1

DNS1=10.0.0.1

DNS2=8.8.8.8

Restart the network:

[root@c0 ~]# service network restart

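Switch the yum repositories to a domestic mirror. Note that the commands below download both the Aliyun and the 163 repository files; they define the same repositories, which is what later produces the "Repository base is listed more than once" warnings, so downloading just one of them is enough: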

[root@c0 ~]# yum install -y wget

[root@c0 ~]# cd /etc/yum.repos.d/

[root@c0 yum.repos.d]# mv CentOS-Base.repo CentOS-Base.repo.bak

[root@c0 yum.repos.d]# wget http://mirrors.aliyun.com/repo/Centos-7.repo

[root@c0 yum.repos.d]# wget http://mirrors.163.com/.help/CentOS7-Base-163.repo

[root@c0 yum.repos.d]# yum clean all

[root@c0 yum.repos.d]# yum makecache

Install networking and basic utility packages:

[root@c0 ~]# yum install net-tools checkpolicy gcc dkms foomatic openssh-server bash-completion -y

1.2 Change the hostname

Set the hostname on each machine in turn; c0 is shown below:

[root@c0 ~]# hostnamectl --static set-hostname c0

[root@c0 ~]# hostnamectl status

Static hostname: c0

Icon name: computer-vm

Chassis: vm

Machine ID: 04c3f6d56e788345859875d9f49bd4bd

Boot ID: ba02919abe4245aba673aaf5f778ad10

Virtualization: kvm

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-957.el7.x86_64

Architecture: x86-64

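1.3 Configure passwordless SSH login

Generate a key pair separately on every machine; c0 is shown below:

[root@c0 ~]# ssh-keygen

# press Enter at every prompt

Copy the key generated by ssh-keygen to every machine, c0 itself included; c0 is shown below: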

[root@c0 ~]# ssh-copy-id c0

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'c0 (10.0.0.100)' can't be established.

ECDSA key fingerprint is SHA256:O8y8TBSZfBYiHPvJPPuAd058zkfsOfnBjvnf/3cvOCQ.

ECDSA key fingerprint is MD5:da:3c:29:65:f2:86:e9:61:cb:39:57:5b:5e:e2:77:7c.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c0's password:

[root@c0 ~]# rm -rf ~/.ssh/known_hosts

[root@c0 ~]# clear

[root@c0 ~]# ssh-copy-id c0

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'c0 (10.0.0.100)' can't be established.

ECDSA key fingerprint is SHA256:O8y8TBSZfBYiHPvJPPuAd058zkfsOfnBjvnf/3cvOCQ.

ECDSA key fingerprint is MD5:da:3c:29:65:f2:86:e9:61:cb:39:57:5b:5e:e2:77:7c.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c0's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c0'"

and check to make sure that only the key(s) you wanted were added.

[root@c0 ~]# ssh-copy-id c1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'c1 (10.0.0.101)' can't be established.

ECDSA key fingerprint is SHA256:O8y8TBSZfBYiHPvJPPuAd058zkfsOfnBjvnf/3cvOCQ.

ECDSA key fingerprint is MD5:da:3c:29:65:f2:86:e9:61:cb:39:57:5b:5e:e2:77:7c.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c1'"

and check to make sure that only the key(s) you wanted were added.

[root@c0 ~]# ssh-copy-id c2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'c2 (10.0.0.102)' can't be established.

ECDSA key fingerprint is SHA256:O8y8TBSZfBYiHPvJPPuAd058zkfsOfnBjvnf/3cvOCQ.

ECDSA key fingerprint is MD5:da:3c:29:65:f2:86:e9:61:cb:39:57:5b:5e:e2:77:7c.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c2'"

and check to make sure that only the key(s) you wanted were added.

[root@c0 ~]# ssh-copy-id c3

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'c3 (10.0.0.103)' can't be established.

ECDSA key fingerprint is SHA256:O8y8TBSZfBYiHPvJPPuAd058zkfsOfnBjvnf/3cvOCQ.

ECDSA key fingerprint is MD5:da:3c:29:65:f2:86:e9:61:cb:39:57:5b:5e:e2:77:7c.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c3'"

and check to make sure that only the key(s) you wanted were added.

Test that key-based login works:

[root@c0 ~]# for N in $(seq 0 3); do ssh c$N hostname; done;

c0

c1

c2

c3

1.4 Disable the firewall

Run the following command on every machine; c0 is shown below:

[root@c0 ~]# systemctl stop firewalld && systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

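1.5 Disable swap

Run the following command on every machine; c0 is shown below:

[root@c0 ~]# swapoff -a

Before and after turning swap off, you can check its status with free -h; afterwards, the swap total should be 0.

Edit /etc/fstab and comment out the last entry, /dev/mapper/centos-swap swap; c0 is shown below: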

[root@c0 ~]# sed -i "s/\/dev\/mapper\/centos-swap/# \/dev\/mapper\/centos-swap/" /etc/fstab

[root@c1 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Mon Jan 28 11:49:11 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=93572ab6-90da-4cfe-83a4-93be7ad8597c /boot xfs defaults 0 0

# /dev/mapper/centos-swap swap swap defaults 0 0

1.6 Disable SELinux

Turn off SELinux on every machine; c0 is shown below:

[root@c0 ~]# setenforce 0

setenforce: SELinux is disabled

[root@c0 ~]# sed -i "s/SELINUX=enforcing/SELINUX=permissive/" /etc/selinux/config

[root@c0 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=permissive

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

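SELinux is Security-Enhanced Linux. Running setenforce 0 and the sed command above sets SELinux to permissive mode, which effectively disables it. This is required so that containers can access the host filesystem, which Pod networking needs, for example. You have to do this until SELinux support is improved in the kubelet.

1.7 Configure iptables

Do this on every machine; c0 is shown below: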

[root@c0 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@c0 ~]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

* Applying /etc/sysctl.conf ...

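Some RHEL/CentOS 7 users have run into traffic being routed incorrectly because iptables was bypassed. Make sure net.bridge.bridge-nf-call-iptables is set to 1 in your sysctl configuration.

1.8 Install NTP

Install the NTP time-synchronization tool on every machine and start it: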

[root@c0 ~]# yum install ntp -y

Enable NTP at boot and start it now:

[root@c0 ~]# systemctl enable ntpd && systemctl start ntpd

Check the time on each machine in turn:

[root@c0 ~]# for N in $(seq 0 3); do ssh c$N date; done;

Sat Feb 9 18:11:48 CST 2019

Sat Feb 9 18:11:48 CST 2019

Sat Feb 9 18:11:49 CST 2019

Sat Feb 9 18:11:49 CST 2019
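Reading four date outputs by eye works for a small cluster; a slightly more mechanical check is sketched below. clock_spread is a hypothetical helper that prints the difference in seconds between the earliest and latest epoch timestamps read on stdin. Because the hosts are queried one after another over SSH, a spread of a second or so is expected even when NTP is working.

```shell
# Hypothetical helper: print max - min of the epoch timestamps (one per line) on stdin.
clock_spread() {
  awk 'NR == 1 { min = $1; max = $1 }
       { if ($1 < min) min = $1; if ($1 > max) max = $1 }
       END { print max - min }'
}
```

With the c0 to c3 hostnames used here: for N in $(seq 0 3); do ssh c$N date +%s; done | clock_spread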

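1.9 Upgrade the kernel

The 3.10 kernel lacks the ip_vs_fo.ko module, which keeps kube-proxy from enabling ipvs mode. ip_vs_fo.ko first appeared in kernel 3.19, and no kernel of that version is available in the common RPM repositories for Red Hat-family distributions.

Do this on every machine; c0 is shown below: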

[root@c0 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

[root@c0 ~]# yum --enablerepo=elrepo-kernel install kernel-ml-devel kernel-ml -y

After rebooting, select the new kernel manually in the boot menu, then run the following command to check the kernel now in use:

[root@c0 ~]# hostnamectl

Static hostname: c0

Icon name: computer-vm

Chassis: vm

Machine ID: 04c3f6d56e788345859875d9f49bd4bd

Boot ID: 40a19388698f4907bd233a8cff76f36e

Virtualization: kvm

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 4.20.7-1.el7.elrepo.x86_64

Architecture: x86-64
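Picking the new kernel by hand at every boot is easy to forget. A sketch of making it the default, assuming a BIOS-booted CentOS 7 whose GRUB2 config is /boot/grub2/grub.cfg and whose /etc/default/grub uses GRUB_DEFAULT=saved (both assumptions; UEFI systems keep grub.cfg elsewhere): list the menu entries with their indexes using the hypothetical helper list_grub_entries, then pass the index of the new kernel to grub2-set-default.

```shell
# Hypothetical helper: print top-level GRUB2 menu entries as "index : title".
# The path defaults to the usual BIOS location on CentOS 7 (an assumption).
list_grub_entries() {
  local cfg="${1:-/boot/grub2/grub.cfg}"
  # With ' as the field separator, a line like
  #   menuentry 'CentOS Linux (4.20.7-1.el7.elrepo.x86_64) 7 (Core)' --class centos ...
  # splits into $1 = "menuentry " and $2 = the entry title.
  awk -F"'" '$1 == "menuentry " { print i++ " : " $2 }' "$cfg"
}
```

For example, if list_grub_entries shows the elrepo kernel as entry 0, run grub2-set-default 0 as root and reboot; hostnamectl should then report the new kernel without manual selection.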

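2. Install Docker 18.06.1-ce

2.1 Remove old versions of Docker

The removal command from the official documentation:

$ sudo yum remove docker \
                  docker-client \
                  docker-client-latest \
                  docker-common \
                  docker-latest \
                  docker-latest-logrotate \
                  docker-logrotate \
                  docker-engine

Another way to remove an old Docker installation is to first list the Docker packages that are installed: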

[root@c0 ~]# yum list installed | grep docker

Repository base is listed more than once in the configuration

Repository updates is listed more than once in the configuration

Repository extras is listed more than once in the configuration

Repository centosplus is listed more than once in the configuration

containerd.io.x86_64 1.2.2-3.el7 @docker-ce-stable

docker-ce.x86_64 3:18.09.1-3.el7 @docker-ce-stable

docker-ce-cli.x86_64 1:18.09.1-3.el7 @docker-ce-stable

Remove the installed Docker packages:

[root@c0 ~]# yum -y remove docker-ce.x86_64 docker-ce-cli.x86_64 containerd.io.x86_64

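Delete Docker images and containers:

[root@c0 ~]# rm -rf /var/lib/docker

2.2 Set up the repository

Install the required packages: yum-utils provides the yum-config-manager utility, and device-mapper-persistent-data and lvm2 are required by the devicemapper storage driver.

Do this on every machine; c0 is shown below: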

[root@c0 ~]# sudo yum install -y yum-utils device-mapper-persistent-data lvm2

[root@c0 ~]# sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

2.3 Install Docker

[root@c0 ~]# sudo yum install docker-ce-18.06.1.ce-3.el7 -y

2.4 Start Docker

[root@c0 ~]# systemctl enable docker && systemctl start docker

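3. Make sure the MAC address and product_uuid are unique on every node

- You can get the MAC addresses of the network interfaces with ip link or ifconfig -a.
- You can check the product_uuid with sudo cat /sys/class/dmi/id/product_uuid or dmidecode -s system-uuid.

Hardware devices generally have unique addresses, but some virtual machines may end up with identical values. Kubernetes uses these values to uniquely identify the nodes in a cluster; if they are not unique on each node, the installation may fail.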
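With several VMs cloned from one image, comparing these values by eye is error-prone. A sketch under stated assumptions: collect host/value pairs over SSH (the c0 to c3 hostnames and passwordless root SSH come from the steps above), and let the hypothetical helper find_duplicate_ids print any value shared by more than one host.

```shell
# Hypothetical helper: read "host value" lines on stdin and print every value that
# appears on more than one host, followed by the hosts that share it.
find_duplicate_ids() {
  awk '{ hosts[$2] = hosts[$2] " " $1; count[$2]++ }
       END { for (id in count) if (count[id] > 1) print id ":" hosts[id] }'
}

# Hypothetical collector for product_uuid (reading it requires root on each host).
collect_product_uuids() {
  local n
  for n in 0 1 2 3; do
    echo "c$n $(ssh "c$n" cat /sys/class/dmi/id/product_uuid)"
  done
}
```

collect_product_uuids | find_duplicate_ids prints nothing when every UUID is unique; the same helper works on MAC addresses if you collect those instead.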

4. Install Kubernetes 1.13.3

Master node (required inbound ports):

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 6443* | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

Worker nodes (required inbound ports):

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services** | All |
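This guide disables firewalld, but if you would rather keep it running (the second guide above mentions opening the required ports as the alternative), the ports in these tables can be opened instead. A minimal sketch: ports_for_role and open_k8s_ports are hypothetical helpers, the port lists are copied from the tables above, and any ports needed by the chosen pod network add-on are not included.

```shell
# Hypothetical helper: inbound TCP ports from the tables above, by node role.
ports_for_role() {
  case "$1" in
    master) echo "6443 2379-2380 10250 10251 10252" ;;
    worker) echo "10250 30000-32767" ;;
    *) echo "unknown role: $1 (expected master or worker)" >&2; return 1 ;;
  esac
}

# Hypothetical helper (run as root on the node): open those ports permanently in firewalld.
open_k8s_ports() {
  local ports port
  ports=$(ports_for_role "$1") || return 1
  for port in $ports; do
    firewall-cmd --permanent --add-port="${port}/tcp"
  done
  firewall-cmd --reload
}
```

For example, open_k8s_ports master on c0 and open_k8s_ports worker on c1 to c3.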

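4.1 Install kubeadm, kubelet and kubectl

Install the following packages on every machine:

- kubeadm: the command that bootstraps the cluster.
- kubelet: runs on every node in the cluster and starts pods, containers and so on.
- kubectl: the command-line tool for talking to the cluster.

4.1.1 Create kubernetes.repo using the Aliyun mirror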

[root@c0 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

4.1.2 Install kubeadm 1.13.3, kubelet 1.13.3 and kubectl 1.13.3

List the available versions:

[root@c0 ~]# yum list --showduplicates | grep 'kubeadm\|kubectl\|kubelet'

Install kubeadm 1.13.3, kubelet 1.13.3 and kubectl 1.13.3:

[root@c0 ~]# yum install -y kubelet-1.13.3 kubeadm-1.13.3 kubectl-1.13.3 --disableexcludes=kubernetes

Do not start kubelet yet; for now, just enable it at boot:

[root@c0 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

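4.1.3 Modify the kubelet configuration file

Check which files the kubelet package installed:

[root@c0 ~]# rpm -ql kubelet

/etc/kubernetes/manifests # manifests directory
/etc/sysconfig/kubelet # configuration file
/etc/systemd/system/kubelet.service # unit file
/usr/bin/kubelet # main binary

Modify the kubelet configuration file: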

[root@c0 ~]# sed -i "s/KUBELET_EXTRA_ARGS=/KUBELET_EXTRA_ARGS=\"--fail-swap-on=false\"/" /etc/sysconfig/kubelet

[root@c0 ~]# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

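4.2 Initialize the master node

On the first run, kubeadm init is slow because it has to download the Docker images; you can pull them in advance with kubeadm config images pull. kubeadm init first runs a series of pre-flight checks to make sure the machine meets the requirements for running Kubernetes; these checks print warnings and abort the initialization if they find errors. kubeadm init then downloads and installs the cluster's control-plane components, which can take a few minutes. When the command finishes, the kubelet has been started automatically and runs the control-plane components from their Docker images.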
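The flags used in the kubeadm init command that follows can also be kept in a kubeadm configuration file, which makes the setup easier to repeat. A minimal sketch, assuming the kubeadm.k8s.io/v1beta1 ClusterConfiguration API that kubeadm 1.13 uses; write_kubeadm_config is a hypothetical helper, and the file path in the usage example is arbitrary.

```shell
# Hypothetical helper: write a kubeadm ClusterConfiguration equivalent to
#   kubeadm init --kubernetes-version=v1.13.3 --pod-network-cidr=10.244.0.0/16
# $1 is the output file (required).
write_kubeadm_config() {
  local target="${1:?usage: write_kubeadm_config <file>}"
  cat > "$target" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.3
networking:
  podSubnet: 10.244.0.0/16
EOF
}
```

For example: write_kubeadm_config /root/kubeadm-config.yaml, then kubeadm config images pull --config /root/kubeadm-config.yaml, then kubeadm init --config /root/kubeadm-config.yaml.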

[root@c0 ~]# kubeadm init --kubernetes-version=v1.13.3 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.13.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [c0 localhost] and IPs [10.0.0.100 127.0.0.1 ::1]

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [c0 localhost] and IPs [10.0.0.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [c0 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.100]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 21.504487 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "c0" as an annotation

[mark-control-plane] Marking the node c0 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node c0 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: m4f2wo.ich4mi5dj85z24pz

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.0.0.100:6443 --token m4f2wo.ich4mi5dj85z24pz --discovery-token-ca-cert-hash sha256:dd7a5193aeabee6fe723984f557d121a074aa4e40cdd3d701741d585a3a2f43c

Back up the kubeadm join command from the kubeadm init output: you will need it to add nodes to the cluster.
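One way to keep that command safe is to capture the init output and extract the join line from it. The sketch below assumes the output was saved to a file (kubeadm-init.log is a hypothetical name, for example produced with kubeadm init ... | tee kubeadm-init.log). It also assumes the join command is on a single line, as in the 1.13 output above. Newer kubeadm releases may wrap the command across lines, and this helper does not handle that.

```shell
#!/usr/bin/env bash
# Print the first "kubeadm join ..." line found on stdin, without leading spaces.
# Assumes the join command is on one line, as in the kubeadm 1.13 output above.
extract_join_cmd() {
  grep -m 1 -E '^[[:space:]]*kubeadm join ' | sed -E 's/^[[:space:]]+//'
}

# Usage (file names are hypothetical):
#   extract_join_cmd < kubeadm-init.log > join-command.txt
```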

To let a regular user run kubectl, run the following commands. They are also part of the kubeadm init output:

[root@c0 ~]# mkdir -p $HOME/.kube

[root@c0 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@c0 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

Use docker images to list the images that have already been pulled:

[root@c0 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-apiserver v1.13.3 fe242e556a99 9 days ago 181MB

k8s.gcr.io/kube-controller-manager v1.13.3 0482f6400933 9 days ago 146MB

k8s.gcr.io/kube-proxy v1.13.3 98db19758ad4 9 days ago 80.3MB

k8s.gcr.io/kube-scheduler v1.13.3 3a6f709e97a0 9 days ago 79.6MB

k8s.gcr.io/coredns 1.2.6 f59dcacceff4 3 months ago 40MB

k8s.gcr.io/etcd 3.2.24 3cab8e1b9802 4 months ago 220MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 13 months ago 742kB
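To confirm that every image kubeadm needs is already present locally, compare the output of kubeadm config images list with the output of docker images. This is a minimal sketch that assumes both lists have been written to files. The names required.txt and present.txt, and the function name, are hypothetical.

```shell
#!/usr/bin/env bash
# Print images listed in the first file that are missing from the second.
# Both files hold one "repository:tag" per line; comm needs both inputs sorted
# with the same collation, hence LC_ALL=C everywhere.
missing_images() {
  local required=$1 present=$2
  LC_ALL=C comm -23 <(LC_ALL=C sort -u "$required") <(LC_ALL=C sort -u "$present")
}

# Usage on the master (requires kubeadm and docker):
#   kubeadm config images list --kubernetes-version v1.13.3 > required.txt
#   docker images --format '{{.Repository}}:{{.Tag}}' > present.txt
#   missing_images required.txt present.txt
```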

The docker ps command shows the running Docker containers:

[root@c0 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a3807d518520 98db19758ad4 "/usr/local/bin/kube…" 3 minutes ago Up 3 minutes k8s_kube-proxy_kube-proxy-gg5xd_kube-system_81300c8f-2e0b-11e9-acd0-001c42508c6a_0

49af1ad74d31 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-proxy-gg5xd_kube-system_81300c8f-2e0b-11e9-acd0-001c42508c6a_0

8b4a7e0e0e9e 3a6f709e97a0 "kube-scheduler --ad…" 3 minutes ago Up 3 minutes k8s_kube-scheduler_kube-scheduler-c0_kube-system_b734fcc86501dde5579ce80285c0bf0c_0

099c14b0ea76 3cab8e1b9802 "etcd --advertise-cl…" 3 minutes ago Up 3 minutes k8s_etcd_etcd-c0_kube-system_bb7da2b04eb464afdde00da66617b2fc_0

425196638f87 fe242e556a99 "kube-apiserver --au…" 3 minutes ago Up 3 minutes k8s_kube-apiserver_kube-apiserver-c0_kube-system_a6ec524e7fe1ac12a93850d3faff1d19_0

86e53f9cd1b0 0482f6400933 "kube-controller-man…" 3 minutes ago Up 3 minutes k8s_kube-controller-manager_kube-controller-manager-c0_kube-system_844e381a44322ac23d6f33196cc0751c_0

d0c5544ec9c3 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-scheduler-c0_kube-system_b734fcc86501dde5579ce80285c0bf0c_0

31161f991a5f k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-controller-manager-c0_kube-system_844e381a44322ac23d6f33196cc0751c_0

11246ac9c5c4 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_kube-apiserver-c0_kube-system_a6ec524e7fe1ac12a93850d3faff1d19_0

320b61f9d9c4 k8s.gcr.io/pause:3.1 "/pause" 3 minutes ago Up 3 minutes k8s_POD_etcd-c0_kube-system_bb7da2b04eb464afdde00da66617b2fc_0

Check the component and node status:

[root@c0 ~]# kubectl get cs,node

NAME STATUS MESSAGE ERROR

componentstatus/controller-manager Healthy ok

componentstatus/scheduler Healthy ok

componentstatus/etcd-0 Healthy {"health": "true"}

NAME STATUS ROLES AGE VERSION

node/c0 NotReady master 75m v1.13.3

At this point the node status is NotReady. It changes to Ready once Flannel has been deployed.
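Instead of re-running kubectl get nodes by hand until the status changes, you can use a small helper that checks the STATUS column. This is a sketch with a hypothetical function name. It assumes the default kubectl get nodes --no-headers layout (NAME STATUS ROLES AGE VERSION), where STATUS is the second column. A cordoned node (Ready,SchedulingDisabled) counts as not ready here.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin and succeed only if
# there is at least one node and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
  awk 'BEGIN { n = 0; bad = 0 }
       { n++; if ($2 != "Ready") bad = 1 }
       END { exit ((n == 0 || bad) ? 1 : 0) }'
}

# Usage: poll every 5 seconds until all nodes are Ready (requires kubectl).
#   until kubectl get nodes --no-headers | all_nodes_ready; do sleep 5; done
```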

4.2.1. Deploy Flannel

Create /home/work/_src/kube-flannel.yml with the following content and save it:

[root@c0 ~]# cat /home/work/_src/kube-flannel.yml

---

apiVersion: extensions/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: ppc64le
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

Apply the Flannel manifest:

[root@c0 ~]# kubectl apply -f /home/work/_src/kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created
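The Network value in net-conf.json (10.244.0.0/16) is expected to match the --pod-network-cidr passed to kubeadm init. With --kube-subnet-mgr, flanneld uses each node's podCIDR. The controller manager carves these podCIDRs out of that range as /24 blocks by default (its --node-cidr-mask-size flag). The worker pod IPs later in this article (10.244.1.x on c1, 10.244.2.x on c2, 10.244.3.x on c3) fit that pattern. The arithmetic is shown below as a small helper with an illustrative name. The address count is the raw block size, before subtracting reserved addresses such as the bridge gateway.

```shell
#!/usr/bin/env bash
# How many per-node subnets of size /node_prefix fit into a /cluster_prefix
# pod network, and how many addresses each node subnet spans.
subnet_capacity() {
  local cluster_prefix=$1 node_prefix=$2
  if (( node_prefix < cluster_prefix || node_prefix > 32 )); then
    echo "node prefix must be between the cluster prefix and 32" >&2
    return 1
  fi
  echo "nodes=$(( 1 << (node_prefix - cluster_prefix) )) addresses_per_node=$(( 1 << (32 - node_prefix) ))"
}

# Example: 10.244.0.0/16 split into /24 blocks
#   subnet_capacity 16 24   -> nodes=256 addresses_per_node=256
```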

Check the status again:

[root@c0 ~]# kubectl get cs,node

NAME STATUS MESSAGE ERROR

componentstatus/controller-manager Healthy ok

componentstatus/scheduler Healthy ok

componentstatus/etcd-0 Healthy {"health": "true"}

NAME STATUS ROLES AGE VERSION

node/c0 Ready master 80m v1.13.3

c0 now reports STATUS Ready.

4.3. Join the worker nodes to the cluster

To add a new node to the cluster, run the following on each machine:

kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>
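When you assemble the join command by hand, it helps to check the pieces first. The sketch below has a hypothetical function name. It validates the token against the bootstrap-token format documented for kubeadm ([a-z0-9]{6}.[a-z0-9]{16}) and the hash against sha256: followed by 64 hex characters, then prints the command. On the master, kubeadm token create --print-join-command produces a ready-made command instead.

```shell
#!/usr/bin/env bash
# Assemble a kubeadm join command after checking the token and hash formats.
# Token format per kubeadm: [a-z0-9]{6}.[a-z0-9]{16}; hash: sha256:<64 hex chars>.
build_join_cmd() {
  local master=$1 port=$2 token=$3 hash=$4
  if [[ ! $token =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]; then
    echo "invalid token: $token" >&2
    return 1
  fi
  if [[ ! $hash =~ ^sha256:[a-f0-9]{64}$ ]]; then
    echo "invalid hash: $hash" >&2
    return 1
  fi
  echo "kubeadm join ${master}:${port} --token ${token} --discovery-token-ca-cert-hash ${hash}"
}

# Usage with the values from this article:
#   build_join_cmd 10.0.0.100 6443 m4f2wo.ich4mi5dj85z24pz \
#     sha256:dd7a5193aeabee6fe723984f557d121a074aa4e40cdd3d701741d585a3a2f43c
```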

If you have forgotten the token, list it on the master:

[root@c0 ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

m4f2wo.ich4mi5dj85z24pz 22h 2019-02-12T22:44:01+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

By default a token expires after 24 hours. After it has expired, generate a new token with:

kubeadm token create
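To tell whether a listed token has already expired, compare its EXPIRES column with the current time. This sketch uses a hypothetical function name and assumes GNU date (the CentOS default) to parse the ISO 8601 timestamp. For a token without an expiry, the column does not hold a date, and the helper returns 2.

```shell
#!/usr/bin/env bash
# Succeed (exit 0) if a token's EXPIRES timestamp, as printed by
# `kubeadm token list`, is in the past. Optional 2nd arg: "now" in epoch seconds.
# Assumes GNU date for parsing timestamps like 2019-02-12T22:44:01+08:00.
token_expired() {
  local expires_epoch now
  expires_epoch=$(date -d "$1" +%s 2>/dev/null) || return 2
  now=${2:-$(date +%s)}
  (( now >= expires_epoch ))
}

# Usage on the master, checking the first token in the list:
#   exp=$(kubeadm token list | awk 'NR == 2 {print $3}')
#   token_expired "$exp" && kubeadm token create --print-join-command
```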

To find the value for --discovery-token-ca-cert-hash, run the following command on the master:

[root@c0 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

dd7a5193aeabee6fe723984f557d121a074aa4e40cdd3d701741d585a3a2f43c
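The same pipeline can be wrapped in a function (hypothetical name) that prints the value in the sha256:<hex> form expected by kubeadm join. This sketch enables set -o pipefail. Without it, a missing or unreadable certificate would silently produce the SHA-256 of empty input instead of failing. Like the command above, it assumes the CA key is RSA, which is what kubeadm 1.13 generates.

```shell
#!/usr/bin/env bash
# Fail the whole pipeline if any stage fails, not just the last one.
set -o pipefail

# Print "sha256:<hex>" of the DER-encoded public key of a CA certificate,
# the value kubeadm join expects for --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  local hex
  hex=$(openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //') || return 1
  echo "sha256:${hex}"
}

# Usage on the master:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```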

Now join the node to the master:

[root@c1 ~]# kubeadm join 10.0.0.100:6443 --token m4f2wo.ich4mi5dj85z24pz --discovery-token-ca-cert-hash sha256:dd7a5193aeabee6fe723984f557d121a074aa4e40cdd3d701741d585a3a2f43c

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "10.0.0.100:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://10.0.0.100:6443"

[discovery] Requesting info from "https://10.0.0.100:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.0.0.100:6443"

[discovery] Successfully established connection with API Server "10.0.0.100:6443"

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "c1" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

After the other nodes have joined as well, check them from the master:

[root@c0 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

c0 Ready master 3h51m v1.13.3

c1 Ready <none> 3h48m v1.13.3

c2 Ready <none> 2m20s v1.13.3

c3 Ready <none> 83s v1.13.3

Check the running Docker containers on a worker node:

[root@c1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

15536bfa9396 ff281650a721 "/opt/bin/flanneld -…" About a minute ago Up About a minute k8s_kube-flannel_kube-flannel-ds-amd64-ql2p2_kube-system_93dcecd5-2e1c-11e9-bd82-001c42508c6a_0

668e864b541f 98db19758ad4 "/usr/local/bin/kube…" About a minute ago Up About a minute k8s_kube-proxy_kube-proxy-fz9xp_kube-system_93dd3109-2e1c-11e9-bd82-001c42508c6a_0

34465abc64c7 k8s.gcr.io/pause:3.1 "/pause" About a minute ago Up About a minute k8s_POD_kube-flannel-ds-amd64-ql2p2_kube-system_93dcecd5-2e1c-11e9-bd82-001c42508c6a_0

38e8facd94ad k8s.gcr.io/pause:3.1 "/pause" About a minute ago Up About a minute k8s_POD_kube-proxy-fz9xp_kube-system_93dd3109-2e1c-11e9-bd82-001c42508c6a_0

Finally, check the pod status on the master. kube-flannel and kube-proxy are running on every node:

[root@c0 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-86c58d9df4-cl8bd 1/1 Running 0 3h51m 10.172.0.6 c0 <none> <none>

coredns-86c58d9df4-ctgpv 1/1 Running 0 3h51m 10.172.0.7 c0 <none> <none>

etcd-c0 1/1 Running 0 3h50m 10.0.0.100 c0 <none> <none>

kube-apiserver-c0 1/1 Running 0 3h50m 10.0.0.100 c0 <none> <none>

kube-controller-manager-c0 1/1 Running 0 3h50m 10.0.0.100 c0 <none> <none>

kube-flannel-ds-amd64-6m2sx 1/1 Running 0 107s 10.0.0.103 c3 <none> <none>

kube-flannel-ds-amd64-78vsg 1/1 Running 0 2m44s 10.0.0.102 c2 <none> <none>

kube-flannel-ds-amd64-8df6l 1/1 Running 0 3h49m 10.0.0.100 c0 <none> <none>

kube-flannel-ds-amd64-ql2p2 1/1 Running 0 3h49m 10.0.0.101 c1 <none> <none>

kube-proxy-6wmf7 1/1 Running 0 2m44s 10.0.0.102 c2 <none> <none>

kube-proxy-7ggm8 1/1 Running 0 107s 10.0.0.103 c3 <none> <none>

kube-proxy-b247j 1/1 Running 0 3h51m 10.0.0.100 c0 <none> <none>

kube-proxy-fz9xp 1/1 Running 0 3h49m 10.0.0.101 c1 <none> <none>

kube-scheduler-c0 1/1 Running 0 3h50m 10.0.0.100 c0 <none> <none>
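To confirm that a DaemonSet such as kube-flannel really has one pod per node, you can group the wide pod listing by its NODE column. This sketch uses a hypothetical function name. It assumes the 1.13 -o wide layout shown above, where NODE is the seventh column. Later kubectl versions can add text to the RESTARTS column, which would shift the fields.

```shell
#!/usr/bin/env bash
# Count pods whose name starts with a prefix, grouped by node, from
# `kubectl get pods -o wide --no-headers` output on stdin.
# Assumes the column layout NAME READY STATUS RESTARTS AGE IP NODE ...
pods_per_node() {
  awk -v prefix="$1" 'index($1, prefix) == 1 { count[$7]++ }
                      END { for (node in count) print node, count[node] }' \
    | LC_ALL=C sort
}

# Usage on the master:
#   kubectl get pods -n kube-system -o wide --no-headers | pods_per_node kube-flannel
```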

4.4. Remove a node from the cluster

Run the following commands to remove a node:

kubectl drain <node name> --delete-local-data --force --ignore-daemonsets

kubectl delete node <node name>

After the node has been deleted, reset the kubeadm installation state on that node:

kubeadm reset
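The two kubectl steps can be wrapped so that they are easy to review before running. In this sketch (hypothetical function name), setting DRY_RUN=1 only prints the commands. The flags are the ones used above. Later kubectl releases renamed --delete-local-data to --delete-emptydir-data. kubeadm reset still has to be run on the removed node itself.

```shell
#!/usr/bin/env bash
# Drain and delete a node from the master. With DRY_RUN=1 the kubectl
# commands are only printed, not executed.
remove_node() {
  local node=$1
  local run=()
  [[ -n ${DRY_RUN:-} ]] && run=(echo)
  "${run[@]}" kubectl drain "$node" --delete-local-data --force --ignore-daemonsets &&
    "${run[@]}" kubectl delete node "$node"
}

# Usage on the master:
#   DRY_RUN=1 remove_node c3   # review the commands
#   remove_node c3             # run them
```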

5. Deploy Whoami on K8s

whoami is a simple HTTP Docker service that prints the ID of the container serving the request.

5.1. Deploy Whoami from the master

[root@c0 _src]# kubectl create deployment whoami --image=idoall/whoami

deployment.apps/whoami created

5.2. Check the Whoami deployment status

List all deployments:

[root@c0 ~]# kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

whoami 1/1 1 1 2m56s

Show the details of the Whoami deployment:

[root@c0 ~]# kubectl describe deployment whoami

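Show the details and events of the Whoami pod. kubectl describe also matches pods whose names start with whoami. Container logs are shown by kubectl logs <pod-name>: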

[root@c0 ~]# kubectl describe po whoami

Name: whoami-7c846b698d-8qdrp

Namespace: default

Priority: 0

PriorityClassName: <none>

Node: c1/10.0.0.101

Start Time: Tue, 12 Feb 2019 00:18:06 +0800

Labels: app=whoami

pod-template-hash=7c846b698d

Annotations: <none>

Status: Running

IP: 10.244.1.2

Controlled By: ReplicaSet/whoami-7c846b698d

Containers:

whoami:

Container ID: docker://89836e848175edb747bf590acc51c1cf8825640a7c212b6dfd22a77ab805829a

Image: idoall/whoami

Image ID: docker-pullable://idoall/whoami@sha256:6e79f7182eab032c812f6dafdaf55095409acd64d98a825c8e4b95e173e198f2

Port: <none>

Host Port: <none>

State: Running

Started: Tue, 12 Feb 2019 00:18:18 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-xxx7l (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-xxx7l:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-xxx7l

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m59s default-scheduler Successfully assigned default/whoami-7c846b698d-8qdrp to c1

Normal Pulling <invalid> kubelet, c1 pulling image "idoall/whoami"

Normal Pulled <invalid> kubelet, c1 Successfully pulled image "idoall/whoami"

Normal Created <invalid> kubelet, c1 Created container

Normal Started <invalid> kubelet, c1 Started container

5.3. Expose a port for Whoami

Create a service that makes Whoami reachable from outside the cluster:

[root@c0 ~]# kubectl create service nodeport whoami --tcp=80:80

service/whoami created

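The command above creates an externally accessible service for the Whoami deployment. Because this is a NodePort service, Kubernetes assigns it a port on the hosts from the NodePort range, which is 30000-32767 by default.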

Check the current services and pods:

[root@c0 ~]# kubectl get svc,pods -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 18m <none>

service/whoami NodePort 10.102.196.38 <none> 80:32707/TCP 36s app=whoami

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/whoami-7c846b698d-8qdrp 1/1 Running 0 5m25s 10.244.1.2 c1 <none> <none>

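The output shows that Whoami is exposed on node port 32707, so it can be reached at http://10.0.0.101:32707. The port is opened on every node, so any other node's IP works as well.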
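The node port can be read from the PORT(S) column: in 80:32707/TCP, 80 is the service port and 32707 is the node port. The sketch below parses that value and checks it against the default NodePort range, 30000-32767, which kube-apiserver's --service-node-port-range flag can change. The function names are hypothetical. kubectl get svc whoami -o jsonpath='{.spec.ports[0].nodePort}' returns the port directly.

```shell
#!/usr/bin/env bash
# Extract the node port from a PORT(S) value such as "80:32707/TCP".
nodeport_of() {
  local spec=${1%%/*}              # "80:32707/TCP" -> "80:32707"
  [[ $spec == *:* ]] || return 1   # ClusterIP services have no node port
  echo "${spec#*:}"                # "80:32707" -> "32707"
}

# Check a port against the default NodePort range 30000-32767.
in_default_nodeport_range() {
  (( $1 >= 30000 && $1 <= 32767 ))
}

# Usage (requires kubectl and curl):
#   port=$(kubectl get svc whoami -o jsonpath='{.spec.ports[0].nodePort}')
#   in_default_nodeport_range "$port" && curl "http://10.0.0.101:${port}"
```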

5.4. Test whether the Whoami service works

[root@c0 ~]# curl c1:32707

[mshk.top]I'm whoami-7c846b698d-8qdrp

5.5. Scale the deployment

kubectl scale --replicas=5 deployment/whoami

deployment.extensions/whoami scaled

Check the result. Whoami pods are now running on c1, c2 and c3:

[root@c0 ~]# kubectl get svc,pods -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25m <none>

service/whoami NodePort 10.102.196.38 <none> 80:32707/TCP 7m26s app=whoami

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/whoami-7c846b698d-8qdrp 1/1 Running 0 12m 10.244.1.2 c1 <none> <none>

pod/whoami-7c846b698d-9rzlh 1/1 Running 0 58s 10.244.2.2 c2 <none> <none>

pod/whoami-7c846b698d-b6h9p 1/1 Running 0 58s 10.244.1.3 c1 <none> <none>

pod/whoami-7c846b698d-lphdg 1/1 Running 0 58s 10.244.2.3 c2 <none> <none>

pod/whoami-7c846b698d-t7nsk 1/1 Running 0 58s 10.244.3.2 c3 <none> <none>

Test the scaled deployment. Requests are answered by different replicas:

[root@c0 ~]# curl c0:32707

[mshk.top]I'm whoami-7c846b698d-8qdrp

[root@c0 ~]# curl c0:32707

[mshk.top]I'm whoami-7c846b698d-8qdrp

[root@c0 ~]# curl c0:32707

[mshk.top]I'm whoami-7c846b698d-t7nsk

[root@c0 ~]# curl c0:32707

[mshk.top]I'm whoami-7c846b698d-8qdrp

[root@c0 ~]# curl c0:32707

[mshk.top]I'm whoami-7c846b698d-lphdg

[root@c0 ~]# curl c0:32707

[mshk.top]I'm whoami-7c846b698d-b6h9p
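Six requests are a small sample. To see how requests spread across the five replicas, send more and count the pods that answer. This sketch uses a hypothetical function name and assumes each response contains I'm <pod-name> as above. kube-proxy does not promise an even split, so the counts vary from run to run.

```shell
#!/usr/bin/env bash
# Count how many responses each Whoami pod served, from whoami output lines
# ("... I'm <pod-name>") on stdin. Lines without "I'm " are ignored.
tally_backends() {
  sed -n "s/.*I'm //p" | LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage: send 20 requests through the NodePort and tally the answering pods.
#   for i in $(seq 20); do curl -s c0:32707; echo; done | tally_backends
```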

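In ClusterIP mode, a Service gets a virtual IP that is reachable only inside the cluster, in a different range from the pod IPs. It is used for communication between pods within the cluster.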

5.6. Delete the Whoami deployment

[root@c0 ~]# kubectl delete deployment whoami

deployment.extensions "whoami" deleted

[root@c0 ~]# kubectl get deployments

No resources found.

6. Deploy the Kubernetes Web UI (Dashboard)

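Since version 1.7, Dashboard no longer has full admin privileges granted by default. All privileges are revoked, and only the minimal privileges Dashboard needs to work are granted.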

6.1. Deploy from a configuration file

We use the official v1.10.1 configuration file. Create it and save it as /home/work/_src/kubernetes-dashboard.yaml with the following content:

[root@c0 _src]# cat /home/work/_src/kubernetes-dashboard.yaml

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

Create the Dashboard resources:

[root@c0 _src]# kubectl apply -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

6.2. Change the service type to NodePort

Run the following command to open the service's YAML in an editor. Replace type: ClusterIP with type: NodePort, then save.

[root@c0 _src]# kubectl -n kube-system edit service kubernetes-dashboard

service/kubernetes-dashboard edited

The YAML looks similar to the following:

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
...
  name: kubernetes-dashboard
  namespace: kube-system
  resourceVersion: "343478"
  selfLink: /api/v1/namespaces/kube-system/services/kubernetes-dashboard-head
  uid: 8e48f478-993d-11e7-87e0-901b0e532516
spec:
  clusterIP: 10.100.124.90
  externalTrafficPolicy: Cluster
  ports:
  - port: 443
    protocol: TCP
    targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  sessionAffinity: None
  # type: ClusterIP
  # Changed to NodePort to expose the service outside the cluster
  type: NodePort
status:
  loadBalancer: {}

In NodePort mode, Kubernetes opens the same port on every node, so programs outside the cluster can reach the Service at <NodeIP>:<NodePort>.
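Instead of editing the Service interactively, you can change its type with kubectl patch. The sketch below builds the patch body; the function name is hypothetical. With two arguments it also pins the node port for the given service port. This relies on Service ports being merged by their port field in a strategic merge patch, which is kubectl patch's default patch type.

```shell
#!/usr/bin/env bash
# Print a strategic-merge patch that switches a Service to type NodePort.
# Optional args: <service-port> <node-port> to pin the node port as well.
nodeport_patch() {
  local svc_port=${1:-} node_port=${2:-}
  if [[ -n $svc_port && -n $node_port ]]; then
    printf '{"spec":{"type":"NodePort","ports":[{"port":%d,"nodePort":%d}]}}\n' \
      "$svc_port" "$node_port"
  else
    printf '{"spec":{"type":"NodePort"}}\n'
  fi
}

# Usage on the master (requires kubectl):
#   kubectl -n kube-system patch service kubernetes-dashboard -p "$(nodeport_patch)"
#   kubectl -n kube-system patch service kubernetes-dashboard -p "$(nodeport_patch 443 30779)"
```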

The following command shows that the service is exposed on port 30779 (HTTPS) of the servers. You can now open it in a browser at https://10.0.0.100:30779.

[root@c0 ~]# kubectl -n kube-system get service kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.101.41.130 <none> 443:30779/TCP 44s

Check the Dashboard pod status:

[root@c0 ~]# kubectl get pods --all-namespaces | grep kubernetes-dashboard

kube-system kubernetes-dashboard-57df4db6b-6scvx 1/1 Running 0 4m9s

View the Dashboard logs:

[root@c0 ~]# kubectl logs -f kubernetes-dashboard-57df4db6b-6scvx -n kube-system

2019/02/11 16:10:15 Starting overwatch

2019/02/11 16:10:15 Using in-cluster config to connect to apiserver

2019/02/11 16:10:15 Using service account token for csrf signing

2019/02/11 16:10:15 Successful initial request to the apiserver, version: v1.13.3

2019/02/11 16:10:15 Generating JWE encryption key

2019/02/11 16:10:15 New synchronizer has been registered: kubernetes-dashboard-key-holder-kube-system. Starting

2019/02/11 16:10:15 Starting secret synchronizer for kubernetes-dashboard-key-holder in namespace kube-system

2019/02/11 16:10:15 Storing encryption key in a secret

2019/02/11 16:10:15 Creating in-cluster Heapster client

2019/02/11 16:10:15 Metric client health check failed: the server could not find the requested resource (get services heapster). Retrying in 30 seconds.

2019/02/11 16:10:15 Auto-generating certificates

2019/02/11 16:10:15 Successfully created certificates

2019/02/11 16:10:15 Serving securely on HTTPS port: 8443

.....

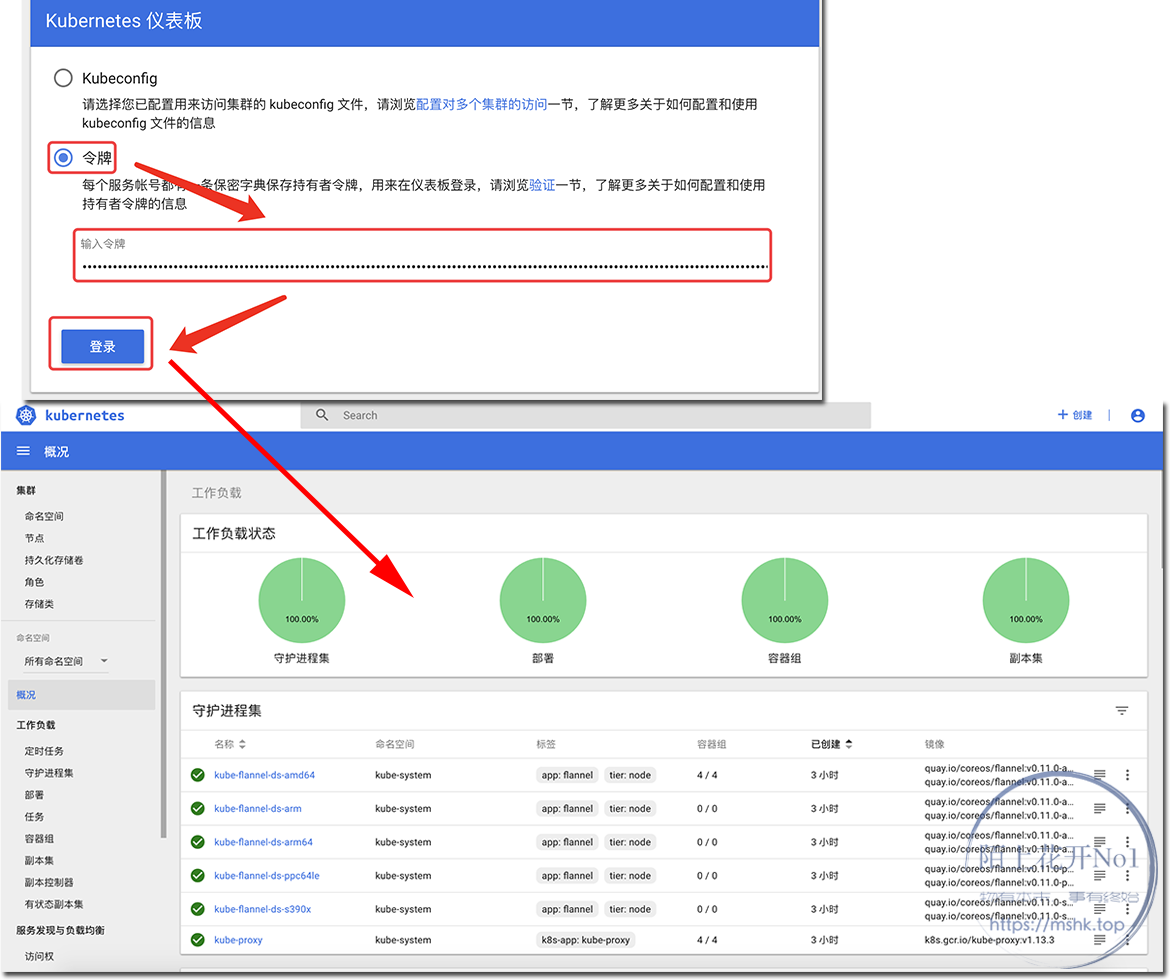

6.3. Create a token for accessing Dashboard

To access Kubernetes through Dashboard with a token, grant the Dashboard service account admin rights. Create the following ClusterRoleBinding, which binds the cluster-admin ClusterRole to the kubernetes-dashboard service account. Save it as /home/work/_src/kubernetes-dashboard-admin.yaml and deploy it with kubectl create -f /home/work/_src/kubernetes-dashboard-admin.yaml.

[root@c0 ~]# cat /home/work/_src/kubernetes-dashboard-admin.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

[root@c0 _src]# kubectl create -f kubernetes-dashboard-admin.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

Once the binding is created, get the token value stored in the ServiceAccount's secret.

[root@c0 _src]# kubectl get secret -o wide --all-namespaces | grep kubernetes-dashboard-token

kube-system kubernetes-dashboard-token-fbl6l kubernetes.io/service-account-token 3 3h20m

[root@c0 _src]# kubectl -n kube-system describe secret kubernetes-dashboard-token-fbl6l

Name: kubernetes-dashboard-token-fbl6l

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 091b4de4-2e05-11e9-8e1f-001c42508c6a

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1mYmw2bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjA5MWI0ZGU0LTJlMDUtMTFlOS04ZTFmLTAwMWM0MjUwOGM2YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.LUjBR3xdsB0foba63228UEZiG2DoYmk5s84fQt1FXRkC4PoEMAkVW0hrrCIGeSlwLGFujY4w9SkYyex4shMFZaZgKKvu_lrx2qHXZSmGGq7sqH7h0K-3ZrCgXSc4_eEIz2VyNE6SBV6VxU0F-sYzv6WR6v2Z8uudszD5GULsHsNK3xcSjaoyf468_wD9Es0lzpZUXWAl87o-L-a4SehU47xNQ2cCWQyinQl5NdDaySCprQ4QUn5xYa71JK7ZTwWD3qiNAQWH4F64f5xI1RaG854J-ycjZ3xJcWsVCeMiZrjATGi9Y0jaZu356uQ-AkVWGWZ2ERm_zOfPElZd0SssFg

The token value above is the password used to log in to the Dashboard.
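A ServiceAccount token is a JSON Web Token: three base64url segments separated by dots, with the middle one carrying the claims. Decoding that segment is a quick way to confirm which ServiceAccount a token belongs to before pasting it into the login page.

Some notes on the sketch below:

- The helper name `jwt_payload` is hypothetical.
- It assumes GNU coreutils `base64 -d`, as shipped with CentOS 7.
- It only decodes the claims and does not verify the signature.

```shell
# Print the claims (middle segment) of a JWT passed as $1, without verifying it.
jwt_payload() {
  # Take the payload segment and map the base64url alphabet back to base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it so base64 -d accepts the input.
  pad=$(( (4 - ${#payload} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    payload="${payload}="
    pad=$((pad - 1))
  done
  printf '%s' "$payload" | base64 -d
}
# Usage:
#   jwt_payload "$(kubectl -n kube-system get secret kubernetes-dashboard-token-fbl6l -o jsonpath={.data.token} | base64 -d)"
```

For the token above, the "sub" claim should read system:serviceaccount:kube-system:kubernetes-dashboard, matching the ServiceAccount bound in the ClusterRoleBinding.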

You can also extract the token directly with jsonpath:

[root@c0 _src]# kubectl -n kube-system get secret kubernetes-dashboard-token-fbl6l -o jsonpath={.data.token}|base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1mYmw2bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjA5MWI0ZGU0LTJlMDUtMTFlOS04ZTFmLTAwMWM0MjUwOGM2YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.LUjBR3xdsB0foba63228UEZiG2DoYmk5s84fQt1FXRkC4PoEMAkVW0hrrCIGeSlwLGFujY4w9SkYyex4shMFZaZgKKvu_lrx2qHXZSmGGq7sqH7h0K-3ZrCgXSc4_eEIz2VyNE6SBV6VxU0F-sYzv6WR6v2Z8uudszD5GULsHsNK3xcSjaoyf468_wD9Es0lzpZUXWAl87o-L-a4SehU47xNQ2cCWQyinQl5NdDaySCprQ4QUn5xYa71JK7ZTwWD3qiNAQWH4F64f5xI1RaG854J-ycjZ3xJcWsVCeMiZrjATGi9Y0jaZu356uQ-AkVWGWZ2ERm_zOfPElZd0SssFg

Alternatively, the following one-liner looks up the name of the kubernetes-dashboard-token secret automatically and prints the decoded token:

[root@c0 _src]# k8tokenvalue=`kubectl get secret -o wide --all-namespaces | grep kubernetes-dashboard-token | awk '{print $2}'`;kubectl -n kube-system get secret $k8tokenvalue -o jsonpath={.data.token}|base64 -d | awk '{print $1}'

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1mYmw2bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjA5MWI0ZGU0LTJlMDUtMTFlOS04ZTFmLTAwMWM0MjUwOGM2YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.LUjBR3xdsB0foba63228UEZiG2DoYmk5s84fQt1FXRkC4PoEMAkVW0hrrCIGeSlwLGFujY4w9SkYyex4shMFZaZgKKvu_lrx2qHXZSmGGq7sqH7h0K-3ZrCgXSc4_eEIz2VyNE6SBV6VxU0F-sYzv6WR6v2Z8uudszD5GULsHsNK3xcSjaoyf468_wD9Es0lzpZUXWAl87o-L-a4SehU47xNQ2cCWQyinQl5NdDaySCprQ4QUn5xYa71JK7ZTwWD3qiNAQWH4F64f5xI1RaG854J-ycjZ3xJcWsVCeMiZrjATGi9Y0jaZu356uQ-AkVWGWZ2ERm_zOfPElZd0SssFg
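The one-liner searches all namespaces but then hard-codes `-n kube-system` when reading the secret. A variant that carries the namespace along from the listing is sketched below. `find_dashboard_token_secret` is a hypothetical helper, and it assumes the NAMESPACE NAME TYPE DATA AGE columns printed by `kubectl get secret --all-namespaces`.

```shell
# Find the Dashboard token secret in `kubectl get secret --all-namespaces`
# output (stdin) and print "<namespace> <secret-name>" for the first match.
find_dashboard_token_secret() {
  awk '$2 ~ /^kubernetes-dashboard-token-/ { print $1, $2; exit }'
}
# Usage:
#   set -- $(kubectl get secret --all-namespaces | find_dashboard_token_secret)
#   kubectl -n "$1" get secret "$2" -o jsonpath='{.data.token}' | base64 -d
```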

6.4. Access the Kubernetes Web UI (Dashboard) with the token

On the login page, select Token, paste the token obtained above and click Sign in. After logging in, the Dashboard main page is displayed.

6.5. Remove the Kubernetes Web UI (Dashboard)

[root@c0 ~]# kubectl delete -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

secret "kubernetes-dashboard-certs" deleted

serviceaccount "kubernetes-dashboard" deleted

role.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" deleted

rolebinding.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" deleted

deployment.apps "kubernetes-dashboard" deleted

service "kubernetes-dashboard" deleted

7. Deploy the Heapster component

Heapster collects and analyzes cluster resource usage and monitors the containers running in the cluster.

7.1. Download the official YAML files

[root@c0 _src]# pwd

/home/work/_src

[root@c0 _src]# wget https://github.com/kubernetes-retired/heapster/archive/v1.5.3.tar.gz

--2019-02-11 23:46:53-- https://github.com/kubernetes-retired/heapster/archive/v1.5.3.tar.gz

Resolving github.com (github.com)... 192.30.253.113, 192.30.253.112

Connecting to github.com (github.com)|192.30.253.113|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://codeload.github.com/kubernetes-retired/heapster/tar.gz/v1.5.3 [following]

--2019-02-11 23:46:55-- https://codeload.github.com/kubernetes-retired/heapster/tar.gz/v1.5.3

Resolving codeload.github.com (codeload.github.com)... 192.30.255.121, 192.30.255.120

Connecting to codeload.github.com (codeload.github.com)|192.30.255.121|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: unspecified [application/x-gzip]

Saving to: ‘v1.5.3.tar.gz’

[ <=> ] 4,898,117 2.52MB/s in 1.9s

2019-02-11 23:47:00 (2.52 MB/s) - ‘v1.5.3.tar.gz’ saved [4898117]

[root@c0 _src]# tar -xvf v1.5.3.tar.gz

Replace the k8s.gcr.io image registry in the manifests with the Alibaba Cloud mirror:

[root@c0 _src]# cd heapster-1.5.3/deploy/kube-config/influxdb/

[root@c0 influxdb]# sed -i "s/k8s.gcr.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/" grafana.yaml

[root@c0 influxdb]# sed -i "s/k8s.gcr.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/" heapster.yaml

[root@c0 influxdb]# sed -i "s/k8s.gcr.io/registry.cn-hangzhou.aliyuncs.com\/google_containers/" influxdb.yaml
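The three sed commands above can be folded into a single loop that also checks that no k8s.gcr.io reference was missed. This sketch makes a few assumptions:

- `mirror_images` is a hypothetical helper.
- It assumes GNU sed, where `-i` takes no backup suffix, as on CentOS 7.
- It escapes the dots so that they match only a literal ".".
- It uses "#" as the delimiter so the "/" in the mirror path needs no escaping.

```shell
# Rewrite k8s.gcr.io image references to the Alibaba Cloud mirror in place.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"
mirror_images() {
  for f in "$@"; do
    sed -i "s#k8s\.gcr\.io#${MIRROR}#g" "$f"
    # Fail loudly if any reference was left behind.
    if grep -q 'k8s\.gcr\.io' "$f"; then
      echo "unreplaced image reference in $f" >&2
      return 1
    fi
  done
}
# Usage (in heapster-1.5.3/deploy/kube-config/influxdb):
#   mirror_images grafana.yaml heapster.yaml influxdb.yaml
```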

7.2. Deploy Heapster

[root@c0 influxdb]# ls

grafana.yaml heapster-rbac.yaml heapster.yaml influxdb.yaml

[root@c0 influxdb]# ls

grafana.yaml heapster.yaml influxdb.yaml

[root@c0 influxdb]# kubectl create -f .

deployment.extensions/monitoring-grafana created

service/monitoring-grafana created

serviceaccount/heapster created

deployment.extensions/heapster created

service/heapster created