This article collects example source code for the promote_types() function of the Python numpy module and briefly explains the numpy module itself. It also covers several related topics: a Numpy printing error in Jupyter (Python 3.8.8), TypeError: 'numpy.ndarray' object is not callable; numpy.random.random, numpy.ndarray.astype and numpy.arange; numpy.ravel(), numpy.flatten() and numpy.squeeze(); and Numpy array creation with numpy.array(), numpy.arange() and np.linspace(), together with basic array attributes.

Contents:

- Python numpy module: promote_types() example source code

- Numpy printing error in Jupyter (Python 3.8.8): TypeError: 'numpy.ndarray' object is not callable

- numpy.random.random & numpy.ndarray.astype & numpy.arange

- numpy.ravel()/numpy.flatten()/numpy.squeeze()

- Numpy: array creation with numpy.array(), numpy.arange(), np.linspace(), and basic array attributes

Python numpy module: promote_types() example source code

The following code examples, extracted from open-source Python projects, illustrate how numpy.promote_types() is used.
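Before the project excerpts, it may help to see the function on its own. The sketch below is not taken from any of the projects; the dtype pairs are chosen only for illustration. np.promote_types(type1, type2) returns the smallest dtype to which both arguments can be safely cast.

```python
import numpy as np

# Two float widths promote to the wider one.
f4_f8 = np.promote_types('f4', 'f8')

# float32 cannot represent every int32 or int64 exactly, so the result is float64.
i4_f4 = np.promote_types('i4', 'f4')
i8_f4 = np.promote_types('i8', 'f4')

# Signed and unsigned 32-bit integers need a 64-bit signed integer.
i4_u4 = np.promote_types('i4', 'u4')

# The byte order of the inputs is not preserved; the result is native-endian.
swapped = np.promote_types('>i8', '>i8')

for label, result in [('f4 + f8', f4_f8), ('i4 + f4', i4_f4), ('i8 + f4', i8_f4),
                      ('i4 + u4', i4_u4), ('>i8 + >i8', swapped)]:
    print(label, '->', result)
```

The native-endian behaviour shown in the last case is what the first test excerpt below checks.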

    def test_promote_types_endian(self):
        # promote_types should always return native-endian types
        assert_equal(np.promote_types('<i8', '<i8'), np.dtype('i8'))
        assert_equal(np.promote_types('>i8', '>i8'), np.dtype('i8'))

        assert_equal(np.promote_types('>i8', '>U16'), np.dtype('U21'))
        assert_equal(np.promote_types('<i8', '<U16'), np.dtype('U21'))
        assert_equal(np.promote_types('>U16', '>i8'), np.dtype('U21'))
        assert_equal(np.promote_types('<U16', '<i8'), np.dtype('U21'))

        assert_equal(np.promote_types('<S5', '<U8'), np.dtype('U8'))
        assert_equal(np.promote_types('>S5', '>U8'), np.dtype('U8'))
        assert_equal(np.promote_types('<U8', '<S5'), np.dtype('U8'))
        assert_equal(np.promote_types('>U8', '>S5'), np.dtype('U8'))
        assert_equal(np.promote_types('<U5', '<U8'), np.dtype('U8'))

        assert_equal(np.promote_types('<M8', '<M8'), np.dtype('M8'))
        assert_equal(np.promote_types('>M8', '>M8'), np.dtype('M8'))
        assert_equal(np.promote_types('<m8', '<m8'), np.dtype('m8'))
        assert_equal(np.promote_types('>m8', '>m8'), np.dtype('m8'))


    def testGram(test):
        instance, reference = test[TEST.INSTANCE], test[TEST.REFERENCE]

        # usually expect the normalized matrix to be promoted in type complexity
        # due to division by column-norm during the process. However there exist
        # matrices that treat the problem differently. Exclude the expected
        # promotion for them.
        query = ({} if isinstance(instance, (Diag, Eye, Zero))
                 else {TEST.TYPE_PROMOTION: np.float32})

        # account for "extra computation stage" in gram
        query[TEST.TOL_POWER] = test.get(TEST.TOL_POWER, 1.) * 2

        query[TEST.RESULT_OUTPUT] = instance.gram.array
        query[TEST.RESULT_REF] = reference.astype(
            np.promote_types(np.float32, reference.dtype)).T.conj().dot(reference)

        # ignore actual type of generated gram:
        query[TEST.CHECK_DATATYPE] = False

        return compareResults(test, query)


    def test_jacobian_set_item(self, dtypes, shapes):
        shape, constructor, expected_shape = shapes
        dtype, value = dtypes

        prob = Problem(model=Group())
        comp = ExplicitSetItemComp(dtype, value, shape, constructor)
        prob.model.add_subsystem('C1', comp)
        prob.setup(check=False)

        prob.set_solver_print(level=0)
        prob.run_model()
        prob.model.run_apply_nonlinear()
        prob.model.run_linearize()

        expected = constructor(value)
        with prob.model._subsystems_allprocs[0].jacobian_context() as J:
            jac_out = J['out', 'in'] * -1

        self.assertEqual(len(jac_out.shape), 2)
        expected_dtype = np.promote_types(dtype, float)
        self.assertEqual(jac_out.dtype, expected_dtype)
        assert_rel_error(self, jac_out, np.atleast_2d(expected).reshape(expected_shape), 1e-15)


    def test_promote_types_strings(self):
        # number or bool promoted with an unsized byte string ('S')
        assert_equal(np.promote_types('bool', 'S'), np.dtype('S5'))
        assert_equal(np.promote_types('b', 'S'), np.dtype('S4'))
        assert_equal(np.promote_types('u1', 'S'), np.dtype('S3'))
        assert_equal(np.promote_types('u2', 'S'), np.dtype('S5'))
        assert_equal(np.promote_types('u4', 'S'), np.dtype('S10'))
        assert_equal(np.promote_types('u8', 'S'), np.dtype('S20'))
        assert_equal(np.promote_types('i1', 'S'), np.dtype('S4'))
        assert_equal(np.promote_types('i2', 'S'), np.dtype('S6'))
        assert_equal(np.promote_types('i4', 'S'), np.dtype('S11'))
        assert_equal(np.promote_types('i8', 'S'), np.dtype('S21'))
        # number or bool promoted with an unsized unicode string ('U')
        assert_equal(np.promote_types('bool', 'U'), np.dtype('U5'))
        assert_equal(np.promote_types('b', 'U'), np.dtype('U4'))
        assert_equal(np.promote_types('u1', 'U'), np.dtype('U3'))
        assert_equal(np.promote_types('u2', 'U'), np.dtype('U5'))
        assert_equal(np.promote_types('u4', 'U'), np.dtype('U10'))
        assert_equal(np.promote_types('u8', 'U'), np.dtype('U20'))
        assert_equal(np.promote_types('i1', 'U'), np.dtype('U4'))
        assert_equal(np.promote_types('i2', 'U'), np.dtype('U6'))
        assert_equal(np.promote_types('i4', 'U'), np.dtype('U11'))
        assert_equal(np.promote_types('i8', 'U'), np.dtype('U21'))
        # sized strings: the result is at least as long as the string argument
        assert_equal(np.promote_types('bool', 'S1'), np.dtype('S5'))
        assert_equal(np.promote_types('bool', 'S30'), np.dtype('S30'))
        assert_equal(np.promote_types('b', 'S1'), np.dtype('S4'))
        assert_equal(np.promote_types('b', 'S30'), np.dtype('S30'))
        assert_equal(np.promote_types('u1', 'S1'), np.dtype('S3'))
        assert_equal(np.promote_types('u1', 'S30'), np.dtype('S30'))
        assert_equal(np.promote_types('u2', 'S1'), np.dtype('S5'))
        assert_equal(np.promote_types('u2', 'S30'), np.dtype('S30'))
        assert_equal(np.promote_types('u4', 'S1'), np.dtype('S10'))
        assert_equal(np.promote_types('u4', 'S30'), np.dtype('S30'))
        assert_equal(np.promote_types('u8', 'S1'), np.dtype('S20'))
        assert_equal(np.promote_types('u8', 'S30'), np.dtype('S30'))

    def test_dtype_promotion(self):
        # datetime <op> datetime computes the metadata gcd
        # timedelta <op> timedelta computes the metadata gcd
        for mM in ['m', 'M']:
            assert_equal(
                np.promote_types(np.dtype(mM+'8[2Y]'), np.dtype(mM+'8[2Y]')),
                np.dtype(mM+'8[2Y]'))
            assert_equal(
                np.promote_types(np.dtype(mM+'8[12Y]'), np.dtype(mM+'8[15Y]')),
                np.dtype(mM+'8[3Y]'))
            assert_equal(
                np.promote_types(np.dtype(mM+'8[62M]'), np.dtype(mM+'8[24M]')),
                np.dtype(mM+'8[2M]'))
            assert_equal(
                np.promote_types(np.dtype(mM+'8[1W]'), np.dtype(mM+'8[2D]')),
                np.dtype(mM+'8[1D]'))
            assert_equal(
                np.promote_types(np.dtype(mM+'8[W]'), np.dtype(mM+'8[13s]')),
                np.dtype(mM+'8[s]'))
            assert_equal(
                np.promote_types(np.dtype(mM+'8[13W]'), np.dtype(mM+'8[49s]')),
                np.dtype(mM+'8[7s]'))
        # timedelta <op> timedelta raises when there is no reasonable gcd
        assert_raises(TypeError, np.promote_types,
                      np.dtype('m8[Y]'), np.dtype('m8[D]'))
        assert_raises(TypeError, np.promote_types,
                      np.dtype('m8[M]'), np.dtype('m8[W]'))
        # timedelta <op> timedelta may overflow with big unit ranges
        assert_raises(OverflowError, np.promote_types,
                      np.dtype('m8[W]'), np.dtype('m8[fs]'))
        assert_raises(OverflowError, np.promote_types,
                      np.dtype('m8[s]'), np.dtype('m8[as]'))
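The unit arithmetic checked by this test can be tried interactively. The following is a small sketch that reuses three of the cases from the test above with timedelta dtypes; the variable names are illustrative only.

```python
import numpy as np

# Same base unit: the multipliers are reduced to their greatest common divisor.
years = np.promote_types('m8[12Y]', 'm8[15Y]')

# Weeks and days are linearly related, so the result uses the finer unit.
week_day = np.promote_types('m8[1W]', 'm8[2D]')

# Years and days have no fixed ratio, so the promotion is rejected.
try:
    np.promote_types('m8[Y]', 'm8[D]')
    incompatible = False
except TypeError:
    incompatible = True

print(years, week_day, incompatible)
```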

    # Note: np.obj2sctype was removed in NumPy 2.0; this excerpt targets older releases.
    def print_coercion_table(ntypes, inputfirstvalue, inputsecondvalue, firstarray, use_promote_types=False):
        print('+', end=' ')
        for char in ntypes:
            print(char, end=' ')
        print()
        for row in ntypes:
            if row == 'O':
                rowtype = GenericObject
            else:
                rowtype = np.obj2sctype(row)

            print(row, end=' ')
            for col in ntypes:
                if col == 'O':
                    coltype = GenericObject
                else:
                    coltype = np.obj2sctype(col)
                try:
                    if firstarray:
                        rowvalue = np.array([rowtype(inputfirstvalue)], dtype=rowtype)
                    else:
                        rowvalue = rowtype(inputfirstvalue)
                    colvalue = coltype(inputsecondvalue)
                    if use_promote_types:
                        char = np.promote_types(rowvalue.dtype, colvalue.dtype).char
                    else:
                        value = np.add(rowvalue, colvalue)
                        if isinstance(value, np.ndarray):
                            char = value.dtype.char
                        else:
                            char = np.dtype(type(value)).char
                except ValueError:
                    char = '!'
                except OverflowError:
                    char = '@'
                except TypeError:
                    char = '#'
                print(char, end=' ')
            print()

    def dtype(self):
        """Return dtype of image data in file."""
        # subblock data can be of different pixel type
        dtype = self.filtered_subblock_directory[0].dtype[-2:]
        for directory_entry in self.filtered_subblock_directory:
            dtype = numpy.promote_types(dtype, directory_entry.dtype[-2:])
        return dtype


    def testLargestSV(test):
        query = {TEST.TYPE_EXPECTED: np.float64}
        instance = test[TEST.INSTANCE]

        # account for "extra computation stage" (gram) in largestSV
        query[TEST.TOL_POWER] = test.get(TEST.TOL_POWER, 1.) * 2
        query[TEST.TOL_MINEPS] = _getTypeEps(safeTypeExpansion(instance.dtype))

        # determine reference result
        largestSV = np.linalg.svd(test[TEST.REFERENCE], compute_uv=False)[0]
        query[TEST.RESULT_REF] = np.array(
            largestSV, dtype=np.promote_types(largestSV.dtype, np.float64))

        # largestSV may not converge fast enough for a bad random starting point
        # so retry some times before throwing up
        for tries in range(9):
            maxSteps = 100. * 10. ** (tries / 2.)
            query[TEST.RESULT_OUTPUT] = np.array(
                instance.getLargestSV(maxSteps=maxSteps, alwaysReturn=True))
            result = compareResults(test, query)
            if result[TEST.RESULT]:
                break
        return result


    # Note: on Python 3, `reduce` must be imported from functools.
    def combine_data_frame_files(output_filename, input_filenames):
        in_files = [h5py.File(f, 'r') for f in input_filenames]
        column_names = [tuple(sorted(f.attrs.get("column_names"))) for f in in_files]

        uniq = set(column_names)

        if len(uniq) > 1:
            raise Exception("you're attempting to combine incompatible data frames")

        if len(uniq) == 0:
            r = "No input files? output: %s, inputs: %s" % (output_filename, str(input_filenames))
            raise Exception(r)

        column_names = uniq.pop()

        if os.path.exists(output_filename):
            os.remove(output_filename)

        out = h5py.File(output_filename)
        out.attrs.create("column_names", column_names)

        # Write successive columns
        for c in column_names:
            datasets = [f[c] for f in in_files if len(f[c]) > 0]
            num_w_levels = np.sum([has_levels(ds) for ds in datasets if len(ds) > 0])
            fract_w_levels = float(num_w_levels) / (len(datasets) + 1)

            if fract_w_levels > 0.25:
                combine_level_column(out, datasets, c)
                continue

            # filter out empty rows from the type promotion, unless they're all empty
            types = [get_col_type(ds) for ds in datasets if len(ds) > 0]
            if len(types) == 0:
                # Fall back to getting column types from empty data frames
                types = [get_col_type(f[c]) for f in in_files]

            common_type = reduce(np.promote_types, types)

            # numpy doesn't understand vlen strings -- so always promote to vlen strings if anything is using them
            if vlen_string in types:
                common_type = vlen_string

            out_ds = out.create_dataset(c, shape=(0,), maxshape=(None,), dtype=common_type,
                                        compression=COMPRESSION, shuffle=True, chunks=(CHUNK_SIZE,))

            item_count = 0
            for ds in datasets:
                new_items = ds.shape[0]
                out_ds.resize((item_count + new_items,))
                data = ds[:]

                if has_levels(ds):
                    levels = get_levels(ds)
                    data = levels[data]

                out_ds[item_count:(item_count + new_items)] = data
                item_count += new_items

        for in_f in in_files:
            in_f.close()

        out.close()
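The key step in the excerpt above is folding np.promote_types over a list of column dtypes to find one dtype that can hold every column. A minimal sketch of that step in isolation follows; the dtype list is made up for illustration and no HDF5 file is involved.

```python
from functools import reduce

import numpy as np

# Hypothetical per-file dtypes of the same column.
column_types = [np.dtype('int16'), np.dtype('int32'), np.dtype('float32')]

# Fold promote_types pairwise: int16+int32 -> int32, then int32+float32 -> float64.
common_type = reduce(np.promote_types, column_types)

# Every chunk can now be cast to the common dtype before being appended.
chunks = [np.arange(3, dtype=t) for t in column_types]
combined = np.concatenate([chunk.astype(common_type) for chunk in chunks])

print(common_type, combined.dtype, combined.shape)
```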

    def ISTA(
        fmatA,
        arrB,
        numLambda=0.1,
        numMaxSteps=100
    ):
        '''
        Wrapper around the ISTA algorithm to allow processing of arrays of signals
            fmatA       - input system matrix
            arrB        - input data vector (measurements)
            numLambda   - balancing parameter in optimization problem
                          between data fidelity and sparsity
            numMaxSteps - maximum number of steps to run
            numL        - step size during the conjugate gradient step
        '''

        if len(arrB.shape) > 2:
            raise ValueError("Only n x m arrays are supported for ISTA")

        # calculate the largest singular value to get the right step size
        numL = 1.0 / (fmatA.largestSV ** 2)

        arrX = np.zeros(
            (fmatA.numM, arrB.shape[1]),
            dtype=np.promote_types(np.float32, arrB.dtype)
        )

        # start iterating
        for numStep in range(numMaxSteps):
            # do the gradient step and threshold
            arrStep = arrX - numL * fmatA.backward(fmatA.forward(arrX) - arrB)
            arrX = _softThreshold(arrStep, numL * numLambda * 0.5)

        # return the unthresholded values for all non-zero support elements
        return np.where(arrX != 0, arrStep, arrX)

    def FISTA(
        fmatA,
        arrB,
        numLambda=0.1,
        numMaxSteps=100
    ):
        '''
        Wrapper around the FISTA algorithm to allow processing of arrays of signals
            fmatA       - input system matrix
            arrB        - input data vector (measurements)
            numLambda   - balancing parameter in optimization problem
                          between data fidelity and sparsity
            numMaxSteps - maximum number of steps to run
            numL        - step size during the conjugate gradient step
        '''

        if len(arrB.shape) > 2:
            raise ValueError("Only n x m arrays are supported for FISTA")

        # calculate the largest singular value to get the right step size
        numL = 1.0 / (fmatA.largestSV ** 2)

        t = 1
        arrX = np.zeros(
            (fmatA.numM, arrB.shape[1]),
            dtype=np.promote_types(np.float32, arrB.dtype)
        )
        # initial arrY
        arrY = np.copy(arrX)

        # start iterating
        for numStep in range(numMaxSteps):
            arrXold = np.copy(arrX)

            # do the gradient step and threshold
            arrStep = arrY - numL * fmatA.backward(fmatA.forward(arrY) - arrB)
            arrX = _softThreshold(arrStep, numL * numLambda * 0.5)

            # update t
            tOld = t
            t = (1 + np.sqrt(1 + 4 * t ** 2)) / 2

            # update arrY
            arrY = arrX + ((tOld - 1) / t) * (arrX - arrXold)

        # return the unthresholded values for all non-zero support elements
        return np.where(arrX != 0, arrStep, arrX)
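Both solver excerpts allocate their result with dtype=np.promote_types(np.float32, arrB.dtype), which yields a floating-point (or complex) working dtype that is at least float32 and still wide enough for the input. The sketch below shows what that expression evaluates to for a few input dtypes; working_dtype is a hypothetical helper name, not part of either project.

```python
import numpy as np


def working_dtype(input_dtype):
    """Return a dtype that is at least float32 and can also hold input_dtype."""
    return np.promote_types(np.float32, input_dtype)


# Small integers fit into float32; int32 needs float64; complex input stays complex.
results = {name: working_dtype(name)
           for name in ('int8', 'int32', 'float64', 'complex64')}

for name, dtype in results.items():
    print(name, '->', dtype)
```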

    def _set_abs(self, abs_key, subjac):
        """
        Set sub-Jacobian.

        Parameters
        ----------
        abs_key : (str, str)
            Absolute name pair of sub-Jacobian.
        subjac : int or float or ndarray or sparse matrix
            sub-Jacobian as a scalar, vector, array, or AIJ list or tuple.
        """
        if not issparse(subjac):
            # np.promote_types will choose the smallest dtype that can contain both arguments
            subjac = np.atleast_1d(subjac)
            safe_dtype = np.promote_types(subjac.dtype, float)
            subjac = subjac.astype(safe_dtype, copy=False)

            # Bail here so that we allow top level jacobians to be of reduced size when indices are
            # specified on driver vars.
            if self._override_checks:
                self._subjacs[abs_key] = subjac
                return

            if abs_key in self._subjacs_info:
                subjac_info = self._subjacs_info[abs_key][0]
                rows = subjac_info['rows']
            else:
                rows = None

            if rows is None:
                # Dense subjac
                shape = self._abs_key2shape(abs_key)
                subjac = np.atleast_2d(subjac)
                if subjac.shape == (1, 1):
                    subjac = subjac[0, 0] * np.ones(shape, dtype=safe_dtype)
                else:
                    subjac = subjac.reshape(shape)

                if abs_key in self._subjacs and self._subjacs[abs_key].shape == shape:
                    np.copyto(self._subjacs[abs_key], subjac)
                else:
                    self._subjacs[abs_key] = subjac.copy()
            else:
                # Sparse subjac
                if subjac.shape == (1,):
                    subjac = subjac[0] * np.ones(rows.shape, dtype=safe_dtype)

                if subjac.shape != rows.shape:
                    raise ValueError("Sub-jacobian for key %s has "
                                     "the wrong shape (%s), expected (%s)." %
                                     (abs_key, subjac.shape, rows.shape))

                if abs_key in self._subjacs and subjac.shape == self._subjacs[abs_key][0].shape:
                    np.copyto(self._subjacs[abs_key][0], subjac)
                else:
                    self._subjacs[abs_key] = [subjac.copy(), rows, subjac_info['cols']]
        else:
            self._subjacs[abs_key] = subjac
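The first three statements of the dense branch above form a reusable pattern: wrap the input as an array, promote its dtype with float, and cast without copying when no cast is needed. A minimal sketch of that pattern on its own follows; to_safe_float is a hypothetical name used only here.

```python
import numpy as np


def to_safe_float(value):
    """Return value as an array whose dtype is at least float64 (complex stays complex)."""
    arr = np.atleast_1d(value)
    safe_dtype = np.promote_types(arr.dtype, float)
    # copy=False returns the input array itself when it already has safe_dtype.
    return arr.astype(safe_dtype, copy=False)


from_int = to_safe_float(3)
from_complex = to_safe_float(np.array([1 + 2j]))
already_float = np.ones(3)
unchanged = to_safe_float(already_float)

print(from_int.dtype, from_complex.dtype, np.shares_memory(already_float, unchanged))
```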

Numpy printing error in Jupyter (Python 3.8.8): TypeError: 'numpy.ndarray' object is not callable

Good evening. I ran into a numpy problem in Jupyter when trying to print the values below, and I get an error. Note that the Python version is 3.8.8. I first tested the code in Spyder, where it ran correctly and gave the expected result.

Using Spyder:

    import numpy as np
    for i in range(5):
        n = np.random.rand()
        print(n)

Results

    0.6604903457995978
    0.8236300859753154
    0.16067650689842816
    0.6967868357083673
    0.4231597934445466

Now with Jupyter:

    import numpy as np
    for i in range(5):
        n = np.random.rand()
        print(n)

    ---------------------------------------------------------------------------
    TypeError                                 Traceback (most recent call last)
    <ipython-input-78-0c6a801b3ea9> in <module>
          2 for i in range(5):
          3     n = np.random.rand()
    ----> 4     print(n)

    TypeError: 'numpy.ndarray' object is not callable

Thank you for any help with solving this in Jupyter.

Thank you very much for your time.

Regards, John

Solution

No solution was recorded with the original question.
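One plausible cause, offered here as an assumption because the post does not show the earlier notebook cells: the traceback points at the call print(n), and the message says that the object being called is an ndarray. That happens when the name print has been rebound to an array earlier in the same kernel session, which would also explain why a fresh Spyder run works. The sketch below reproduces the message and restores the built-in.

```python
import builtins

import numpy as np

# Simulates an earlier cell that accidentally assigned an array to the name `print`.
print = np.random.rand(3)

try:
    print(np.random.rand())  # calls the array, not the built-in function
    error_message = None
except TypeError as exc:
    error_message = str(exc)

# Restore the built-in. In a notebook, running `del print` in a cell or
# restarting the kernel has the same effect.
print = builtins.print
print(error_message)
```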

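numpy.random.random & numpy.ndarray.astype & numpy.arange

Today I came across the following code:

    xb = np.random.random((nb, d)).astype('float32')  # create a 2-D random matrix (nb rows, d columns)
    xb[:, 0] += np.arange(nb) / 1000.  # add a row-dependent value to each element of the first column

Understanding these two lines requires understanding three functions.

1. Generating random numbers

numpy.random.random(size=None)

When size is None, a float is returned.

When size is not None, a numpy.ndarray is returned. For example, numpy.random.random((1, 2)) returns a numpy array with 1 row and 2 columns. The values lie in the half-open interval [0.0, 1.0).

2. Converting the type of every element of a numpy array

numpy.ndarray.astype(dtype)

Returns a numpy.ndarray. For example, numpy.array([1, 2, 2.5]).astype(int) returns the numpy array [1, 2, 2].

3. Generating an arithmetic sequence

numpy.arange([start, ]stop, [step, ]dtype=None)

Its behaviour is similar to Python's built-in range() and to numpy.linspace.

Returns a numpy array. For example, numpy.arange(3) returns the numpy array [0, 1, 2].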
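Putting the three functions together, the two lines quoted at the start of this section can be run as follows. The values of nb and d are assumptions chosen for a small demonstration; the original post does not give them.

```python
import numpy as np

nb, d = 5, 4  # assumed sizes, for illustration only

# Step 1: random float64 values in [0, 1); step 2: cast every element to float32.
xb = np.random.random((nb, d)).astype('float32')
first_column_before = xb[:, 0].copy()

# Step 3: arange(nb) / 1000. is [0.000, 0.001, ...], added row by row to column 0.
xb[:, 0] += np.arange(nb) / 1000.
offsets = xb[:, 0] - first_column_before

# The small helper examples from the text above.
truncated = np.array([1, 2, 2.5]).astype(int)
sequence = np.arange(3)

print(xb.shape, xb.dtype)
print(truncated, sequence)
```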

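numpy.ravel()/numpy.flatten()/numpy.squeeze()

numpy.ravel(a, order='C')

Return a flattened array

numpy.ndarray.flatten(order='C')

Return a copy of the array collapsed into one dimension

numpy.squeeze(a, axis=None)

Remove single-dimensional entries from the shape of an array.

Similarities: all three reduce the number of dimensions of an array. ravel() and flatten() always produce a one-dimensional array; squeeze() only removes axes of length 1.

Differences:

ravel() returns a view whenever possible (a copy is made only when the memory layout requires it), so changing an element of the result can change the original array;

flatten() returns a copy, so changing an element of the result does not affect the original array;

squeeze() returns a view; it only removes the dimensions of size 1 from the shape;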

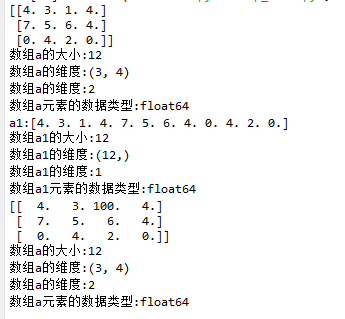

ravel() example:

    import numpy as np

    def log_type(name, arr):
        print("size of array {}: {}".format(name, arr.size))
        print("shape of array {}: {}".format(name, arr.shape))
        print("number of dimensions of array {}: {}".format(name, arr.ndim))
        print("element dtype of array {}: {}".format(name, arr.dtype))
        # print("array: {}".format(arr.data))

    a = np.floor(10 * np.random.random((3, 4)))
    print(a)
    log_type('a', a)

    a1 = a.ravel()
    print("a1:{}".format(a1))
    log_type('a1', a1)
    a1[2] = 100  # a1 is a view here, so a changes as well

    print(a)
    log_type('a', a)

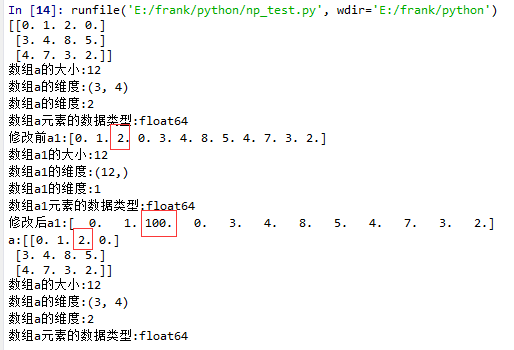

flatten() example:

    import numpy as np

    def log_type(name, arr):
        print("size of array {}: {}".format(name, arr.size))
        print("shape of array {}: {}".format(name, arr.shape))
        print("number of dimensions of array {}: {}".format(name, arr.ndim))
        print("element dtype of array {}: {}".format(name, arr.dtype))
        # print("array: {}".format(arr.data))

    a = np.floor(10 * np.random.random((3, 4)))
    print(a)
    log_type('a', a)

    a1 = a.flatten()
    print("a1 before modification:{}".format(a1))
    log_type('a1', a1)
    a1[2] = 100  # a1 is a copy, so a is left unchanged
    print("a1 after modification:{}".format(a1))

    print("a:{}".format(a))
    log_type('a', a)

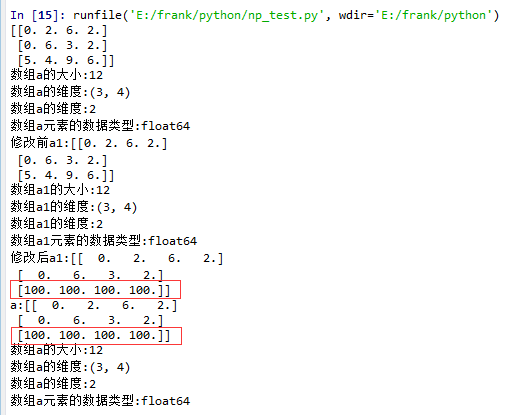

squeeze() example:

1. Without single-dimensional entries

    import numpy as np

    def log_type(name, arr):
        print("size of array {}: {}".format(name, arr.size))
        print("shape of array {}: {}".format(name, arr.shape))
        print("number of dimensions of array {}: {}".format(name, arr.ndim))
        print("element dtype of array {}: {}".format(name, arr.dtype))
        # print("array: {}".format(arr.data))

    a = np.floor(10 * np.random.random((3, 4)))
    print(a)
    log_type('a', a)

    a1 = a.squeeze()
    print("a1 before modification:{}".format(a1))
    log_type('a1', a1)
    a1[2] = 100  # assigns 100 to the whole third row
    print("a1 after modification:{}".format(a1))

    print("a:{}".format(a))
    log_type('a', a)

The result shows that when there are no single-dimensional entries, the array returned by squeeze() is a view, not a copy.

2. With single-dimensional entries

    import numpy as np

    def log_type(name, arr):
        print("size of array {}: {}".format(name, arr.size))
        print("shape of array {}: {}".format(name, arr.shape))
        print("number of dimensions of array {}: {}".format(name, arr.ndim))
        print("element dtype of array {}: {}".format(name, arr.dtype))
        # print("array: {}".format(arr.data))

    a = np.floor(10 * np.random.random((1, 3, 4)))
    print(a)
    log_type('a', a)

    a1 = a.squeeze()  # shape (1, 3, 4) becomes (3, 4)
    print("a1 before modification:{}".format(a1))
    log_type('a1', a1)
    a1[2] = 100  # assigns 100 to the whole third row
    print("a1 after modification:{}".format(a1))

    print("a:{}".format(a))
    log_type('a', a)

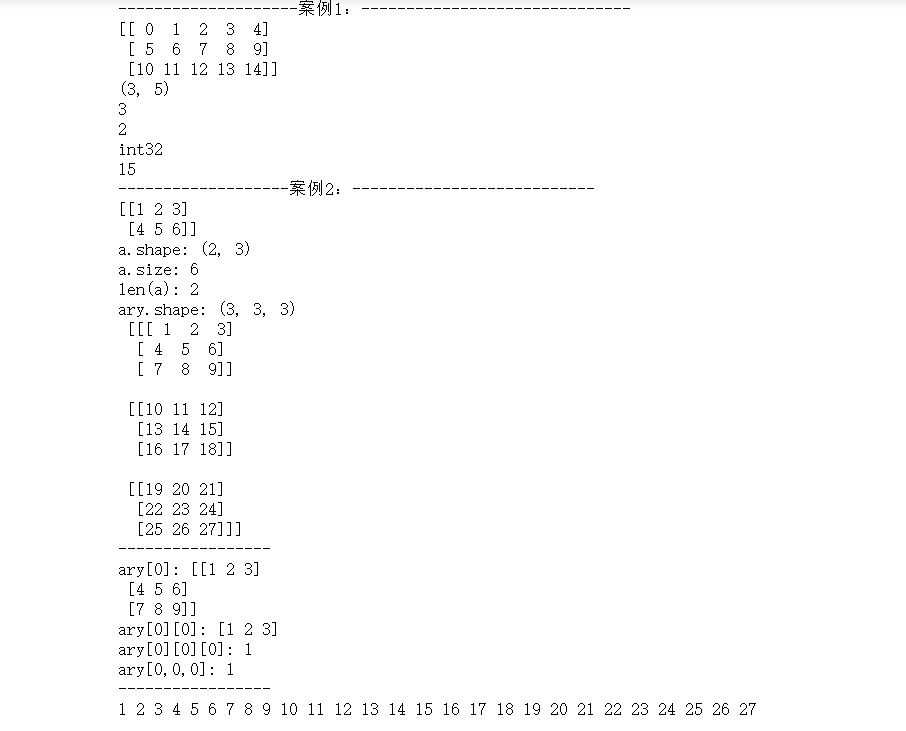
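The view-versus-copy behaviour described in this section can also be checked directly with np.shares_memory instead of modifying an element and comparing printouts. The following is a small sketch with a fixed array in place of the random one used above.

```python
import numpy as np

a = np.arange(12, dtype=float).reshape(3, 4)

raveled = a.ravel()                         # contiguous input: a view
flattened = a.flatten()                     # always a copy
squeezed = a.reshape(1, 3, 4).squeeze()     # a view with the length-1 axis removed
raveled_transpose = a.T.ravel()             # layout forces ravel() to copy here

ravel_is_view = np.shares_memory(a, raveled)
flatten_is_view = np.shares_memory(a, flattened)
squeeze_is_view = np.shares_memory(a, squeezed)
ravel_of_transpose_is_view = np.shares_memory(a, raveled_transpose)

print(ravel_is_view, flatten_is_view, squeeze_is_view, ravel_of_transpose_is_view)
```

The last case is why ravel() is best described as returning a view where possible rather than always.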

Numpy: array creation with numpy.array(), numpy.arange(), np.linspace(), and basic array attributes

I. Creating Numpy arrays

part 1: np.array(), np.arange(), np.zeros(), np.ones()

    import numpy as np

    '''
    The ndarray array in numpy
    '''
    ary = np.array([1, 2, 3, 4, 5])
    print(ary)
    ary = ary * 10
    print(ary)

    '''
    Creating ndarray objects
    '''
    # Create a 2-D array
    # np.array([[],[],...])
    a = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])
    print(a)

    # np.arange(start, stop, step (default 1))
    b = np.arange(1, 10, 1)
    print(b)

    print("-------------np.zeros(shape, dtype='element type')-----")
    # Create a 1-D array:
    c = np.zeros(10)
    print(c, '; c.dtype:', c.dtype)
    # Create a 2-D array:
    print(np.zeros((3, 4)))

    print("----------np.ones(shape, dtype='element type')--------")
    # Create a 1-D array:
    d = np.ones(10, dtype='int64')
    print(d, '; d.dtype:', d.dtype)

    # Create a 3-D array:
    print(np.ones((2, 3, 4), dtype=np.int32))
    # Print the number of dimensions
    print(np.ones((2, 3, 4), dtype=np.int32).ndim)  # returns 3 (dimensions)

part 2: np.linspace(start, stop, total number of elements)

    import numpy as np

    a = np.arange(10, 30, 5)
    b = np.arange(0, 2, 0.3)
    c = np.arange(12).reshape(4, 3)
    d = np.random.random((2, 3))  # random numbers in [0, 1), arranged in 2 rows and 3 columns
    print(a)
    print(b)
    print(c)
    print(d)

    print("-----------------")
    from numpy import pi
    print(np.linspace(0, 2*pi, 100))

    print("-------------np.linspace(start, stop, total number of elements)------------------")
    print(np.sin(np.linspace(0, 2*pi, 100)))
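The practical difference between the two functions used above is what is held fixed: np.arange fixes the step and excludes the stop value, while np.linspace fixes the number of elements and includes both endpoints by default. A short sketch using the same arguments as the listing:

```python
import numpy as np

# arange: the step is given, the stop value 2 is excluded, the count follows from the step.
stepped = np.arange(0, 2, 0.3)

# linspace: the count is given, both 0 and 2*pi are included.
spaced = np.linspace(0, 2 * np.pi, 100)

print(len(stepped), stepped[-1])
print(len(spaced), spaced[0], spaced[-1])
```

For non-integer steps, linspace is usually the safer choice when the number of points matters, because the length of an arange result depends on floating-point rounding of the step.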

II. Attributes of the Numpy ndarray object:

Array shape: array.shape

Number of dimensions: array.ndim

Element type: array.dtype

Number of elements: array.size

Indexing (subscript): array[0]

    '''
    Basic array attributes
    '''
    import numpy as np

    print("--------------------Example 1:------------------------------")
    a = np.arange(15).reshape(3, 5)
    print(a)
    print(a.shape)     # print the array shape
    print(len(a))      # print the number of rows
    print(a.ndim)      # print the number of dimensions
    print(a.dtype)     # print the data type of the elements of a
    # print(a.dtype.name)
    print(a.size)      # print the total number of elements

    print("-------------------Example 2:---------------------------")
    a = np.array([[1, 2, 3], [4, 5, 6]])
    print(a)

    # Check the basic attributes of the array
    print('a.shape:', a.shape)
    print('a.size:', a.size)
    print('len(a):', len(a))
    # a.shape = (6, )  # assigning the shape like this turns a into a 1-D array of 6 elements
    # print(a, 'a.shape:', a.shape)

    # Indexing array elements
    ary = np.arange(1, 28)
    ary.shape = (3, 3, 3)   # turn it into a 3-D array
    print("ary.shape:", ary.shape, "\n", ary)

    print("-----------------")
    print('ary[0]:', ary[0])
    print('ary[0][0]:', ary[0][0])
    print('ary[0][0][0]:', ary[0][0][0])
    print('ary[0,0,0]:', ary[0, 0, 0])

    print("-----------------")

    # Traverse the 3-D array: visit every element
    for i in range(ary.shape[0]):
        for j in range(ary.shape[1]):
            for k in range(ary.shape[2]):
                print(ary[i, j, k], end=' ')
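The three nested loops above visit the elements in row-major order. As a possible alternative, sketched here rather than taken from the original post, the same traversal can be written without hard-coding the number of dimensions by using ary.flat or np.ndindex.

```python
import numpy as np

ary = np.arange(1, 28).reshape(3, 3, 3)

# ary.flat iterates over all elements in row-major order.
flat_values = [int(v) for v in ary.flat]

# np.ndindex yields every index tuple (i, j, k) for the given shape.
indexed_values = [int(ary[idx]) for idx in np.ndindex(ary.shape)]

# Chained indexing and tuple indexing select the same element.
same_element = ary[0][0][0] == ary[0, 0, 0]

print(flat_values)
print(same_element)
```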
