This article looks at chronos's DeleteBgWorker in detail, and also collects related material: example source code for android.widget.Chronometer.OnChronometerTickListener, Apache Spark: "failed to launch org.apache.spark.deploy.worker.Worker" or Master, attachment delete deletion commit work issue, and the BackgroundWorker RunWorkerCompleted event.

Contents:

- A look at chronos's DeleteBgWorker

- Example source code for android.widget.Chronometer.OnChronometerTickListener

- Apache Spark: "failed to launch org.apache.spark.deploy.worker.Worker" or Master

- attachment delete deletion commit work issue

- BackgroundWorker RunWorkerCompleted event

A look at chronos's DeleteBgWorker

Preface

This article examines DeleteBgWorker in chronos.

DeleteBgWorker

DDMQ/carrera-chronos/src/main/java/com/xiaojukeji/chronos/workers/DeleteBgWorker.java

public class DeleteBgWorker {

private static final Logger LOGGER = LoggerFactory.getLogger(DeleteBgWorker.class);

private static final DeleteConfig DELETE_CONFIG = ConfigManager.getConfig().getDeleteConfig();

private static final int SAVE_HOURS_OF_DATA = DELETE_CONFIG.getSaveHours();

private static final long INITIAL_DELAY_MINUTES = 1; // 1 minute

private static final long PERIOD_MINUTES = 10; // 10 minutes

private static volatile DeleteBgWorker instance = null;

private static final SimpleDateFormat formatter = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

/**

* 2017/10/13 00:00:00

*/

private static final long MIN_TIMESTAMP = 1507824000;

private static final ScheduledExecutorService SCHEDULE = new ScheduledThreadPoolExecutor(1,

new BasicThreadFactory.Builder().namingPattern("delete-bg-worker-schedule-%d").daemon(true).build());

private DeleteBgWorker() {

}

public void start() {

SCHEDULE.scheduleAtFixedRate(() -> {

byte[] beginKey = String.valueOf(MIN_TIMESTAMP).getBytes(Charsets.UTF_8);

final long seekTimestampInSecond = MetaService.getSeekTimestamp();

byte[] endKey = String.valueOf(seekTimestampInSecond - SAVE_HOURS_OF_DATA * 60 * 60).getBytes(Charsets.UTF_8);

deleteRange(beginKey, endKey);

}, INITIAL_DELAY_MINUTES, PERIOD_MINUTES, TimeUnit.MINUTES);

LOGGER.info("DeleteBgWorker has started, initialDelayInMinutes:{}", INITIAL_DELAY_MINUTES);

}

private void deleteRange(final byte[] beginKey, final byte[] endKey) {

LOGGER.info("deleteRange start, beginKey:{}, endKey:{}", new String(beginKey), new String(endKey));

final long start = System.currentTimeMillis();

RDB.deleteFilesInRange(CFManager.CFH_DEFAULT, beginKey, endKey);

LOGGER.info("deleteRange end, beginKey:{}({}), endKey:{}({}), cost:{}ms",

new String(beginKey), formatter.format(Long.parseLong(new String(beginKey)) * 1000),

new String(endKey), formatter.format(Long.parseLong(new String(endKey)) * 1000),

System.currentTimeMillis() - start);

}

public void stop() {

SCHEDULE.shutdownNow();

while (!SCHEDULE.isShutdown()) {

LOGGER.info("DeleteBgWorker is shutting down!");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

LOGGER.info("DeleteBgWorker was forced to shutdown, err:{}", e.getMessage(), e);

}

}

LOGGER.info("DeleteBgWorker was shutdown!");

}

public static DeleteBgWorker getInstance() {

if (instance == null) {

synchronized (DeleteBgWorker.class) {

if (instance == null) {

instance = new DeleteBgWorker();

}

}

}

return instance;

}

}

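- DeleteBgWorker exposes a static getInstance method that returns the singleton, creating it on first use (double-checked locking on a volatile field), plus start and stop methods. start registers a task on SCHEDULE that first runs after INITIAL_DELAY_MINUTES and then every PERIOD_MINUTES. Each run reads seekTimestamp from MetaService, computes endKey as seekTimestamp minus SAVE_HOURS_OF_DATA hours (in seconds), and calls deleteRange, which mainly invokes RDB.deleteFilesInRange(CFManager.CFH_DEFAULT, beginKey, endKey). stop shuts SCHEDULE down.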
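The key computation described above can be sketched in isolation. This is a minimal illustration, not the project's code: the class name RetentionKeys and the method endKey are hypothetical, and only the arithmetic (seekTimestamp minus the retention window, encoded as a decimal string in UTF-8) follows the quoted source. The keys are decimal second timestamps, so byte-wise ordering matches numeric ordering as long as both keys have the same number of digits, which holds for 10-digit epoch seconds.

```java
import java.nio.charset.StandardCharsets;

public class RetentionKeys {
    // Lower bound from the quoted source: 1507824000 is 2017/10/13 00:00:00 in UTC+8.
    static final long MIN_TIMESTAMP = 1507824000L;

    /** Hypothetical helper: upper bound of the range to delete, as UTF-8 bytes. */
    static byte[] endKey(long seekTimestampInSecond, int saveHours) {
        // Use long arithmetic so a large retention value cannot overflow int.
        long cutoff = seekTimestampInSecond - saveHours * 3600L;
        return String.valueOf(cutoff).getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] begin = String.valueOf(MIN_TIMESTAMP).getBytes(StandardCharsets.UTF_8);
        byte[] end = endKey(1600000000L, 72); // keep the last 72 hours
        System.out.println(new String(begin, StandardCharsets.UTF_8)
                + " .. " + new String(end, StandardCharsets.UTF_8));
    }
}
```

With a seek timestamp of 1600000000 and 72 hours of retention, the cutoff is 1600000000 - 259200 = 1599740800.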

deleteFilesInRange

DDMQ/carrera-chronos/src/main/java/com/xiaojukeji/chronos/db/RDB.java

public class RDB {

private static final org.slf4j.Logger LOGGER = LoggerFactory.getLogger(RDB.class);

static RocksDB DB;

//......

public static boolean deleteFilesInRange(final ColumnFamilyHandle cfh, final byte[] beginKey,

final byte[] endKey) {

try {

DB.deleteRange(cfh, beginKey, endKey);

LOGGER.debug("succ delete range, columnFamilyHandle:{}, beginKey:{}, endKey:{}",

cfh.toString(), new String(beginKey), new String(endKey));

} catch (RocksDBException e) {

LOGGER.error("error while delete range, columnFamilyHandle:{}, beginKey:{}, endKey:{}, err:{}",

cfh.toString(), new String(beginKey), new String(endKey), e.getMessage(), e);

return false;

}

return true;

}

//......

}

- Despite its name, deleteFilesInRange mainly calls DB.deleteRange(cfh, beginKey, endKey); it returns false if a RocksDBException is thrown and true otherwise.
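To make the range semantics concrete without pulling in RocksDB, here is a small stand-in that uses a sorted in-memory map. It is only an analogy: RangeDeleteSketch and its deleteRange method are hypothetical names, and the half-open interval [beginKey, endKey) is assumed to match how RocksDB's range delete treats its bounds.

```java
import java.util.NavigableMap;
import java.util.TreeMap;

public class RangeDeleteSketch {
    /** Removes every key in [beginKey, endKey) and returns how many were removed. */
    static int deleteRange(TreeMap<String, String> store, String beginKey, String endKey) {
        if (beginKey.compareTo(endKey) >= 0) {
            return 0; // empty or inverted range: nothing to delete
        }
        // subMap returns a live view, so clearing it removes the entries from the store.
        NavigableMap<String, String> view = store.subMap(beginKey, true, endKey, false);
        int removed = view.size();
        view.clear();
        return removed;
    }

    public static void main(String[] args) {
        TreeMap<String, String> store = new TreeMap<>();
        store.put("1507824000", "oldest");
        store.put("1599740799", "just before cutoff");
        store.put("1599740800", "at cutoff, kept");
        store.put("1600000000", "recent");
        System.out.println(deleteRange(store, "1507824000", "1599740800")); // prints 2
        System.out.println(store.keySet()); // prints [1599740800, 1600000000]
    }
}
```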

MetaService

DDMQ/carrera-chronos/src/main/java/com/xiaojukeji/chronos/services/MetaService.java

public class MetaService {

private static final Logger LOGGER = LoggerFactory.getLogger(MetaService.class);

private static volatile long seekTimestamp = -1;

private static volatile long zkSeekTimestamp = -1;

private static volatile Map<String, Long> zkQidOffsets = new ConcurrentHashMap<>();

private static final DbConfig dbConfig = ConfigManager.getConfig().getDbConfig();

private static final ScheduledExecutorService SCHEDULER = new ScheduledThreadPoolExecutor(1,

new BasicThreadFactory.Builder().namingPattern("offset-seektimestamp-schedule-%d").daemon(true).build());

public static void load() {

final long start = System.currentTimeMillis();

if (seekTimestamp == -1) {

seekTimestamp = loadSeekTimestampFromFile();

}

final long cost = System.currentTimeMillis() - start;

LOGGER.info("succ load seekTimestamp, seekTimestamp:{}, cost:{}ms", seekTimestamp, cost);

SCHEDULER.scheduleWithFixedDelay(() -> {

// If this node is the master, pull and report the zk offset and seekOffset

if (MasterElection.isMaster()) {

MqConsumeStatService.getInstance().uploadOffsetsToZk();

uploadSeekTimestampToZk();

}

}, 5, 1, TimeUnit.SECONDS);

}

private static long loadSeekTimestampFromFile() {

String seekTimestampStr = FileIOUtils.readFile2String(dbConfig.getSeekTimestampPath());

if (StringUtils.isBlank(seekTimestampStr)) {

final long initSeekTimestamp = TsUtils.genTS();

boolean result = FileIOUtils.writeFileFromString(dbConfig.getSeekTimestampPath(), String.valueOf(initSeekTimestamp));

if (result) {

LOGGER.info("init seekTimestamp and succ save, current seekTimestamp:{}", initSeekTimestamp);

} else {

LOGGER.error("init seekTimestamp and fail to save, current seekTimestamp:{}", initSeekTimestamp);

}

return initSeekTimestamp;

}

LOGGER.info("succ load seekTimestamp from file, seekTimestamp:{}", Long.parseLong(seekTimestampStr));

return Long.parseLong(seekTimestampStr);

}

public static long getSeekTimestamp() {

return seekTimestamp;

}

/**

* The lock here must not be removed

* when checking whether a message has timed out

*/

public static void nextSeekTimestamp() {

Batcher.lock.lock();

try {

seekTimestamp++;

boolean result = FileIOUtils.writeFileFromString(dbConfig.getSeekTimestampPath(), String.valueOf(seekTimestamp));

if (result) {

LOGGER.info("incr seekTimestamp and succ save, next seekTimestamp:{}", seekTimestamp);

} else {

LOGGER.error("incr seekTimestamp and fail to save, next seekTimestamp:{}", seekTimestamp);

}

} finally {

Batcher.lock.unlock();

}

}

public static void uploadSeekTimestampToZk() {

String seekTimestampStr = String.valueOf(MetaService.getSeekTimestamp());

ZkUtils.createOrUpdateValue(Constants.SEEK_TIMESTAMP_ZK_PATH, seekTimestampStr);

LOGGER.debug("upload seekTimestamp to zk, seekTimestamp:{}", seekTimestampStr);

}

public static Map<String, Long> getZkQidOffsets() {

return zkQidOffsets;

}

public static void setZkQidOffsets(Map<String, Long> zkQidOffsets) {

MetaService.zkQidOffsets = zkQidOffsets;

}

public static long getZkSeekTimestamp() {

return zkSeekTimestamp;

}

public static void setZkSeekTimestamp(long zkSeekTimestamp) {

MetaService.zkSeekTimestamp = zkSeekTimestamp;

}

}

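- MetaService provides load, getSeekTimestamp, nextSeekTimestamp, uploadSeekTimestampToZk and other methods. load calls loadSeekTimestampFromFile when seekTimestamp is -1, then registers a scheduled task (5-second initial delay, 1-second delay between runs) that checks whether this node is the master and, if so, calls MqConsumeStatService.getInstance().uploadOffsetsToZk() and uploadSeekTimestampToZk. nextSeekTimestamp increments the in-memory seekTimestamp while holding Batcher.lock, then writes the value to a file with FileIOUtils.writeFileFromString.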
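The increment-then-persist pattern of nextSeekTimestamp can be sketched with the standard library alone. SeekTimestampStore, next and load are hypothetical names, and java.nio.file.Files stands in for the project's FileIOUtils; as in the quoted source, the in-memory value is advanced first and the file is written afterwards, both under one lock.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.concurrent.locks.ReentrantLock;

public class SeekTimestampStore {
    private final ReentrantLock lock = new ReentrantLock();
    private final Path file;
    private volatile long seekTimestamp;

    SeekTimestampStore(Path file, long initial) {
        this.file = file;
        this.seekTimestamp = initial;
    }

    /** Advances the timestamp by one second and persists it; returns the new value. */
    long next() throws IOException {
        lock.lock();
        try {
            seekTimestamp++;
            Files.write(file, String.valueOf(seekTimestamp).getBytes(StandardCharsets.UTF_8));
            return seekTimestamp;
        } finally {
            lock.unlock();
        }
    }

    /** Reads a persisted timestamp, or returns the fallback if the file is missing or blank. */
    static long load(Path file, long fallback) throws IOException {
        if (!Files.exists(file)) {
            return fallback;
        }
        String text = new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
        return text.isEmpty() ? fallback : Long.parseLong(text);
    }
}
```

One consequence of this ordering, which the sketch shares with the source, is that a failed write leaves the in-memory value ahead of the file; the source only logs that case.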

Summary

DeleteBgWorker exposes a static getInstance method that returns the singleton, plus start and stop methods. start registers a task on SCHEDULE that runs every PERIOD_MINUTES; each run reads seekTimestamp from MetaService to compute endKey and calls deleteRange, which mainly invokes RDB.deleteFilesInRange(CFManager.CFH_DEFAULT, beginKey, endKey). stop shuts SCHEDULE down.
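The start/stop lifecycle summarized here can be sketched with a plain ScheduledExecutorService. PeriodicWorkerSketch is a hypothetical class, not the project's worker. Two details from the java.util.concurrent documentation shape it: a task scheduled with scheduleAtFixedRate is not run again once one execution throws, so the body catches runtime exceptions; and isShutdown() already returns true right after shutdownNow(), so waiting for running tasks to finish is done with awaitTermination (or isTerminated) instead.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class PeriodicWorkerSketch {
    private final AtomicInteger runs = new AtomicInteger();
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor(r -> {
                Thread t = new Thread(r, "periodic-worker-sketch");
                t.setDaemon(true); // do not keep the JVM alive for this worker
                return t;
            });

    void start(long initialDelayMs, long periodMs) {
        scheduler.scheduleAtFixedRate(() -> {
            try {
                runs.incrementAndGet(); // placeholder for the real periodic work
            } catch (RuntimeException e) {
                // Swallow and report: an escaping exception would cancel future runs.
                System.err.println("periodic task failed: " + e);
            }
        }, initialDelayMs, periodMs, TimeUnit.MILLISECONDS);
    }

    /** Requests shutdown and waits up to timeoutMs; returns true if the executor terminated. */
    boolean stop(long timeoutMs) throws InterruptedException {
        scheduler.shutdownNow();
        return scheduler.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS);
    }

    int runs() {
        return runs.get();
    }
}
```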

doc

- carrera-chronos

Example source code for android.widget.Chronometer.OnChronometerTickListener

@Override

public View onCreateView(LayoutInflater inflater,ViewGroup container,Bundle savedInstanceState) {

SharedPreferences sharedPref = getActivity().getSharedPreferences(getString(R.string.preference_file_key),Context.MODE_PRIVATE);

mTime = sharedPref.getInt(getString(R.string.value_timer),mTime);

View rootView = inflater.inflate(R.layout.fragment_layout_measurement,container,false);

mStopWatch = (CustomStopwatch) rootView.findViewById(R.id.stopwatch);

mStopWatch.setTime(mTime);

mStopWatch.setOnChronometerTickListener(new OnChronometerTickListener() {

public void onChronometerTick(Chronometer arg0) {

boolean isAlert = false;

if (mStopWatch.measurementIsFinished() && !isAlert) {

mCallback.onTimerEnd(mTime);

stopTimer();

isAlert = true;

}

}

}

);

return rootView;

}

/**

* Start timing

*/

public void start() {

// System.out.println("Timing started...");

// Show the TextView that displays the recording time

m_csbtn_recordtime.setVisibility(View.VISIBLE);

// Reset the chronometer base and start the chronometer

m_chronometer_recordtime.setBase(SystemClock.elapsedRealtime());

m_chronometer_recordtime.start();

// Update the display on every tick

m_chronometer_recordtime

.setOnChronometerTickListener(new OnChronometerTickListener() {

// Tracks the recording time

@Override

public void onChronometerTick(Chronometer chronometer) {

// TODO Auto-generated method stub

// Set the text of the TextView that shows the recording time

String time = chronometer.getText().toString();

System.out.println("time: " + time);

// Make sure the time is displayed in the format 00:00:00

if (time.length() == 5) {

m_csbtn_recordtime.setText("00:"

+ chronometer.getText());

} else if (time.length() == 7) {

m_csbtn_recordtime.setText("0"

+ chronometer.getText());

} else if (time.length() == 8) {

m_csbtn_recordtime.setText(chronometer.getText());

}

if (WiCameraActivity.mCurrentDegree == 90||WiCameraActivity.mCurrentDegree == 270) {

WiCameraActivity.m_al_camera_overlayui

.postInvalidate();

}

}

});

}

@Override

public View onCreateView(LayoutInflater inflater, ViewGroup container, Bundle savedInstanceState) {

fragmentView = (LinearLayout) inflater.inflate(R.layout.main, container, false);

layout1 = (LinearLayout) fragmentView.findViewById(R.id.linearLayout1);

layout2 = (LinearLayout) fragmentView.findViewById(R.id.linearLayout2);

layout4 = (LinearLayout) fragmentView.findViewById(R.id.linearLayout4);

layout5 = (LinearLayout) fragmentView.findViewById(R.id.linearLayout5);

defineWidgets(fragmentView);

if (savedInstanceState != null)

chrono.setBase(chronoBaseValue);

chrono.setOnChronometerTickListener(new OnChronometerTickListener() {

public void onChronometerTick(Chronometer arg0) {

showCalculatedData(arg0);

if (prefs.getInt("practice_display5",TypesOfPractices.BASIC_PRACTICE.getTypes()) == TypesOfPractices.HIIT_PRACTICE

.getTypes())

showHiitTrainingDataIndisplay(arg0);

}

});

mapView = (MapView) fragmentView.findViewById(R.id.practice_mapview);

mapView.getOverlays().clear();

mapView.setBuiltInZoomControls(true);

mapView.setMultiTouchControls(true);

mapView.setUseSafeCanvas(true);

setHardwareaccelerationOff();

mapController = (MapController) mapView.getController();

ScaleBarOverlay mScaleBarOverlay = new ScaleBarOverlay(mContext);

mapView.getOverlays().add(mScaleBarOverlay);

pathOverlay = new PathOverlay(Color.BLUE,mContext);

pathOverlay.getPaint().setStyle(Style.STROKE);

pathOverlay.getPaint().setStrokeWidth(3);

pathOverlay.getPaint().setAntiAlias(true);

mapView.getOverlays().add(pathOverlay);

this.mCompassOverlay = new CompassOverlay(mContext,new InternalCompassOrientationProvider(mContext),mapView);

mCompassOverlay.setEnabled(true);

mapView.getOverlays().add(mCompassOverlay);

this.mLocationOverlay = new MyLocationNewOverlay(mContext,new GpsMyLocationProvider(mContext),mapView);

mLocationOverlay.setDrawAccuracyEnabled(true);

mapView.getOverlays().add(mLocationOverlay);

MapOverlay touchOverlay = new MapOverlay(mContext);

mapView.getOverlays().add(touchOverlay);

mLocationOverlay.runOnFirstFix(new Runnable() {

public void run() {

if (centerPoint == null)

centerPoint = mLocationOverlay.getMyLocation();

centerMyLocation(centerPoint);

}

});

mapView.postInvalidate();

return fragmentView;

}

@Override

protected void onStart() {

// TODO Auto-generated method stub

super.onStart();

stopwatch.setOnChronometerTickListener(new OnChronometerTickListener() {

@Override

public void onChronometerTick(Chronometer arg0) {

int countup = (int) ((SystemClock.elapsedRealtime() - arg0

.getBase()) / 1000);

String asText = (countup / 60) + ":" + (countup % 60);

tvtimer.setText("Time Elapsed :- " + asText);

}

});

}

private void setonChronometer(){

ch.setOnChronometerTickListener(new OnChronometerTickListener() {

@Override

public void onChronometerTick(Chronometer chronometer) {

long aux = SystemClock.elapsedRealtime() - chronometer.getBase();

hmmss = timeFormat.format(aux);

chronometer.setText(hmmss);

}

});

}

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

getfigureFromPreferences();

getTypeFromPreferences();

musicOn();

play();

oldfigure=Preferences.getfigureName(this);

//ActionBar actionbar=getActionBar(); //SDK 11 Needed!

setStopWatch((Chronometer) findViewById(R.id.chrono));

startTime = SystemClock.elapsedRealtime();

//textGoesHere = (TextView) findViewById(R.id.textGoesHere);

getStopWatch().setOnChronometerTickListener(new OnChronometerTickListener(){

@Override

public void onChronometerTick(Chronometer arg0) {

countUp = (SystemClock.elapsedRealtime() - arg0.getBase()) / 1000;

//String asText = (countUp / 60) + ":" + (countUp % 60);

// textGoesHere.setText(asText);

game.setSeconds((int)countUp);

setPlayerNameFromsetUPreferences();

game.setCurrentPlayer(playerName);

}

});

getStopWatch().start();

}
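Several of the listeners above turn elapsed time into display text by hand, either by padding based on string length or by concatenating minutes and seconds without zero padding (which yields text like "1:5"). A small formatter avoids both. This is an independent sketch in plain Java: ElapsedFormat and hms are hypothetical names, and the input is assumed to be SystemClock.elapsedRealtime() minus chronometer.getBase(), in milliseconds.

```java
import java.util.Locale;

public class ElapsedFormat {
    /** Formats elapsed milliseconds as HH:MM:SS; negative input is treated as zero. */
    static String hms(long elapsedMillis) {
        long totalSeconds = Math.max(0L, elapsedMillis) / 1000L;
        long hours = totalSeconds / 3600L;
        long minutes = (totalSeconds % 3600L) / 60L;
        long seconds = totalSeconds % 60L;
        // Locale.ROOT keeps the digits ASCII regardless of the device locale.
        return String.format(Locale.ROOT, "%02d:%02d:%02d", hours, minutes, seconds);
    }

    public static void main(String[] args) {
        System.out.println(hms(65_000L));    // prints 00:01:05
        System.out.println(hms(3_725_000L)); // prints 01:02:05
    }
}
```

Inside a tick listener this could be used as textView.setText(ElapsedFormat.hms(SystemClock.elapsedRealtime() - chronometer.getBase())).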

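Apache Spark: "failed to launch org.apache.spark.deploy.worker.Worker" or Master

I created a Spark cluster on OpenStack running on Ubuntu 14.04 with 8 GB of RAM. I created two virtual machines with 3 GB each (keeping 2 GB for the host OS). I then started a master and two workers on the first virtual machine, and three workers on the second.

The spark-env.sh file has the basic settings:

export SPARK_MASTER_IP=10.0.0.30
export SPARK_WORKER_INSTANCES=2
export SPARK_WORKER_MEMORY=1g
export SPARK_WORKER_CORES=1

Whenever I deploy the cluster with start-all.sh, I get "failed to launch org.apache.spark.deploy.worker.Worker", and sometimes "failed to launch org.apache.spark.deploy.master.Master". When I look at the log file for the error, I see the following: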

Spark Command: /usr/lib/jvm/java-7-openjdk-amd64/bin/java -cp /home/ubuntu/spark-1.5.1/sbin/../conf/:/home/ubuntu/spark-1.5.1/assembly/target/scala-2.10/spark-assembly-1.5.1-hadoop2.2.0.jar:/home/ubuntu/spark-1.5.1/lib_managed/jars/datanucleus-api-jdo-3.2.6.jar:/home/ubuntu/spark-1.5.1/lib_managed/jars/datanucleus-core-3.2.10.jar:/home/ubuntu/spark-1.5.1/lib_managed/jars/datanucleus-rdbms-3.2.9.jar -Xms1g -Xmx1g -XX:MaxPermSize=256m org.apache.spark.deploy.master.Master --ip 10.0.0.30 --port 7077 --webui-port 8080

Although I get the failure message, the master or worker comes alive after a few seconds.

Can someone please explain the reason?

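Spark's configuration system is a mess of environment variables, argument flags, and Java properties files. I spent a few hours tracking down the same warning and unraveling the Spark initialization procedure. Here is what I found:

1. sbin/start-all.sh calls sbin/start-master.sh (and then sbin/start-slaves.sh)

2. sbin/start-master.sh calls sbin/spark-daemon.sh start org.apache.spark.deploy.master.Master ...

3. sbin/spark-daemon.sh start ... forks off a call to bin/spark-class org.apache.spark.deploy.master.Master ..., captures the resulting process ID (pid), sleeps for 2 seconds, and then checks whether that pid's command name is "java"

4. bin/spark-class is a bash script, so it starts out with the command name "bash", and proceeds to:

   4.1 (re-)load the Spark environment by sourcing bin/load-spark-env.sh

   4.2 find the java executable

   4.3 find the right Spark jar

   4.4 call java ... org.apache.spark.launcher.Main ... to get the full classpath needed for a Spark deployment

   4.5 finally hand over control, via exec, to java ... org.apache.spark.deploy.master.Master, at which point the command name becomes "java"

If steps 4.1 through 4.5 take longer than 2 seconds, which in my (and your) experience seems almost inevitable on a fresh OS where java has never been run before, you get the "failed to launch" message even though nothing has actually failed.

The slaves complain for the same reason and thrash around until the master is actually available, but they should keep retrying until they successfully connect to the master.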
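The false alarm described above comes from checking once after a fixed sleep. A common alternative is to poll until a deadline. The sketch below only illustrates that idea in Java; it is not how Spark's scripts work, and ReadinessPoll and waitUntil are hypothetical names.

```java
import java.util.function.BooleanSupplier;

public class ReadinessPoll {
    /**
     * Polls the condition every pollMs until it holds or timeoutMs has elapsed.
     * Returns true as soon as the condition holds, false on timeout.
     */
    static boolean waitUntil(BooleanSupplier ready, long timeoutMs, long pollMs)
            throws InterruptedException {
        long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
        while (true) {
            if (ready.getAsBoolean()) {
                return true;
            }
            // Compare by subtraction so the check stays correct if nanoTime wraps.
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            Thread.sleep(pollMs);
        }
    }

    public static void main(String[] args) throws InterruptedException {
        long start = System.currentTimeMillis();
        // Stand-in for "the process command name has become java".
        boolean up = waitUntil(() -> System.currentTimeMillis() - start >= 300, 5_000, 50);
        System.out.println(up ? "launched" : "failed to launch");
    }
}
```

A slow start then only delays the success report instead of producing a spurious failure, at the cost of waiting the full timeout when the process really did fail.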

I run a fairly standard Spark deployment on EC2; I use:

- conf/spark-defaults.conf to set spark.executor.memory and to add some custom jars via spark.{driver,executor}.extraClassPath

- conf/spark-env.sh to set SPARK_WORKER_CORES=$(($(nproc) * 2))

- conf/slaves to list my slaves

Here is how I start a Spark deployment, bypassing some of the {bin,sbin}/*.sh minefield/maze:

# on master, with SPARK_HOME and conf/slaves set appropriately
mapfile -t ARGS < <(java -cp $SPARK_HOME/lib/spark-assembly-1.6.1-hadoop2.6.0.jar org.apache.spark.launcher.Main org.apache.spark.deploy.master.Master | tr '\0' '\n')
# $ARGS now contains the full call to start the master, which I daemonize with nohup
SPARK_PUBLIC_DNS=0.0.0.0 nohup "${ARGS[@]}" >> $SPARK_HOME/master.log 2>&1 < /dev/null &

I still use sbin/spark-daemon.sh to start the slaves, since that is simpler than calling nohup inside the ssh command:

MASTER=spark://$(hostname -i):7077
while read -r; do
  ssh -o StrictHostKeyChecking=no $REPLY "$SPARK_HOME/sbin/spark-daemon.sh start org.apache.spark.deploy.worker.Worker 1 $MASTER" &
done <$SPARK_HOME/conf/slaves
# this forks the ssh calls, so wait for them to exit before you log out

There! It assumes that I am using all the default ports and so on, and that I am not doing anything silly like putting whitespace in file names, but I think it is cleaner this way.

总结

以上是小编为你收集整理的Apache Spark:“未能启动org.apache.spark.deploy.worker.Worker”或Master全部内容。

如果觉得小编网站内容还不错,欢迎将小编网站推荐给好友。

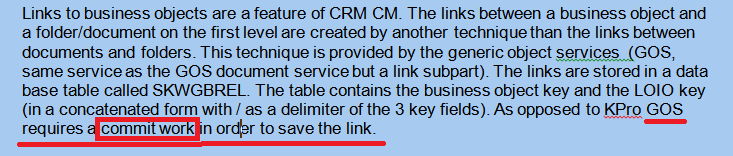

attachment delete deletion commit work issue

Sent: Friday, November 29, 2013 7:44 PM

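When I created the document directly, I did it inside a test report. Without COMMIT WORK, the document could not be read back after it was created; it only worked once COMMIT WORK was added.

The relationship between the host BO and its attachments is maintained through GOS.

The GOS methods are triggered, in a new update process, only when COMMIT WORK appears in the code. To debug them you must switch on update debugging.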

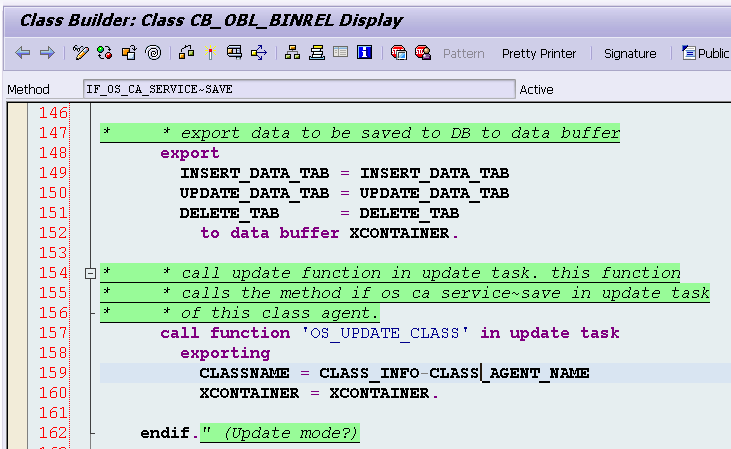

![image](https://user-images.githubusercontent.com/5669954/32708070-faa50bc2-c862-11e7-8efd-0f311fd0cf84.png)

The actual link is stored here; without COMMIT WORK, none of this code is executed:

When the End button is clicked in IC, the link between the BO and the interaction is saved in a similar way: the application calls a commit, which triggers the Genil framework to execute a COMMIT WORK.

![image](https://user-images.githubusercontent.com/5669954/32708072-fb1263ac-c862-11e7-9b95-f211aa2eaf6b.png)

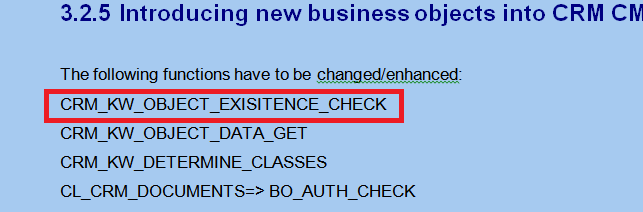

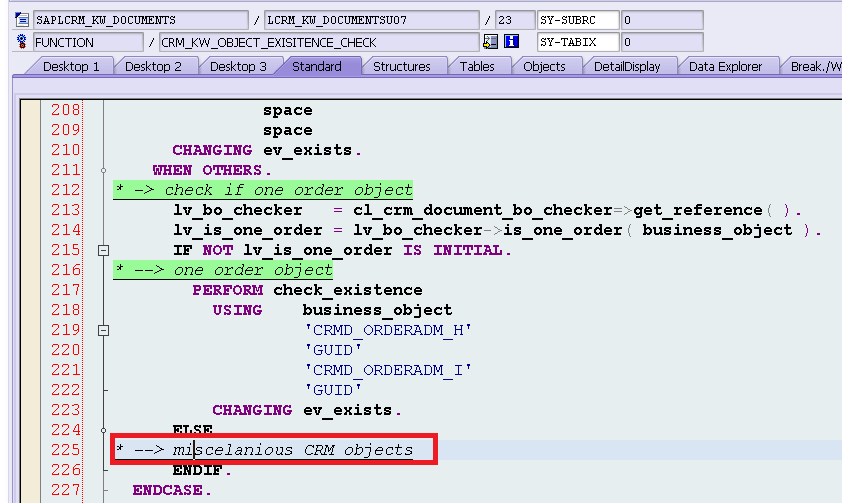

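I found the reason why our social post and the service request behave inconsistently.

The problem is that we did not enhance the FM marked in red:

It checks whether the current BO exists in the DB and, based on the result, decides whether an explicit commit is needed:

![image](https://user-images.githubusercontent.com/5669954/32708075-fb7a1b6e-c862-11e7-94c7-1bd283fff4a0.png)

Our social post falls into the WHEN OTHERS branch because the FM has no case for it, so ev_exist defaults to false. That is why an explicit COMMIT WORK is always needed before the link is really deleted.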

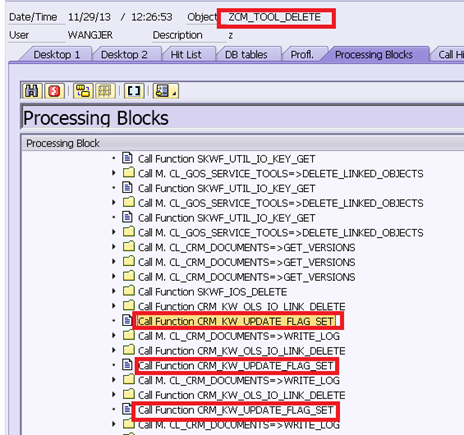

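So we either enhance the FM above, or keep using COMMIT WORK in our code.

The same code behaves differently depending on whether the input is a service request or our social post, and the code is unfamiliar to us.

So I ran SAT for each case and compared the two results, which quickly revealed the root cause.

Our case is on the right; the FM in red should not appear. A little analysis shows why it was called by mistake, which in turn identifies the root cause.

Did you ever find the answer to that debugging exercise from last time?

![image](https://user-images.githubusercontent.com/5669954/32708077-fbe8f340-c862-11e7-90c5-5ede288dc95a.png)

This article is also published on the blog "汪子熙" (CSDN).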

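BackgroundWorker RunWorkerCompleted event

My C# application has several background workers. Sometimes one background worker starts another. When the first background worker completes and its RunWorkerCompleted event fires, on which thread does the event fire: the UI thread, or the thread from which RunWorkerAsync was called? I am using Microsoft Visual C# 2008 Express Edition. Any thoughts or suggestions are welcome. Thanks.

Answer 1

If the BackgroundWorker was created on the UI thread, the RunWorkerCompleted event is also raised on the UI thread.

If it was created on a background thread, the event is raised on an undefined background thread (not necessarily the same thread, unless you use a custom SynchronizationContext).

Interestingly, this does not seem to be well documented on MSDN. The best reference I could find is this:

The preferred way to implement multithreading in your application is to use the BackgroundWorker component. The BackgroundWorker component uses an event-driven model for multithreading.

The background thread runs your DoWork event handler, and the thread that creates your controls runs your ProgressChanged and RunWorkerCompleted event handlers. You can call your controls from your ProgressChanged and RunWorkerCompleted event handlers.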
