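For readers who want to understand how to connect to Kubernetes pods with the Ansible kubectl plugin and how the plugin works, this page covers that topic in detail and also collects related material: a30. Ansible production case study (installing Kubernetes with kubeadm v1.20: kubeadm upgrade); docker – Kubectl: Kubernetes and minikube timeouts; Jenkins deployed in k8s: using withKubeConfig from the Kubernetes CLI plugin to run kubectl scripts; and managing a Kubernetes cluster with kubectl.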

Contents:

- Connecting to Kubernetes pods with the Ansible kubectl plugin, and how it works

- a30. Ansible production case study: installing Kubernetes with kubeadm v1.20 (kubeadm upgrade)

- docker – Kubectl: Kubernetes and minikube timeouts

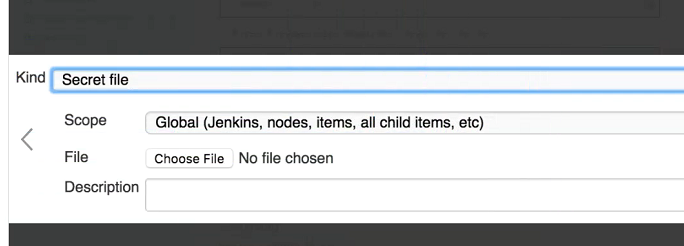

- Jenkins deployed in k8s: using withKubeConfig from the Kubernetes CLI plugin to run kubectl scripts

- Managing a Kubernetes cluster with kubectl

Connecting to Kubernetes pods with the Ansible kubectl plugin, and how it works

ansible kubectl connection plugin

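Ansible is currently one of the most popular automation tools for operations work. It connects to target machines over SSH and runs the specified commands or operations there.

In a Kubernetes cluster, not every service is fully containerized in practice, so Ansible is still sometimes used for batch operations work.

One approach is to start an SSH daemon inside the container and connect to it. However, not every container runs SSH.

For this case I thought of connecting directly through kubectl, so I developed a kubectl connection plugin and contributed it to the community.

The plugin works without an SSH service in the container. It has been merged into Ansible's main branch and ships with Ansible from version 2.5 onward.

For detailed usage, see the plugin documentation.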

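Installation and usage

Ansible 2.5 and later include this connection plugin, so those versions support it out of the box. For earlier versions you need to merge the PR yourself.

PR reference: https://github.com/ansible/ansible/commit/ca4eb07f46e7e3112757cb1edc7bd71fdd6dacad#diff-12d9b364560fd0bec5a1cee5bd0b3c24

The connection plugin relies on the kubectl binary, so install kubectl first. It is usually placed under /usr/bin.

Example

Below is an inventory configuration.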

[root@f34cee76e36a kubesql]# cat inventory

[kube-test:vars]

ansible_connection=kubectl

ansible_kubectl_kubeconfig=/etc/kubeconfig

[kube-test]

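hcnmore-32385abb-9f753dda-nxr8h ansible_kubectl_namespace=nevermore

For the kube-test group, ansible_connection=kubectl selects the kubectl connection plugin.

ansible_kubectl_kubeconfig gives the location of the kubeconfig to use. Connecting without authentication, with a username and password, and so on is also supported; see the official documentation for those settings.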
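As a rough illustration of the inventory layout described above, the following Python sketch renders the same file for a list of pods. The helper name `render_inventory` is hypothetical and not part of Ansible; only the three variables `ansible_connection`, `ansible_kubectl_kubeconfig` and `ansible_kubectl_namespace` come from the example.

```python
def render_inventory(group, kubeconfig, pods):
    """Render an INI inventory that reaches each pod through the kubectl plugin.

    `pods` is a list of (pod_name, namespace) tuples. This helper is a
    hypothetical illustration, not an Ansible API.
    """
    lines = [
        f"[{group}:vars]",
        "ansible_connection=kubectl",
        f"ansible_kubectl_kubeconfig={kubeconfig}",
        "",
        f"[{group}]",
    ]
    for pod, namespace in pods:
        # The namespace is set per host, as in the example inventory.
        lines.append(f"{pod} ansible_kubectl_namespace={namespace}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_inventory(
        "kube-test",
        "/etc/kubeconfig",
        [("hcnmore-32385abb-9f753dda-nxr8h", "nevermore")],
    ))
```

Generating the file this way only makes sense when pod names change often; for a fixed pod the hand-written inventory is simpler.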

Once the inventory is configured, Ansible can be used as usual. Here we check the current working directory.

[root@f34cee76e36a kubesql]# ansible -i inventory kube-test -m shell -a "pwd"

hcnmore-32385abb-9f753dda-nxr8h | CHANGED | rc=0 >>

/

Using it from a playbook

The connection plugin also supports running batch tasks from a playbook. Below is a sample playbook.

- hosts: kube-test
  gather_facts: False
  tasks:
    - shell: pwd

We reuse the inventory from the previous example and run with -v to see the details.

[root@f34cee76e36a kubesql]# ansible-playbook -i inventory playbook -v

Using /etc/ansible/ansible.cfg as config file

PLAY [kube-test] *************************************************************************************************************************************************************************************************

TASK [shell] *****************************************************************************************************************************************************************************************************

changed: [hcnmore-32385abb-9f753dda-nxr8h] => {"changed": true, "cmd": "pwd", "delta": "0:00:00.217860", "end": "2019-03-18 20:55:39.479859", "rc": 0, "start": "2019-03-18 20:55:39.261999", "stderr": "", "stderr_lines": [], "stdout": "/", "stdout_lines": ["/"]}

PLAY RECAP *******************************************************************************************************************************************************************************************************

hcnmore-32385abb-9f753dda-nxr8h : ok=1 changed=1 unreachable=0 failed=0

The command ran successfully on the target.

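Advantages and drawbacks

The kubectl connection plugin supports more than running commands: basic operations such as copying files to the target and fetching files back also work. More complex operations such as running shell commands, running script files and managing keys can go through the plugin as well. Some of these need Python or another runtime on the target, depending on what each module requires.

The oc plugin for OpenShift is used in much the same way as kubectl, so it is not covered separately here.

The kubectl plugin handles most day-to-day operations tasks, but it has a drawback: it connects through the kubectl binary. Every connection starts a kubectl process, and connecting is not fast, so the plugin is not well suited to high-frequency operations across large numbers of containers.

My suggestion is to use the plugin for tasks such as injecting keys or occasionally starting and stopping services. For large-scale batch operations, SSH is still the better choice.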

How it works

ansible connection plugin

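Ansible has many connection plugins, including ssh, docker and kubectl. Implementing one mainly means implementing three methods:

- exec_command: run a command on the target and return the result.

- put_file: send a local file to the target.

- fetch_file: pull a file from the target back to the local machine.

These three methods correspond to three built-in modules: raw, copy and fetch. Almost all of Ansible's more complex functionality is built on top of them. For example, shell and script work by copying a script (or a script generated from the command) to the target and then executing it there.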
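To make the three methods concrete, here is a minimal sketch of a class with the same three entry points. It is not Ansible's actual connection plugin base class, whose methods take more arguments; it treats the local machine as the "target" and runs everything through `sh`. The names `LocalShellConnection` and `run_script` are made up for illustration.

```python
import shlex
import shutil
import subprocess


class LocalShellConnection:
    """Toy stand-in for a connection plugin: the target is the local machine."""

    def exec_command(self, cmd):
        """Run a shell command on the target and return (rc, stdout, stderr)."""
        proc = subprocess.run(["sh", "-c", cmd], capture_output=True, text=True)
        return proc.returncode, proc.stdout, proc.stderr

    def put_file(self, in_path, out_path):
        """Send a local file to the target."""
        shutil.copyfile(in_path, out_path)

    def fetch_file(self, in_path, out_path):
        """Pull a file from the target back to the local machine."""
        shutil.copyfile(in_path, out_path)


def run_script(conn, local_script, remote_path):
    """A higher-level operation built only from the primitives:
    upload the script with put_file, then execute it with exec_command."""
    conn.put_file(local_script, remote_path)
    return conn.exec_command("sh " + shlex.quote(remote_path))
```

`run_script` mirrors the idea described above: once the three primitives exist, operations like script execution need no further support from the transport.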

kubectl exec

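kubectl exec is naturally suited to running commands. The docker client has a cp feature, but kubectl originally did not (kubectl has supported cp since version 1.5). The plugin uses a small trick here: it combines the dd command with kubectl exec to push and pull files.

This means the target container must also have the dd command, otherwise the plugin cannot work.

dd is mainly used to read, convert and write data. To copy a file src to another location dest, use dd if=/tmp/src of=/tmp/dest. dd can also read from standard input and write to standard output, so the same copy can be written as dd if=/tmp/src | dd of=/tmp/dest.

With that in mind, kubectl can be used to transfer files. To send a file into a container, use dd if=/tmp/src | kubectl exec -i podname -- dd of=/tmp/dest. In the other direction, to pull a file out of a container, use kubectl exec -i podname -- dd if=/tmp/src | dd of=/tmp/dest.

Note that the -i flag is required when sending a file into the container, because -i is what lets kubectl read data from standard input.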

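In this way kubectl exec provides command execution, file upload and file download. Implementing these in an Ansible connection plugin brings Kubernetes pod containers under Ansible's control. The implementation code is not repeated here; for details, see the plugin source.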
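The dd-over-kubectl-exec idea can be sketched in Python as follows. This is an illustration under assumptions, not the plugin's real code: the function names are hypothetical, and only the `kubectl exec -i <pod> -- dd ...` command shape comes from the text above. `--kubeconfig` and `-n` are standard kubectl flags.

```python
import subprocess


def kubectl_exec_prefix(pod, namespace=None, kubeconfig=None):
    """Build the 'kubectl ... exec -i <pod> --' part shared by both directions."""
    cmd = ["kubectl"]
    if kubeconfig:
        cmd += ["--kubeconfig", kubeconfig]
    if namespace:
        cmd += ["-n", namespace]
    # -i keeps stdin open so dd inside the container can read piped data.
    cmd += ["exec", "-i", pod, "--"]
    return cmd


def put_file_command(pod, remote_path, **kw):
    """Command whose stdin should be fed the local file's bytes."""
    return kubectl_exec_prefix(pod, **kw) + ["dd", f"of={remote_path}"]


def fetch_file_command(pod, remote_path, **kw):
    """Command whose stdout carries the remote file's bytes."""
    return kubectl_exec_prefix(pod, **kw) + ["dd", f"if={remote_path}"]


def put_file(pod, local_path, remote_path, **kw):
    """Stream a local file into the pod (needs kubectl and a reachable cluster)."""
    with open(local_path, "rb") as src:
        subprocess.run(put_file_command(pod, remote_path, **kw), stdin=src, check=True)


def fetch_file(pod, remote_path, local_path, **kw):
    """Stream a file out of the pod into a local file."""
    with open(local_path, "wb") as dst:
        subprocess.run(fetch_file_command(pod, remote_path, **kw), stdout=dst, check=True)
```

Passing the file as the subprocess's stdin or stdout plays the role of the local `dd` on the other side of the pipe, so only the container needs dd.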

Original article: https://www.cnblogs.com/xuxinkun/p/10556900.html

a30. Ansible production case study: installing Kubernetes with kubeadm v1.20 (kubeadm upgrade)

19. Upgrading k8s

19.1 Upgrading the masters

19.1.1 Upgrade the masters

[root@ansible-server ansible]# mkdir -p roles/kubeadm-update-master/{tasks,vars}

[root@ansible-server ansible]# cd roles/kubeadm-update-master/

[root@ansible-server kubeadm-update-master]# ls

tasks vars

[root@ansible-server kubeadm-update-master]# vim vars/main.yml

KUBEADM_VERSION: 1.20.15

HARBOR_DOMAIN: harbor.raymonds.cc

MASTER01: 172.31.3.101

MASTER02: 172.31.3.102

MASTER03: 172.31.3.103

[root@ansible-server kubeadm-update-master]# vim tasks/upgrade_master01.yml

- name: install CentOS or Rocky socat
  yum:
    name: socat
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - inventory_hostname in groups.ha
- name: install Ubuntu socat
  apt:
    name: socat
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - inventory_hostname in groups.ha
- name: down master01
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'disable server kubernetes-6443/{{ MASTER01 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"
- name: install CentOS or Rocky kubeadm for master
  yum:
    name: kubelet-{{ KUBEADM_VERSION }},kubeadm-{{ KUBEADM_VERSION }},kubectl-{{ KUBEADM_VERSION }}
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - ansible_hostname=="k8s-master01"
- name: install Ubuntu kubeadm for master
  apt:
    name: kubelet={{ KUBEADM_VERSION }}-00,kubeadm={{ KUBEADM_VERSION }}-00,kubectl={{ KUBEADM_VERSION }}-00
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - ansible_hostname=="k8s-master01"
- name: restart kubelet
  systemd:
    name: kubelet
    state: restarted
    daemon_reload: yes
  when:
    - ansible_hostname=="k8s-master01"
- name: get kubeadm version
  shell:
    cmd: kubeadm config images list --kubernetes-version=v{{ KUBEADM_VERSION }} | awk -F "/" '{print $NF}'
  register: KUBEADM_IMAGES_VERSION
  when:
    - ansible_hostname=="k8s-master01"
- name: download kubeadm image for master01
  shell: |
    {% for i in KUBEADM_IMAGES_VERSION.stdout_lines %}
    docker pull registry.aliyuncs.com/google_containers/{{ i }}
    docker tag registry.aliyuncs.com/google_containers/{{ i }} {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    docker rmi registry.aliyuncs.com/google_containers/{{ i }}
    docker push {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    {% endfor %}
  when:
    - ansible_hostname=="k8s-master01"
- name: kubeadm upgrade
  shell:
    cmd: |
      kubeadm upgrade apply v{{ KUBEADM_VERSION }} <<EOF
      y
      EOF
      sleep 240s
  when:
    - ansible_hostname=="k8s-master01"
- name: up master01
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'enable server kubernetes-6443/{{ MASTER01 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"
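The "down" and "up" tasks above send a text command to the HAProxy admin socket over SSH, and the quoting is the fragile part. The following Python sketch shows one way to build that command line with the message quoted as a single argument. The function names are hypothetical; the socket path, backend name and `root@k8s-lb` host are taken from the tasks.

```python
import shlex

HAPROXY_SOCKET = "/var/lib/haproxy/haproxy.sock"
BACKEND = "kubernetes-6443"


def haproxy_remote_command(action, server_ip):
    """Return the shell line to run on the load balancer.

    `action` is 'disable' or 'enable'. shlex.quote wraps the message in
    single quotes so echo receives it as one argument.
    """
    if action not in ("disable", "enable"):
        raise ValueError(f"unsupported action: {action}")
    message = f"{action} server {BACKEND}/{server_ip}"
    return f"echo {shlex.quote(message)} | socat stdio {HAPROXY_SOCKET}"


def ssh_argv(action, server_ip, lb_host="root@k8s-lb"):
    """Full argv for ssh; the remote command travels as one argument,
    so no second layer of local shell quoting is needed."""
    return [
        "ssh", "-o", "StrictHostKeyChecking=no", lb_host,
        haproxy_remote_command(action, server_ip),
    ]


if __name__ == "__main__":
    print(haproxy_remote_command("disable", "172.31.3.101"))
```

Building the argv as a list sidesteps the nested-quote problem entirely, which is why the sketch never assembles the whole `ssh ...` line as one string.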

[root@ansible-server kubeadm-update-master]# vim tasks/upgrade_master02.yml

- name: down master02
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'disable server kubernetes-6443/{{ MASTER02 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master02"
- name: install CentOS or Rocky kubeadm for master
  yum:
    name: kubelet-{{ KUBEADM_VERSION }},kubeadm-{{ KUBEADM_VERSION }},kubectl-{{ KUBEADM_VERSION }}
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - ansible_hostname=="k8s-master02"
- name: install Ubuntu kubeadm for master
  apt:
    name: kubelet={{ KUBEADM_VERSION }}-00,kubeadm={{ KUBEADM_VERSION }}-00,kubectl={{ KUBEADM_VERSION }}-00
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - ansible_hostname=="k8s-master02"
- name: restart kubelet
  systemd:
    name: kubelet
    state: restarted
    daemon_reload: yes
  when:
    - ansible_hostname=="k8s-master02"
- name: get kubeadm version
  shell:
    cmd: kubeadm config images list --kubernetes-version=v{{ KUBEADM_VERSION }} | awk -F "/" '{print $NF}'
  register: KUBEADM_IMAGES_VERSION
  when:
    - ansible_hostname=="k8s-master02"
- name: download kubeadm image for master02
  shell: |
    {% for i in KUBEADM_IMAGES_VERSION.stdout_lines %}
    docker pull {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    {% endfor %}
  when:
    - ansible_hostname=="k8s-master02"
- name: kubeadm upgrade
  shell:
    cmd: |
      kubeadm upgrade apply v{{ KUBEADM_VERSION }} <<EOF
      y
      EOF
      sleep 240s
  when:
    - ansible_hostname=="k8s-master02"
- name: up master02
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'enable server kubernetes-6443/{{ MASTER02 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master02"

[root@ansible-server kubeadm-update-master]# vim tasks/upgrade_master03.yml

- name: down master03
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'disable server kubernetes-6443/{{ MASTER03 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master03"
- name: install CentOS or Rocky kubeadm for master
  yum:
    name: kubelet-{{ KUBEADM_VERSION }},kubeadm-{{ KUBEADM_VERSION }},kubectl-{{ KUBEADM_VERSION }}
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - ansible_hostname=="k8s-master03"
- name: install Ubuntu kubeadm for master
  apt:
    name: kubelet={{ KUBEADM_VERSION }}-00,kubeadm={{ KUBEADM_VERSION }}-00,kubectl={{ KUBEADM_VERSION }}-00
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - ansible_hostname=="k8s-master03"
- name: restart kubelet
  systemd:
    name: kubelet
    state: restarted
    daemon_reload: yes
  when:
    - ansible_hostname=="k8s-master03"
- name: get kubeadm version
  shell:
    cmd: kubeadm config images list --kubernetes-version=v{{ KUBEADM_VERSION }} | awk -F "/" '{print $NF}'
  register: KUBEADM_IMAGES_VERSION
  when:
    - ansible_hostname=="k8s-master03"
- name: download kubeadm image for master03
  shell: |
    {% for i in KUBEADM_IMAGES_VERSION.stdout_lines %}
    docker pull {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    {% endfor %}
  when:
    - ansible_hostname=="k8s-master03"
- name: kubeadm upgrade
  shell:
    cmd: |
      kubeadm upgrade apply v{{ KUBEADM_VERSION }} <<EOF
      y
      EOF
      sleep 240s
  when:
    - ansible_hostname=="k8s-master03"
- name: up master03
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'enable server kubernetes-6443/{{ MASTER03 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master03"

[root@ansible-server kubeadm-update-master]# vim tasks/main.yml

- include_tasks: upgrade_master01.yml
- include_tasks: upgrade_master02.yml
- include_tasks: upgrade_master03.yml

[root@ansible-server kubeadm-update-master]# cd ../../

[root@ansible-server ansible]# tree roles/kubeadm-update-master/

roles/kubeadm-update-master/
├── tasks
│   ├── main.yml
│   ├── upgrade_master01.yml
│   ├── upgrade_master02.yml
│   └── upgrade_master03.yml
└── vars
    └── main.yml

2 directories, 5 files

[root@ansible-server ansible]# vim kubeadm_update_master_role.yml

---
- hosts: k8s_cluster:ha
  roles:
    - role: kubeadm-update-master

[root@ansible-server ansible]# ansible-playbook kubeadm_update_master_role.yml

19.1.2 Verify the masters

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane,master 18h v1.20.15

k8s-master02.example.local Ready control-plane,master 18h v1.20.15

k8s-master03.example.local Ready control-plane,master 18h v1.20.15

k8s-node01.example.local Ready <none> 18h v1.20.14

k8s-node02.example.local Ready <none> 18h v1.20.14

k8s-node03.example.local Ready <none> 18h v1.20.14
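The check above is done by eye. As a small sketch, the same verification can be automated by parsing the `kubectl get nodes` table and listing nodes whose VERSION column differs from the target. The function name `nodes_not_at` is hypothetical, and the sample text is the output shown above.

```python
def nodes_not_at(version, kubectl_output):
    """Return the names of nodes whose VERSION column differs from `version`.

    Assumes the default `kubectl get nodes` layout, where NAME is the first
    column and VERSION is the last.
    """
    lines = kubectl_output.strip().splitlines()
    behind = []
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if not fields:
            continue
        name, node_version = fields[0], fields[-1]
        if node_version != version:
            behind.append(name)
    return behind


SAMPLE = """\
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready control-plane,master 18h v1.20.15
k8s-master02.example.local Ready control-plane,master 18h v1.20.15
k8s-master03.example.local Ready control-plane,master 18h v1.20.15
k8s-node01.example.local Ready <none> 18h v1.20.14
k8s-node02.example.local Ready <none> 18h v1.20.14
k8s-node03.example.local Ready <none> 18h v1.20.14
"""

if __name__ == "__main__":
    print(nodes_not_at("v1.20.15", SAMPLE))
```

At this stage only the three worker nodes are reported, which matches the table: the masters are on v1.20.15 and the nodes are still on v1.20.14.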

19.2 Upgrading calico

[root@ansible-server ansible]# mkdir -p roles/calico-update/{tasks,vars,templates}

[root@ansible-server ansible]# cd roles/calico-update

[root@ansible-server calico-update]# ls

tasks templates vars

[root@ansible-server calico-update]# vim vars/main.yml

HARBOR_DOMAIN: harbor.raymonds.cc

MASTER01: 172.31.3.101

MASTER02: 172.31.3.102

MASTER03: 172.31.3.103

[root@ansible-server calico-update]# wget https://docs.projectcalico.org/manifests/calico-etcd.yaml -O templates/calico-etcd.yaml.j2

[root@ansible-server calico-update]# vim templates/calico-etcd.yaml.j2

...
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: OnDelete # change this: calico will not roll out on its own; each pod is updated only when it is deleted
  template:
    metadata:
      labels:
        k8s-app: calico-node
...

# Modify the following

[root@ansible-server calico-update]# grep "etcd_endpoints:.*" templates/calico-etcd.yaml.j2

etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"

[root@ansible-server calico-update]# sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "{% for i in groups.master %}https://{{ hostvars[i].ansible_default_ipv4.address }}:2379{% if not loop.last %},{% endif %}{% endfor %}"#g' templates/calico-etcd.yaml.j2

[root@ansible-server calico-update]# grep "etcd_endpoints:.*" templates/calico-etcd.yaml.j2

etcd_endpoints: "{% for i in groups.master %}https://{{ hostvars[i].ansible_default_ipv4.address }}:2379{% if not loop.last %},{% endif %}{% endfor %}"
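The Jinja loop above joins one HTTPS endpoint per master with commas and no trailing comma. A plain-Python sketch of the same result, assuming (this is not stated in the text) that each master's default IPv4 address is the corresponding `MASTER01` to `MASTER03` value from vars/main.yml:

```python
def etcd_endpoints(master_ips, port=2379):
    """Join one https endpoint per master with commas, as the template loop does."""
    return ",".join(f"https://{ip}:{port}" for ip in master_ips)


if __name__ == "__main__":
    # Assumed addresses: the MASTER01..MASTER03 values from vars/main.yml.
    print(etcd_endpoints(["172.31.3.101", "172.31.3.102", "172.31.3.103"]))
```

The `{% if not loop.last %},{% endif %}` guard in the template does the job that `",".join` does here.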

[root@ansible-server calico-update]# vim tasks/calico_file.yml

- name: copy calico-etcd.yaml file
  template:
    src: calico-etcd.yaml.j2
    dest: /root/calico-etcd.yaml
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server calico-update]# vim tasks/config.yml

- name: get ETCD_KEY key
  shell:
    cmd: cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'
  register: ETCD_KEY
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*etcd-key:.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '# (etcd-key:) null'
    replace: '\1 {{ ETCD_KEY.stdout }}'
  when:
    - ansible_hostname=="k8s-master01"
- name: get ETCD_CERT key
  shell:
    cmd: cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'
  register: ETCD_CERT
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*etcd-cert:.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '# (etcd-cert:) null'
    replace: '\1 {{ ETCD_CERT.stdout }}'
  when:
    - ansible_hostname=="k8s-master01"
- name: get ETCD_CA key
  shell:
    cmd: cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'
  when:
    - ansible_hostname=="k8s-master01"
  register: ETCD_CA
- name: Modify the ".*etcd-ca:.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '# (etcd-ca:) null'
    replace: '\1 {{ ETCD_CA.stdout }}'
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*etcd_ca:.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '(etcd_ca:) ""'
    replace: '\1 "/calico-secrets/etcd-ca"'
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*etcd_cert:.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '(etcd_cert:) ""'
    replace: '\1 "/calico-secrets/etcd-cert"'
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*etcd_key:.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '(etcd_key:) ""'
    replace: '\1 "/calico-secrets/etcd-key"'
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*CALICO_IPV4POOL_CIDR.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '# (- name: CALICO_IPV4POOL_CIDR)'
    replace: '\1'
  when:
    - ansible_hostname=="k8s-master01"
- name: get POD_subnet
  shell:
    cmd: cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'
  register: POD_subnet
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the ".*192.168.0.0.*" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '#   (value:) "192.168.0.0/16"'
    replace: '  \1 "{{ POD_subnet.stdout }}"'
  when:
    - ansible_hostname=="k8s-master01"
- name: Modify the "image:" line
  replace:
    path: /root/calico-etcd.yaml
    regexp: '(.*image:) docker.io/calico(/.*)'
    replace: '\1 {{ HARBOR_DOMAIN }}/google_containers\2'
  when:
    - ansible_hostname=="k8s-master01"
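Each replace task above is a regular-expression substitution with a back-reference. The Python sketch below imitates a few of them on a tiny sample so the effect of `\1` and `\2` is visible. It assumes the replace module applies the pattern in multiline mode over the whole file; the sample fragment, the dummy key bytes and the `v0.0.0` image tag are placeholders, not values from a real manifest.

```python
import base64
import re


def apply_replace(text, regexp, replacement):
    """Imitate a replace task: regex substitution over the whole text."""
    return re.sub(regexp, replacement, text, flags=re.MULTILINE)


def b64_one_line(data):
    """Base64-encode bytes onto a single line, like `base64` with newlines stripped."""
    return base64.b64encode(data).decode("ascii")


# Placeholder fragment standing in for parts of calico-etcd.yaml.
SAMPLE = (
    "  # etcd-key: null\n"
    '  etcd_key: ""\n'
    "          image: docker.io/calico/node:v0.0.0\n"
)


def configure(text, key_bytes, harbor_domain):
    """Apply three of the substitutions from config.yml in order."""
    # Uncomment the secret entry and fill in the encoded key.
    text = apply_replace(text, r'# (etcd-key:) null', r'\1 ' + b64_one_line(key_bytes))
    # Point the config entry at the mounted secret path.
    text = apply_replace(text, r'(etcd_key:) ""', r'\1 "/calico-secrets/etcd-key"')
    # Swap the registry prefix while keeping indentation (\1) and image name (\2).
    text = apply_replace(
        text,
        r'(.*image:) docker.io/calico(/.*)',
        r'\1 ' + harbor_domain + r'/google_containers\2',
    )
    return text


if __name__ == "__main__":
    print(configure(SAMPLE, b"dummy-key", "harbor.raymonds.cc"))
```

Note how `etcd-key` (hyphen, the Secret entry) and `etcd_key` (underscore, the ConfigMap entry) are matched by different patterns, which is why the tasks come in pairs.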

[root@ansible-server calico-update]# vim tasks/download_images.yml

- name: get calico version
  shell:
    chdir: /root
    cmd: awk -F "/" '/image:/{print $NF}' calico-etcd.yaml
  register: CALICO_VERSION
  when:
    - ansible_hostname=="k8s-master01"
- name: download calico image
  shell: |
    {% for i in CALICO_VERSION.stdout_lines %}
    docker pull registry.cn-beijing.aliyuncs.com/raymond9/{{ i }}
    docker tag registry.cn-beijing.aliyuncs.com/raymond9/{{ i }} {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    docker rmi registry.cn-beijing.aliyuncs.com/raymond9/{{ i }}
    docker push {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    {% endfor %}
  when:
    - ansible_hostname=="k8s-master01"
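The two tasks above first extract image names with awk (the last "/"-separated field of every line containing `image:`) and then mirror each image into the private registry. A Python sketch of the same two steps, with hypothetical function names and a placeholder manifest fragment (the `v0.0.0` tags are not real versions):

```python
def image_names(manifest_text):
    """Imitate awk -F "/" '/image:/{print $NF}': for each line containing
    'image:', keep the text after the last '/'."""
    return [
        line.split("/")[-1]
        for line in manifest_text.splitlines()
        if "image:" in line
    ]


def mirror_commands(names, source_repo, target_repo):
    """List the docker commands that copy each image from source to target."""
    commands = []
    for name in names:
        src = f"{source_repo}/{name}"
        dst = f"{target_repo}/{name}"
        commands += [
            f"docker pull {src}",
            f"docker tag {src} {dst}",
            f"docker rmi {src}",   # drop the source tag once retagged
            f"docker push {dst}",
        ]
    return commands


if __name__ == "__main__":
    manifest = (
        "          image: harbor.raymonds.cc/google_containers/cni:v0.0.0\n"
        "          image: harbor.raymonds.cc/google_containers/node:v0.0.0\n"
    )
    for cmd in mirror_commands(
        image_names(manifest),
        "registry.cn-beijing.aliyuncs.com/raymond9",
        "harbor.raymonds.cc/google_containers",
    ):
        print(cmd)
```

Because only the last path component is kept, the extraction works the same whether the manifest still points at docker.io or has already been rewritten to the Harbor domain.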

[root@ansible-server calico-update]# vim tasks/install_calico.yml

- name: install calico
  shell:
    chdir: /root
    cmd: "kubectl --kubeconfig=/etc/kubernetes/admin.conf apply -f calico-etcd.yaml"
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server calico-update]# vim tasks/delete_master01_calico_container.yml

- name: down master01
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'disable server kubernetes-6443/{{ MASTER01 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"
- name: get calico container
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf get pod -n kube-system -o wide|grep calico |grep master01 |awk -F " " '{print $1}'
  register: CALICO_CONTAINER
  when:
    - ansible_hostname=="k8s-master01"
- name: delete calico container
  shell: |
    kubectl --kubeconfig=/etc/kubernetes/admin.conf delete pod {{ CALICO_CONTAINER.stdout }} -n kube-system
    sleep 30s
  when:
    - ansible_hostname=="k8s-master01"
- name: up master01
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'enable server kubernetes-6443/{{ MASTER01 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server calico-update]# vim tasks/delete_master02_calico_container.yml

- name: down master02
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'disable server kubernetes-6443/{{ MASTER02 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"
- name: get calico container
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf get pod -n kube-system -o wide|grep calico |grep master02 |awk -F " " '{print $1}'
  register: CALICO_CONTAINER
  when:
    - ansible_hostname=="k8s-master01"
- name: delete calico container
  shell: |
    kubectl --kubeconfig=/etc/kubernetes/admin.conf delete pod {{ CALICO_CONTAINER.stdout }} -n kube-system
    sleep 30s
  when:
    - ansible_hostname=="k8s-master01"
- name: up master02
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'enable server kubernetes-6443/{{ MASTER02 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server calico-update]# vim tasks/delete_master03_calico_container.yml

- name: down master03
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'disable server kubernetes-6443/{{ MASTER03 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"
- name: get calico container
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf get pod -n kube-system -o wide|grep calico |grep master03 |awk -F " " '{print $1}'
  register: CALICO_CONTAINER
  when:
    - ansible_hostname=="k8s-master01"
- name: delete calico container
  shell: |
    kubectl --kubeconfig=/etc/kubernetes/admin.conf delete pod {{ CALICO_CONTAINER.stdout }} -n kube-system
    sleep 30s
  when:
    - ansible_hostname=="k8s-master01"
- name: up master03
  shell:
    cmd: ssh -o StrictHostKeyChecking=no root@k8s-lb "echo 'enable server kubernetes-6443/{{ MASTER03 }}' | socat stdio /var/lib/haproxy/haproxy.sock"
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server calico-update]# vim tasks/main.yml

- include_tasks: calico_file.yml
- include_tasks: config.yml
- include_tasks: download_images.yml
- include_tasks: install_calico.yml
- include_tasks: delete_master01_calico_container.yml
- include_tasks: delete_master02_calico_container.yml
- include_tasks: delete_master03_calico_container.yml

[root@ansible-server calico-update]# cd ../../

[root@ansible-server ansible]# tree roles/calico-update/

roles/calico-update/
├── tasks
│   ├── calico_file.yml
│   ├── config.yml
│   ├── delete_master01_calico_container.yml
│   ├── delete_master02_calico_container.yml
│   ├── delete_master03_calico_container.yml
│   ├── download_images.yml
│   ├── install_calico.yml
│   └── main.yml
├── templates
│   └── calico-etcd.yaml.j2
└── vars
    └── main.yml

3 directories, 10 files

[root@ansible-server ansible]# vim calico_update_role.yml

---
- hosts: master
  roles:
    - role: calico-update

[root@ansible-server ansible]# ansible-playbook calico_update_role.yml

19.3 Nodes

19.3.1 Upgrade the nodes

[root@ansible-server ansible]# mkdir -p roles/kubeadm-update-node/{tasks,vars}

[root@ansible-server ansible]# cd roles/kubeadm-update-node/

[root@ansible-server kubeadm-update-node]# ls

tasks vars

[root@ansible-server kubeadm-update-node]# vim vars/main.yml

KUBEADM_VERSION: 1.20.15

[root@ansible-server kubeadm-update-node]# vim tasks/upgrade_node01.yml

- name: drain node01
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf drain k8s-node01.example.local --delete-emptydir-data --force --ignore-daemonsets
  when:
    - ansible_hostname=="k8s-master01"
- name: install CentOS or Rocky kubeadm for node
  yum:
    name: kubelet-{{ KUBEADM_VERSION }},kubeadm-{{ KUBEADM_VERSION }}
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - ansible_hostname=="k8s-node01"
- name: install Ubuntu kubeadm for node
  apt:
    name: kubelet={{ KUBEADM_VERSION }}-00,kubeadm={{ KUBEADM_VERSION }}-00
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - ansible_hostname=="k8s-node01"
- name: restart kubelet
  systemd:
    name: kubelet
    state: restarted
    daemon_reload: yes
  when:
    - ansible_hostname=="k8s-node01"
- name: get calico container
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf get pod -n kube-system -o wide|grep calico |grep node01 |tail -n1|awk -F " " '{print $1}'
  register: CALICO_CONTAINER
  when:
    - ansible_hostname=="k8s-master01"
- name: delete calico container
  shell: |
    kubectl --kubeconfig=/etc/kubernetes/admin.conf delete pod {{ CALICO_CONTAINER.stdout }} -n kube-system
    sleep 60s
  when:
    - ansible_hostname=="k8s-master01"
- name: uncordon node01
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf uncordon k8s-node01.example.local
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server kubeadm-update-node]# vim tasks/upgrade_node02.yml

- name: drain node02
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf drain k8s-node02.example.local --delete-emptydir-data --force --ignore-daemonsets
  when:
    - ansible_hostname=="k8s-master01"
- name: install CentOS or Rocky kubeadm for node
  yum:
    name: kubelet-{{ KUBEADM_VERSION }},kubeadm-{{ KUBEADM_VERSION }}
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - ansible_hostname=="k8s-node02"
- name: install Ubuntu kubeadm for node
  apt:
    name: kubelet={{ KUBEADM_VERSION }}-00,kubeadm={{ KUBEADM_VERSION }}-00
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - ansible_hostname=="k8s-node02"
- name: restart kubelet
  systemd:
    name: kubelet
    state: restarted
    daemon_reload: yes
  when:
    - ansible_hostname=="k8s-node02"
- name: get calico container
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf get pod -n kube-system -o wide|grep calico |grep node02 |tail -n1|awk -F " " '{print $1}'
  register: CALICO_CONTAINER
  when:
    - ansible_hostname=="k8s-master01"
- name: delete calico container
  shell: |
    kubectl --kubeconfig=/etc/kubernetes/admin.conf delete pod {{ CALICO_CONTAINER.stdout }} -n kube-system
    sleep 60s
  when:
    - ansible_hostname=="k8s-master01"
- name: uncordon node02
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf uncordon k8s-node02.example.local
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server kubeadm-update-node]# vim tasks/upgrade_node03.yml

- name: drain node03
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf drain k8s-node03.example.local --delete-emptydir-data --force --ignore-daemonsets
  when:
    - ansible_hostname=="k8s-master01"
- name: install CentOS or Rocky kubeadm for node
  yum:
    name: kubelet-{{ KUBEADM_VERSION }},kubeadm-{{ KUBEADM_VERSION }}
  when:
    - (ansible_distribution=="CentOS" or ansible_distribution=="Rocky")
    - ansible_hostname=="k8s-node03"
- name: install Ubuntu kubeadm for node
  apt:
    name: kubelet={{ KUBEADM_VERSION }}-00,kubeadm={{ KUBEADM_VERSION }}-00
    force: yes
  when:
    - ansible_distribution=="Ubuntu"
    - ansible_hostname=="k8s-node03"
- name: restart kubelet
  systemd:
    name: kubelet
    state: restarted
    daemon_reload: yes
  when:
    - ansible_hostname=="k8s-node03"
- name: get calico container
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf get pod -n kube-system -o wide|grep calico |grep node03 |tail -n1|awk -F " " '{print $1}'
  register: CALICO_CONTAINER
  when:
    - ansible_hostname=="k8s-master01"
- name: delete calico container
  shell: |
    kubectl --kubeconfig=/etc/kubernetes/admin.conf delete pod {{ CALICO_CONTAINER.stdout }} -n kube-system
    sleep 60s
  when:
    - ansible_hostname=="k8s-master01"
- name: uncordon node03
  shell:
    cmd: kubectl --kubeconfig=/etc/kubernetes/admin.conf uncordon k8s-node03.example.local
  when:
    - ansible_hostname=="k8s-master01"

[root@ansible-server kubeadm-update-node]# vim tasks/main.yml

- include_tasks: upgrade_node01.yml
- include_tasks: upgrade_node02.yml
- include_tasks: upgrade_node03.yml

[root@ansible-server kubeadm-update-node]# cd ../../

[root@ansible-server ansible]# tree roles/kubeadm-update-node/

roles/kubeadm-update-node/
├── tasks
│   ├── main.yml
│   ├── upgrade_node01.yml
│   ├── upgrade_node02.yml
│   └── upgrade_node03.yml
└── vars
    └── main.yml

2 directories, 5 files

[root@ansible-server ansible]# vim kubeadm_update_node_role.yml

---
- hosts: k8s_cluster
  roles:
    - role: kubeadm-update-node

[root@ansible-server ansible]# ansible-playbook kubeadm_update_node_role.yml

19.3.2 Verify the nodes

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane,master 19h v1.20.15

k8s-master02.example.local Ready control-plane,master 19h v1.20.15

k8s-master03.example.local Ready control-plane,master 19h v1.20.15

k8s-node01.example.local Ready <none> 19h v1.20.15

k8s-node02.example.local Ready <none> 19h v1.20.15

k8s-node03.example.local Ready <none> 19h v1.20.15

19.4 metrics

19.4.1 Upgrade metrics

[root@ansible-server ansible]# mkdir -p roles/metrics-update/{files,vars,tasks}

[root@ansible-server ansible]# cd roles/metrics-update/

[root@ansible-server metrics-update]# ls

files tasks vars

[root@ansible-server metrics-update]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml -P files/

[root@ansible-server metrics-update]# vim vars/main.yml

HARBOR_DOMAIN: harbor.raymonds.cc

[root@ansible-server metrics-update]# vim files/components.yaml

...
spec:
  containers:
  - args:
    - --cert-dir=/tmp
    - --secure-port=4443
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --metric-resolution=15s
    - --kubelet-insecure-tls # add this line
...

[root@ansible-server metrics-update]# vim tasks/metrics_file.yml

- name: copy components.yaml file
  copy:
    src: components.yaml
    dest: /root/components.yaml

[root@ansible-server metrics-update]# vim tasks/config.yml

- name: Modify the "image:" line
  replace:
    path: /root/components.yaml
    regexp: '(.*image:) k8s.gcr.io/metrics-server(/.*)'
    replace: '\1 {{ HARBOR_DOMAIN }}/google_containers\2'

[root@ansible-server metrics-update]# vim tasks/download_images.yml

- name: get metrics version
  shell:
    chdir: /root
    cmd: awk -F "/" '/image:/{print $NF}' components.yaml
  register: METRICS_VERSION
- name: download metrics image
  shell: |
    {% for i in METRICS_VERSION.stdout_lines %}
    docker pull registry.aliyuncs.com/google_containers/{{ i }}
    docker tag registry.aliyuncs.com/google_containers/{{ i }} {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    docker rmi registry.aliyuncs.com/google_containers/{{ i }}
    docker push {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    {% endfor %}

[root@ansible-server metrics-update]# vim tasks/install_metrics.yml

- name: install metrics
  shell:
    chdir: /root
    cmd: "kubectl --kubeconfig=/etc/kubernetes/admin.conf apply -f components.yaml"

[root@ansible-server metrics-update]# vim tasks/main.yml

- include_tasks: metrics_file.yml
- include_tasks: config.yml
- include_tasks: download_images.yml
- include_tasks: install_metrics.yml

[root@ansible-server metrics-update]# cd ../../

[root@ansible-server ansible]# tree roles/metrics-update/

roles/metrics-update/
├── files
│   └── components.yaml
├── tasks
│   ├── config.yml
│   ├── download_images.yml
│   ├── install_metrics.yml
│   ├── main.yml
│   └── metrics_file.yml
└── vars
    └── main.yml

3 directories, 7 files

[root@ansible-server ansible]# vim metrics_update_role.yml

---
- hosts: master01
  roles:
    - role: metrics-update

[root@ansible-server ansible]# ansible-playbook metrics_update_role.yml

19.4.2 Verify metrics

[root@k8s-master01 ~]# kubectl get pod -A|grep metrics

kube-system metrics-server-5b7c76b46c-nmqs9 1/1 Running 0 31s

[root@k8s-master01 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01 179m 8% 1426Mi 37%

k8s-master02.example.local 137m 6% 1240Mi 32%

k8s-master03.example.local 147m 7% 1299Mi 34%

k8s-node01.example.local 84m 4% 883Mi 23%

k8s-node02.example.local 73m 3% 898Mi 23%

k8s-node03.example.local 72m 3% 915Mi 23%

19.5 dashboard

19.5.1 Upgrade dashboard

[root@ansible-server ansible]# mkdir -p roles/dashboard-update/{files,templates,vars,tasks}

[root@ansible-server ansible]# cd roles/dashboard-update/

[root@ansible-server dashboard-update]# ls

files tasks templates vars

[root@ansible-server dashboard-update]# vim files/admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

[root@ansible-server dashboard-update]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml -O templates/recommended.yaml.j2

[root@ansible-server dashboard-update]# vim templates/recommended.yaml.j2

...
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # add this line
  ports:
    - port: 443
      targetPort: 8443
      nodePort: {{ NODEPORT }} # add this line
  selector:
    k8s-app: kubernetes-dashboard
...

[root@ansible-server dashboard-update]# vim vars/main.yml

HARBOR_DOMAIN: harbor.raymonds.cc

NODEPORT: 30005

[root@ansible-server dashboard-update]# vim tasks/dashboard_file.yml

- name: copy recommended.yaml file
  template:
    src: recommended.yaml.j2
    dest: /root/recommended.yaml
- name: copy admin.yaml file
  copy:
    src: admin.yaml
    dest: /root/admin.yaml

[root@ansible-server dashboard-update]# vim tasks/config.yml

- name: Modify the "image:" line
  replace:
    path: /root/recommended.yaml
    regexp: '(.*image:) kubernetesui(/.*)'
    replace: '\1 {{ HARBOR_DOMAIN }}/google_containers\2'
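To see what the pattern in the replace task does to a single line, the same capture-group substitution can be tried locally with sed. This is only a sketch: the `rewrite_image` helper and the sample input line are illustrative assumptions, while the registry host is the HARBOR_DOMAIN value from vars/main.yml.

```shell
# Hypothetical helper: apply the same capture-group rewrite as the
# Ansible "replace" task, but to stdin, using sed -E.
rewrite_image() {
  harbor_domain=$1
  sed -E "s#(.*image:) kubernetesui(/.*)#\1 ${harbor_domain}/google_containers\2#"
}

# Illustrative input line in the style of recommended.yaml.
printf '          image: kubernetesui/dashboard:v2.4.0\n' | rewrite_image harbor.raymonds.cc
```

Group 1 keeps the indentation and the `image:` key, group 2 keeps everything from the slash onward, so only the `kubernetesui` registry prefix is swapped for the Harbor project path.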

[root@ansible-server dashboard-update]# vim tasks/download_images.yml

- name: get dashboard version
  shell:
    chdir: /root
    cmd: awk -F "/" '/image:/{print $NF}' recommended.yaml
  register: DASHBOARD_VERSION
- name: download dashboard image
  shell: |
    {% for i in DASHBOARD_VERSION.stdout_lines %}
    docker pull kubernetesui/{{ i }}
    docker tag kubernetesui/{{ i }} {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    docker rmi kubernetesui/{{ i }}
    docker push {{ HARBOR_DOMAIN }}/google_containers/{{ i }}
    {% endfor %}
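The first task relies on awk splitting each `image:` line on "/" and printing the last field, which leaves just the `name:tag` part that the docker loop then iterates over. A small local sketch of that extraction follows; the `list_image_tags` helper and the sample file contents are assumptions made for illustration, not the real recommended.yaml.

```shell
# Hypothetical helper: print the last "/"-separated field of every
# line containing "image:", i.e. the "name:tag" part of each image.
list_image_tags() {
  awk -F "/" '/image:/{print $NF}' "$1"
}

# Illustrative sample standing in for /root/recommended.yaml after the
# "image:" lines have been pointed at the Harbor registry.
sample=$(mktemp)
cat > "$sample" <<'EOF'
          image: harbor.raymonds.cc/google_containers/dashboard:v2.4.0
          imagePullPolicy: Always
          image: harbor.raymonds.cc/google_containers/metrics-scraper:v1.0.7
EOF

list_image_tags "$sample"
rm -f "$sample"
```

Because only the last field is kept, the result is the same whether the line still names `kubernetesui` or has already been rewritten; the `imagePullPolicy:` line is skipped since it does not contain the literal text `image:`.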

[root@ansible-server dashboard-update]# vim tasks/install_dashboard.yml

- name: install dashboard
  shell:
    chdir: /root
    cmd: "kubectl --kubeconfig=/etc/kubernetes/admin.conf apply -f recommended.yaml -f admin.yaml"

[root@ansible-server dashboard-update]# vim tasks/main.yml

- include: dashboard_file.yml
- include: config.yml
- include: download_images.yml
- include: install_dashboard.yml

[root@ansible-server dashboard-update]# cd ../../

[root@ansible-server ansible]# tree roles/dashboard-update/

roles/dashboard-update/
├── files
│   └── admin.yaml
├── tasks
│   ├── config.yml
│   ├── dashboard_file.yml
│   ├── download_images.yml
│   ├── install_dashboard.yml
│   └── main.yml
├── templates
│   └── recommended.yaml.j2
└── vars
    └── main.yml

4 directories, 8 files

[root@ansible-server ansible]# vim dashboard_update_role.yml

---
- hosts: master01
  roles:
    - role: dashboard-update

[root@ansible-server ansible]# ansible-playbook dashboard_update_role.yml

19.5.2 Logging in to the dashboard

https://172.31.3.101:30005

[root@k8s-master01 ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-mlzc8

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 8e8d6838-f344-4701-85d3-21e39205a77c

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjZMdGRxbV9rX1hsQ0dtT2J1dhlDd1lwQVJORnpKY21Yc0JKYlVXaGlfaG8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW1semm4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI4ZThkNjgzOC1mMzQ0LTQ3MDetoDVkMy0yMWUzOTIwNWE3N2MiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.dFe6Y-rRNEYWvVK-VNphz4N_tkCNHCG_uRt9iNhdCmtYcD5yy21iYcDjAWMVvmFuyn0QDnUlquPyl3WoASVc91BOKWNgdNkOrFEFKoP32YdgaurnRBkXMDgkAUJXQT-2vekO56UiQtoxK87DVSmFksTAFXlc7zw1VJRE1g10ZiNVTcl-omOiMPvdk5RIjs-Uk859p70_O1oC8Ep-JzBYWCilX2ymNUNNeh4lyt1Fo8Li4N0JLwzQLJgfHfjoQwpd4Irj2agMQ-BW4xT70HsJW4cUt1sJ29cnO1RfhxM8-w-6wBPnGwkJTSre4GfMrjnJoVFN2cbjQg4N0ud_MQMXcw


Original article: https://blog.csdn.net/qq_25599925/article/details/122628909

docker – kubectl: Kubernetes timeout with minikube

I have installed minikube and started its built-in Kubernetes cluster:

$ minikube start

Starting local Kubernetes cluster...

Kubernetes is available at https://192.168.99.100:443.

Kubectl is now configured to use the cluster.

I have also installed kubectl:

$ kubectl version

Client Version: version.Info{Major:"1",Minor:"3",GitVersion:"v1.3.0",GitCommit:"283137936a498aed572ee22af6774b6fb6e9fd94",GitTreeState:"clean",BuildDate:"2016-07-01T19:26:38Z",GoVersion:"go1.6.2",Compiler:"gc",Platform:"linux/amd64"}

However, I cannot get kubectl to talk to the running Kubernetes cluster:

$ kubectl get nodes

Unable to connect to the server: net/http: TLS handshake timeout

Edit:

$ minikube logs

E0712 19:02:08.767815 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

E0712 19:02:08.767875 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"gcr.io/google_containers/pause-amd64:3.0\""

E0712 19:02:23.767380 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

E0712 19:02:23.767464 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"gcr.io/google_containers/pause-amd64:3.0\""

E0712 19:02:36.766696 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

E0712 19:02:36.766760 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"gcr.io/google_containers/pause-amd64:3.0\""

E0712 19:02:51.767621 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

E0712 19:02:51.767672 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"gcr.io/google_containers/pause-amd64:3.0\""

E0712 19:03:02.766548 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

E0712 19:03:02.766609 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"gcr.io/google_containers/pause-amd64:3.0\""

E0712 19:03:16.766831 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

E0712 19:03:16.766904 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"gcr.io/google_containers/pause-amd64:3.0\""

E0712 19:04:15.829223 1257 docker_manager.go:1955] Failed to create pod infra container: ErrImagePull; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": image pull Failed for gcr.io/google_containers/pause-amd64:3.0,this may be because there are no credentials on this request. details: (Error response from daemon: Get https://gcr.io/v1/_ping: dial tcp 74.125.28.82:443: I/O timeout)

E0712 19:04:15.829326 1257 pod_workers.go:183] Error syncing pod 48abed82af93bb0b941173334110923f,skipping: Failed to "StartContainer" for "POD" with ErrImagePull: "image pull Failed for gcr.io/google_containers/pause-amd64:3.0,this may be because there are no credentials on this request. details: (Error response from daemon: Get https://gcr.io/v1/_ping: dial tcp 74.125.28.82:443: I/O timeout)"

E0712 19:04:31.767536 1257 docker_manager.go:1955] Failed to create pod infra container: ImagePullBackOff; Skipping pod "kube-addon-manager-minikubevm_kube-system(48abed82af93bb0b941173334110923f)": Back-off pulling image "gcr.io/google_containers/pause-amd64:3.0"

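The logs show the Docker daemon inside the VM timing out while pulling gcr.io/google_containers/pause-amd64:3.0. If the host sits behind a proxy, two things must hold:

a. The Docker daemon running in the VM must be able to reach the Internet through the proxy.

b. kubectl running on the host must be able to reach the VM without going through the proxy.

Using the default kubectl example:

1. Pass the proxy settings to the VM that minikube creates (this ensures the Docker daemon in the VM can reach the Internet):

minikube start --vm-driver="kvm" --docker-env="http_proxy=xxx" --docker-env="https_proxy=yyy"

Note: replace xxx and yyy with your proxy settings.

2. Get the IP address the VM was assigned at startup:

minikube ip

Note: do this every time minikube is set up, because the address can change.

3. Make sure kubectl can talk to this VM without going through the proxy:

export no_proxy="127.0.0.1,[minikube_ip]"

4. Now start a pod and test it:

kubectl run hello-minikube --image=gcr.io/google_containers/echoserver:1.4 --port=8080

kubectl expose deployment hello-minikube --type=NodePort

kubectl get pod

curl $(minikube service hello-minikube --url)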
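Since the VM address can change between runs, the no_proxy value from the steps above is easier to rebuild than to edit by hand. The following is a minimal sketch, assuming a hypothetical `build_no_proxy` helper; the address used in the demonstration call is the one printed by minikube start earlier in the question.

```shell
# Hypothetical helper: build a no_proxy value that always contains
# 127.0.0.1 plus the given VM address, keeping any existing entries.
build_no_proxy() {
  vm_ip=$1
  existing=${2:-}
  if [ -n "$existing" ]; then
    printf '%s,127.0.0.1,%s\n' "$existing" "$vm_ip"
  else
    printf '127.0.0.1,%s\n' "$vm_ip"
  fi
}

# Usage on a host with minikube installed (not executed here):
#   export no_proxy="$(build_no_proxy "$(minikube ip)" "${no_proxy:-}")"
build_no_proxy 192.168.99.100
```

Keeping the existing entries matters on hosts where no_proxy already lists internal domains; overwriting it would silently route that traffic through the proxy.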

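Jenkins deployed in k8s: how to run kubectl scripts with the Kubernetes CLI plugin's withKubeConfig

withKubeConfig works with plain credentials, so you need to create one (as in the original screenshot); otherwise Jenkins cannot connect to k8s. It took me a long time to work this out, and in the end the upstream documentation was the most reliable source. The credential simply imports the k8s cluster's kubeconfig file. References: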

https://jenkins.io/doc/pipeline/steps/kubernetes-cli/#kubernetes-cli-plugin

https://wiki.jenkins.io/display/JENKINS/Plain+Credentials+Plugin

https://github.com/jenkinsci/kubernetes-cli-plugin/blob/master/README.md

Managing a Kubernetes cluster with kubectl

[root@master ~]# kubectl get nodes    # list the cluster nodes

NAME      STATUS    AGE
node1     Ready     25m
node2     Ready     19m

[root@master ~]# kubectl version    # show the client and server versions

Client Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.2", GitCommit:"269f928217957e7126dc87e6adfa82242bfe5b1e", GitTreeState:"clean", BuildDate:"2017-07-03T15:31:10Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.2", GitCommit:"269f928217957e7126dc87e6adfa82242bfe5b1e", GitTreeState:"clean", BuildDate:"2017-07-03T15:31:10Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

[root@master ~]# kubectl run nginx --image=docker.io/nginx --replicas=1 --port=9000

deployment "nginx" created

[root@master ~]# kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx 1 1 1 0 15s

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-2187705812-8r0h4 1/1 Running 0 1h

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-2187705812-8r0h4 1/1 Running 0 1h 10.255.4.2 node1

To delete a container (pod):

[root@master ~]# kubectl delete pod nginx-2187705812-8r0h4

pod "nginx-2187705812-8r0h4" deleted

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-2187705812-6dn2r 0/1 ContainerCreating 0 4s

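A pod is still there after the deletion because the --replicas=1 option given when the deployment was created takes effect: the deployment immediately starts a replacement. To remove it for good, delete the deployment itself.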

[root@master ~]# kubectl delete deployment nginx

deployment "nginx" deleted

Creating resources from a YAML file:

[root@master ~]# kubectl create -f mysql-deployment.yaml

deployment "mysql" created

[root@master ~]# kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

mysql 1 1 1 1 9s

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mysql-2261771434-r8td1 1/1 Running 0 16s 10.255.4.2 node1

Check the mysql Docker container on node1:

[root@node1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e33797549b8e docker.io/mysql/mysql-server "/entrypoint.sh my..." 4 minutes ago Up 4 minutes (healthy) k8s_mysql.31ec27ee_mysql-2261771434-r8td1_default_351da1d4-f082-11e8-bbf2-000c297d60e3_089418b7
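The contents of mysql-deployment.yaml are not listed above. A minimal sketch of what such a manifest might look like is given here; every value is an assumption except the deployment name and the image, which match the kubectl and docker ps output. The MANIFEST variable is a hypothetical override for the output path.

```shell
# Write a hypothetical mysql-deployment.yaml; all values are assumptions
# except the name "mysql" and the image, which match the output above.
manifest=${MANIFEST:-mysql-deployment.yaml}
cat > "$manifest" <<'EOF'
apiVersion: apps/v1        # the v1.5 cluster shown here would need extensions/v1beta1
kind: Deployment
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: docker.io/mysql/mysql-server
        ports:
        - containerPort: 3306
EOF

# Then, on a machine with cluster access (not executed here):
#   kubectl create -f mysql-deployment.yaml
```

The `selector` must match the pod template labels; on current clusters the API server rejects an apps/v1 Deployment without it.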

Other kubectl subcommands:

logs      fetch the logs of a container in a pod
exec      run a command in a container
cp        copy files out of or into a container
attach    attach to a running container

logs:

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-2261771434-r8td1 1/1 Running 0 18m

[root@master ~]# kubectl logs mysql-2261771434-r8td1

[Entrypoint] MySQL Docker Image 5.7.20-1.1.2

[Entrypoint] Initializing database

[Entrypoint] Database initialized

Warning: Unable to load '/usr/share/zoneinfo/iso3166.tab' as time zone. Skipping it.

Warning: Unable to load '/usr/share/zoneinfo/zone.tab' as time zone. Skipping it.

Warning: Unable to load '/usr/share/zoneinfo/zone1970.tab' as time zone. Skipping it.

[Entrypoint] ignoring /docker-entrypoint-initdb.d/*

[Entrypoint] Server shut down

[Entrypoint] MySQL init process done. Ready for start up.

[Entrypoint] Starting MySQL 5.7.20-1.1.2

exec:

[root@master ~]# kubectl exec mysql-2261771434-r8td1 ls

[root@master ~]# kubectl exec -it mysql-2261771434-r8td1 bash

bash-4.2#

cp:

[root@master ~]# kubectl cp mysql-2261771434-r8td1:/tmp/hosts /etc/hosts

error: unexpected EOF

[root@master ~]# kubectl cp --help

Examples:

# !!!Important Note!!!

# Requires that the 'tar' binary is present in your container

# image. If 'tar' is not present, 'kubectl cp' will fail.

In other words, kubectl cp only works when the container has the tar command; without it the copy fails. Install tar inside the container:

[root@master ~]# kubectl exec -it mysql-2261771434-r8td1 bash

bash-4.2# yum install tar net-tools -y

bash-4.2# echo 'this is test' > /tmp/test.txt

Test again, first copying the file out of the container:

[root@master ~]# kubectl cp mysql-2261771434-r8td1:/tmp/test.txt /opt/test.txt

[root@master ~]# more /opt/test.txt

this is test

Copy it back in:

[root@master ~]# echo "this is out" >> /opt/test.txt

[root@master ~]# kubectl cp /opt/test.txt mysql-2261771434-r8td1:/tmp/test.txt

[root@master ~]# kubectl exec -it mysql-2261771434-r8td1 bash

bash-4.2# cat /tmp/test.txt

this is test

this is out
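When installing tar in the container is not an option, a single file can usually be streamed out through kubectl exec instead of kubectl cp. The sketch below assumes the container has `cat`; the `copy_from_pod` helper and the KUBECTL override variable are hypothetical names introduced for illustration.

```shell
# Hypothetical helper: stream one file out of a pod through `cat`,
# avoiding the tar dependency of `kubectl cp`.
# KUBECTL can be overridden (for example in tests); it defaults to kubectl.
copy_from_pod() {
  pod=$1
  src=$2
  dest=$3
  "${KUBECTL:-kubectl}" exec "$pod" -- cat "$src" > "$dest"
}

# Usage on a machine with cluster access (not executed here):
#   copy_from_pod mysql-2261771434-r8td1 /tmp/test.txt /opt/test.txt
```

No -t flag is passed to exec, so no terminal is allocated and the file contents arrive unmodified on stdout.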

kubectl attach:

Attaches to a container in a pod and streams its output continuously in real time, similar to tail -f.
