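This article shares notes on "ubuntu-docker: from getting started to giving up", starting with part 1, installing Docker. It also covers: 1.05 Installing Docker; 2019-09-19 Installing Docker; Installing CentOS 7 and Docker 1.12.3; and Installing Docker on CentOS 7.1 with yum.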

Contents:

- ubuntu-docker: from getting started to giving up (1): installing Docker (Ubuntu Docker installation tutorial)

- 1.05 Installing Docker

- 2019-09-19 Installing Docker

- Installing CentOS 7 and Docker 1.12.3

- Installing Docker on CentOS 7.1 with yum

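ubuntu-docker: from getting started to giving up (1): installing Docker (Ubuntu Docker installation tutorial)

Based on Ubuntu 14.04.

For installation, refer to the official site: https://docs.docker.com

1. First check whether Docker has been installed before; if it has, remove it: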

sudo apt-get remove docker docker-engine docker.io

2. Update the apt package index

sudo apt-get update

sudo apt-get install linux-image-extra-$(uname -r) linux-image-extra-virtual

sudo apt-get update

3. Install the packages that let apt use repositories over HTTPS

sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    software-properties-common

4. Add the GPG key and check its fingerprint

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo apt-key fingerprint 0EBFCD88

5. Add the Docker repository and update

sudo add-apt-repository \
    "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
    $(lsb_release -cs) \
    stable"

sudo apt-get update

6. Install Docker

sudo apt-get install docker-ce

This installs the latest version by default. To install a specific version, first list the available versions:

apt-cache madison docker-ce

Then install the version you want:

sudo apt-get install docker-ce=<VERSION>

Docker is now installed. Simple, isn't it?

Let's run a simple container:

sudo docker run hello-world
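To pin a version without copying it by hand, the version column of `apt-cache madison docker-ce` can be extracted with a small helper. This is a minimal sketch, not part of the original tutorial: the function name `latest_madison_version` is hypothetical, and it assumes the usual `package | version | source` column layout with the newest version listed first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `apt-cache madison` output on stdin and print
# the version column (second '|'-separated field) of the first entry.
latest_madison_version() {
    awk -F'|' 'NF >= 2 { gsub(/[ \t]/, "", $2); print $2; exit }'
}

# Usage (assumes the Docker apt repository from step 5 is configured):
#   version="$(apt-cache madison docker-ce | latest_madison_version)"
#   sudo apt-get install -y "docker-ce=${version}"
```

Checking the printed version before installing is still a good idea, since the ordering of the madison output is an assumption here.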

1.05 Installing Docker

Commands:

- docker inspect: get the metadata of a container or image

- systemctl enable docker.service: start Docker at boot

- systemctl disable docker.service: do not start Docker at boot

- /var/lib/docker: Docker's default working directory

- docker run reference: https://www.runoob.com/docker/docker-run-command.html

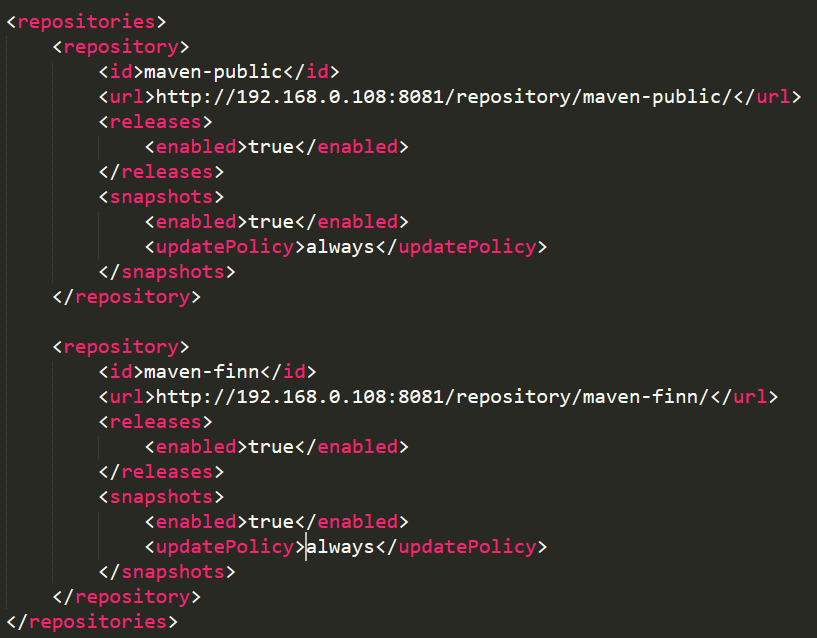

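1. Setting up a private Maven repository with Docker

1. Pull the image: sudo docker pull sonatype/nexus3

2. List the images: docker images

3. Create the directory to mount for Nexus data: mkdir -p /usr/local/docker/data/nexus-data && chown -R 200 /usr/local/docker/data/nexus-data

4. Start the container: docker run -d -p 8081:8081 -p 8082:8082 --restart=always --name nexus -v /usr/local/docker/data/nexus-data:/nexus-data sonatype/nexus3

5. Open the login page at {host IP}:8081, for example 192.168.0.110:8081

6. Log in with the user name admin. The initial password is in the file admin.password under /usr/local/docker/data/nexus-data; open it with vi admin.password and copy the password. After logging in you have to reset the password, and admin.password is deleted automatically once the password has been reset.

10. Configure the repository in Maven's settings.xml

11. Aliyun mirror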

2. Setting up MySQL with Docker

1. Pull the MySQL image: docker pull mysql:latest

2. List local images: docker images

3. Run the container:

sudo docker run -p 3307:3306 --restart=always --privileged=true --name mysql-finn -e MYSQL_ROOT_PASSWORD="root123" \
    -v /docker/mysql/data:/var/lib/mysql -v /docker/mysql/conf:/etc/mysql/conf.d -v /docker/mysql/logs:/var/log/mysql \
    -d mysql:latest --character-set-server=utf8mb4 --collation-server=utf8mb4_unicode_ci

4. Enter the container: docker exec -it mysql-finn /bin/bash

5. Log in to MySQL with mysql -u root -p, then run:

alter user 'root'@'%' identified with mysql_native_password by 'root123';

6. Add a user for remote login:

CREATE USER 'finn'@'%' IDENTIFIED WITH mysql_native_password BY 'finn123!';

GRANT ALL PRIVILEGES ON *.* TO 'finn'@'%';

7. You can now connect with Navicat on host port 3307 (the firewall must be turned off or the port opened in it).

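3. Installing Redis with Docker

Run these steps in a root shell (for example after sudo -i): the paths are under /root, and cd is a shell builtin, so it cannot be run through sudo.

1. Search for available versions: docker search redis

2. Pull the latest image: sudo docker pull redis

3. List local images: sudo docker images

4. Create the Redis configuration file:

4.1. Create the directories:

sudo mkdir -p /root/Downloads/redis/conf

sudo mkdir -p /root/Downloads/redis/data

4.2. Change into the conf directory and download redis.conf:

cd /root/Downloads/redis/conf

sudo wget http://download.redis.io/redis-stable/redis.conf

5. Change into the redis directory and start the service:

cd /root/Downloads/redis

sudo docker run -p 6379:6379 --privileged=true --name redis -v $PWD/conf/redis.conf:/etc/redis/redis.conf -v $PWD/data:/data -d redis redis-server /etc/redis/redis.conf --appendonly yes

6. Edit the configuration file, then restart the container so the changes take effect:

- Change bind 127.0.0.1 to #bind 127.0.0.1

- Change protected-mode yes to protected-mode no

- Remove the # in front of requirepass and set a password: requirepass redis123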
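The three configuration edits above can also be scripted. The following is a sketch under stated assumptions: the function name `patch_redis_conf` is hypothetical, the patterns assume the stock `redis.conf` lines (`bind 127.0.0.1 ...`, `protected-mode yes`, `# requirepass foobared`), and the password must not contain `/` or `&`, because it is substituted into a `sed` expression.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the three redis.conf edits described above.
#   $1 = path to redis.conf
#   $2 = password to set (must not contain '/' or '&')
patch_redis_conf() {
    local conf="$1" password="$2" tmp
    tmp="$(mktemp)" || return 1
    if sed -e 's/^bind 127\.0\.0\.1/#bind 127.0.0.1/' \
           -e 's/^protected-mode yes/protected-mode no/' \
           -e "s/^# *requirepass .*/requirepass ${password}/" \
           "$conf" > "$tmp"; then
        # Write back with cat so the original file (and its inode) is kept;
        # a file bind-mounted into a container would not see a replaced file.
        cat "$tmp" > "$conf"
    fi
    rm -f "$tmp"
}

# Usage:
#   patch_redis_conf /root/Downloads/redis/conf/redis.conf redis123
#   docker restart redis
```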

4. Installing Jenkins with Docker

1. Pull the latest image: sudo docker pull jenkins/jenkins

2. Create the directory: sudo mkdir -p /apps/jenkins

3. Set ownership of the directory: sudo chown -R 1000:1000 /apps/jenkins

4. Create the container: docker run -itd -p 8080:8080 -p 50000:50000 --name jenkins --privileged=true -v /apps/jenkins:/var/jenkins_home jenkins/jenkins

5. Switch the update mirror: vi /apps/jenkins/hudson.model.UpdateCenter.xml and replace the URL with https://mirrors.tuna.tsinghua.edu.cn/jenkins/updates/update-center.json

6. Show the initial password: cat /apps/jenkins/secrets/initialAdminPassword

7. Open <ip>:<port> and choose not to install plugins

5. Installing Java

1. yum -y install java-1.8.0-openjdk*

2. Configure the environment variables: vi /etc/profile and add the following:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

3. Apply the configuration: source /etc/profile

4. Verify with: java, javac, java -version

6. Installing Maven

1. Download: wget https://mirror.bit.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz

2. Unpack: tar -zxvf apache-maven-3.6.3-bin.tar.gz

3. Configure: edit settings.xml and change the location where dependencies are stored

4. Environment variables: vi /etc/profile and add the following:

export MAVEN_HOME=/apps/maven/apache-maven-3.6.3

export PATH=${PATH}:${MAVEN_HOME}/bin

5. Apply the configuration: source /etc/profile

6. Verify: mvn -v

7. Installing Portainer, a Docker management UI

1. Pull the image and create the container: sudo docker run -d -p 1001:9000 --restart=always --name portainer -v /var/run/docker.sock:/var/run/docker.sock --privileged=true portainer/portainer

2. Open the port: sudo firewall-cmd --zone=public --add-port=1001/tcp --permanent && sudo firewall-cmd --reload

3. Open ip:1001 in a browser

2019-09-19 Installing Docker

1. References

https://www.cnblogs.com/coder-lzh/p/11019558.html

https://www.cnblogs.com/Minlwen/p/10491350.html

2. If you see the following error message

Starting "default"...

(default) Check network to re-create if needed...

(default) Windows might ask for the permission to create a network adapter. Sometimes, such confirmation window is minimized in the taskbar.

(default) Creating a new host-only adapter produced an error: C:\Program Files\Oracle\VirtualBox\VBoxManage.exe hostonlyif create failed:

(default) 0%...

(default) Progress state: E_FAIL

(default) VBoxManage.exe: error: Failed to create the host-only adapter

(default) VBoxManage.exe: error: Could not find Host Interface Networking driver! Please reinstall

(default) VBoxManage.exe: error: Details: code E_FAIL (0x80004005), component HostNetworkInterfaceWrap, interface IHostNetworkInterface

(default) VBoxManage.exe: error: Context: "enum RTEXITCODE __cdecl handleCreate(struct HandlerArg *)" at line 94 of file VBoxManageHostonly.cpp

(default)

(default) This is a known VirtualBox bug. Let's try to recover anyway...

Updating Oracle VM VirtualBox to the latest version fixes it.

Installing CentOS 7 and Docker 1.12.3

1. Preparing the environment

The examples in this article use four machines with the following hostnames and IP addresses:

c1 -> 10.0.0.31

c2 -> 10.0.0.32

c3 -> 10.0.0.33

c4 -> 10.0.0.34

The hosts file is the same on all four machines; taking c1 as the example:

[root@c1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.31 c1

10.0.0.32 c2

10.0.0.33 c3

10.0.0.34 c4

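1.1 Installing CentOS 7 (64-bit)

Taking the installation of c1 as the example: choose English as the installation language, then click Continue.

Under LOCALIZATION click DATE & TIME, select the Asia/Shanghai time zone, and click Done.

Under SYSTEM click INSTALLATION DESTINATION, select your disk, choose the option I will configure partitioning below it, and click Done so that we can define the disk and partitions ourselves.

Click Click here to create them automatically, and the installer creates the recommended partition layout for us.

Delete the /home mount point and give its space to the / mount point, with file system type xfs and using the whole disk, then click Update Settings. This ensures that software installed later has enough space. Finally click the Done button in the upper-left corner.

XFS became the default file system in CentOS 7.0. ext4 is stable, but it can hold only about 4 billion files, and a single file can be at most 16 TB (with a 4K block size). XFS manages space with 64-bit addressing, so a file system can scale to the exabyte level.

On production servers, always put data on its own partition so that the data stays intact if the system has problems. For example, create a separate /data partition dedicated to data.

In the dialog that appears, click Accept Changes.

Click the location shown in the figure below to set the machine's Host Name; here the machine being installed is named c1.

Finally click Begin Installation in the lower-right corner. During installation you can set the root password and create other users.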

1.2 Network configuration

The following uses c1 as the example:

[root@c1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

BOOTPROTO=static # use a static IP address

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

NAME=eth0

UUID=e57c6a58-1951-4cfa-b3d1-cf25c4cdebdd

DEVICE=eth0

ONBOOT=yes # bring the connection up automatically at boot

IPADDR0=192.168.0.31 # IP address

PREFIX0=24 # subnet mask, as a prefix length

GATEWAY0=192.168.0.1 # gateway

DNS1=192.168.0.1 # DNS server

DNS2=8.8.8.8

Restart the network:

[root@c1 ~]# service network restart
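The ifcfg file expresses the subnet mask as a prefix length (24) rather than in dotted form. As a quick illustration of how the two relate, here is a minimal sketch; the function name `prefix_to_netmask` is hypothetical and not part of any CentOS tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert an IPv4 prefix length (0-32) to a dotted netmask.
prefix_to_netmask() {
    local prefix="$1" mask="" octet i
    for i in 1 2 3 4; do
        if [ "$prefix" -ge 8 ]; then
            # A full octet of network bits.
            octet=255
            prefix=$((prefix - 8))
        else
            # The remaining network bits fill the top of this octet.
            octet=$((256 - (1 << (8 - prefix))))
            prefix=0
        fi
        mask="${mask}${mask:+.}${octet}"
    done
    printf '%s\n' "$mask"
}

# Usage:
#   prefix_to_netmask 24
```

For the prefix used in this file, the helper prints 255.255.255.0.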

Switch the yum repositories to the Aliyun mirror:

[root@c1 ~]# yum install -y wget

[root@c1 ~]# cd /etc/yum.repos.d/

[root@c1 yum.repos.d]# mv CentOS-Base.repo CentOS-Base.repo.bak

[root@c1 yum.repos.d]# wget http://mirrors.aliyun.com/repo/Centos-7.repo

[root@c1 yum.repos.d]# wget http://mirrors.163.com/.help/CentOS7-Base-163.repo

[root@c1 yum.repos.d]# yum clean all

[root@c1 yum.repos.d]# yum makecache

Install the network tools and basic utility packages:

[root@c1 ~]# yum install net-tools checkpolicy gcc dkms foomatic openssh-server bash-completion -y

1.3 Changing the hostname

Set the hostname on each of the four machines in turn; the following uses c1 as the example:

[root@localhost ~]# hostnamectl --static set-hostname c1

[root@localhost ~]# hostnamectl status

Static hostname: c1

Icon name: computer-vm

Chassis: vm

Machine ID: e4ac9d1a9e9b4af1bb67264b83da59e4

Boot ID: a128517ed6cb41d083da61de5951a109

Virtualization: kvm

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-327.36.3.el7.x86_64

Architecture: x86-64

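1.4 Configuring passwordless SSH login

Run the following on each of the four machines in turn; c1 is the example:

[root@c1 ~]# ssh-keygen

# press Enter at every prompt until it finishes

On the machine that will accept passwordless logins, edit the configuration file:

[root@c1 ~]# vi /etc/ssh/sshd_config

# find the following lines and remove the leading comment character #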

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

Copy the key generated by ssh-keygen to each machine in the cluster; the following uses c1 as the example:

[root@c1 ~]# ssh-copy-id c1

The authenticity of host 'c1 (10.0.0.31)' can't be established.

ECDSA key fingerprint is 22:84:fe:22:c2:e1:81:a6:77:d2:dc:be:7b:b7:bf:b8.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c1'"

and check to make sure that only the key(s) you wanted were added.

[root@c1 ~]# ssh-copy-id c2

The authenticity of host 'c2 (10.0.0.32)' can't be established.

ECDSA key fingerprint is 22:84:fe:22:c2:e1:81:a6:77:d2:dc:be:7b:b7:bf:b8.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c2'"

and check to make sure that only the key(s) you wanted were added.

[root@c1 ~]# ssh-copy-id c3

The authenticity of host 'c3 (10.0.0.33)' can't be established.

ECDSA key fingerprint is 22:84:fe:22:c2:e1:81:a6:77:d2:dc:be:7b:b7:bf:b8.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c3'"

and check to make sure that only the key(s) you wanted were added.

[root@c1 ~]# ssh-copy-id c4

The authenticity of host 'c4 (10.0.0.34)' can't be established.

ECDSA key fingerprint is 22:84:fe:22:c2:e1:81:a6:77:d2:dc:be:7b:b7:bf:b8.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@c4's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'c4'"

and check to make sure that only the key(s) you wanted were added.

Test whether the keys were set up correctly:

[root@c1 ~]# for N in $(seq 1 4); do ssh c$N hostname; done;

c1

c2

c3

c4

Install the ntp time synchronization tool and git:

[root@c1 ~]# for N in $(seq 1 4); do ssh c$N yum install ntp git -y; done;
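The same `for N in $(seq ...)` loop is reused throughout this article. It can be wrapped in a small helper with a dry-run mode, so the commands can be reviewed before they touch all four machines. This is only a sketch: the function name `run_on_nodes` and the `DRY_RUN` variable are hypothetical, and it assumes the hosts are named c1 to cN as in /etc/hosts above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command on hosts c1..cN over SSH.
#   $1      = number of hosts (N)
#   $2...   = command to run on each host
# With DRY_RUN=1 the ssh command lines are printed instead of executed.
run_on_nodes() {
    local count="$1" n
    shift
    for n in $(seq 1 "$count"); do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            printf 'ssh c%s %s\n' "$n" "$*"
        else
            # Stop at the first host on which the command fails.
            ssh "c${n}" "$@" || return 1
        fi
    done
}

# Usage:
#   DRY_RUN=1 run_on_nodes 4 yum install ntp git -y   # print only
#   run_on_nodes 4 yum install ntp git -y             # actually run
```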

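2. Installing Docker 1.12.3 and initial configuration

Packages for every Docker release can be obtained directly from GitHub: https://github.com/docker/docker/releases

All of the Docker core packages are available at: http://yum.dockerproject.org/repo/main/centos/7/Packages/

2.1 Installing Docker 1.12.3

Installing directly from Docker's official yum repository is not recommended here, because it selects the Docker version according to the system version and does not let you choose a specific version to install. Run the command below on each of the four machines in turn; it can be copied and pasted straight into the command line.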

mkdir -p ~/_src \

&& cd ~/_src \

&& wget http://yum.dockerproject.org/repo/main/centos/7/Packages/docker-engine-selinux-1.12.3-1.el7.centos.noarch.rpm \

&& wget http://yum.dockerproject.org/repo/main/centos/7/Packages/docker-engine-1.12.3-1.el7.centos.x86_64.rpm \

&& wget http://yum.dockerproject.org/repo/main/centos/7/Packages/docker-engine-debuginfo-1.12.3-1.el7.centos.x86_64.rpm \

&& yum localinstall -y docker-engine-selinux-1.12.3-1.el7.centos.noarch.rpm docker-engine-1.12.3-1.el7.centos.x86_64.rpm docker-engine-debuginfo-1.12.3-1.el7.centos.x86_64.rpm

2.2 Verifying that Docker was installed

With Docker 1.12 on CentOS 7, docker is the client program and dockerd is the server program.

[root@c1 _src]# docker version

Client:

Version: 1.12.3

API version: 1.24

Go version: go1.6.3

Git commit: 6b644ec

Built:

OS/Arch: linux/amd64

Cannot connect to the Docker daemon. Is the docker daemon running on this host?

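2.3 Starting the Docker daemon

In Docker 1.12 the daemon program is dockerd. You can run dockerd directly or let the system's systemd manage the service. Note that either way the default parameters are used: for example, the private network defaults to 172.17.0.0/16 and the bridge is docker0.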

[root@c1 _src]# dockerd

INFO[0000] libcontainerd: new containerd process, pid: 6469

WARN[0000] containerd: low RLIMIT_NOFILE changing to max current=1024 max=4096

WARN[0001] devmapper: Usage of loopback devices is strongly discouraged for production use. Please use `--storage-opt dm.thinpooldev` or use `man docker` to refer to dm.thinpooldev section.

WARN[0001] devmapper: Base device already exists and has filesystem xfs on it. User specified filesystem will be ignored.

INFO[0001] [graphdriver] using prior storage driver "devicemapper"

INFO[0001] Graph migration to content-addressability took 0.00 seconds

WARN[0001] mountpoint for pids not found

INFO[0001] Loading containers: start.

INFO[0001] Firewalld running: true

INFO[0001] Default bridge (docker0) is assigned with an IP address 172.17.0.0/16. Daemon option --bip can be used to set a preferred IP address

INFO[0001] Loading containers: done.

INFO[0001] Daemon has completed initialization

INFO[0001] Docker daemon commit=6b644ec graphdriver=devicemapper version=1.12.3

INFO[0001] API listen on /var/run/docker.sock

2.3 Enabling Docker at boot and starting the service with systemctl

[root@c1 _src]# systemctl enable docker && systemctl start docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

Use dockerd --help to see the startup parameters:

[root@c1 _src]# dockerd --help

Usage: dockerd [OPTIONS]

A self-sufficient runtime for containers.

Options:

--add-runtime=[] Register an additional OCI compatible runtime

--api-cors-header Set CORS headers in the remote API

--authorization-plugin=[] Authorization plugins to load

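-b, --bridge # network bridge that containers attach to; the default is docker0, and another bridge can be specified

--bip # bridge IP address, which defines the containers' private network

--cgroup-parent # parent cgroup for all containers

--cluster-advertise # address or name to advertise to the cluster

--cluster-store # URL of the distributed storage backend

--cluster-store-opt=map[] # cluster store options

--config-file=/etc/docker/daemon.json # configuration file to use

-D # enable debug mode

--default-gateway # default IPv4 gateway for containers (IPv6: --default-gateway-v6)

--dns=[] # DNS servers

--dns-opt=[] # DNS options

--dns-search=[] # DNS search domains

--exec-opt=[] # runtime execution options

--exec-root=/var/run/docker # directory for execution state files

--fixed-cidr # IPv4 subnet for fixed IPs

-G, --group=docker # group that owns the Docker Unix socket

-g, --graph=/var/lib/docker # root directory of the Docker runtime

-H, --host=[] # socket(s) the daemon listens on for connections

--icc=true # allow containers to communicate with each other; set it to false where containers must be isolated from one another

--insecure-registry=[] # addresses of internal private registries

--ip=0.0.0.0 # default IP used when publishing container ports (probably most useful with multi-host networking)

--ip-forward=true # enable net.ipv4.ip_forward, that is, IP forwarding in the kernel

--ip-masq=true # enable IP masquerading (containers do not expose their own IP when they reach external services)

--iptables=true # allow Docker to add iptables rules

-l, --log-level=info # log level

--live-restore # keep containers running while the Docker daemon restarts (new in 1.12)

--log-driver=json-file # default log driver for containers

--max-concurrent-downloads=3 # maximum concurrent downloads for each pull

--max-concurrent-uploads=5 # maximum concurrent uploads for each push

--mtu # MTU of the container network

--oom-score-adjust=-500 # OOM score adjustment for the daemon (-1000 to 1000)

-p, --pidfile=/var/run/docker.pid # location of the PID file

-s, --storage-driver # Docker storage driver

--selinux-enabled # enable SELinux support

--storage-opt=[] # storage driver options

--swarm-default-advertise-addr # default address or interface for the swarm advertise address

--tls # use TLS encryption

--tlscacert=~/.docker/ca.pem # CA certificate to trust for TLS

--tlscert=~/.docker/cert.pem # TLS certificate file

--tlskey=~/.docker/key.pem # TLS key file

--userland-proxy=true # use the userland proxy for loopback traffic

--userns-remap # user or group for user namespace remapping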

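2.4 Modifying Docker's configuration file

The following uses c1 as the example: append our custom parameters after ExecStart. The other three machines need the same change.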

[root@c1 ~]# vi /lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network.target

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

# OverlayFS is similar to AUFS, but performs better and makes better use of memory.

# Add the Aliyun Docker registry mirror for faster pulls

ExecStart=/usr/bin/dockerd -s=overlay --registry-mirror=https://7rgqloza.mirror.aliyuncs.com --insecure-registry=localhost:5000 -H unix:///var/run/docker.sock --pidfile=/var/run/docker.pid

ExecReload=/bin/kill -s HUP $MAINPID

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Uncomment TasksMax if your systemd version supports it.

# Only systemd 226 and above support this version.

#TasksMax=infinity

TimeoutStartSec=0

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

[Install]

WantedBy=multi-user.target

Restart the Docker service so that the new configuration takes effect:

[root@c1 ~]# systemctl daemon-reload && systemctl restart docker.service
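As an alternative to editing the unit file, the same three settings can live in /etc/docker/daemon.json, the default `--config-file` shown in the help output above. The sketch below only renders the JSON; the function name `render_daemon_json` is hypothetical, while `storage-driver`, `registry-mirrors` and `insecure-registries` are the daemon.json keys that correspond to `-s`, `--registry-mirror` and `--insecure-registry`. An option must not be set both as a flag in ExecStart and in daemon.json, otherwise dockerd refuses to start.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a daemon.json equivalent to the ExecStart flags
# used above.
#   $1 = registry mirror URL
#   $2 = insecure registry address
render_daemon_json() {
    local mirror="$1" registry="$2"
    cat <<EOF
{
  "storage-driver": "overlay",
  "registry-mirrors": ["${mirror}"],
  "insecure-registries": ["${registry}"]
}
EOF
}

# Usage (then remove the matching flags from ExecStart and restart Docker):
#   render_daemon_json https://7rgqloza.mirror.aliyuncs.com localhost:5000 \
#       | sudo tee /etc/docker/daemon.json
```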

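3. Creating the swarm cluster

10.0.0.31 (hostname: c1) as swarm manager1

10.0.0.32 (hostname: c2) as swarm manager2

10.0.0.33 (hostname: c3) as swarm agent1

10.0.0.34 (hostname: c4) as swarm agent2

3.1 Opening the firewalld ports

Before configuring the cluster, open the firewall ports. Copy and paste the following commands into the command line on all 4 machines and run them.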

firewall-cmd --zone=public --add-port=2377/tcp --permanent && \

firewall-cmd --zone=public --add-port=7946/tcp --permanent && \

firewall-cmd --zone=public --add-port=7946/udp --permanent && \

firewall-cmd --zone=public --add-port=4789/tcp --permanent && \

firewall-cmd --zone=public --add-port=4789/udp --permanent && \

firewall-cmd --reload

Taking c1 as the example, check which ports are open:

[root@c1 ~]# firewall-cmd --list-ports

4789/tcp 4789/udp 7946/tcp 2377/tcp 7946/udp
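The port list can also be generated from a single loop. The sketch below is an illustration rather than the author's method: the function name `swarm_firewall_commands` is hypothetical, and it follows the ports listed in Docker's swarm documentation (2377/tcp for cluster management, 7946/tcp and 7946/udp for node communication, 4789/udp for overlay network traffic), so it leaves out the 4789/tcp rule that the commands above also add.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the firewall-cmd invocations for the swarm ports.
# It only prints the commands; nothing is changed on the system.
swarm_firewall_commands() {
    local spec
    for spec in 2377/tcp 7946/tcp 7946/udp 4789/udp; do
        printf 'firewall-cmd --zone=public --add-port=%s --permanent\n' "$spec"
    done
    printf 'firewall-cmd --reload\n'
}

# Usage: review the output first, then run it as root, for example:
#   swarm_firewall_commands
#   swarm_firewall_commands | sh
```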

3.2 Setting up the swarm cluster and adding the other 3 machines

Initialize the swarm cluster on c1, using --listen-addr to specify the IP and port to listen on:

[root@c1 ~]# docker swarm init --listen-addr 0.0.0.0

Swarm initialized: current node (73ju72f6nlyl9kiib7z5r0bsk) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-47dxwelbdopq8915rjfr0hxe6t9cebsm0q30miro4u4qcwbh1c-4f1xl8ici0o32qfyru9y6wepv \

10.0.0.31:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

docker swarm join-token manager shows the token for joining as a swarm manager.

Check the result; at this point there is only one node:

[root@c1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

3ju72f6nlyl9kiib7z5r0bsk * c1 Ready Active Leader

The following command adds the other 3 machines to the cluster. Copy and paste it into the command line on c1:

for N in $(seq 2 4); \

do ssh c$N \

docker swarm join \

--token SWMTKN-1-47dxwelbdopq8915rjfr0hxe6t9cebsm0q30miro4u4qcwbh1c-4f1xl8ici0o32qfyru9y6wepv \

10.0.0.31:2377 \

;done

Check the cluster nodes again: the other machines have been added to the cluster, and c1 is the leader.

[root@c1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

qn7aw9ihbjphtnm1toaoevq8 c4 Ready Active

cxm0w5j3x4mqrj8f1kdrgln5 * c1 Ready Active Leader

wqpz2v3b71q0ohzdifi94ma9 c2 Ready Active

t9ceme3w14o4gfnljtfrkpgp c3 Ready Active

Next, make c2 a manager of the cluster as well. First, on c1, look up the token for joining as a manager:

[root@c1 ~]# docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-47dxwelbdopq8915rjfr0hxe6t9cebsm0q30miro4u4qcwbh1c-b7k3agnzez1bjj3nfz2h93xh0 \

10.0.0.31:2377

Using the token from c1, first take c2 out of the cluster, then join it again as a manager:

[root@c2 ~]# docker swarm leave

Node left the swarm.

[root@c2 ~]# docker swarm join \

> --token SWMTKN-1-47dxwelbdopq8915rjfr0hxe6t9cebsm0q30miro4u4qcwbh1c-b7k3agnzez1bjj3nfz2h93xh0 \

> 10.0.0.31:2377

This node joined a swarm as a manager.

Now docker node ls on either c1 or c2 shows the latest state of the cluster nodes, and the MANAGER STATUS of c2 has changed to Reachable:

[root@c1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

qn7aw9ihbjphtnm1toaoevq8 c4 Ready Active

cxm0w5j3x4mqrj8f1kdrgln5 * c1 Ready Active Leader

wqpz2v3b71q0ohzdifi94ma9 c2 Down Active

t9ceme3w14o4gfnljtfrkpgp c3 Ready Active

ai6peof1e9wyovp8uxn5b2ufe c2 Ready Active Reachable

Because we used docker swarm leave earlier, the old c2 entry is shown with the status Down; it can be removed with docker node rm <ID>.
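Stale entries like this can be picked out of the `docker node ls` output with a short filter. This is a sketch under stated assumptions: the function name `down_node_ids` is hypothetical, and it relies on the column layout shown above, where the current node has an extra `*` column after its ID.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker node ls` output on stdin and print the
# IDs of nodes whose STATUS column is Down.
down_node_ids() {
    awk 'NR > 1 {
        # The current node has a "*" after its ID, which shifts the columns.
        status = ($2 == "*") ? $4 : $3
        if (status == "Down") print $1
    }'
}

# Usage (xargs -r is the GNU option that skips the command on empty input):
#   docker node ls | down_node_ids | xargs -r docker node rm
```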

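3.3 Creating an overlay network

With a single server, all of the application's containers run on one host, so the containers can reach each other over the network. Our cluster now has 4 hosts, so how do we make sure that containers on different hosts can reach each other?

The swarm cluster already solves this for us: just use an overlay network.

Before Docker 1.12, a swarm cluster needed an external key-value store (Consul, etcd, and so on) to synchronize the network configuration and keep all containers on the same network segment. Docker 1.12 has this store built in and integrates support for overlay networks.

List the existing networks: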

[root@c1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

23ee2bb5a2a1 bridge bridge local

fd17ed8db4d8 docker_gwbridge bridge local

6878c36aa311 host host local

08tt2s4pqf96 ingress overlay swarm

7c18e57e24f2 none null local

The swarm already has a default overlay network named ingress, which swarm uses by default. In this article we create a new one.

Create an overlay network named idoall-org:

[root@c1 ~]# docker network create --subnet=10.0.9.0/24 --driver overlay idoall-org

e63ca0d7zcbxqpp4svlv5x04v

[root@c1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

5e47ba02a985 bridge bridge local

fd17ed8db4d8 docker_gwbridge bridge local

6878c36aa311 host host local

e63ca0d7zcbx idoall-org overlay swarm

08tt2s4pqf96 ingress overlay swarm

7c18e57e24f2 none null local

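The new network (idoall-org) has been created.

--subnet specifies the subnet of the overlay network being created; the parameter can also be omitted.

Use docker network inspect idoall-org to view the details of the network we added: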

[root@c1 ~]# docker network inspect idoall-org

[

{

"Name": "idoall-org",

"Id": "e63ca0d7zcbxqpp4svlv5x04v",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.9.0/24",

"Gateway": "10.0.9.1"

}

]

},

"Internal": false,

"Containers": null,

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "257"

},

"Labels": null

}

]

3.4 Running containers on the network

Start 3 instances of the alpine image on the idoall-org network:

[root@c1 ~]# docker service create --name idoall-org-test-ping --replicas 3 --network=idoall-org alpine ping baidu.com

avcrdsntx8b8ei091lq5cl76y

[root@c1 ~]# docker service ps idoall-org-test-ping

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

42vigh5lxkvgge9zo27hfah88 idoall-org-test-ping.1 alpine c4 Running Starting 1 seconds ago

aovr8r7r7lykzmxqs30e8s4ee idoall-org-test-ping.2 alpine c3 Running Starting 1 seconds ago

c7pv2o597qycsqzqzgjwwtw8b idoall-org-test-ping.3 alpine c1 Running Running 3 seconds ago

The 3 instances were deployed on c1, c3 and c4.

You can also use --mode global to run the service on every swarm node; this is covered later.

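3.5 Scaling the application

Suppose that while the program is running we find it is short of resources. We can scale it with scale: there are currently 3 instances, and we change that to 4.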

[root@c1 ~]# docker service scale idoall-org-test-ping=4

idoall-org-test-ping scaled to 4

[root@c1 ~]# docker service ps idoall-org-test-ping

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

42vigh5lxkvgge9zo27hfah88 idoall-org-test-ping.1 alpine c4 Running Running 4 minutes ago

aovr8r7r7lykzmxqs30e8s4ee idoall-org-test-ping.2 alpine c3 Running Running 4 minutes ago

c7pv2o597qycsqzqzgjwwtw8b idoall-org-test-ping.3 alpine c1 Running Running 4 minutes ago

72of5dfm67duccxsdyt1e25qd idoall-org-test-ping.4 alpine c2 Running Running 1 seconds ago

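3.6 Pinning a service to specific nodes

In the examples above, no matter how many instances there are, swarm schedules them automatically and decides which node each one runs on. When creating a service, we can use the --constraint parameter to restrict where it runs. For example, to run a service on c4: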

[root@c1 ~]# docker service create \

--network idoall-org \

--name idoall-org \

--constraint 'node.hostname==c4' \

-p 9000:9000 \

idoall/golang-revel

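Once the service has started, open http://10.0.0.31:9000/ in a browser, or use the IP of any of the machines from .31 to .34, to see the result. Docker Swarm load-balances automatically; its load balancing is covered later.

Because network conditions differ from place to place, pulling the image may be slow. The following command monitors the service named idoall-org: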

[root@c1 ~]# watch docker service ps idoall-org

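Besides the hostname, other node attributes can be used in constraint expressions, as shown in the table below:

| Node attribute | Matches | Example |
| --- | --- | --- |
| node.id | node ID | node.id == 2ivku8v2gvtg4 |
| node.hostname | node hostname | node.hostname != c2 |
| node.role | node role: manager | node.role == manager |
| node.labels | user-defined node labels | node.labels.security == high |
| engine.labels | Docker Engine labels | engine.labels.operatingsystem == ubuntu 14.04 |

We can also add a label to a machine with the docker node update command, for example: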

[root@c1 ~]# docker node update --label-add site=idoall-org c1

[root@c2 ~]# docker node inspect c1

[

{

"ID": "4cxm0w5j3x4mqrj8f1kdrgln5",

"Version": {

"Index": 108

},

"CreatedAt": "2016-12-11T11:13:32.495274292Z",

"UpdatedAt": "2016-12-11T12:00:05.956367412Z",

"Spec": {

"Labels": {

"site": "idoall-org"

...

]

For an existing service, a constraint can be added with docker service update, for example:

[root@c1 ~]# docker service update registry --constraint-add 'node.labels.site==idoall-org'

3.7 Testing connectivity on the Docker swarm network

Run on c1:

[root@c1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c683692b0153 alpine:latest "ping baidu.com" 25 minutes ago Up 25 minutes idoall-org-test-ping.4.c7pv2o597qycsqzqzgjwwtw8b

[root@c1 ~]# docker exec -it 47e5 sh

/ # ping idoall-org.1.9ne6hxjhvneuhsrhllykrg7zm

PING idoall-org.1.9ne6hxjhvneuhsrhllykrg7zm (10.0.9.8): 56 data bytes

64 bytes from 10.0.9.8: seq=0 ttl=64 time=1.080 ms

64 bytes from 10.0.9.8: seq=1 ttl=64 time=1.349 ms

64 bytes from 10.0.9.8: seq=2 ttl=64 time=1.026 ms

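idoall-org.1.9ne6hxjhvneuhsrhllykrg7zm is the name of the container running on c4.

When entering a container with exec, it is enough to type a unique prefix of the container ID, such as its first 4 characters.

Run on c4: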

[root@c4 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1ead9bb757a0 idoall/docker-golang1.7.4-revel0.13:latest "/usr/bin/supervisord" About a minute ago Up 58 seconds idoall-org.1.9ne6hxjhvneuhsrhllykrg7zm

033531b30b79 alpine:latest "ping baidu.com" About a minute ago Up About a minute idoall-org-test-ping.1.6st5xvehh7c3bwaxsen3r4gpn

[root@c2 ~]# docker exec -it f49c435c94ea sh

bash-4.3# ping idoall-org-test-ping.4.cirnop0kxbuxiyjh87ii6hh4x

PING idoall-org-test-ping.4.cirnop0kxbuxiyjh87ii6hh4x (10.0.9.6): 56 data bytes

64 bytes from 10.0.9.6: seq=0 ttl=64 time=0.531 ms

64 bytes from 10.0.9.6: seq=1 ttl=64 time=0.700 ms

64 bytes from 10.0.9.6: seq=2 ttl=64 time=0.756 ms

3.8 Testing Docker swarm's built-in load balancing

Use the --mode global parameter to create a web service on every node:

[root@c1 ~]# docker service create --name whoami --mode global -p 8000:8000 jwilder/whoami

1u87lrzlktgskt4g6ae30xzb8

[root@c1 ~]# docker service ps whoami

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

cjf5w0pv5bbrph2gcvj508rvj whoami jwilder/whoami c2 Running Running 16 minutes ago

dokh8j4z0iuslye0qa662axqv \_ whoami jwilder/whoami c3 Running Running 16 minutes ago

dumjwz4oqc5xobvjv9rosom0w \_ whoami jwilder/whoami c1 Running Running 16 minutes ago

bbzgdau14p5b4puvojf06gn5s \_ whoami jwilder/whoami c4 Running Running 16 minutes ago

Run the following command on any of the machines. Each request returns a different value, and after four requests the rotation wraps around to the first container again:

[root@c1 ~]# curl $(hostname --all-ip-addresses | awk '{print $1}'):8000

I'm 8c2eeb5d420f

[root@c1 ~]# curl $(hostname --all-ip-addresses | awk '{print $1}'):8000

I'm 0b56c2a5b2a4

[root@c1 ~]# curl $(hostname --all-ip-addresses | awk '{print $1}'):8000

I'm 000982389fa0

[root@c1 ~]# curl $(hostname --all-ip-addresses | awk '{print $1}'):8000

I'm db8d3e839de5

[root@c1 ~]# curl $(hostname --all-ip-addresses | awk '{print $1}'):8000

I'm 8c2eeb5d420f
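Rather than comparing the IDs by eye, the responses can be piped through a counter of distinct lines. This is a minimal sketch; the function name `count_distinct` is hypothetical, and the usage line assumes the whoami service from this section is reachable on 10.0.0.31:8000.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the distinct non-empty lines read from stdin.
count_distinct() {
    awk 'NF && !seen[$0]++ { n++ } END { print n + 0 }'
}

# Usage: send 8 requests and count how many different containers answered.
#   for i in $(seq 1 8); do curl -s 10.0.0.31:8000; done | count_distinct
```

With one whoami container per node, the count should match the number of nodes that actually served requests.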

Further reading

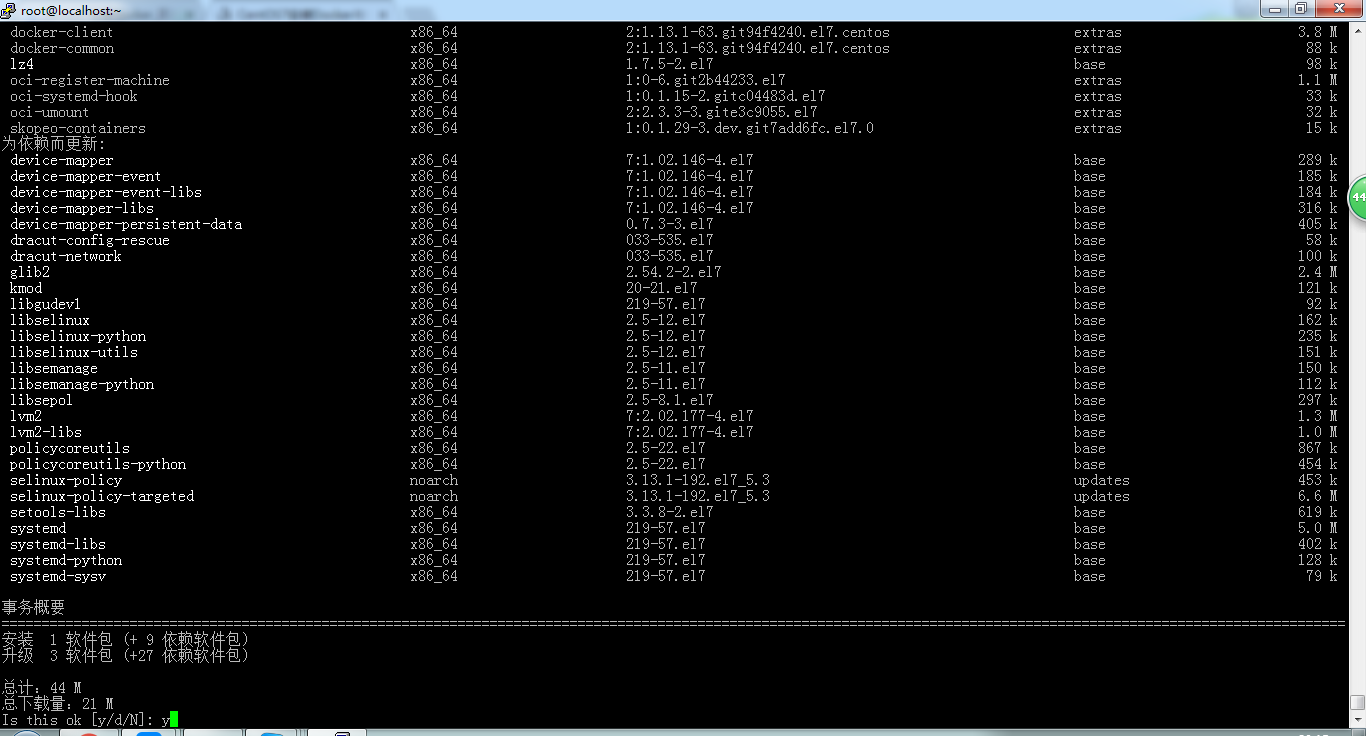

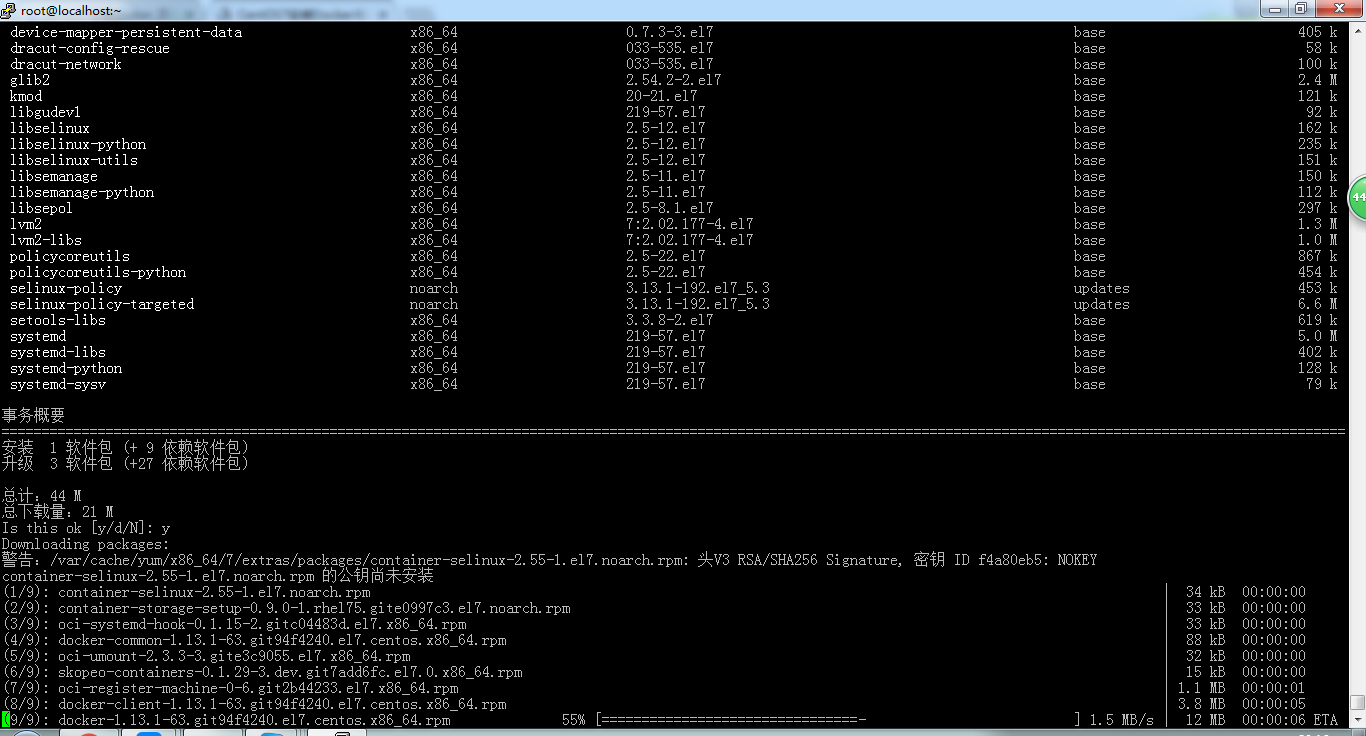

Installing Docker on CentOS 7.1 with yum

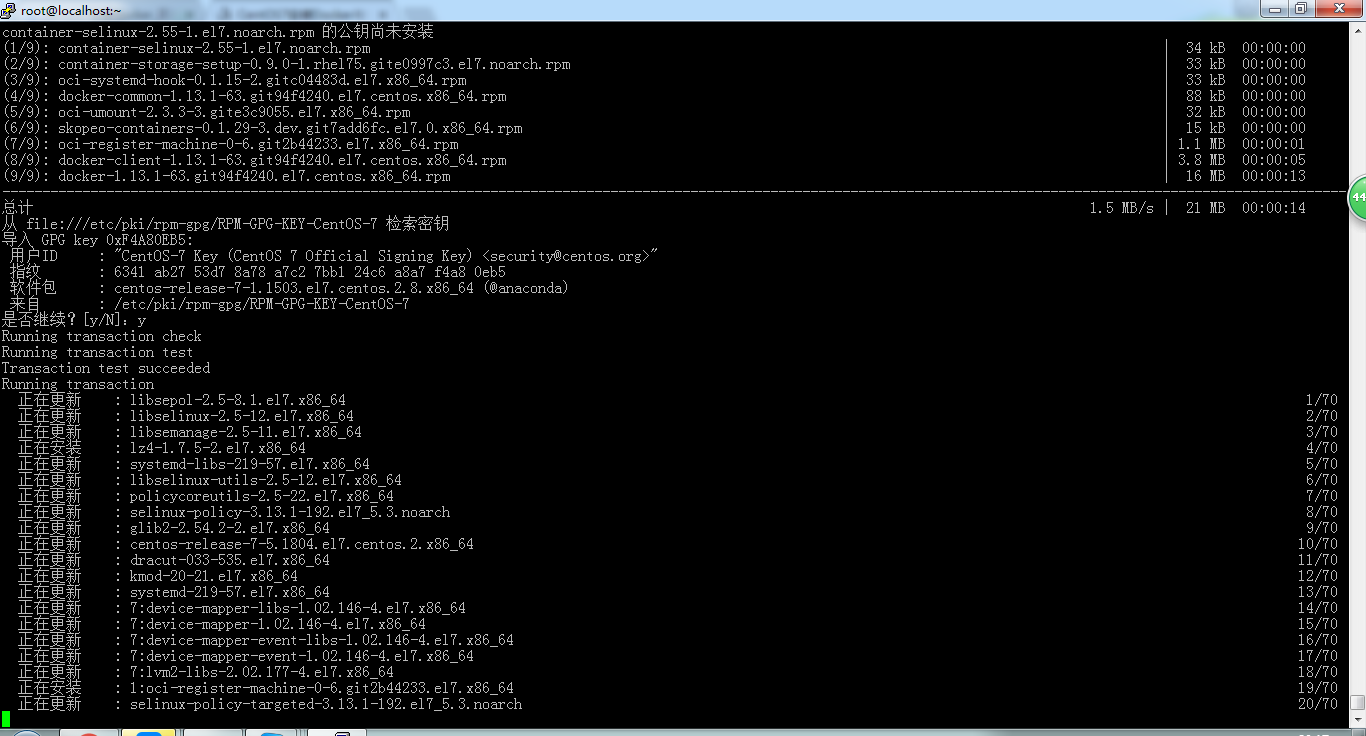

Installing on CentOS 7

1. Check the system version:

$ cat /etc/redhat-release

CentOS Linux release 7.0.1406 (Core)

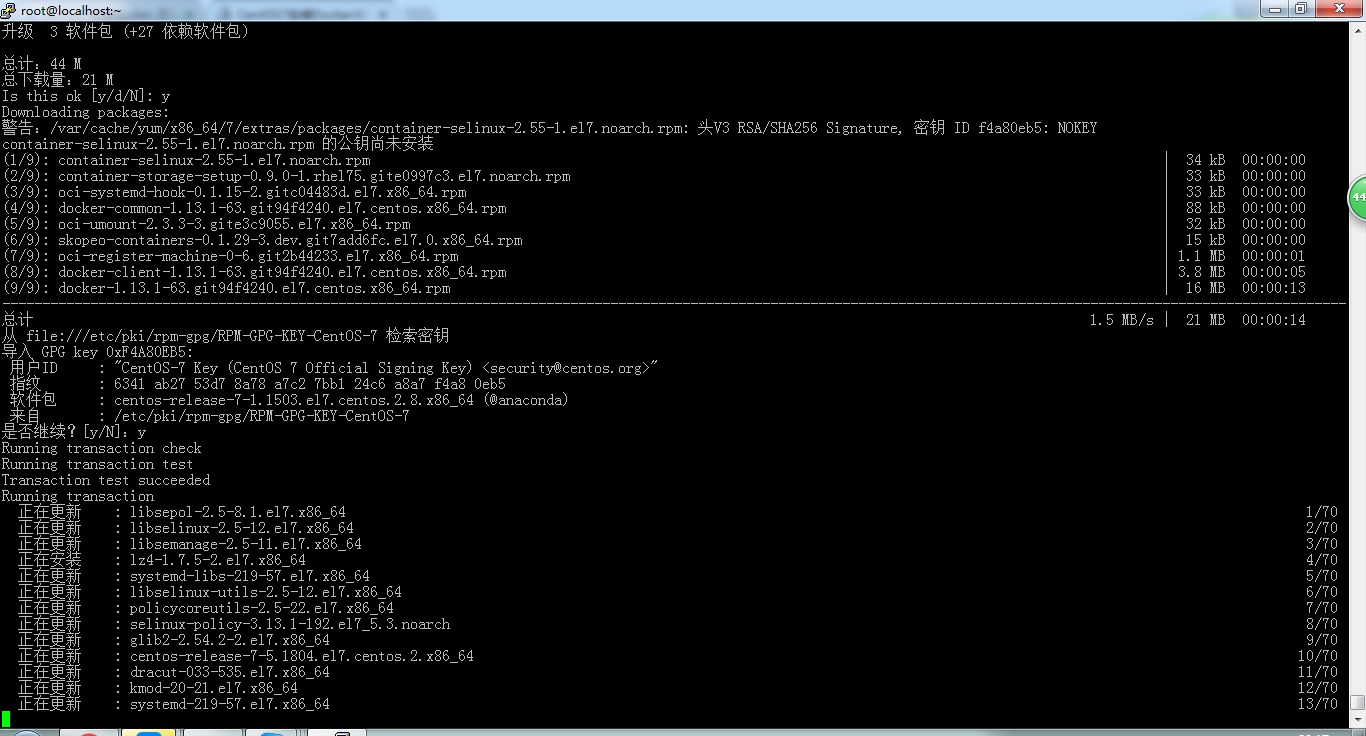

2. Install Docker

$ yum install docker

The installation fails with this error:

Transaction check error:

file /usr/lib/systemd/system/blk-availability.service from install of device-mapper-7:1.02.107-5.el7_2.2.x86_64 conflicts with file from package lvm2-7:2.02.105-14.el7.x86_64

file /usr/sbin/blkdeactivate from install of device-mapper-7:1.02.107-5.el7_2.2.x86_64 conflicts with file from package lvm2-7:2.02.105-14.el7.x86_64

file /usr/share/man/man8/blkdeactivate.8.gz from install of device-mapper-7:1.02.107-5.el7_2.2.x86_64 conflicts with file from package lvm2-7:2.02.105-14.el7.x86_64

Fix:

$ yum install libdevmapper* -y

Then run the installation again:

$ yum install docker

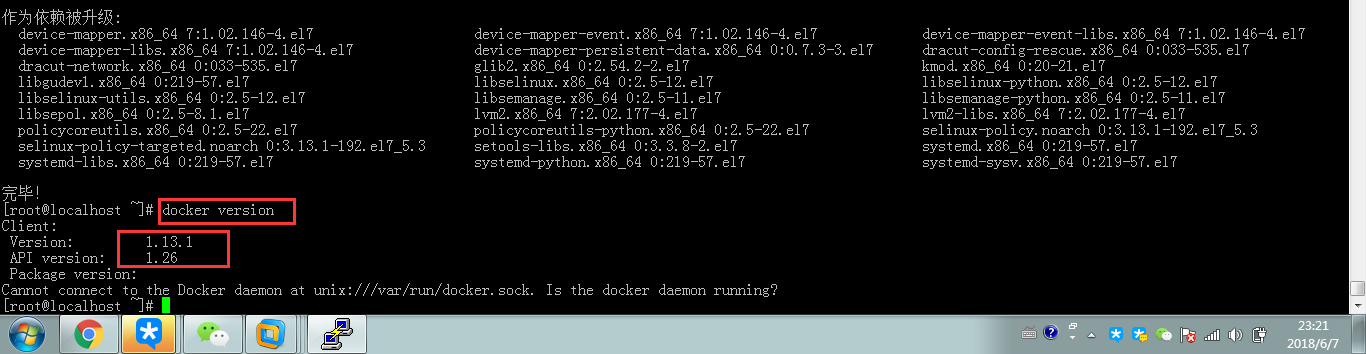

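3. Check that the installation succeeded

$ docker version

If the Docker version number is printed, the installation succeeded, and the Docker service can be started with the following command: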

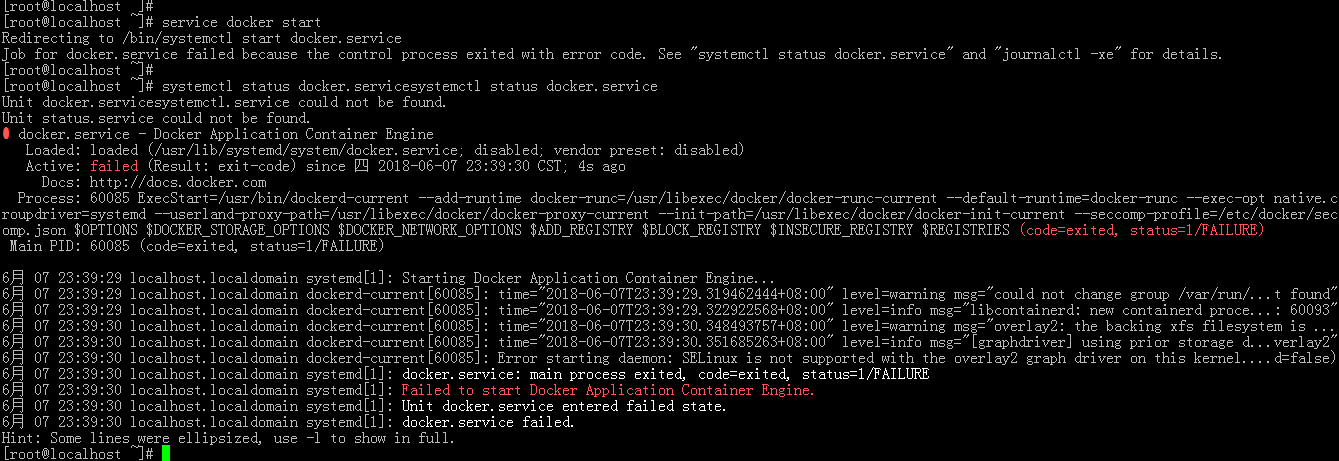

4. $ service docker start

Once the Docker service has started, you can begin using Docker. Unfortunately, the start shown below failed with an error.
