
If you want to learn about IfcEventType, this article is worth reading. It also collects useful information on: 2. TypeScript: type assertions, EventTarget and Event; aiohttp.client_exceptions.ContentTypeError: 0:json(content_type=‘??‘); example source code for com.amazonaws.event.ProgressEventType; and example source code for com.facebook.react.uimanager.events.TouchEventType.

Contents:

- IfcEventType
- 2. TypeScript: type assertions, EventTarget and Event
- aiohttp.client_exceptions.ContentTypeError: 0:json(content_type=‘??‘)
- Example source code for com.amazonaws.event.ProgressEventType
- Example source code for com.facebook.react.uimanager.events.TouchEventType

IfcEventType

| Item | SPF | XML | Change | Description |
|---|---|---|---|---|
| IFC2x3 to IFC4 | | | | |
| IfcEventType | | | ADDED | |


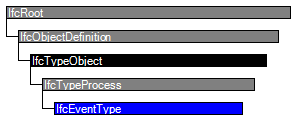

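IfcEventType defines a particular type of event that may be specified.

New entity in IFC4.

IfcEventType provides for all types of events that may be specified.

The use of IfcEventType defines the parameters for one or more occurrences of IfcEvent. Parameters may be specified through property sets that may be enumerated in the IfcEventTypeEnum data type, or through explicit attributes of IfcEvent. Event occurrences (IfcEvent entities) are linked to the event type through the IfcRelDefinesByType relationship.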

| # | Attribute | Type | Cardinality | Description | C |
|---|---|---|---|---|---|
| 10 | PredefinedType | IfcEventTypeEnum | [1:1] | Identifies the predefined types of an event from which the type required may be set. | X |
| 11 | EventTriggerType | IfcEventTriggerTypeEnum | [1:1] | Identifies the predefined types of event trigger from which the type required may be set. | X |
| 12 | UserDefinedEventTriggerType | IfcLabel | [0:1] | A user defined event trigger type, the value of which is asserted when the value of an event trigger type is declared as USERDEFINED. | X |

| Rule | Description |
|---|---|
| CorrectPredefinedType | The attribute ProcessType must be asserted when the value of PredefinedType is set to USERDEFINED. |
| CorrectEventTriggerType | The attribute UserDefinedEventTriggerType must be asserted when the value of EventTriggerType is set to USERDEFINED. |
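Both where-rules are simple conditional checks. The Java sketch below models them on a minimal in-memory representation. `EventTypeModel` is a hypothetical class and is not part of any IFC toolkit. The enum constants are my reading of the IFC4 `IfcEventTypeEnum` and `IfcEventTriggerTypeEnum` definitions, so check them against the schema release you target.

```java
import java.util.ArrayList;
import java.util.List;

// Assumed to mirror the IFC4 enumerations; verify against your schema release.
enum IfcEventTypeEnum { STARTEVENT, ENDEVENT, INTERMEDIATEEVENT, USERDEFINED, NOTDEFINED }

enum IfcEventTriggerTypeEnum { EVENTRULE, EVENTMESSAGE, EVENTTIME, EVENTCOMPLEX, USERDEFINED, NOTDEFINED }

// Hypothetical, minimal stand-in for an IfcEventType instance.
final class EventTypeModel {
    final IfcEventTypeEnum predefinedType;          // attribute 10, mandatory
    final IfcEventTriggerTypeEnum eventTriggerType; // attribute 11, mandatory
    final String processType;                       // attribute 9 (from IfcTypeProcess), optional
    final String userDefinedEventTriggerType;       // attribute 12, optional

    EventTypeModel(IfcEventTypeEnum predefinedType, IfcEventTriggerTypeEnum eventTriggerType,
                   String processType, String userDefinedEventTriggerType) {
        this.predefinedType = predefinedType;
        this.eventTriggerType = eventTriggerType;
        this.processType = processType;
        this.userDefinedEventTriggerType = userDefinedEventTriggerType;
    }

    // Returns the names of the violated where-rules; an empty list means the instance is valid.
    List<String> violatedRules() {
        List<String> violations = new ArrayList<>();
        // CorrectPredefinedType: USERDEFINED requires ProcessType to be asserted.
        if (predefinedType == IfcEventTypeEnum.USERDEFINED && processType == null) {
            violations.add("CorrectPredefinedType");
        }
        // CorrectEventTriggerType: USERDEFINED requires UserDefinedEventTriggerType to be asserted.
        if (eventTriggerType == IfcEventTriggerTypeEnum.USERDEFINED && userDefinedEventTriggerType == null) {
            violations.add("CorrectEventTriggerType");
        }
        return violations;
    }
}
```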

| # | Attribute | Type | Cardinality | Description | C |
|---|---|---|---|---|---|
| IfcRoot | | | | | |
| 1 | GlobalId | IfcGloballyUniqueId | [1:1] | Assignment of a globally unique identifier within the entire software world. | X |
| 2 | OwnerHistory | IfcOwnerHistory | [0:1] | Assignment of the information about the current ownership of that object, including owning actor, application, local identification and information captured about the recent changes of the object. NOTE Only the last modification is stored, either as addition, deletion or modification. | X |
| 3 | Name | IfcLabel | [0:1] | Optional name for use by the participating software systems or users. For some subtypes of IfcRoot the insertion of the Name attribute may be required. This would be enforced by a where rule. | X |
| 4 | Description | IfcText | [0:1] | Optional description, provided for exchanging informative comments. | X |
| IfcObjectDefinition | | | | | |
| | HasAssignments | IfcRelAssigns @RelatedObjects | S[0:?] | Reference to the relationship objects that assign (by an association relationship) other subtypes of IfcObject to this object instance. Examples are the association to products, processes, controls, resources or groups. | X |
| | Nests | IfcRelNests @RelatedObjects | S[0:1] | References to the decomposition relationship being a nesting. It determines that this object definition is a part within an ordered whole/part decomposition relationship. An object occurrence or type can only be part of a single decomposition (to allow hierarchical structures only). | X |
| | IsNestedBy | IfcRelNests @RelatingObject | S[0:?] | References to the decomposition relationship being a nesting. It determines that this object definition is the whole within an ordered whole/part decomposition relationship. An object or object type can be nested by several other objects (occurrences or types). | X |
| | HasContext | IfcRelDeclares @RelatedDefinitions | S[0:1] | References to the context providing context information such as project unit or representation context. It should only be asserted for the uppermost non-spatial object. | X |
| | IsDecomposedBy | IfcRelAggregates @RelatingObject | S[0:?] | References to the decomposition relationship being an aggregation. It determines that this object definition is the whole within an unordered whole/part decomposition relationship. An object definition can be aggregated by several other objects (occurrences or parts). | X |
| | Decomposes | IfcRelAggregates @RelatedObjects | S[0:1] | References to the decomposition relationship being an aggregation. It determines that this object definition is a part within an unordered whole/part decomposition relationship. An object definition can only be part of a single decomposition (to allow hierarchical structures only). | X |
| | HasAssociations | IfcRelAssociates @RelatedObjects | S[0:?] | Reference to the relationship objects that associate external references or other resource definitions to the object. Examples are the association to library, documentation or classification. | X |
| IfcTypeObject | | | | | |
| 5 | ApplicableOccurrence | IfcIdentifier | [0:1] | The attribute optionally defines the data type of the occurrence object to which the assigned type object can relate. If not present, no instruction is given to which occurrence object the type object is applicable. The following conventions are used: EXAMPLE Referring to a furniture as applicable occurrence entity would be expressed as 'IfcFurnishingElement', referring to a brace as applicable entity would be expressed as 'IfcMember/BRACE', referring to a wall and wall standard case would be expressed as 'IfcWall, IfcWallStandardCase'. | X |
| 6 | HasPropertySets | IfcPropertySetDefinition | S[1:?] | Set list of unique property sets that are associated with the object type and are common to all object occurrences referring to this object type. | X |
| | Types | IfcRelDefinesByType @RelatingType | S[0:1] | Reference to the relationship IfcRelDefinesByType and thus to those occurrence objects which are defined by this type. | X |
| IfcTypeProcess | | | | | |
| 7 | Identification | IfcIdentifier | [0:1] | An identifying designation given to a process type. | X |
| 8 | LongDescription | IfcText | [0:1] | A long description, or text, describing the activity in detail. NOTE The inherited SELF\IfcRoot.Description attribute is used as the short description. | X |
| 9 | ProcessType | IfcLabel | [0:1] | The type denotes a particular type that indicates the process further. The use has to be established at the level of instantiable subtypes. In particular it holds the user defined type, if the enumeration of the attribute 'PredefinedType' is set to USERDEFINED. | X |
| | OperatesOn | IfcRelAssignsToProcess @RelatingProcess | S[0:?] | Set of relationships to other objects, e.g. products, processes, controls, resources or actors, that are operated on by the process type. | X |
| IfcEventType | | | | | |
| 10 | PredefinedType | IfcEventTypeEnum | [1:1] | Identifies the predefined types of an event from which the type required may be set. | X |
| 11 | EventTriggerType | IfcEventTriggerTypeEnum | [1:1] | Identifies the predefined types of event trigger from which the type required may be set. | X |
| 12 | UserDefinedEventTriggerType | IfcLabel | [0:1] | A user defined event trigger type, the value of which is asserted when the value of an event trigger type is declared as USERDEFINED. | X |

2. TypeScript: type assertions, EventTarget and Event

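Sometimes you will know more about a value than TypeScript does. This usually happens when you know that an entity has a more specific type than its current one.

A type assertion is a way to tell the compiler "trust me, I know what I'm doing." It is like a type cast in other languages, but it performs no special checking or restructuring of the data. It has no runtime impact and is used only by the compiler. TypeScript assumes that you, the programmer, have already performed any checks you need.

Type assertions have two forms.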

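One is the "angle-bracket" syntax:

```typescript
let someValue: any = "this is a string";

let strLength: number = (<string>someValue).length;
```

The other is the `as` syntax:

```typescript
let someValue: any = "this is a string";

let strLength: number = (someValue as string).length;
```

The mouse-over handler below is written in TypeScript. Because `onMouseOver` is defined in a `.tsx` file, the handler receives a `React.MouseEvent`:

```tsx
// Class property inside a React component
onMouseOver = (e: React.MouseEvent) => {
  // `as HTMLElement` asserts that the event target is an HTMLElement;
  // the EventTarget type by itself has no getAttribute method.
  const $target = e.target as HTMLElement;
  // getAttribute returns string | null; `as string` asserts that the attribute exists.
  const ref: string = $target.getAttribute('ref') as string;
  console.log(ref);
};
```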

Source: https://www.tslang.cn/docs/handbook/classes.html

aiohttp.client_exceptions.ContentTypeError: 0:json(content_type=‘??‘)

aiohttp.client_exceptions

- Problem
- Solution

Problem

```python
# Asynchronous request (this excerpt runs inside an async function)
async with aiohttp.ClientSession() as session:
    async with session.post(url, headers=headers, data=data) as resp:
        # Write the data; reading the body is asynchronous, so it must be awaited
        csvWriter.writerow(await resp.json())
        print('Library no.', data['p'], 'scraped!')
```

```python
import asyncio

from aiohttp_requests import requests


async def req(URL):
    headers = {
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        "Accept-Language": "zh-CN,zh;q=0.9",
        "Host": "xin.baidu.com",
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36",
    }
    resp = await requests.get(URL, headers=headers, timeout=10, verify_ssl=False)
    resp_text = await resp.json(encoding='utf-8')
    return resp_text


if __name__ == "__main__":
    print("HELLO WORLD")
    URL = "https://xin.baidu.com/detail/basicAjax?pid=xlTM-TogKuTw9PzC-u6VwZxUBuZ5J7WMewmd"
    loop = asyncio.get_event_loop()
    loop.run_until_complete(req(URL))
```

```python
csvWriter.writerow(await resp.json())
```

Running the program above raises `aiohttp.client_exceptions.ContentTypeError`.

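Solution

`ContentTypeError` is a content-type mismatch. `resp.json()` checks the response's `Content-Type` header, which by default must be `application/json`, and raises this exception when the server sends something else, such as `text/html`.

Pass the actual content type and the encoding to `resp.json()`:

```python
resp_text = await resp.json(content_type='text/html', encoding='utf-8')
```

Passing `content_type=None` disables the content-type check entirely.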

Reference: Link

Many thanks!

Example source code for com.amazonaws.event.ProgressEventType

```java
/**
 * Performs the copy of an Amazon S3 object from source bucket to
 * destination bucket as multiple copy part requests. The information about
 * the part to be copied is specified in the request as a byte range
 * (first-last)
 *
 * @throws Exception
 *             Any Exception that occurs while carrying out the request.
 */
private void copyInParts() throws Exception {
    multipartUploadId = initiateMultipartUpload(copyObjectRequest);

    long optimalPartSize = getOptimalPartSize(metadata.getContentLength());

    try {
        CopyPartRequestFactory requestFactory = new CopyPartRequestFactory(
                copyObjectRequest, multipartUploadId, optimalPartSize, metadata.getContentLength());

        copyPartsInParallel(requestFactory);
    } catch (Exception e) {
        publishProgress(listenerChain, ProgressEventType.TRANSFER_FAILED_EVENT);
        abortMultipartCopy();
        throw new RuntimeException("Unable to perform multipart copy", e);
    }
}
```

```java
/**
 * This method is also responsible for firing COMPLETED signal to the
 * listeners.
 */
@Override
public void setState(TransferState state) {
    super.setState(state);

    switch (state) {
        case Completed:
            fireProgressEvent(ProgressEventType.TRANSFER_COMPLETED_EVENT);
            break;
        case Canceled:
            fireProgressEvent(ProgressEventType.TRANSFER_CANCELED_EVENT);
            break;
        case Failed:
            fireProgressEvent(ProgressEventType.TRANSFER_FAILED_EVENT);
            break;
        default:
            break;
    }
}
```

```java
public void progressChanged(ProgressEvent progressEvent) {
    if (progress != null) {
        progress.progress();
    }

    // There are 3 http ops here, but this should be close enough for now
    ProgressEventType pet = progressEvent.getEventType();
    if (pet == TRANSFER_PART_STARTED_EVENT ||
            pet == TRANSFER_COMPLETED_EVENT) {
        statistics.incrementWriteOps(1);
    }

    long transferred = upload.getProgress().getBytesTransferred();
    long delta = transferred - lastBytesTransferred;
    if (statistics != null && delta != 0) {
        statistics.incrementBytesWritten(delta);
    }

    lastBytesTransferred = transferred;
}
```

```java
@Override
public void progressChanged(ProgressEvent event)
{
    if ( event.getEventType() == ProgressEventType.REQUEST_CONTENT_LENGTH_EVENT )
    {
        contentLength = event.getBytes();
        getLog().info("Content size: " + contentLength + " bytes");
    }
    else if ( event.getEventType() == ProgressEventType.REQUEST_BYTE_TRANSFER_EVENT )
    {
        contentSent += event.getBytesTransferred();
        // percentage sent so far, bucketed into tens (0 = 0-9%, 1 = 10-19%, ...)
        double percent = 100.0 * contentSent / contentLength;
        int mod = (int) percent / 10;
        if ( mod > lastTenPct )
        {
            lastTenPct = mod;
            getLog().info("Uploaded " + (mod*10) + "% of " + (contentLength/(1024*1024)) + " MB");
        }
    }
}
```
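The listener above logs only when the upload crosses a new 10% boundary. The sketch below pulls that arithmetic out of the SDK callback so it can be checked on its own. `TenPercentTracker` is a hypothetical name. The constructor argument and the `onBytesTransferred` calls stand in for the values delivered by `REQUEST_CONTENT_LENGTH_EVENT` and `REQUEST_BYTE_TRANSFER_EVENT`.

```java
// Hypothetical helper isolating the "log every 10%" logic of the listener above.
final class TenPercentTracker {
    private final long contentLength;
    private long contentSent;
    private int lastTenPct;

    TenPercentTracker(long contentLength) {
        this.contentLength = contentLength;
    }

    // Adds a chunk of transferred bytes. Returns the new percentage (a multiple of 10)
    // when a new 10% boundary is crossed, or -1 otherwise.
    int onBytesTransferred(long bytes) {
        contentSent += bytes;
        if (contentLength <= 0) {
            return -1; // unknown length: avoid dividing by zero
        }
        int tenths = (int) (contentSent * 10 / contentLength); // integer division = floor for non-negative values
        if (tenths > lastTenPct) {
            lastTenPct = tenths;
            return tenths * 10;
        }
        return -1;
    }
}
```

With a 1000-byte body, a first chunk of 50 bytes (5%) returns -1. A further 60 bytes (11%) returns 10.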

```java
@Bean
public S3ProgressListener s3ProgressListener() {
    return new S3ProgressListener() {

        @Override
        public void onPersistableTransfer(PersistableTransfer persistableTransfer) {
        }

        @Override
        public void progressChanged(ProgressEvent progressEvent) {
            if (ProgressEventType.TRANSFER_COMPLETED_EVENT.equals(progressEvent.getEventType())) {
                transferCompletedLatch().countDown();
            }
        }
    };
}
```

```java
private ProgressListener createProgressListener(Transfer transfer)
{
    return new ProgressListener()
    {
        private ProgressEventType previousType;
        private double previousTransferred;

        @Override
        public synchronized void progressChanged(ProgressEvent progressEvent)
        {
            ProgressEventType eventType = progressEvent.getEventType();
            if (previousType != eventType) {
                log.debug("Upload progress event (%s/%s): %s", host, key, eventType);
                previousType = eventType;
            }

            double transferred = transfer.getProgress().getPercentTransferred();
            if (transferred >= (previousTransferred + 10.0)) {
                log.debug("Upload percentage (%s/%s): %.0f%%", host, key, transferred);
                previousTransferred = transferred;
            }
        }
    };
}
```

```java
@Override
public void progressChanged(ProgressEvent progressEvent) {
    ProgressEventType type = progressEvent.getEventType();
    if (type.equals(TRANSFER_COMPLETED_EVENT) || type.equals(TRANSFER_STARTED_EVENT)) {
        out.println();
    }

    if (type.isByteCountEvent()) {
        long timeLeft = getTimeLeft();
        if (lastTimeLeft < 1 && timeLeft > 0) {
            // prime this value with a sane starting point
            lastTimeLeft = timeLeft;
        }

        // use an exponential moving average to smooth the estimate
        lastTimeLeft += 0.90 * (timeLeft - lastTimeLeft);

        out.print(String.format("\r%1$s %2$s / %3$s %4$s ",
                generate(saturatedCast(round(completed + (progress.getPercentTransferred() * multiplier)))),
                humanReadableByteCount(progress.getBytesTransferred(), true),
                humanReadableByteCount(progress.getTotalBytesToTransfer(), true),
                fromSeconds(lastTimeLeft)));
        out.flush();
    }
}
```
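`lastTimeLeft += 0.90 * (timeLeft - lastTimeLeft)` is an exponential moving average: each new sample gets weight 0.9 and the running estimate keeps 0.1. Below is a stand-alone sketch of the same smoothing. The class name and the `alpha` parameter are assumptions, and the priming rule copies the original.

```java
// Hypothetical stand-alone version of the time-left smoothing used above.
final class TimeLeftSmoother {
    private final double alpha; // weight of the newest sample (0.90 in the listener above)
    private double estimate;    // smoothed seconds remaining

    TimeLeftSmoother(double alpha) {
        this.alpha = alpha;
    }

    double update(double sample) {
        if (estimate < 1 && sample > 0) {
            estimate = sample; // prime with the first sane value, as the original does
        }
        // EMA step: estimate <- estimate + alpha * (sample - estimate)
        estimate += alpha * (sample - estimate);
        return estimate;
    }
}
```

With `alpha = 0.9`, samples of 100 and then 50 give estimates of 100 and 55. A smaller `alpha` smooths more but reacts more slowly to real changes in transfer speed.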

```java
public MessageDigest copyAndHash(InputStream input, long totalBytes, Progress progress)
        throws IOException, CloneNotSupportedException {

    // clone the current digest, such that it remains unchanged in this method
    MessageDigest computedDigest = (MessageDigest) currentDigest.clone();
    byte[] buffer = new byte[DEFAULT_BUF_SIZE];

    long count = 0;
    int n;
    while (-1 != (n = input.read(buffer))) {
        output.write(buffer, 0, n);
        if (progressListener != null) {
            progress.updateProgress(n);
            progressListener.progressChanged(new ProgressEvent(ProgressEventType.RESPONSE_BYTE_TRANSFER_EVENT, n));
        }
        computedDigest.update(buffer, 0, n);
        count += n;
    }

    // verify that at least this many bytes were read
    if (totalBytes != count) {
        throw new IOException(String.format("%d bytes downloaded instead of expected %d bytes", count, totalBytes));
    }
    return computedDigest;
}
```
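The same copy-and-hash pattern can be written with only the JDK. This sketch uses a fresh MD5 digest instead of cloning an existing `currentDigest`, and it leaves out the progress-listener calls. `CopyAndHash` is a hypothetical name. `write` and `update` must be passed the offset and the count `n`, because the last `read` usually fills only part of the buffer.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical JDK-only version: copy a stream, hash it, and verify the byte count.
final class CopyAndHash {
    static byte[] copyAndHash(InputStream input, OutputStream output, long totalBytes) throws IOException {
        MessageDigest digest;
        try {
            digest = MessageDigest.getInstance("MD5");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
        byte[] buffer = new byte[8192];
        long count = 0;
        int n;
        while ((n = input.read(buffer)) != -1) {
            output.write(buffer, 0, n);  // write only the n bytes actually read
            digest.update(buffer, 0, n); // hash exactly the same bytes
            count += n;
        }
        if (count != totalBytes) {
            throw new IOException(String.format("%d bytes downloaded instead of expected %d bytes", count, totalBytes));
        }
        return digest.digest();
    }
}
```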

```java
public MessageDigest copyAndHash(InputStream input /* , ... (signature truncated in the source) */) {
    // ... (copy loop truncated in the source) ...
    if (progressListener != null) {
        progress.updateProgress(n);
        progressListener.progressChanged(new ProgressEvent(ProgressEventType.REQUEST_BYTE_TRANSFER_EVENT, totalBytes));
    }
    return computedDigest;
}
```

```java
@Override
public void progressChanged(ProgressEvent progressEvent) {
    if (progressEvent.getEventType() == ProgressEventType.REQUEST_CONTENT_LENGTH_EVENT) {
        partSize = progressEvent.getBytes();
        ArchiveUploadHighLevel.this.log.info("Part size: " + partSize);
    }

    if (progressEvent.getEventType() == ProgressEventType.CLIENT_REQUEST_SUCCESS_EVENT) {
        counter += partSize;
        int percentage = (int) (counter * 100.0 / total);
        ArchiveUploadHighLevel.this.log.info("Successfully transferred: " + counter + " / " + total + " (" + percentage + "%)");
    }
}
```

```java
private Response<Output> doExecute() throws InterruptedException {
    runBeforeRequestHandlers();
    setSdkTransactionId(request);
    setUserAgent(request);

    ProgressListener listener = requestConfig.getProgressListener();
    // add custom headers
    request.getHeaders().putAll(config.getHeaders());
    request.getHeaders().putAll(requestConfig.getCustomRequestHeaders());
    // add custom query parameters
    mergeQueryParameters(requestConfig.getCustomQueryParameters());
    Response<Output> response = null;
    final InputStream origContent = request.getContent();
    final InputStream toBeClosed = beforeRequest(); // for progress tracking
    // make "notCloseable", so reset would work with retries
    final InputStream notCloseable = (toBeClosed == null) ? null
            : ReleasableInputStream.wrap(toBeClosed).disableClose();
    request.setContent(notCloseable);
    try {
        publishProgress(listener, ProgressEventType.CLIENT_REQUEST_STARTED_EVENT);
        response = executeHelper();
        publishProgress(listener, ProgressEventType.CLIENT_REQUEST_SUCCESS_EVENT);
        awsRequestMetrics.getTimingInfo().endTiming();
        afterResponse(response);
        return response;
    } catch (AmazonClientException e) {
        publishProgress(listener, ProgressEventType.CLIENT_REQUEST_FAILED_EVENT);
        afterError(response, e);
        throw e;
    } finally {
        // Always close so any progress tracking would get the final events propagated.
        closeQuietly(toBeClosed, log);
        request.setContent(origContent); // restore the original content
    }
}
```
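`doExecute()` publishes `CLIENT_REQUEST_STARTED_EVENT` before the call and then exactly one of `CLIENT_REQUEST_SUCCESS_EVENT` or `CLIENT_REQUEST_FAILED_EVENT`. The sketch below reproduces that shape. A hypothetical `RequestEvent` enum and a plain `Consumer` stand in for the SDK's `ProgressEventType`, `ProgressListener` and `publishProgress`. Unlike the original, it catches any `RuntimeException` rather than only `AmazonClientException`.

```java
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical stand-in for the three request-lifecycle event types.
enum RequestEvent { CLIENT_REQUEST_STARTED, CLIENT_REQUEST_SUCCESS, CLIENT_REQUEST_FAILED }

final class RequestLifecycle {
    // Runs the call and publishes STARTED, then SUCCESS or FAILED, mirroring doExecute().
    static <T> T execute(Supplier<T> call, Consumer<RequestEvent> listener) {
        listener.accept(RequestEvent.CLIENT_REQUEST_STARTED);
        try {
            T result = call.get();
            listener.accept(RequestEvent.CLIENT_REQUEST_SUCCESS);
            return result;
        } catch (RuntimeException e) {
            listener.accept(RequestEvent.CLIENT_REQUEST_FAILED);
            throw e; // rethrow so the caller still sees the error
        }
    }
}
```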

```java
/**
 * Pause before the next retry and record metrics around retry behavior.
 */
private void pauseBeforeRetry(ExecOneRequestParams execOneParams, final ProgressListener listener) throws InterruptedException {
    // Notify the progress listener of the retry
    publishProgress(listener, ProgressEventType.CLIENT_REQUEST_RETRY_EVENT);
    awsRequestMetrics.startEvent(Field.RetryPauseTime);
    try {
        doPauseBeforeRetry(execOneParams);
    } finally {
        awsRequestMetrics.endEvent(Field.RetryPauseTime);
    }
}
```

```java
@Override
public void progressChanged(ProgressEvent progressEvent) {
    ProgressEventType type = progressEvent.getEventType();
    if (type.isByteCountEvent())
        return;
    if (type != types[count]) {
        throw new AssertionError("Expect event type "
                + types[count] + " but got "
                + progressEvent.getEventType());
    }
    count++;
}
```
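The verifying listener above ignores byte-count events and requires every other event to match the expected sequence in order. Below is a self-contained sketch of the same check, with a hypothetical `EventKind` enum standing in for `ProgressEventType`. It also guards against more events arriving than were expected; the original would report that case as an `ArrayIndexOutOfBoundsException`.

```java
// Hypothetical stand-in for a few ProgressEventType values.
enum EventKind {
    TRANSFER_STARTED, REQUEST_BYTE_TRANSFER, TRANSFER_PART_COMPLETED, TRANSFER_COMPLETED;

    boolean isByteCountEvent() {
        return this == REQUEST_BYTE_TRANSFER;
    }
}

// Checks that non-byte-count events arrive in exactly the expected order.
final class OrderedEventVerifier {
    private final EventKind[] expected;
    private int count;

    OrderedEventVerifier(EventKind... expected) {
        this.expected = expected.clone(); // defensive copy, as in the original constructor
    }

    void onEvent(EventKind type) {
        if (type.isByteCountEvent()) {
            return; // byte-count events are ignored, like in the original listener
        }
        if (count >= expected.length || type != expected[count]) {
            Object want = count < expected.length ? expected[count] : "<no more events>";
            throw new AssertionError("Expect event type " + want + " but got " + type);
        }
        count++;
    }

    boolean sawAll() {
        return count == expected.length;
    }
}
```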

```java
@Override
public ProgressEvent filter(ProgressEvent progressEvent) {
    // Block COMPLETE events from the low-level GetObject operation,
    // but we still want to keep the BytesTransferred
    return progressEvent.getEventType() == ProgressEventType.TRANSFER_COMPLETED_EVENT
            ? null // discard this event
            : progressEvent;
}
```

```java
public CopyResult call() throws Exception {
    copy.setState(TransferState.InProgress);
    if (isMultipartCopy()) {
        publishProgress(listenerChain, ProgressEventType.TRANSFER_STARTED_EVENT);
        copyInParts();
        return null;
    } else {
        return copyInOneChunk();
    }
}
```

```java
/**
 * Override this method so that TransferState updates are also sent out to the
 * progress listener chain in forms of ProgressEvent.
 */
@Override
public void setState(TransferState state) {
    super.setState(state);

    switch (state) {
        case Waiting:
            fireProgressEvent(ProgressEventType.TRANSFER_PREPARING_EVENT);
            break;
        case InProgress:
            if (subTransferStarted.compareAndSet(false, true)) {
                /* The first InProgress signal */
                fireProgressEvent(ProgressEventType.TRANSFER_STARTED_EVENT);
            }
            /* Don't need any event code update for subsequent InProgress signals */
            break;
        case Completed:
            fireProgressEvent(ProgressEventType.TRANSFER_COMPLETED_EVENT);
            break;
        case Canceled:
            fireProgressEvent(ProgressEventType.TRANSFER_CANCELED_EVENT);
            break;
        case Failed:
            fireProgressEvent(ProgressEventType.TRANSFER_FAILED_EVENT);
            break;
        default:
            break;
    }
}
```
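In `setState` above, `compareAndSet(false, true)` ensures that only the first `InProgress` transition emits `TRANSFER_STARTED_EVENT`. Below is a self-contained sketch of that mapping. Hypothetical enums replace `TransferState` and `ProgressEventType`, and a list that records the fired events replaces the listener chain. The list is not thread-safe, so the sketch is meant for single-threaded use.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical stand-ins for TransferState and the matching ProgressEventType values.
enum TransferStateSketch { Waiting, InProgress, Completed, Canceled, Failed }

enum TransferEventSketch { TRANSFER_PREPARING, TRANSFER_STARTED, TRANSFER_COMPLETED, TRANSFER_CANCELED, TRANSFER_FAILED }

final class StateEventMapper {
    private final AtomicBoolean started = new AtomicBoolean(false);
    private final List<TransferEventSketch> fired = new ArrayList<>();

    void setState(TransferStateSketch state) {
        switch (state) {
            case Waiting:
                fired.add(TransferEventSketch.TRANSFER_PREPARING);
                break;
            case InProgress:
                // only the first InProgress transition produces TRANSFER_STARTED
                if (started.compareAndSet(false, true)) {
                    fired.add(TransferEventSketch.TRANSFER_STARTED);
                }
                break;
            case Completed:
                fired.add(TransferEventSketch.TRANSFER_COMPLETED);
                break;
            case Canceled:
                fired.add(TransferEventSketch.TRANSFER_CANCELED);
                break;
            case Failed:
                fired.add(TransferEventSketch.TRANSFER_FAILED);
                break;
        }
    }

    List<TransferEventSketch> firedEvents() {
        return new ArrayList<>(fired);
    }
}
```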

```java
@Override
public UploadResult call() throws Exception {
    CompleteMultipartUploadResult res;

    try {
        CompleteMultipartUploadRequest req = new CompleteMultipartUploadRequest(
                origReq.getBucketName(), origReq.getKey(), uploadId, collectPartETags())
                .withRequesterPays(origReq.isRequesterPays())
                .withGeneralProgressListener(origReq.getGeneralProgressListener())
                .withRequestMetricCollector(origReq.getRequestMetricCollector());
        res = s3.completeMultipartUpload(req);
    } catch (Exception e) {
        publishProgress(listener, ProgressEventType.TRANSFER_FAILED_EVENT);
        throw e;
    }

    UploadResult uploadResult = new UploadResult();
    uploadResult.setBucketName(origReq.getBucketName());
    uploadResult.setKey(origReq.getKey());
    uploadResult.setETag(res.getETag());
    uploadResult.setVersionId(res.getVersionId());

    monitor.uploadComplete();

    return uploadResult;
}
```

```java
void copyComplete() {
    markAllDone();
    transfer.setState(TransferState.Completed);

    // AmazonS3Client takes care of all the events for single part uploads,
    // so we only need to send a completed event for multipart uploads.
    if (multipartCopyCallable.isMultipartCopy()) {
        publishProgress(listener, ProgressEventType.TRANSFER_COMPLETED_EVENT);
    }
}
```

```java
void uploadComplete() {
    markAllDone();
    transfer.setState(TransferState.Completed);

    // AmazonS3Client takes care of all the events for single part uploads,
    // so we only need to send a completed event for multipart uploads.
    if (multipartUploadCallable.isMultipartUpload()) {
        publishProgress(listener, ProgressEventType.TRANSFER_COMPLETED_EVENT);
    }
}
```

```java
public UploadResult call() throws Exception {
    upload.setState(TransferState.InProgress);
    if (isMultipartUpload()) {
        publishProgress(listener, ProgressEventType.TRANSFER_STARTED_EVENT);
        return uploadInParts();
    } else {
        return uploadInOneChunk();
    }
}
```

```java
@Override
public CopyResult call() throws Exception {
    CompleteMultipartUploadResult res;

    try {
        CompleteMultipartUploadRequest req = new CompleteMultipartUploadRequest(
                origReq.getDestinationBucketName(), origReq.getDestinationKey(), uploadId, collectPartETags())
                .withRequesterPays(origReq.isRequesterPays())
                .withGeneralProgressListener(origReq.getGeneralProgressListener())
                .withRequestMetricCollector(origReq.getRequestMetricCollector());
        res = s3.completeMultipartUpload(req);
    } catch (Exception e) {
        publishProgress(listener, ProgressEventType.TRANSFER_FAILED_EVENT);
        throw e;
    }

    CopyResult copyResult = new CopyResult();
    copyResult.setSourceBucketName(origReq.getSourceBucketName());
    copyResult.setSourceKey(origReq.getSourceKey());
    copyResult.setDestinationBucketName(res.getBucketName());
    copyResult.setDestinationKey(res.getKey());
    copyResult.setETag(res.getETag());
    copyResult.setVersionId(res.getVersionId());

    monitor.copyComplete();

    return copyResult;
}
```

```java
@Override
public F.Promise<Void> store(Path path, String key, String name) {
    Promise<Void> promise = Futures.promise();

    TransferManager transferManager = new TransferManager(credentials);
    try {
        Upload upload = transferManager.upload(bucketName, key, path.toFile());
        upload.addProgressListener((ProgressListener) progressEvent -> {
            if (progressEvent.getEventType().isTransferEvent()) {
                if (progressEvent.getEventType().equals(ProgressEventType.TRANSFER_COMPLETED_EVENT)) {
                    transferManager.shutdownNow();
                    promise.success(null);
                } else if (progressEvent.getEventType().equals(ProgressEventType.TRANSFER_FAILED_EVENT)) {
                    transferManager.shutdownNow();
                    logger.error(progressEvent.toString());
                    promise.failure(new Exception(progressEvent.toString()));
                }
            }
        });
    } catch (AmazonServiceException ase) {
        logAmazonServiceException(ase);
    } catch (AmazonClientException ace) {
        logAmazonClientException(ace);
    }

    return F.Promise.wrap(promise.future());
}
```

```java
@Override
public F.Promise<Void> delete(String key, String name) {
    Promise<Void> promise = Futures.promise();

    AmazonS3 amazonS3 = new AmazonS3Client(credentials);
    DeleteObjectRequest request = new DeleteObjectRequest(bucketName, key);
    request.withGeneralProgressListener(progressEvent -> {
        if (progressEvent.getEventType().isTransferEvent()) {
            if (progressEvent.getEventType().equals(ProgressEventType.TRANSFER_COMPLETED_EVENT)) {
                promise.success(null);
            } else if (progressEvent.getEventType().equals(ProgressEventType.TRANSFER_FAILED_EVENT)) {
                logger.error(progressEvent.toString());
                promise.failure(new Exception(progressEvent.toString()));
            }
        }
    });

    try {
        amazonS3.deleteObject(request);
    } catch (AmazonServiceException ase) {
        logAmazonServiceException(ase);
    } catch (AmazonClientException ace) {
        logAmazonClientException(ace);
    }

    return F.Promise.wrap(promise.future());
}
```
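Both methods bridge SDK progress events to a Play `Promise`: the promise succeeds on `TRANSFER_COMPLETED_EVENT` and fails on `TRANSFER_FAILED_EVENT`. The sketch below shows the same idea with `java.util.concurrent.CompletableFuture` in place of Play's promise. `TransferFutureAdapter` and the `TransferEvent` enum are hypothetical stand-ins for the listener and `ProgressEventType`.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical subset of transfer event types (stand-in for ProgressEventType).
enum TransferEvent { TRANSFER_STARTED, TRANSFER_COMPLETED, TRANSFER_FAILED, BYTE_TRANSFER }

// Completes a future when a terminal transfer event arrives.
final class TransferFutureAdapter {
    final CompletableFuture<Void> future = new CompletableFuture<>();

    void progressChanged(TransferEvent event) {
        switch (event) {
            case TRANSFER_COMPLETED:
                future.complete(null);
                break;
            case TRANSFER_FAILED:
                future.completeExceptionally(new Exception("transfer failed"));
                break;
            default:
                break; // started and byte-count events do not finish the future
        }
    }
}
```

`CompletableFuture` ignores later completion attempts once it is done, so a stray second terminal event cannot flip the result.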

```java
@Override
public Integer call() throws Exception {
    // this is the most up to date digest, it's initialized here but later holds the most up to date valid digest
    currentDigest = MessageDigest.getInstance("MD5");
    currentDigest = retryingGet();

    if (progressListener != null) {
        progressListener.progressChanged(new ProgressEvent(ProgressEventType.TRANSFER_STARTED_EVENT));
    }

    if (!fullETag.contains("-")) {
        byte[] expected = BinaryUtils.fromHex(fullETag);
        byte[] current = currentDigest.digest();
        if (!Arrays.equals(expected, current)) {
            throw new AmazonClientException("Unable to verify integrity of data download. "
                    + "Client calculated content hash didn't match hash calculated by Amazon S3. "
                    + "The data may be corrupt.");
        }
    } else {
        // TODO log warning that we can't validate the MD5
        // TODO implement this algorithm for several common chunk sizes http://stackoverflow.com/questions/6591047/etag-deFinition-changed-in-amazon-s3
        if (verbose) {
            System.err.println("\nMD5 does not exist on AWS for file, calculated value: " + BinaryUtils.toHex(currentDigest.digest()));
        }
    }
    // TODO add ability to resume from previously downloaded chunks
    // TODO add rate limiter

    return 0;
}
```
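The check above relies on the ETag of a single-part upload being the hex MD5 of the object. Multipart ETags contain a `-` and are not a plain MD5. Objects encrypted with SSE-KMS or SSE-C also have ETags that are not an MD5, and this sketch does not detect that case. Below is a JDK-only sketch. `ETagCheck` is hypothetical, the hex decoder stands in for `BinaryUtils.fromHex`, and the ETag is assumed to carry no surrounding quotes.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.Optional;

final class ETagCheck {
    // Empty result: the ETag is a multipart ETag and cannot be compared with a plain MD5.
    static Optional<Boolean> matchesMd5(String eTag, byte[] content) {
        if (eTag.contains("-")) {
            return Optional.empty();
        }
        try {
            byte[] actual = MessageDigest.getInstance("MD5").digest(content);
            return Optional.of(Arrays.equals(hexToBytes(eTag), actual));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
    }

    // Minimal hex decoder; expects an even number of hex digits.
    static byte[] hexToBytes(String hex) {
        byte[] out = new byte[hex.length() / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = (byte) Integer.parseInt(hex.substring(2 * i, 2 * i + 2), 16);
        }
        return out;
    }
}
```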

```java
@Override
public void progressChanged(ProgressEvent progressEvent) {
    if (progressEvent.getEventType() == ProgressEventType.CLIENT_REQUEST_FAILED_EVENT) {
        logger.warn(progressEvent.toString());
    }
    logger.info(progressEvent.toString());
}
```

```java
public ProgressListenerWithEventCodeVerification(ProgressEventType... types) {
    this.types = types.clone();
}
```

```java
private UploadPartResult doUploadPart(final String bucketName,
        final String key, final String uploadId, final int partNumber,
        final long partSize, Request<UploadPartRequest> request,
        InputStream inputStream,
        MD5DigestCalculatingInputStream md5DigestStream,
        final ProgressListener listener) {
    try {
        request.setContent(inputStream);
        ObjectMetadata metadata = invoke(request, new S3MetadataResponseHandler(), bucketName, key);
        final String etag = metadata.getETag();

        if (md5DigestStream != null
                && !skipMd5CheckStrategy.skipClientSideValidationPerUploadPartResponse(metadata)) {
            byte[] clientSideHash = md5DigestStream.getMd5Digest();
            byte[] serverSideHash = BinaryUtils.fromHex(etag);

            if (!Arrays.equals(clientSideHash, serverSideHash)) {
                final String info = "bucketName: " + bucketName + ", key: "
                        + key + ", uploadId: " + uploadId
                        + ", partNumber: " + partNumber + ", partSize: "
                        + partSize;
                throw new SdkClientException(
                        "Unable to verify integrity of data upload. "
                        + "Client calculated content hash (contentMD5: "
                        + Base16.encodeAsString(clientSideHash)
                        + " in hex) didn't match hash (etag: "
                        + etag
                        + " in hex) calculated by Amazon S3. "
                        + "You may need to delete the data stored in Amazon S3. "
                        + "(" + info + ")");
            }
        }
        publishProgress(listener, ProgressEventType.TRANSFER_PART_COMPLETED_EVENT);
        UploadPartResult result = new UploadPartResult();
        result.setETag(etag);
        result.setPartNumber(partNumber);
        result.setSSEAlgorithm(metadata.getSSEAlgorithm());
        result.setSSECustomerAlgorithm(metadata.getSSECustomerAlgorithm());
        result.setSSECustomerKeyMd5(metadata.getSSECustomerKeyMd5());
        result.setRequesterCharged(metadata.isRequesterCharged());
        return result;
    } catch (Throwable t) {
        publishProgress(listener, ProgressEventType.TRANSFER_PART_FAILED_EVENT);
        // Leaving this here in case anyone is depending on it, but it's
        // inconsistent with other methods which only generate one of
        // COMPLETED_EVENT_CODE or FAILED_EVENT_CODE.
        publishProgress(listener, ProgressEventType.TRANSFER_PART_COMPLETED_EVENT);
        throw failure(t);
    }
}
```

```java
protected void fireProgressEvent(final ProgressEventType eventType) {
    publishProgress(listenerChain, eventType);
}
```

```java
/**
 * Cancels all the futures associated with this upload operation. Also
 * cleans up the parts on Amazon S3 if the upload is performed as a
 * multi-part upload operation.
 */
void performAbort() {
    cancelFutures();
    multipartUploadCallable.performAbortMultipartUpload();
    publishProgress(listener, ProgressEventType.TRANSFER_CANCELED_EVENT);
}
```

```java
public ProgressEvent(long bytesTransferred) {
    super(ProgressEventType.BYTE_TRANSFER_EVENT, bytesTransferred);
}

public ProgressEvent(ProgressEventType eventType) {
    super(eventType);
}
```

```java
private void downloadParseAndCreateStats(AWSCostStatsCreationContext statsData, String awsBucketName) throws IOException {
    try {
        // Creating a working directory for downloading and processing the bill
        final Path workingDirPath = Paths.get(System.getProperty(TEMP_DIR_LOCATION), UUID.randomUUID().toString());
        Files.createDirectories(workingDirPath);

        AWSCsvBillParser parser = new AWSCsvBillParser();
        final String csvBillZipFileName = parser
                .getCsvBillFileName(statsData.billMonthToDownload, statsData.accountId, true);

        Path csvBillZipFilePath = Paths.get(workingDirPath.toString(), csvBillZipFileName);
        ProgressListener listener = new ProgressListener() {
            @Override
            public void progressChanged(ProgressEvent progressEvent) {
                try {
                    ProgressEventType eventType = progressEvent.getEventType();
                    if (ProgressEventType.TRANSFER_COMPLETED_EVENT.equals(eventType)) {
                        OperationContext.restoreOperationContext(statsData.opContext);
                        LocalDate billMonth = new LocalDate(
                                statsData.billMonthToDownload.getYear(), statsData.billMonthToDownload.getMonthOfYear(), 1);
                        logWithContext(statsData, Level.INFO, () -> String.format("Processing" +
                                " bill for the month: %s.", billMonth));

                        parser.parseDetailedCsvBill(statsData.ignorableInvoiceCharge, csvBillZipFilePath,
                                statsData.awsAccountIdToComputeStates.keySet(),
                                getHourlyStatsConsumer(billMonth, statsData),
                                getMonthlyStatsConsumer(billMonth, statsData));
                        deleteTempFiles();
                        // Continue downloading and processing the bills for past and current months' bills
                        statsData.billMonthToDownload = statsData.billMonthToDownload.plusMonths(1);
                        handleCostStatsCreationRequest(statsData);
                    } else if (ProgressEventType.TRANSFER_FAILED_EVENT.equals(eventType)) {
                        deleteTempFiles();
                        billDownloadFailureHandler(statsData, awsBucketName, new IOException(
                                "Download of AWS CSV Bill '" + csvBillZipFileName + "' failed."));
                    }
                } catch (Exception exception) {
                    deleteTempFiles();
                    billDownloadFailureHandler(statsData, exception);
                }
            }

            private void deleteTempFiles() {
                try {
                    Files.deleteIfExists(csvBillZipFilePath);
                    Files.deleteIfExists(workingDirPath);
                } catch (IOException e) {
                    // Ignore IO exception while cleaning files.
                }
            }
        };
        GetObjectRequest getObjectRequest = new GetObjectRequest(awsBucketName, csvBillZipFileName)
                .withGeneralProgressListener(listener);
        statsData.s3Client.download(getObjectRequest, csvBillZipFilePath.toFile());
    } catch (AmazonS3Exception s3Exception) {
        billDownloadFailureHandler(statsData, s3Exception);
    }
}
```

@Test

@Override

public void test() throws Exception {

AmazonS3 amazonS3Client = TestUtils.getPropertyValue(this.s3MessageHandler, "transferManager.s3", AmazonS3.class);

File file = this.temporaryFolder.newFile("foo.mp3");

Message<?> message = MessageBuilder.withPayload(file)

.build();

this.channels.input().send(message);

ArgumentCaptor<PutObjectRequest> putObjectRequestArgumentCaptor =

ArgumentCaptor.forClass(PutObjectRequest.class);

verify(amazonS3Client, atLeastOnce()).putObject(putObjectRequestArgumentCaptor.capture());

PutObjectRequest putObjectRequest = putObjectRequestArgumentCaptor.getValue();

assertThat(putObjectRequest.getBucketName(), equalTo(S3_BUCKET));

assertThat(putObjectRequest.getKey(), equalTo("foo.mp3"));

assertNotNull(putObjectRequest.getFile());

assertNull(putObjectRequest.getInputStream());

ObjectMetadata metadata = putObjectRequest.getMetadata();

assertThat(metadata.getContentMD5(), equalTo(Md5Utils.md5AsBase64(file)));

assertThat(metadata.getContentLength(), equalTo(0L));

assertThat(metadata.getContentType(), equalTo("audio/mpeg"));

ProgressListener listener = putObjectRequest.getGeneralProgressListener();

S3ProgressPublisher.publishProgress(listener, ProgressEventType.TRANSFER_COMPLETED_EVENT);

assertTrue(this.transferCompletedLatch.await(10, TimeUnit.SECONDS));

assertTrue(this.aclLatch.await(10, TimeUnit.SECONDS));

ArgumentCaptor<SetObjectAclRequest> setObjectAclRequestArgumentCaptor =

ArgumentCaptor.forClass(SetObjectAclRequest.class);

verify(amazonS3Client).setObjectAcl(setObjectAclRequestArgumentCaptor.capture());

SetObjectAclRequest setObjectAclRequest = setObjectAclRequestArgumentCaptor.getValue();

assertThat(setObjectAclRequest.getBucketName(), equalTo(S3_BUCKET));

assertThat(setObjectAclRequest.getKey(), equalTo("foo.mp3"));

assertNull(setObjectAclRequest.getAcl());

assertThat(setObjectAclRequest.getCannedAcl(), equalTo(CannedAccessControlList.PublicReadWrite));

}
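The test above checks `getContentMD5()` against `Md5Utils.md5AsBase64(file)`, that is, the Base64 encoding of the file's raw 16-byte MD5 digest (not of its hex string). A JDK-only sketch of the same computation, handy when the AWS SDK's `Md5Utils` is not on the classpath; `ContentMd5` is a hypothetical name:

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

// Hypothetical JDK-only equivalent of a "Base64 of raw MD5 digest" helper.
final class ContentMd5 {

    private ContentMd5() {}

    static String md5AsBase64(byte[] data) {
        return Base64.getEncoder().encodeToString(md5().digest(data));
    }

    static String md5AsBase64(Path file) throws IOException {
        MessageDigest md = md5();
        byte[] buffer = new byte[8192];
        try (InputStream in = Files.newInputStream(file)) {
            int n;
            while ((n = in.read(buffer)) != -1) {
                md.update(buffer, 0, n); // Stream the file instead of loading it into memory.
            }
        }
        return Base64.getEncoder().encodeToString(md.digest());
    }

    private static MessageDigest md5() {
        try {
            return MessageDigest.getInstance("MD5");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is required on every Java platform", e);
        }
    }
}
```

For the empty `foo.mp3` created by the test, this yields `1B2M2Y8AsgTpgAmY7PhCfg==`, the Base64 form of the MD5 of zero bytes (`d41d8cd98f00b204e9800998ecf8427e`).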

@Override

public Integer call() throws Exception {

ObjectMetadata om = amazonS3Client.getObjectMetadata(bucket, key);

contentLength = om.getContentLength();

// This is the most up-to-date digest; it is initialized here but later holds the most recent valid digest.

currentDigest = MessageDigest.getInstance("MD5");

chunkSize = chunkSize == null ? DEFAULT_CHUNK_SIZE : chunkSize;

fileParts = Parts.among(contentLength, chunkSize);

for (Part fp : fileParts) {

/*

* We'll need to compute the digest on the full incoming stream for

* each valid chunk that comes in. Invalid chunks will need to be

* recomputed and fed through a copy of the MD5 that was valid up

* until the latest chunk.

*/

currentDigest = retryingGetWithRange(fp.start, fp.end);

}

// TODO: fix the total content length progress bar

if(progressListener != null) {

progressListener.progressChanged(new ProgressEvent(ProgressEventType.TRANSFER_STARTED_EVENT));

}

String fullETag = om.getETag();

if (!fullETag.contains("-")) {

byte[] expected = BinaryUtils.fromHex(fullETag);

byte[] current = currentDigest.digest();

if (!Arrays.equals(expected,current)) {

throw new AmazonClientException("Unable to verify integrity of data download. "

+ "Client calculated content hash didn't match hash calculated by Amazon S3. "

+ "The data may be corrupt.");

}

} else {

// TODO: log a warning that the MD5 cannot be validated

if(verbose) {

System.err.println("\nMD5 does not exist on AWS for file, calculated value: " + BinaryUtils.toHex(currentDigest.digest()));

}

}

// TODO: add the ability to resume from previously downloaded chunks

// TODO: add a rate limiter

return 0;

}
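`call()` feeds every downloaded range into one running `MessageDigest` and, when the object's ETag contains no `-`, compares the result with the hex-decoded ETag. An ETag containing `-` comes from a multipart upload and is not a plain MD5 of the content, which is why the snippet can only warn in that case. (Objects encrypted with SSE-KMS or SSE-C also do not have an MD5 ETag.) A JDK-only sketch of that check, assuming Java 17+ for `java.util.HexFormat`; `EtagCheck` and its methods are hypothetical names, and the ranges are plain byte arrays rather than S3 responses:

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;
import java.util.HexFormat;
import java.util.List;
import java.util.Optional;

// Hypothetical sketch: verify a chunked download against a single-part S3 ETag.
final class EtagCheck {

    private EtagCheck() {}

    /** Feeds each chunk, in order, into one running MD5 digest. */
    static byte[] md5OfChunks(List<byte[]> chunks) throws NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("MD5");
        for (byte[] chunk : chunks) {
            md.update(chunk);
        }
        return md.digest();
    }

    /**
     * Returns whether a plain-MD5 ETag matches, or empty when the ETag looks like a
     * multipart ETag ("<hex>-<parts>") and cannot be compared directly.
     */
    static Optional<Boolean> matches(String eTag, byte[] actualMd5) {
        String bare = eTag.replace("\"", ""); // Tolerate a quoted header value.
        if (bare.contains("-")) {
            return Optional.empty();
        }
        byte[] expected = HexFormat.of().parseHex(bare);
        return Optional.of(Arrays.equals(expected, actualMd5));
    }
}
```

Because MD5 is computed incrementally, digesting the ranges in order gives the same result as digesting the whole object at once; a chunk that fails and is retried must therefore be fed through a copy of the digest state from before that chunk, as the comment in `call()` describes.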

private void processReceivedEvent(ProgressEvent progressEvent) {

ProgressEventType eventType = progressEvent.getEventType();

long bytesTransferred = progressEvent.getBytesTransferred();

switch(eventType) {

case REQUEST_BYTE_TRANSFER_EVENT:

done += bytesTransferred;

chunk -= bytesTransferred;

if (chunk<=0) {

logger.info(String.format("Sent %s of %s bytes", done, total));

chunk = total / CHUNK;

}

break;

case TRANSFER_COMPLETED_EVENT:

logger.info("Transfer finished");

break;

case TRANSFER_FAILED_EVENT:

logger.error("Transfer failed");

break;

case TRANSFER_STARTED_EVENT:

done = 0;

logger.info("Transfer started");

break;

case REQUEST_CONTENT_LENGTH_EVENT:

total = progressEvent.getBytes();

chunk = total / CHUNK;

logger.info("Length is " + progressEvent.getBytes());

break;

case CLIENT_REQUEST_STARTED_EVENT:

case HTTP_REQUEST_STARTED_EVENT:

case HTTP_REQUEST_COMPLETED_EVENT:

case HTTP_RESPONSE_STARTED_EVENT:

case HTTP_RESPONSE_COMPLETED_EVENT:

case CLIENT_REQUEST_SUCCESS_EVENT:

// no-op

break;

default:

logger.debug("Transfer event " + progressEvent.getEventType() + ", transferred bytes: " + bytesTransferred);

break;

}

}
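`processReceivedEvent` accumulates `REQUEST_BYTE_TRANSFER_EVENT` byte counts and logs roughly every `total / CHUNK` bytes once `REQUEST_CONTENT_LENGTH_EVENT` has supplied the total. If `total` is smaller than `CHUNK`, `total / CHUNK` is 0 and every byte event is logged. A dependency-free sketch of the same bookkeeping, with a hypothetical `Kind` enum standing in for the subset of `ProgressEventType` used here and log lines collected in a list instead of a logger:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the ProgressEventType constants used by processReceivedEvent.
enum Kind { TRANSFER_STARTED, REQUEST_CONTENT_LENGTH, REQUEST_BYTE_TRANSFER, TRANSFER_COMPLETED, TRANSFER_FAILED }

final class ProgressTracker {

    private final int steps;   // Plays the role of CHUNK: roughly how many progress lines per transfer.
    private long total;        // Expected content length.
    private long done;         // Bytes transferred so far.
    private long untilNextLog; // Bytes left before the next progress line.
    final List<String> log = new ArrayList<>();

    ProgressTracker(int steps) {
        this.steps = steps;
    }

    void onEvent(Kind kind, long bytes) {
        switch (kind) {
            case TRANSFER_STARTED:
                done = 0;
                log.add("Transfer started");
                break;
            case REQUEST_CONTENT_LENGTH:
                total = bytes;
                untilNextLog = total / steps;
                log.add("Length is " + total);
                break;
            case REQUEST_BYTE_TRANSFER:
                done += bytes;
                untilNextLog -= bytes;
                if (untilNextLog <= 0) {
                    log.add(String.format("Sent %d of %d bytes", done, total));
                    untilNextLog = total / steps; // Reset without carrying over the overshoot.
                }
                break;
            case TRANSFER_COMPLETED:
                log.add("Transfer finished");
                break;
            case TRANSFER_FAILED:
                log.add("Transfer failed");
                break;
        }
    }
}
```

With `steps = 4`, a 100-byte length, and ten 10-byte transfers, the progress lines land at 30, 60, and 90 bytes: like the original, the counter is reset to `total / steps` rather than reduced by the overshoot.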

Example source code for com.facebook.react.uimanager.events.TouchEventType

private void dispatchCancelEvent(MotionEvent androidEvent, EventDispatcher eventDispatcher) {

// This means the gesture has already ended, via some other CANCEL or UP event. This is not

// expected to happen very often as it would mean some child View has decided to intercept the

// touch stream and start a native gesture only upon receiving the UP/CANCEL event.

if (mTargetTag == -1) {

FLog.w(

ReactConstants.TAG, "Can't cancel already finished gesture. Is a child View trying to start a gesture from " +

"an UP/CANCEL event?");

return;

}

Assertions.assertCondition(

!mChildIsHandlingNativeGesture, "Expected to not have already sent a cancel for this gesture");

Assertions.assertNotNull(eventDispatcher).dispatchEvent(

TouchEvent.obtain(

mTargetTag, TouchEventType.CANCEL, androidEvent, mGestureStartTime, mTargetCoordinates[0], mTargetCoordinates[1], mTouchEventCoalescingKeyHelper));

}

private void dispatchCancelEvent(MotionEvent androidEvent) {

// This means the gesture has already ended, via some other CANCEL or UP event. This is not

// expected to happen very often as it would mean some child View has decided to intercept the

// touch stream and start a native gesture only upon receiving the UP/CANCEL event.

if (mTargetTag == -1) {

FLog.w(

ReactConstants.TAG, "Can't cancel already finished gesture. Is a child View trying to start a gesture from " +

"an UP/CANCEL event?");

return;

}

EventDispatcher eventDispatcher = mReactInstanceManager.getCurrentReactContext()

.getNativeModule(UIManagerModule.class)

.getEventDispatcher();

Assertions.assertCondition(

!mChildIsHandlingNativeGesture, "Expected to not have already sent a cancel for this gesture");

Assertions.assertNotNull(eventDispatcher).dispatchEvent(

TouchEvent.obtain(

mTargetTag,mTargetCoordinates[1]));

}

private void dispatch(MotionEvent ev, TouchEventType type) {

ev.offsetLocation(getAbsoluteLeft(this), getAbsoluteTop(this));

mEventDispatcher.dispatchEvent(

TouchEvent.obtain(

mTargetTag, type, ev, ev.getX(), ev.getY(), mTouchEventCoalescingKeyHelper));

}

private void handleTouchEvent(MotionEvent ev) {

int action = ev.getAction() & MotionEvent.ACTION_MASK;

if (action == MotionEvent.ACTION_DOWN) {

mGestureStartTime = ev.getEventTime();

dispatch(ev,TouchEventType.START);

} else if (mTargetTag == -1) {

// All the subsequent action types are expected to be called after ACTION_DOWN thus target

// is supposed to be set for them.

Log.e(

"error", "Unexpected state: received touch event but didn't get starting ACTION_DOWN for this " +

"gesture before");

} else if (action == MotionEvent.ACTION_UP) {

// End of the gesture. We reset target tag to -1 and expect no further event associated with

// this gesture.

dispatch(ev,TouchEventType.END);

mTargetTag = -1;

mGestureStartTime = TouchEvent.UNSET;

} else if (action == MotionEvent.ACTION_MOVE) {

// Update pointer position for current gesture

dispatch(ev,TouchEventType.MOVE);

} else if (action == MotionEvent.ACTION_POINTER_DOWN) {

// A new pointer goes down; this can only happen after ACTION_DOWN is sent for the first pointer

dispatch(ev,TouchEventType.START);

} else if (action == MotionEvent.ACTION_POINTER_UP) {

// Exactly one of the pointers goes up

dispatch(ev,TouchEventType.END);

} else if (action == MotionEvent.ACTION_CANCEL) {

dispatchCancelEvent(ev);

mTargetTag = -1;

mGestureStartTime = TouchEvent.UNSET;

} else {

Log.w(

"IGNORE", "Warning: touch event was ignored. Action=" + action + " Target=" + mTargetTag);

}

}
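`handleTouchEvent` masks the action with `MotionEvent.ACTION_MASK`, maps it to a `TouchEventType`, and treats `mTargetTag == -1` as "no gesture in progress". A JVM-only sketch of that state machine that can run without Android; the action constants copy the numeric values of Android's `MotionEvent` constants, while `Touch`, `TouchStateMachine`, and the caller-supplied `hitTag` are hypothetical:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical mirror of the TouchEventType values used above.
enum Touch { START, MOVE, END, CANCEL }

final class TouchStateMachine {

    // Same numeric values as android.view.MotionEvent.
    static final int ACTION_MASK = 0xff;
    static final int ACTION_DOWN = 0;
    static final int ACTION_UP = 1;
    static final int ACTION_MOVE = 2;
    static final int ACTION_CANCEL = 3;
    static final int ACTION_POINTER_DOWN = 5;
    static final int ACTION_POINTER_UP = 6;

    private int targetTag = -1; // -1 means "no gesture in progress".
    final List<Touch> dispatched = new ArrayList<>();

    void handle(int rawAction, int hitTag) {
        int action = rawAction & ACTION_MASK; // Strip the pointer-index bits.
        if (action == ACTION_DOWN) {
            targetTag = hitTag; // Hypothetical: the caller supplies the hit-tested view tag.
            dispatched.add(Touch.START);
        } else if (targetTag == -1) {
            // Any non-DOWN action without an active gesture is unexpected; drop it.
        } else if (action == ACTION_UP) {
            dispatched.add(Touch.END);
            targetTag = -1;
        } else if (action == ACTION_MOVE) {
            dispatched.add(Touch.MOVE);
        } else if (action == ACTION_POINTER_DOWN) {
            dispatched.add(Touch.START);
        } else if (action == ACTION_POINTER_UP) {
            dispatched.add(Touch.END);
        } else if (action == ACTION_CANCEL) {
            dispatched.add(Touch.CANCEL);
            targetTag = -1;
        }
    }
}
```

A CANCEL arriving after UP is dropped here by the `targetTag == -1` branch, which is the situation the "Can't cancel already finished gesture" warning in `dispatchCancelEvent` guards against.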

private void dispatchCancelEvent(MotionEvent ev) {

// This means the gesture has already ended, via some other CANCEL or UP event. This is not

// expected to happen very often as it would mean some child View has decided to intercept the

// touch stream and start a native gesture only upon receiving the UP/CANCEL event.

if (mTargetTag == -1) {

Log.w(

"error", "Can't cancel already finished gesture. Is a child View trying to start a gesture from " +

"an UP/CANCEL event?");

return;

}

dispatch(ev,TouchEventType.CANCEL);

}

static Map getBubblingEventTypeConstants() {

return MapBuilder.builder()

.put(

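"topChange", MapBuilder.of(

"phasedRegistrationNames", MapBuilder.of("bubbled", "onChange", "captured", "onChangeCapture")))

.put(

"topSelect", MapBuilder.of(

"phasedRegistrationNames", MapBuilder.of("bubbled", "onSelect", "captured", "onSelectCapture")))

.put(

TouchEventType.START.getJSEventName(), MapBuilder.of(

"phasedRegistrationNames", MapBuilder.of("bubbled", "onTouchStart", "captured", "onTouchStartCapture")))

.put(

TouchEventType.MOVE.getJSEventName(), MapBuilder.of(

"phasedRegistrationNames", MapBuilder.of("bubbled", "onTouchMove", "captured", "onTouchMoveCapture")))

.put(

TouchEventType.END.getJSEventName(), MapBuilder.of(

"phasedRegistrationNames", MapBuilder.of("bubbled", "onTouchEnd", "captured", "onTouchEndCapture")))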

.build();

}
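`getBubblingEventTypeConstants()` maps each native (top-level) event name to the JS prop names used for its bubble and capture phases, nested under a `phasedRegistrationNames` key. A plain-JDK sketch that builds the same nested shape without React Native's `MapBuilder`; the touch event names `topTouchStart`, `topTouchMove` and `topTouchEnd` are an assumption about what `TouchEventType.*.getJSEventName()` returns, and `BubblingEvents` is a hypothetical name:

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical JDK-only version of the nested "phasedRegistrationNames" constants.
final class BubblingEvents {

    private BubblingEvents() {}

    /** phased("onChange") gives {phasedRegistrationNames={bubbled=onChange, captured=onChangeCapture}}. */
    static Map<String, Object> phased(String propName) {
        Map<String, String> names = new LinkedHashMap<>();
        names.put("bubbled", propName);
        names.put("captured", propName + "Capture");
        return Map.of("phasedRegistrationNames", names);
    }

    static Map<String, Object> constants() {
        Map<String, Object> m = new LinkedHashMap<>();
        m.put("topChange", phased("onChange"));
        m.put("topSelect", phased("onSelect"));
        m.put("topTouchStart", phased("onTouchStart")); // Assumed value of TouchEventType.START.getJSEventName()
        m.put("topTouchMove", phased("onTouchMove"));   // Assumed value of TouchEventType.MOVE.getJSEventName()
        m.put("topTouchEnd", phased("onTouchEnd"));     // Assumed value of TouchEventType.END.getJSEventName()
        return m;
    }
}
```

Deriving the capture name from the bubble name (`onX` to `onXCapture`) removes the repetition that makes the hand-written map easy to get wrong.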

That concludes the introduction to IfcEventType. Thank you for reading. To learn more about 2. typescript - type assertions, EventTarget Event; aiohttp.client_exceptions.ContentTypeError: 0:json(content_type='??'); example source code for com.amazonaws.event.ProgressEventType; and example source code for com.facebook.react.uimanager.events.TouchEventType, please search this site.