This article looks in detail at the Spring Batch "BATCH_JOB_INSTANCE table does not exist" error, with attention to spring.batch.job.enabled. It also covers: java – Spring Batch Admin, could not resolve placeholder 'batch.business.schema.script'; java – running a Spring Batch Job programmatically; example source code for org.springframework.batch.core.BatchStatus; and example source code for org.springframework.batch.core.JobInstance.

Contents:

- Spring Batch: BATCH_JOB_INSTANCE table does not exist (spring.batch.job.enabled)

- java – Spring Batch Admin: could not resolve placeholder 'batch.business.schema.script'

- java – Running a Spring Batch Job programmatically?

- Example source code for org.springframework.batch.core.BatchStatus

- Example source code for org.springframework.batch.core.JobInstance

Spring Batch: BATCH_JOB_INSTANCE table does not exist (spring.batch.job.enabled)

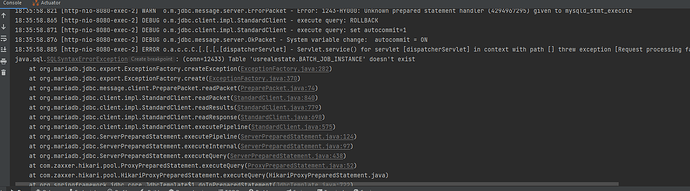

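When running a Spring Batch project, the error shown above is reported:

java.sql.SQLSyntaxErrorException: (conn=12433) Table 'usrealestate.BATCH_JOB_INSTANCE' doesn't exist

Problem and solution

If you work with a Hibernate session directly rather than Spring JPA, this error usually does not appear.

Once a database connection is configured in application.properties, the error above usually does appear.

The reason is that without Spring JPA, Spring Batch starts an embedded H2 database, and Spring creates the metadata tables that Batch needs in that database.

With Spring JPA and your own database, you need Spring Batch to initialize the tables for you.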

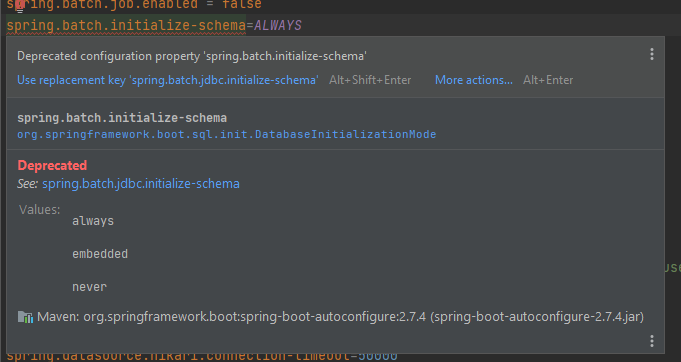

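The fix is to set the following in the project configuration file:

spring.batch.initialize-schema=ALWAYS

However, this property is reported as deprecated.

In Spring Boot 2.7, the property to use is:

spring.batch.jdbc.initialize-schema=ALWAYS

If you use IntelliJ IDEA, the property above is offered by auto-completion.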
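For context, here is a hedged sketch of how these settings might sit together in application.properties. The JDBC URL, user name, and password are placeholders (assumptions), not values taken from the project above; a MariaDB URL is used only for illustration. The `spring.batch.job.enabled=false` line is optional: it stops Spring Boot from launching every Job bean at startup.

```properties
# Placeholder connection settings (assumptions); replace with your own values.
spring.datasource.url=jdbc:mariadb://localhost:3306/usrealestate
spring.datasource.username=user
spring.datasource.password=password

# Create the BATCH_* metadata tables at startup.
spring.batch.jdbc.initialize-schema=always

# Optional: do not run all Job beans automatically when the application starts.
spring.batch.job.enabled=false
```

The initialize-schema property also accepts `embedded` and `never`, so once the tables exist the value can be relaxed if you prefer to manage the schema yourself.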

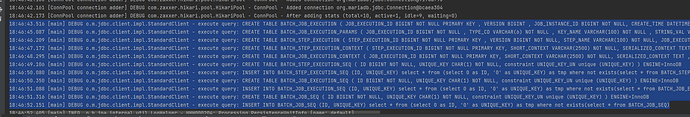

The first time you run the project, the following tables are created in the database.

18:46:43.516 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_JOB_EXECUTION ( JOB_EXECUTION_ID BIGINT NOT NULL PRIMARY KEY , VERSION BIGINT , JOB_INSTANCE_ID BIGINT NOT NULL, CREATE_TIME DATETIME(6) NOT NULL, START_TIME DATETIME(6) DEFAULT NULL , END_TIME DATETIME(6) DEFAULT NULL , STATUS VARCHAR(10) , EXIT_CODE VARCHAR(2500) , EXIT_MESSAGE VARCHAR(2500) , LAST_UPDATED DATETIME(6), JOB_CONFIGURATION_LOCATION VARCHAR(2500) NULL, constraint JOB_INST_EXEC_FK foreign key (JOB_INSTANCE_ID) references BATCH_JOB_INSTANCE(JOB_INSTANCE_ID) ) ENGINE=InnoDB

18:46:45.087 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_JOB_EXECUTION_PARAMS ( JOB_EXECUTION_ID BIGINT NOT NULL , TYPE_CD VARCHAR(6) NOT NULL , KEY_NAME VARCHAR(100) NOT NULL , STRING_VAL VARCHAR(250) , DATE_VAL DATETIME(6) DEFAULT NULL , LONG_VAL BIGINT , DOUBLE_VAL DOUBLE PRECISION , IDENTIFYING CHAR(1) NOT NULL , constraint JOB_EXEC_PARAMS_FK foreign key (JOB_EXECUTION_ID) references BATCH_JOB_EXECUTION(JOB_EXECUTION_ID) ) ENGINE=InnoDB

18:46:46.209 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_STEP_EXECUTION ( STEP_EXECUTION_ID BIGINT NOT NULL PRIMARY KEY , VERSION BIGINT NOT NULL, STEP_NAME VARCHAR(100) NOT NULL, JOB_EXECUTION_ID BIGINT NOT NULL, START_TIME DATETIME(6) NOT NULL , END_TIME DATETIME(6) DEFAULT NULL , STATUS VARCHAR(10) , COMMIT_COUNT BIGINT , READ_COUNT BIGINT , FILTER_COUNT BIGINT , WRITE_COUNT BIGINT , READ_SKIP_COUNT BIGINT , WRITE_SKIP_COUNT BIGINT , PROCESS_SKIP_COUNT BIGINT , ROLLBACK_COUNT BIGINT , EXIT_CODE VARCHAR(2500) , EXIT_MESSAGE VARCHAR(2500) , LAST_UPDATED DATETIME(6), constraint JOB_EXEC_STEP_FK foreign key (JOB_EXECUTION_ID) references BATCH_JOB_EXECUTION(JOB_EXECUTION_ID) ) ENGINE=InnoDB

18:46:47.172 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_STEP_EXECUTION_CONTEXT ( STEP_EXECUTION_ID BIGINT NOT NULL PRIMARY KEY, SHORT_CONTEXT VARCHAR(2500) NOT NULL, SERIALIZED_CONTEXT TEXT , constraint STEP_EXEC_CTX_FK foreign key (STEP_EXECUTION_ID) references BATCH_STEP_EXECUTION(STEP_EXECUTION_ID) ) ENGINE=InnoDB

18:46:48.295 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_JOB_EXECUTION_CONTEXT ( JOB_EXECUTION_ID BIGINT NOT NULL PRIMARY KEY, SHORT_CONTEXT VARCHAR(2500) NOT NULL, SERIALIZED_CONTEXT TEXT , constraint JOB_EXEC_CTX_FK foreign key (JOB_EXECUTION_ID) references BATCH_JOB_EXECUTION(JOB_EXECUTION_ID) ) ENGINE=InnoDB

18:46:49.106 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_STEP_EXECUTION_SEQ ( ID BIGINT NOT NULL, UNIQUE_KEY CHAR(1) NOT NULL, constraint UNIQUE_KEY_UN unique (UNIQUE_KEY) ) ENGINE=InnoDB

18:46:50.080 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: INSERT INTO BATCH_STEP_EXECUTION_SEQ (ID, UNIQUE_KEY) select * from (select 0 as ID, '0' as UNIQUE_KEY) as tmp where not exists(select * from BATCH_STEP_EXECUTION_SEQ)

18:46:50.350 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_JOB_EXECUTION_SEQ ( ID BIGINT NOT NULL, UNIQUE_KEY CHAR(1) NOT NULL, constraint UNIQUE_KEY_UN unique (UNIQUE_KEY) ) ENGINE=InnoDB

18:46:51.088 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: INSERT INTO BATCH_JOB_EXECUTION_SEQ (ID, UNIQUE_KEY) select * from (select 0 as ID, '0' as UNIQUE_KEY) as tmp where not exists(select * from BATCH_JOB_EXECUTION_SEQ)

18:46:51.316 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: CREATE TABLE BATCH_JOB_SEQ ( ID BIGINT NOT NULL, UNIQUE_KEY CHAR(1) NOT NULL, constraint UNIQUE_KEY_UN unique (UNIQUE_KEY) ) ENGINE=InnoDB

18:46:52.151 [main] DEBUG o.m.jdbc.client.impl.StandardClient - execute query: INSERT INTO BATCH_JOB_SEQ (ID, UNIQUE_KEY) select * from (select 0 as ID, '0' as UNIQUE_KEY) as tmp where not exists(select * from BATCH_JOB_SEQ)

The tables above are created according to your current database configuration.

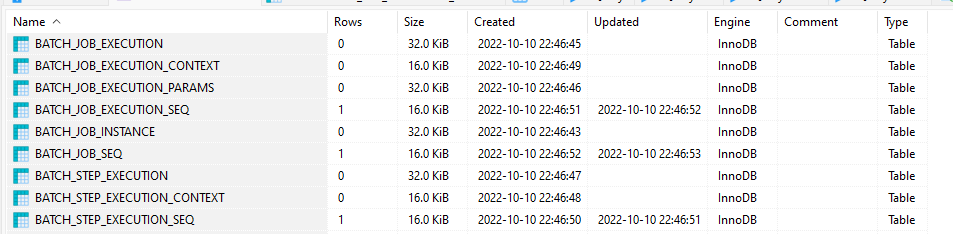

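If you inspect the database, you will see that the tables have been created.

Spring Batch uses the database as intermediate storage for the parameters and job metadata it needs.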

https://www.ossez.com/t/spring-batch-batch-job-instance/14141

java – Spring Batch Admin: could not resolve placeholder 'batch.business.schema.script'

I am trying to add Spring Batch Admin to an existing Spring Batch project.

I have updated web.xml with spring-batch-admin-resources and spring-batch-admin-manager.

My setup:

Under src/main/resources/

I added two properties files. One is batch-default-properties, which is an empty file; the other is batch-sqlserver.properties, with the following content:

batch.jdbc.driver=com.microsoft.sqlserver.jdbc.SQLServerDriver

batch.jdbc.url=jdbc:sqlserver://xxx.xxx.xxx:1433;DatabaseName=SpringBatch

batch.jdbc.user=user

batch.jdbc.password=password

batch.jdbc.testWhileIdle=false

batch.jdbc.validationQuery=

batch.drop.script=/org/springframework/batch/core/schema-drop-sqlserver.sql

batch.schema.script=/org/springframework/batch/core/schema-sqlserver.sql

batch.database.incrementer.class=org.springframework.jdbc.support.incrementer.SqlServerMaxValueIncrementer

batch.lob.handler.class=org.springframework.jdbc.support.lob.DefaultLobHandler

batch.business.schema.script=business-schema-sqlserver.sql

batch.database.incrementer.parent=columnIncrementerParent

batch.grid.size=2

batch.jdbc.pool.size=6

batch.verify.cursor.position=true

batch.isolationlevel=ISOLATION_SERIALIZABLE

batch.table.prefix=BATCH_

batch.data.source.init=false

Under webapp/META-INF/spring/batch/override/, I added data-source-context.xml with this content:

spring-beans.xsd">

diobjectfactorybean">

This runs on JBoss EAP 6.3. Every time I start the server, I get the following exception:

11:58:36,116 WARN [org.springframework.web.context.support.XmlWebApplicationContext] (ServerService Thread Pool -- 112) Exception encountered during context initialization - cancelling refresh attempt: org.springframework.beans.factory.BeanDefinitionStoreException: Invalid bean definition with name 'org.springframework.jdbc.datasource.init.DataSourceInitializer#0' defined in null: Could not resolve placeholder 'batch.business.schema.script' in string value "${batch.business.schema.script}"; nested exception is java.lang.IllegalArgumentException: Could not resolve placeholder 'batch.business.schema.script' in string value "${batch.business.schema.script}"

at org.springframework.beans.factory.config.PlaceholderConfigurerSupport.doProcessProperties(PlaceholderConfigurerSupport.java:211) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.PropertyPlaceholderConfigurer.processProperties(PropertyPlaceholderConfigurer.java:223) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.PropertyResourceConfigurer.postProcessBeanFactory(PropertyResourceConfigurer.java:86) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.context.support.PostProcessorRegistrationDelegate.invokeBeanFactoryPostProcessors(PostProcessorRegistrationDelegate.java:265) [spring-context-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.context.support.PostProcessorRegistrationDelegate.invokeBeanFactoryPostProcessors(PostProcessorRegistrationDelegate.java:162) [spring-context-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.invokeBeanFactoryPostProcessors(AbstractApplicationContext.java:606) [spring-context-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:462) [spring-context-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.configureAndRefreshWebApplicationContext(FrameworkServlet.java:663) [spring-webmvc-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.createWebApplicationContext(FrameworkServlet.java:629) [spring-webmvc-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.createWebApplicationContext(FrameworkServlet.java:677) [spring-webmvc-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.initWebApplicationContext(FrameworkServlet.java:548) [spring-webmvc-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.web.servlet.FrameworkServlet.initServletBean(FrameworkServlet.java:489) [spring-webmvc-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.web.servlet.HttpServletBean.init(HttpServletBean.java:136) [spring-webmvc-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at javax.servlet.GenericServlet.init(GenericServlet.java:242) [jboss-servlet-api_3.0_spec-1.0.2.Final-redhat-1.jar:1.0.2.Final-redhat-1]

at org.apache.catalina.core.StandardWrapper.loadServlet(StandardWrapper.java:1194) [jbossweb-7.4.8.Final-redhat-4.jar:7.4.8.Final-redhat-4]

at org.apache.catalina.core.StandardWrapper.load(StandardWrapper.java:1100) [jbossweb-7.4.8.Final-redhat-4.jar:7.4.8.Final-redhat-4]

at org.apache.catalina.core.StandardContext.loadOnStartup(StandardContext.java:3591) [jbossweb-7.4.8.Final-redhat-4.jar:7.4.8.Final-redhat-4]

at org.apache.catalina.core.StandardContext.start(StandardContext.java:3798) [jbossweb-7.4.8.Final-redhat-4.jar:7.4.8.Final-redhat-4]

at org.jboss.as.web.deployment.WebDeploymentService.doStart(WebDeploymentService.java:161) [jboss-as-web-7.4.0.Final-redhat-19.jar:7.4.0.Final-redhat-19]

at org.jboss.as.web.deployment.WebDeploymentService.access$000(WebDeploymentService.java:59) [jboss-as-web-7.4.0.Final-redhat-19.jar:7.4.0.Final-redhat-19]

at org.jboss.as.web.deployment.WebDeploymentService$1.run(WebDeploymentService.java:94) [jboss-as-web-7.4.0.Final-redhat-19.jar:7.4.0.Final-redhat-19]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [rt.jar:1.8.0_51]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [rt.jar:1.8.0_51]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [rt.jar:1.8.0_51]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [rt.jar:1.8.0_51]

at java.lang.Thread.run(Thread.java:745) [rt.jar:1.8.0_51]

at org.jboss.threads.JBossThread.run(JBossThread.java:122)

Caused by: java.lang.IllegalArgumentException: Could not resolve placeholder 'batch.business.schema.script' in string value "${batch.business.schema.script}"

at org.springframework.util.PropertyPlaceholderHelper.parseStringValue(PropertyPlaceholderHelper.java:174) [spring-core-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.util.PropertyPlaceholderHelper.replacePlaceholders(PropertyPlaceholderHelper.java:126) [spring-core-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.PropertyPlaceholderConfigurer$PlaceholderResolvingStringValueResolver.resolveStringValue(PropertyPlaceholderConfigurer.java:259) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.resolveStringValue(BeanDefinitionVisitor.java:282) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.resolveValue(BeanDefinitionVisitor.java:204) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitIndexedArgumentValues(BeanDefinitionVisitor.java:150) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitBeanDefinition(BeanDefinitionVisitor.java:84) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.resolveValue(BeanDefinitionVisitor.java:169) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitPropertyValues(BeanDefinitionVisitor.java:141) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitBeanDefinition(BeanDefinitionVisitor.java:82) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.resolveValue(BeanDefinitionVisitor.java:169) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitList(BeanDefinitionVisitor.java:228) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.resolveValue(BeanDefinitionVisitor.java:192) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitPropertyValues(BeanDefinitionVisitor.java:141) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitBeanDefinition(BeanDefinitionVisitor.java:82) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.resolveValue(BeanDefinitionVisitor.java:169) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitPropertyValues(BeanDefinitionVisitor.java:141) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.BeanDefinitionVisitor.visitBeanDefinition(BeanDefinitionVisitor.java:82) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

at org.springframework.beans.factory.config.PlaceholderConfigurerSupport.doProcessProperties(PlaceholderConfigurerSupport.java:208) [spring-beans-4.1.3.RELEASE.jar:4.1.3.RELEASE]

... 26 more

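I can see that batch.business.schema.script comes from:

spring-batch-admin-manager/src/main/resources/META-INF/spring/batch/bootstrap/manager/data-source-context.xml,

which also contains "${batch.schema.script}"; that one is loaded from my batch-sqlserver.properties, but batch.business.schema.script is not.

Does anyone know why, or have any suggestions?

Thanks!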

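Best answer

I ran into this problem too. I did not have a separate override data-source-context.xml, but I solved it by starting the server (Tomcat in my case) with a JVM option named ENVIRONMENT:

-DENVIRONMENT=postgresql

In your case, it would be:

-DENVIRONMENT=sqlserver

My guess is that the Spring Batch Admin application uses Spring profiles to select which database type to use, and needs this initial flag to seed the choice.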
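To make the effect of the flag concrete, here is a minimal sketch, assuming the properties file name is derived from the ENVIRONMENT system property with a fallback default. The class `EnvironmentPropertiesName`, the method `propertiesFileFor`, and the `hsql` fallback are hypothetical names chosen for illustration; this is not Spring Batch Admin code.

```java
/** Hypothetical helper for illustration; not part of Spring Batch Admin. */
public class EnvironmentPropertiesName {

    /**
     * Builds an environment-specific properties file name, falling back to
     * a default when the environment value is missing or blank.
     */
    public static String propertiesFileFor(String environment, String fallback) {
        String env = (environment == null || environment.trim().isEmpty())
                ? fallback
                : environment.trim();
        return "batch-" + env + ".properties";
    }

    public static void main(String[] args) {
        // Reads the value passed as -DENVIRONMENT=... on the JVM command line.
        String fromJvm = System.getProperty("ENVIRONMENT");
        // "hsql" is an assumed default, used here only for the sketch.
        System.out.println(propertiesFileFor(fromJvm, "hsql"));
    }
}
```

Under this assumption, starting the JVM without the flag would select a different file than batch-sqlserver.properties, which would be consistent with a placeholder that cannot be resolved.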

For reference, here is David Syer's post about this:

http://forum.spring.io/forum/spring-projects/batch/105054-spring-batch-admin-configuration-for-pointing-the-database?p=562140#post562140

On the override-context approach, he says:

Or you can go with the override XML, as long as you put it in the right location – from the user guide "add your own versions of the same bean definitions to a Spring XML config file in META-INF/spring/batch/override" (that's in the classpath, not the war file META-INF). In that case just override the bean named "dataSource".

So you put the file in the wrong place. You need to move it to a directory on the classpath.
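One way to verify the location is to ask the class loader whether it can see the file. The following is a minimal sketch using only the JDK; the class name `OverrideLocationCheck` and the method `isOnClasspath` are hypothetical, and the resource path is the one quoted from the user guide above.

```java
import java.net.URL;

/** Hypothetical diagnostic helper; not part of Spring Batch Admin. */
public class OverrideLocationCheck {

    /** Returns true when the named resource can be found on the classpath. */
    public static boolean isOnClasspath(String resourcePath) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            // Fall back to the system class loader if no context loader is set.
            loader = ClassLoader.getSystemClassLoader();
        }
        URL url = loader.getResource(resourcePath);
        return url != null;
    }

    public static void main(String[] args) {
        String path = "META-INF/spring/batch/override/data-source-context.xml";
        System.out.println(path + " on classpath: " + isOnClasspath(path));
    }
}
```

In a typical Maven web project, files under src/main/resources end up in WEB-INF/classes and are therefore on the classpath, whereas files under src/main/webapp/META-INF are not.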

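java – Running a Spring Batch Job programmatically?

Is manually wiring up a JobLauncher and running the job through that launcher the only way, or does Spring Batch have a class that supports this (or does anyone know of a sample)?

Solution

Example source code for org.springframework.batch.core.BatchStatus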

@Bean

public Job discreteJob() {

AbstractJob job = new AbstractJob("discreteRegisteredJob") {

@Override

public Collection<String> getStepNames() {

return Collections.emptySet();

}

@Override

public Step getStep(String stepName) {

return null;

}

@Override

protected void doExecute(JobExecution execution)

throws JobExecutionException {

execution.setStatus(BatchStatus.COMPLETED);

}

};

job.setJobRepository(this.jobRepository);

return job;

}

@Bean

public Job discreteJob() {

AbstractJob job = new AbstractJob("discreteLocalJob") {

@Override

public Collection<String> getStepNames() {

return Collections.emptySet();

}

@Override

public Step getStep(String stepName) {

return null;

}

@Override

protected void doExecute(JobExecution execution)

throws JobExecutionException {

execution.setStatus(BatchStatus.COMPLETED);

}

};

job.setJobRepository(this.jobRepository);

return job;

}

@Before

public void setup() throws Exception {

if (!initialized) {

registerApp(task,"timestamp");

initialize();

createJobExecution(JOB_NAME,BatchStatus.STARTED);

documentation.dontDocument(() -> this.mockMvc.perform(

post("/tasks/definitions")

.param("name","DOCJOB_1")

.param("definition","timestamp --format='YYYY MM DD'"))

.andExpect(status().isOk()));

initialized = true;

}

}

@Before

public void setup() throws Exception {

if (!initialized) {

registerApp(task,BatchStatus.STARTED);

createJobExecution(JOB_NAME + "_1",BatchStatus.STOPPED);

documentation.dontDocument(() -> this.mockMvc.perform(

post("/tasks/definitions")

.param("name","timestamp --format='YYYY MM DD'"))

.andExpect(status().isOk()));

initialized = true;

}

}

/**

* @param resultSet Set the result set

* @param rowNumber Set the row number

* @throws SQLException if there is a problem

* @return a job execution instance

*/

public final JobExecution mapRow(final ResultSet resultSet,final int rowNumber) throws SQLException {

JobInstance jobInstance = new JobInstance(resultSet.getBigDecimal(

"JOB_INSTANCE_ID").longValue(),new JobParameters(),resultSet.getString("JOB_NAME"));

JobExecution jobExecution = new JobExecution(jobInstance,resultSet.getBigDecimal("JOB_EXECUTION_ID").longValue());

jobExecution.setStartTime(resultSet.getTimestamp("START_TIME"));

jobExecution.setCreateTime(resultSet.getTimestamp("CREATE_TIME"));

jobExecution.setEndTime(resultSet.getTimestamp("END_TIME"));

jobExecution.setStatus(BatchStatus.valueOf(resultSet

.getString("STATUS")));

ExitStatus exitStatus = new ExitStatus(

resultSet.getString("EXIT_CODE"),resultSet.getString("EXIT_MESSAGE"));

jobExecution.setExitStatus(exitStatus);

return jobExecution;

}

@Override

public List<Resource> listResourcesToHarvest(Integer limit,DateTime now,String fetch) {

Criteria criteria = getSession().createCriteria(type);

criteria.add(Restrictions.isNotNull("resourceType"));

criteria.add(Restrictions.in("status",Arrays.asList(new BatchStatus[] {BatchStatus.COMPLETED,BatchStatus.FAILED,BatchStatus.ABANDONED,BatchStatus.STOPPED})));

criteria.add(Restrictions.eq("scheduled",Boolean.TRUE));

criteria.add(Restrictions.disjunction().add(Restrictions.lt("nextAvailableDate",now)).add(Restrictions.isNull("nextAvailableDate")));

if (limit != null) {

criteria.setMaxResults(limit);

}

enableProfilePreQuery(criteria,fetch);

criteria.addOrder( Property.forName("nextAvailableDate").asc() );

List<Resource> result = (List<Resource>) criteria.list();

for(Resource t : result) {

enableProfilePostQuery(t,fetch);

}

return result;

}

/**

* @param status

* Set the status

* @return true if the job is startable

*/

public static Boolean isStartable(BatchStatus status) {

if (status == null) {

return Boolean.TRUE;

} else {

switch (status) {

case STARTED:

case STARTING:

case STOPPING:

case UNKNOWN:

return Boolean.FALSE;

case COMPLETED:

case FAILED:

case STOPPED:

default:

return Boolean.TRUE;

}

}

}
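The method above can be tried without Spring Batch on the classpath. Below is a minimal, dependency-free sketch of the same decision; the class `StartableCheck` and its nested `Status` enum are hypothetical stand-ins for the real `BatchStatus`, introduced only so the example runs on its own.

```java
/** Hypothetical, dependency-free sketch of the startable check. */
public class StartableCheck {

    /** Local stand-in for BatchStatus (an assumption made for this sketch). */
    public enum Status { COMPLETED, STARTING, STARTED, STOPPING, STOPPED, FAILED, ABANDONED, UNKNOWN }

    /** Running or indeterminate states block a new start; everything else allows it. */
    public static boolean isStartable(Status status) {
        if (status == null) {
            return true; // no previous status recorded
        }
        switch (status) {
            case STARTING:
            case STARTED:
            case STOPPING:
            case UNKNOWN:
                return false;
            default:
                return true;
        }
    }

    public static void main(String[] args) {
        // Print the decision for every status value.
        for (Status s : Status.values()) {
            System.out.println(s + " -> " + isStartable(s));
        }
    }
}
```

Returning a primitive `boolean` instead of `Boolean` is a design choice of the sketch; it avoids accidental null results at call sites.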

@Override

public void notify(JobExecutionException jobExecutionException,String resourceIdentifier) {

if(resourceIdentifier != null) {

Resource resource = service.find(resourceIdentifier,"job-with-source");

resource.setJobId(null);

resource.setDuration(null);

resource.setExitCode("Failed");

resource.setExitDescription(jobExecutionException.getLocalizedMessage());

resource.setJobInstance(null);

resource.setResource(null);

resource.setStartTime(null);

resource.setStatus(BatchStatus.FAILED);

resource.setProcessSkip(0);

resource.setRecordsRead(0);

resource.setReadSkip(0);

resource.setWriteSkip(0);

resource.setWritten(0);

service.saveOrUpdate(resource);

solrIndexingListener.indexObject(resource);

}

}

/**

* Update and retrieve job execution - check attributes have changed as

* expected.

*/

@Transactional

@Test

public void testUpdateExecution() {

execution.setStatus(BatchStatus.STARTED);

jobExecutionDao.saveJobExecution(execution);

execution.setLastUpdated(new Date(0));

execution.setStatus(BatchStatus.COMPLETED);

jobExecutionDao.updateJobExecution(execution);

JobExecution updated = jobExecutionDao.findJobExecutions(jobInstance).get(0);

assertEquals(execution,updated);

assertEquals(BatchStatus.COMPLETED,updated.getStatus());

assertExecutionsAreEqual(execution,updated);

}

/**

* Successful synchronization from STARTED to STOPPING status.

*/

@Transactional

@Test

public void testSynchronizeStatusUpgrade() {

JobExecution exec1 = new JobExecution(jobInstance,jobParameters);

exec1.setStatus(BatchStatus.STOPPING);

jobExecutionDao.saveJobExecution(exec1);

JobExecution exec2 = new JobExecution(jobInstance,jobParameters);

assertTrue(exec1.getId() != null);

exec2.setId(exec1.getId());

exec2.setStatus(BatchStatus.STARTED);

exec2.setVersion(7);

assertTrue(exec1.getVersion() != exec2.getVersion());

assertTrue(exec1.getStatus() != exec2.getStatus());

jobExecutionDao.synchronizeStatus(exec2);

assertEquals(exec1.getVersion(),exec2.getVersion());

assertEquals(exec1.getStatus(),exec2.getStatus());

}

/**

* UNKNOWN status won't be changed by synchronizeStatus,because it is the

* 'largest' BatchStatus (will not downgrade).

*/

@Transactional

@Test

public void testSynchronizeStatusDowngrade() {

JobExecution exec1 = new JobExecution(jobInstance,jobParameters);

exec1.setStatus(BatchStatus.STARTED);

jobExecutionDao.saveJobExecution(exec1);

JobExecution exec2 = new JobExecution(jobInstance,jobParameters);

Assert.state(exec1.getId() != null);

exec2.setId(exec1.getId());

exec2.setStatus(BatchStatus.UNKNOWN);

exec2.setVersion(7);

Assert.state(exec1.getVersion() != exec2.getVersion());

Assert.state(exec1.getStatus().isLessThan(exec2.getStatus()));

jobExecutionDao.synchronizeStatus(exec2);

assertEquals(exec1.getVersion(),exec2.getVersion());

assertEquals(BatchStatus.UNKNOWN,exec2.getStatus());

}
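Both synchronization tests above depend on statuses being ordered, with UNKNOWN as the largest. Here is a minimal sketch of that idea, assuming a plain "keep the larger status" rule based on declaration order. The class `StatusOrdering` and its `Status` enum are hypothetical stand-ins, and the real `BatchStatus` logic may differ in detail.

```java
/** Hypothetical sketch of "never downgrade" status merging. */
public class StatusOrdering {

    /** Local stand-in for BatchStatus; the declaration order is an assumption. */
    public enum Status { COMPLETED, STARTING, STARTED, STOPPING, STOPPED, FAILED, ABANDONED, UNKNOWN }

    /** Returns whichever status ranks higher by declaration order. */
    public static Status max(Status a, Status b) {
        return a.compareTo(b) >= 0 ? a : b;
    }

    public static void main(String[] args) {
        // In-memory STARTED merged with a stored STOPPING moves up to STOPPING.
        System.out.println(max(Status.STARTED, Status.STOPPING));
        // In-memory UNKNOWN merged with a stored STARTED stays UNKNOWN.
        System.out.println(max(Status.UNKNOWN, Status.STARTED));
    }
}
```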

private boolean harvestSuccessful(JobConfiguration job) {

DateTime start = DateTime.now();

while(new Period(start,DateTime.now()).getSeconds() < 20) {

jobConfigurationService.refresh(job);

if(BatchStatus.COMPLETED.equals(job.getJobStatus())) {

logger.info("Successfully completed {}",job.getDescription());

return true;

}

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

return false;

}

@Test

public void testJobInterruptedException() throws Exception {

StepExecution workerStep = new StepExecution("workerStep",new JobExecution(1L),2L);

when(this.environment.containsProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_JOB_EXECUTION_ID)).thenReturn(true);

when(this.environment.containsProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_STEP_EXECUTION_ID)).thenReturn(true);

when(this.environment.containsProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_STEP_NAME)).thenReturn(true);

when(this.environment.getProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_STEP_NAME)).thenReturn("workerStep");

when(this.beanFactory.getBeanNamesForType(Step.class)).thenReturn(new String[] {"workerStep","foo","bar"});

when(this.environment.getProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_STEP_EXECUTION_ID)).thenReturn("2");

when(this.environment.getProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_JOB_EXECUTION_ID)).thenReturn("1");

when(this.jobExplorer.getStepExecution(1L,2L)).thenReturn(workerStep);

when(this.environment.getProperty(DeployerPartitionHandler.SPRING_CLOUD_TASK_STEP_NAME)).thenReturn("workerStep");

when(this.beanFactory.getBean("workerStep",Step.class)).thenReturn(this.step);

doThrow(new JobInterruptedException("expected")).when(this.step).execute(workerStep);

handler.run();

verify(this.jobRepository).update(this.stepExecutionArgumentCaptor.capture());

assertEquals(BatchStatus.STOPPED,this.stepExecutionArgumentCaptor.getValue().getStatus());

}

@Test

public void testRuntimeException() throws Exception {

StepExecution workerStep = new StepExecution("workerStep",Step.class)).thenReturn(this.step);

doThrow(new RuntimeException("expected")).when(this.step).execute(workerStep);

handler.run();

verify(this.jobRepository).update(this.stepExecutionArgumentCaptor.capture());

assertEquals(BatchStatus.FAILED,this.stepExecutionArgumentCaptor.getValue().getStatus());

}

private JobParameters createJobParametersWithIncrementerIfAvailable(String parameters,Job job) throws JobParametersNotFoundException {

JobParameters jobParameters = jobParametersConverter.getJobParameters(PropertiesConverter.stringToProperties(parameters));

// use JobParametersIncrementer to create JobParameters if incrementer is set and only if the job is no restart

if (job.getJobParametersIncrementer() != null){

JobExecution lastJobExecution = jobRepository.getLastJobExecution(job.getName(),jobParameters);

boolean restart = false;

// check if job failed before

if (lastJobExecution != null) {

BatchStatus status = lastJobExecution.getStatus();

if (status.isUnsuccessful() && status != BatchStatus.ABANDONED) {

restart = true;

}

}

// if it's not a restart,create new JobParameters with the incrementer

if (!restart) {

JobParameters nextParameters = getNextJobParameters(job);

Map<String,JobParameter> map = new HashMap<String,JobParameter>(nextParameters.getParameters());

map.putAll(jobParameters.getParameters());

jobParameters = new JobParameters(map);

}

}

return jobParameters;

}

@Test

public void testRunJob() throws InterruptedException {

Long executionId = restTemplate.postForObject("http://localhost:" + port + "/batch/operations/jobs/flatFile2JobXml","",Long.class);

while (!restTemplate.getForObject("http://localhost:" + port + "/batch/operations/jobs/executions/{executionId}",String.class,executionId)

.equals("COMPLETED")) {

Thread.sleep(1000);

}

String log = restTemplate.getForObject("http://localhost:" + port + "/batch/operations/jobs/executions/{executionId}/log",String.class,executionId);

assertThat(log.length() > 20,is(true));

JobExecution jobExecution = jobExplorer.getJobExecution(executionId);

assertThat(jobExecution.getStatus(),is(BatchStatus.COMPLETED));

String jobExecutionString = restTemplate.getForObject("http://localhost:" + port + "/batch/monitoring/jobs/executions/{executionId}",String.class,executionId);

assertThat(jobExecutionString.contains("COMPLETED"),is(true));

}

@Test

public void testRunFlatFiletodbnoSkipJob_Success() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletodbnoSkipJob","metrics/flatFiletodbnoSkipJob_Success.csv");

assertThat(jobExecution.getStatus(),is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 5L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(2L)

.withStreamOpenCount(1L).withStreamUpdateCount(3L).withStreamCloseCount(0L).withBeforeReadCount(6L).withReadCount(6L)

.withAfterReadCount(5L).withReadErrorCount(0L).withBeforeProcessCount(5L).withProcessCount(5L).withAfterProcessCount(5L)

.withProcessErrorCount(0L).withBeforeWriteCount(5L).withWriteCount(writeCount).withAfterWriteCount(5L).withAfterChunkCount(2L)

.withChunkErrorCount(0L).withSkipInReadCount(0L).withSkipInProcessCount(0L).withSkipInWriteCount(0L).build();

validator.validate();

// if one is correct,all will be in the metricReader,so I check just one

assertThat((Double) metricReader.findOne("gauge.batch.flatFiletodbnoSkipJob.step." + MetricNames.PROCESS_COUNT.getName()).getValue(),is(notNullValue())); // TODO

assertThat(jdbcTemplate.queryForObject("SELECT COUNT(*) FROM ITEM",Long.class),is(writeCount));

}

@Test

public void testRunFlatFiletodbnoSkipJob_Failed() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletodbnoSkipJob","metrics/flatFiletodbnoSkipJob_Failed.csv");

assertThat(jobExecution.getStatus(),is(BatchStatus.FAILED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 3L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(1L)

.withStreamOpenCount(1L).withStreamUpdateCount(2L).withStreamCloseCount(0L).withBeforeReadCount(6L).withReadCount(6L)

.withAfterReadCount(5L).withReadErrorCount(0L).withBeforeProcessCount(3L).withProcessCount(3L).withAfterProcessCount(3L)

.withProcessErrorCount(1L).withBeforeWriteCount(3L).withWriteCount(writeCount).withAfterWriteCount(3L).withAfterChunkCount(1L)

.withChunkErrorCount(1L).withSkipInReadCount(0L).withSkipInProcessCount(0L).withSkipInWriteCount(0L).build();

validator.validate();

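// if one is correct,all will be in the metricReader,so I check just one

assertThat((Double) metricReader.findOne("gauge.batch.flatFiletodbnoSkipJob.step." + MetricNames.PROCESS_COUNT.getName()).getValue(),is(notNullValue()));

assertThat(jdbcTemplate.queryForObject("SELECT COUNT(*) FROM ITEM",Long.class),is(writeCount));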

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInProcess() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipJob","metrics/flatFiletoDbSkipJob_SkipInProcess.csv");

assertThat(jobExecution.getStatus(),is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 7L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(3L)

.withStreamOpenCount(1L).withStreamUpdateCount(4L).withStreamCloseCount(0L).withBeforeReadCount(9L).withReadCount(9L)

.withAfterReadCount(8L).withReadErrorCount(0L).withBeforeProcessCount(7L).withProcessCount(7L).withAfterProcessCount(7L)

.withProcessErrorCount(1L).withBeforeWriteCount(7L).withWriteCount(writeCount).withAfterWriteCount(7L).withAfterChunkCount(3L)

.withChunkErrorCount(1L).withSkipInReadCount(0L).withSkipInProcessCount(1L).withSkipInWriteCount(0L).build();

validator.validate();

// if one is correct,all will be in the metricReader,so I check just one

assertThat((Double) metricReader.findOne("gauge.batch.flatFiletoDbSkipJob.step." + MetricNames.PROCESS_COUNT.getName()).getValue(),is(writeCount));

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInProcess_Failed() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipJob","metrics/flatFiletoDbSkipJob_SkipInProcess_Failed.csv");

assertThat(jobExecution.getStatus(),is(BatchStatus.FAILED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 7L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(3L)

.withStreamOpenCount(1L).withStreamUpdateCount(4L).withStreamCloseCount(0L).withBeforeReadCount(12L).withReadCount(12L)

.withAfterReadCount(12L).withReadErrorCount(0L).withBeforeProcessCount(7L).withProcessCount(7L).withAfterProcessCount(7L)

.withProcessErrorCount(5L).withBeforeWriteCount(7L).withWriteCount(writeCount).withAfterWriteCount(7L).withAfterChunkCount(3L)

.withChunkErrorCount(6L).withSkipInReadCount(0L).withSkipInProcessCount(2L).withSkipInWriteCount(0L).build();

validator.validate();

// if one is correct,is(writeCount));

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInWrite() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipJob","metrics/flatFiletoDbSkipJob_SkipInWrite.csv");

assertThat(jobExecution.getStatus(),is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 7L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(4L)

.withStreamOpenCount(1L).withStreamUpdateCount(4L).withStreamCloseCount(0L).withBeforeReadCount(9L).withReadCount(9L)

.withAfterReadCount(8L).withReadErrorCount(0L).withBeforeProcessCount(7L).withProcessCount(7L).withAfterProcessCount(7L)

.withProcessErrorCount(0L).withBeforeWriteCount(5L).withWriteCount(writeCount).withAfterWriteCount(7L).withWriteErrorCount(4L)

.withAfterChunkCount(4L).withChunkErrorCount(2L).withSkipInReadCount(0L).withSkipInProcessCount(0L).withSkipInWriteCount(1L).build();

validator.validate();

// if one is correct,is(writeCount));

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInRead() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipJob","metrics/flatFiletoDbSkipJob_SkipInRead.csv");

assertThat(jobExecution.getStatus(),is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 7L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(3L)

.withStreamOpenCount(1L).withStreamUpdateCount(4L).withStreamCloseCount(0L).withBeforeReadCount(9L).withReadCount(9L)

.withAfterReadCount(7L).withReadErrorCount(1L).withBeforeProcessCount(7L).withProcessCount(7L).withAfterProcessCount(7L)

.withProcessErrorCount(0L).withBeforeWriteCount(7L).withWriteCount(writeCount).withAfterWriteCount(7L).withWriteErrorCount(0L)

.withAfterChunkCount(3L).withChunkErrorCount(0L).withSkipInReadCount(1L).withSkipInProcessCount(0L).withSkipInWriteCount(0L).build();

validator.validate();

// if one is correct,is(writeCount));

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInProcess_ProcessorNonTransactional() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipProcessorNonTransactionalJob",is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 7L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(3L)

.withStreamOpenCount(1L).withStreamUpdateCount(4L).withStreamCloseCount(0L).withBeforeReadCount(9L).withReadCount(9L)

.withAfterReadCount(8L).withReadErrorCount(0L).withBeforeProcessCount(8L).withProcessCount(8L).withAfterProcessCount(7L)

.withProcessErrorCount(1L).withBeforeWriteCount(7L).withWriteCount(writeCount).withAfterWriteCount(7L).withAfterChunkCount(3L)

.withChunkErrorCount(1L).withSkipInReadCount(0L).withSkipInProcessCount(1L).withSkipInWriteCount(0L).build();

validator.validate();

// if one is correct,all will be in the metricReader,so I check just one

assertThat(

(Double) metricReader.findOne(

"gauge.batch.flatFiletoDbSkipProcessorNonTransactionalJob.step." + MetricNames.PROCESS_COUNT.getName()).getValue(),is(writeCount));

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInWrite_ProcessorNonTransactional() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipProcessorNonTransactionalJob",is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 7L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(4L)

.withStreamOpenCount(1L).withStreamUpdateCount(4L).withStreamCloseCount(0L).withBeforeReadCount(9L).withReadCount(9L)

.withAfterReadCount(8L).withReadErrorCount(0L).withBeforeProcessCount(8L).withProcessCount(8L).withAfterProcessCount(8L)

.withProcessErrorCount(0L).withBeforeWriteCount(5L).withWriteCount(writeCount).withAfterWriteCount(7L).withWriteErrorCount(4L)

.withAfterChunkCount(4L).withChunkErrorCount(2L).withSkipInReadCount(0L).withSkipInProcessCount(0L).withSkipInWriteCount(1L).build();

// TODO Bug in beforeWrite listener in Spring Batch?

validator.validate();

// if one is correct,is(writeCount));

}

@Test

public void testRunFlatFiletoDbSkipJob_SkipInProcess_ReaderTransactional() throws InterruptedException {

JobExecution jobExecution = runJob("flatFiletoDbSkipReaderTransactionalJob",is(BatchStatus.COMPLETED));

ExecutionContext executionContext = jobExecution.getStepExecutions().iterator().next().getExecutionContext();

long writeCount = 5L;

MetricValidator validator = MetricValidatorBuilder.metricValidator().withExecutionContext(executionContext).withBeforeChunkCount(2L)

.withStreamOpenCount(1L).withStreamUpdateCount(3L).withStreamCloseCount(0L).withBeforeReadCount(6L).withReadCount(6L)

.withAfterReadCount(5L).withReadErrorCount(0L).withBeforeProcessCount(5L).withProcessCount(5L).withAfterProcessCount(5L)

.withProcessErrorCount(1L).withBeforeWriteCount(5L).withWriteCount(writeCount).withAfterWriteCount(5L).withAfterChunkCount(2L)

.withChunkErrorCount(1L).withSkipInReadCount(0L).withSkipInProcessCount(0L).withSkipInWriteCount(0L).build();

validator.validate();

// if one is correct,all will be in the metricReader,so I check just one

assertThat((Double) metricReader

.findOne("gauge.batch.flatFiletoDbSkipReaderTransactionalJob.step." + MetricNames.PROCESS_COUNT.getName()).getValue(),is(writeCount));

}

@Test

public void testRunJob() throws InterruptedException {

Long executionId = restTemplate.postForObject("http://localhost:" + port + "/batch/operations/jobs/simpleBatchMetricsJob",is(true));

Metric<?> metric = metricReader.findOne("gauge.batch.simpleBatchMetricsJob.simpleBatchMetricsstep.processor");

assertThat(metric,is(notNullValue()));

assertThat((Double) metric.getValue(),is(7.0));

}

@Test

public void testRunJob() throws InterruptedException{

Long executionId = restTemplate.postForObject("http://localhost:"+port+"/batch/operations/jobs/delayJob",Long.class);

Thread.sleep(500);

String runningExecutions = restTemplate.getForObject("http://localhost:"+port+"/batch/monitoring/jobs/runningexecutions",String.class);

assertThat(runningExecutions.contains(executionId.toString()),is(true));

String runningExecutionsForDelayJob = restTemplate.getForObject("http://localhost:"+port+"/batch/monitoring/jobs/runningexecutions/delayJob",String.class);

assertThat(runningExecutionsForDelayJob.contains(executionId.toString()),is(true));

restTemplate.delete("http://localhost:"+port+"/batch/operations/jobs/executions/{executionId}",executionId);

Thread.sleep(1500);

JobExecution jobExecution = jobExplorer.getJobExecution(executionId);

assertThat(jobExecution.getStatus(),is(BatchStatus.STOPPED));

String jobExecutionString = restTemplate.getForObject("http://localhost:"+port+"/batch/monitoring/jobs/executions/{executionId}",executionId);

assertThat(jobExecutionString.contains("STOPPED"),is(true));

}

@Test

public void testXml() throws Exception {

JobExecution exec = jobLauncherTestUtils.launchJob();

Assertions.assertThat(exec.getStatus()).isEqualTo(BatchStatus.COMPLETED);

Resource output = new @R_301_727@(JobXmlMultiFileConfiguration.OUTPUT_FILE + ".1");

String content = IOUtils.toString(output.getInputStream());

assertXpathEvaluatesTo("1000","count(//product)",content);

output = new @R_301_727@(JobXmlMultiFileConfiguration.OUTPUT_FILE + ".2");

content = IOUtils.toString(output.getInputStream());

assertXpathEvaluatesTo("1000","count(//product)",content);

output = new @R_301_727@(JobXmlMultiFileConfiguration.OUTPUT_FILE + ".3");

content = IOUtils.toString(output.getInputStream());

assertXpathEvaluatesTo("1000","count(//product)",content);

output = new @R_301_727@(JobXmlMultiFileConfiguration.OUTPUT_FILE + ".4");

content = IOUtils.toString(output.getInputStream());

assertXpathEvaluatesTo("1000","count(//product)",content);

output = new @R_301_727@(JobXmlMultiFileConfiguration.OUTPUT_FILE + ".5");

content = IOUtils.toString(output.getInputStream());

assertXpathEvaluatesTo("517","count(//product)",content);

}

/**

* The JobOperator stops the Job.

*

* @throws Exception

*/

@Test

public void stopWithJobOperator() throws Exception {

JobExecution jobExecution = jobLauncher.run(jobOperatorJob,new JobParameters());

assertThat(jobExecution.getStatus()).isIn(BatchStatus.STARTING,BatchStatus.STARTED);

Set<Long> runningExecutions = jobOperator.getRunningExecutions(jobOperatorJob.getName());

assertThat(runningExecutions.size()).isEqualTo(1);

Long executionId = runningExecutions.iterator().next();

boolean stopMessageSent = jobOperator.stop(executionId);

assertThat(stopMessageSent).isTrue();

waitForTermination(jobOperatorJob);

runningExecutions = jobOperator.getRunningExecutions(jobOperatorJob.getName());

assertThat(runningExecutions.size()).isEqualTo(0);

}

@Test

public void sunnyDay() throws Exception {

int read = 12;

configureServiceForRead(service,read);

JobExecution exec = jobLauncher.run(

job,new JobParametersBuilder().addLong("time",System.currentTimeMillis())

.toJobParameters());

assertThat(exec.getStatus()).isEqualTo(BatchStatus.COMPLETED);

assertRead(read,exec);

assertWrite(read,exec);

assertReadSkip(0,exec);

assertProcessSkip(0,exec);

assertWriteSkip(0,exec);

assertCommit(3,exec);

assertRollback(0,exec);

}

@Router

public List<String> routeJobExecution(JobExecution jobExecution) {

final List<String> routeToChannels = new ArrayList<String>();

if (jobExecution.getStatus().equals(BatchStatus.FAILED)) {

routeToChannels.add("jobRestarts");

}

else {

if (jobExecution.getStatus().equals(BatchStatus.COMPLETED)) {

routeToChannels.add("completeApplication");

}

routeToChannels.add("notifiableExecutions");

}

return routeToChannels;

}
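
The routing rule in the `@Router` method above can be tried without a Spring context. The following is only a minimal sketch: `RoutingSketch` and its local `Status` enum are hypothetical stand-ins for the router class and for `BatchStatus`; only the channel names come from the snippet above.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the router above; Status mimics a few BatchStatus values.
final class RoutingSketch {

    enum Status { COMPLETED, FAILED, STOPPED }

    // Returns the channel names an execution with the given status is routed to.
    static List<String> route(Status status) {
        List<String> channels = new ArrayList<>();
        if (status == Status.FAILED) {
            // Failed executions go only to the restart channel.
            channels.add("jobRestarts");
        } else {
            if (status == Status.COMPLETED) {
                channels.add("completeApplication");
            }
            // Every non-failed execution is also sent for notification.
            channels.add("notifiableExecutions");
        }
        return channels;
    }

    public static void main(String[] args) {
        for (Status s : Status.values()) {
            System.out.println(s + " -> " + route(s));
        }
    }
}
```

Note that a failed execution is never sent to `notifiableExecutions` under this rule, while a stopped one is.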

@Override

public void afterJob(JobExecution jobExecution) {

if(jobExecution.getStatus() == BatchStatus.COMPLETED) {

logger.info("!!! JOB FINISHED! LAST POSTID IMPORTED: " +

jobExecution.getExecutionContext().get("postId") );

}

}

Example source code for org.springframework.batch.core.JobInstance

@Disabled

@Test

public void testGetJobs() throws Exception {

Set<String> jobNames = new HashSet<>();

jobNames.add("job1");

jobNames.add("job2");

jobNames.add("job3");

Long job1Id = 1L;

Long job2Id = 2L;

List<Long> jobExecutions = new ArrayList<>();

jobExecutions.add(job1Id);

JobInstance jobInstance = new JobInstance(job1Id,"job1");

expect(jobOperator.getJobNames()).andReturn(jobNames).anyTimes();

expect(jobOperator.getJobInstances(eq("job1"),eq(0),eq(1))).andReturn(jobExecutions);

expect(jobExplorer.getJobInstance(eq(job1Id))).andReturn(jobInstance);

// expect(jobOperator.getJobInstances(eq("job2"),eq(1))).andReturn(null);

replayAll();

assertThat(service.getJobs(),nullValue());

}

private static void createSampleJob(String jobName,int jobExecutionCount) {

JobInstance instance = jobRepository.createJobInstance(jobName,new JobParameters());

jobInstances.add(instance);

TaskExecution taskExecution = dao.createTaskExecution(jobName,new Date(),new ArrayList<String>(),null);

Map<String,JobParameter> jobParameterMap = new HashMap<>();

jobParameterMap.put("foo",new JobParameter("FOO",true));

jobParameterMap.put("bar",new JobParameter("BAR",false));

JobParameters jobParameters = new JobParameters(jobParameterMap);

JobExecution jobExecution = null;

for (int i = 0; i < jobExecutionCount; i++) {

jobExecution = jobRepository.createJobExecution(instance,jobParameters,null);

taskBatchDao.saveRelationship(taskExecution,jobExecution);

StepExecution stepExecution = new StepExecution("foobar",jobExecution);

jobRepository.add(stepExecution);

}

}

private void assertCorrectMixins(RestTemplate restTemplate) {

boolean containsMappingJackson2HttpMessageConverter = false;

for (HttpMessageConverter<?> converter : restTemplate.getMessageConverters()) {

if (converter instanceof MappingJackson2HttpMessageConverter) {

containsMappingJackson2HttpMessageConverter = true;

final MappingJackson2HttpMessageConverter jacksonConverter = (MappingJackson2HttpMessageConverter) converter;

final ObjectMapper objectMapper = jacksonConverter.getObjectMapper();

assertNotNull(objectMapper.findMixInClassFor(JobExecution.class));

assertNotNull(objectMapper.findMixInClassFor(JobParameters.class));

assertNotNull(objectMapper.findMixInClassFor(JobParameter.class));

assertNotNull(objectMapper.findMixInClassFor(JobInstance.class));

assertNotNull(objectMapper.findMixInClassFor(ExitStatus.class));

assertNotNull(objectMapper.findMixInClassFor(StepExecution.class));

assertNotNull(objectMapper.findMixInClassFor(ExecutionContext.class));

assertNotNull(objectMapper.findMixInClassFor(StepExecutionHistory.class));

}

}

if (!containsMappingJackson2HttpMessageConverter) {

fail("Expected that the restTemplate's list of Message Converters contained a "

+ "MappingJackson2HttpMessageConverter");

}

}

/**

* @param resultSet Set the result set

* @param rowNumber Set the row number

* @throws SQLException if there is a problem

* @return a job execution instance

*/

public final JobExecution mapRow(final ResultSet resultSet,final int rowNumber) throws SQLException {

JobInstance jobInstance = new JobInstance(resultSet.getBigDecimal(

"JOB_INSTANCE_ID").longValue(),new JobParameters(),resultSet.getString("JOB_NAME"));

JobExecution jobExecution = new JobExecution(jobInstance,resultSet.getBigDecimal("JOB_EXECUTION_ID").longValue());

jobExecution.setStartTime(resultSet.getTimestamp("START_TIME"));

jobExecution.setCreateTime(resultSet.getTimestamp("CREATE_TIME"));

jobExecution.setEndTime(resultSet.getTimestamp("END_TIME"));

jobExecution.setStatus(BatchStatus.valueOf(resultSet

.getString("STATUS")));

ExitStatus exitStatus = new ExitStatus(

resultSet.getString("EXIT_CODE"),resultSet.getString("EXIT_MESSAGE"));

jobExecution.setExitStatus(exitStatus);

return jobExecution;

}

@Override

public List<JobInstance> list(Integer page,Integer size) {

HttpEntity<JobInstance> requestEntity = new HttpEntity<JobInstance>(httpHeaders);

Map<String,Object> uriVariables = new HashMap<String,Object>();

uriVariables.put("resource",resourceDir);

if(size == null) {

uriVariables.put("limit","");

} else {

uriVariables.put("limit",size);

}

if(page == null) {

uriVariables.put("start","");

} else {

uriVariables.put("start",page);

}

ParameterizedTypeReference<List<JobInstance>> typeRef = new ParameterizedTypeReference<List<JobInstance>>() {};

HttpEntity<List<JobInstance>> responseEntity = restTemplate.exchange(baseUri + "/{resource}?limit={limit}&start={start}",HttpMethod.GET,requestEntity,typeRef,uriVariables);

return responseEntity.getBody();

}

/**

* @param jobInstance

* the job instance to save

* @return A response entity containing a newly created job instance

*/

@RequestMapping(value = "/jobInstance",method = RequestMethod.POST)

public final ResponseEntity<JobInstance> create(@RequestBody final JobInstance jobInstance) {

HttpHeaders httpHeaders = new HttpHeaders();

try {

httpHeaders.setLocation(new URI(baseUrl + "/jobInstance/"

+ jobInstance.getId()));

} catch (URISyntaxException e) {

logger.error(e.getMessage());

}

service.save(jobInstance);

ResponseEntity<JobInstance> response = new ResponseEntity<JobInstance>(

jobInstance,httpHeaders,HttpStatus.CREATED);

return response;

}

/**

* @param jobId

* Set the job id

* @param jobName

* Set the job name

* @param authorityName

* Set the authority name

* @param version

* Set the version

*/

public void createJobInstance(String jobId,String jobName,String authorityName,String version) {

enableAuthentication();

Long id = null;

if (jobId != null && jobId.length() > 0) {

id = Long.parseLong(jobId);

}

Integer v = null;

if (version != null && version.length() > 0) {

v = Integer.parseInt(version);

}

Map<String,JobParameter> jobParameterMap = new HashMap<String,JobParameter>();

if (authorityName != null && authorityName.length() > 0) {

jobParameterMap.put("authority.name",new JobParameter(

authorityName));

}

JobParameters jobParameters = new JobParameters(jobParameterMap);

JobInstance jobInstance = new JobInstance(id,jobName);

jobInstance.setVersion(v);

data.push(jobInstance);

jobInstanceService.save(jobInstance);

disableAuthentication();

}

@Override

public final void setupModule(final SetupContext setupContext) {

SimpleKeyDeserializers keyDeserializers = new SimpleKeyDeserializers();

keyDeserializers.addDeserializer(Location.class,new GeographicalRegionKeyDeserializer());

setupContext.addKeyDeserializers(keyDeserializers);

SimpleSerializers simpleSerializers = new SimpleSerializers();

simpleSerializers.addSerializer(new JobInstanceSerializer());

simpleSerializers.addSerializer(new JobExecutionSerializer());

setupContext.addSerializers(simpleSerializers);

SimpleDeserializers simpleDeserializers = new SimpleDeserializers();

simpleDeserializers.addDeserializer(JobInstance.class,new JobInstanceDeserializer());

simpleDeserializers.addDeserializer(JobExecution.class,new JobExecutionDeserializer(jobInstanceService));

simpleDeserializers.addDeserializer(JobExecutionException.class,new JobExecutionExceptionDeserializer());

setupContext.addDeserializers(simpleDeserializers);

}

/**

*

* @throws Exception

* if there is a problem serializing the object

*/

@Test

public void testWriteJobInstance() throws Exception {

Map<String,JobParameter> jobParameterMap

= new HashMap<String,JobParameter>();

jobParameterMap.put("authority.name",new JobParameter("test"));

JobInstance jobInstance = new JobInstance(1L,new JobParameters(

jobParameterMap),"testJob");

jobInstance.setVersion(1);

try {

objectMapper.writeValueAsString(jobInstance);

} catch (Exception e) {

fail("No exception expected here");

}

}

@Override

public StepExecution unmarshal(AdaptedStepExecution v) throws Exception {

JobExecution je = new JobExecution(v.getJobExecutionId());

JobInstance ji = new JobInstance(v.getJobInstanceId(),v.getJobName());

je.setJobInstance(ji);

StepExecution step = new StepExecution(v.getStepName(),je);

step.setId(v.getId());

step.setStartTime(v.getStartTime());

step.setEndTime(v.getEndTime());

step.setReadSkipCount(v.getReadSkipCount());

step.setWriteSkipCount(v.getWriteSkipCount());

step.setProcessSkipCount(v.getProcessSkipCount());

step.setReadCount(v.getReadCount());

step.setWriteCount(v.getWriteCount());

step.setFilterCount(v.getFilterCount());

step.setRollbackCount(v.getRollbackCount());

step.setExitStatus(new ExitStatus(v.getExitCode()));

step.setLastUpdated(v.getLastUpdated());

step.setVersion(v.getVersion());

step.setStatus(v.getStatus());

step.setExecutionContext(v.getExecutionContext());

return step;

}

@Test

public void marshallStepExecutionTest() throws Exception {

JobInstance jobInstance = new JobInstance(1234L,"test");

JobExecution jobExecution = new JobExecution(123L);

jobExecution.setJobInstance(jobInstance);

StepExecution step = new StepExecution("testStep",jobExecution);

step.setLastUpdated(new Date(System.currentTimeMillis()));

StepExecutionAdapter adapter = new StepExecutionAdapter();

AdaptedStepExecution adStep = adapter.marshal(step);

jaxb2Marshaller.marshal(adStep,result);

Fragment frag = new Fragment(new DOMBuilder().build(doc));

frag.setNamespaces(getNamespaceProvider().getNamespaces());

frag.prettyPrint();

frag.assertElementExists("/msb:stepExecution");

frag.assertElementExists("/msb:stepExecution/msb:lastUpdated");

frag.assertElementValue("/msb:stepExecution/msb:stepName","testStep");

}

/**

* Create and retrieve a job instance.

*/

@Transactional

@Test

public void testGetLastInstances() throws Exception {

testCreateAndRetrieve();

// unrelated job instance that should be ignored by the query

jobInstanceDao.createJobInstance("anotherJob",new JobParameters());

// we need two instances of the same job to check ordering

jobInstanceDao.createJobInstance(fooJob,new JobParameters());

List<JobInstance> jobInstances = jobInstanceDao.getJobInstances(fooJob,0,2);

assertEquals(2,jobInstances.size());

assertEquals(fooJob,jobInstances.get(0).getJobName());

assertEquals(fooJob,jobInstances.get(1).getJobName());

assertEquals(Integer.valueOf(0),jobInstances.get(0).getVersion());

assertEquals(Integer.valueOf(0),jobInstances.get(1).getVersion());

//assertTrue("Last instance should be first on the list",jobInstances.get(0).getCreateDateTime() > jobInstances.get(1)

// .getId());

}

/**

* Create and retrieve a job instance.

*/

@Transactional

@Test

public void testGetLastInstancesPastEnd() throws Exception {

testCreateAndRetrieve();

// unrelated job instance that should be ignored by the query

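jobInstanceDao.createJobInstance("anotherJob",new JobParameters());

// we need two instances of the same job to check ordering

jobInstanceDao.createJobInstance(fooJob,new JobParameters());

List<JobInstance> jobInstances = jobInstanceDao.getJobInstances(fooJob,4,2);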

assertEquals(0,jobInstances.size());

}

@Before

public void onSetUp() throws Exception {

jobParameters = new JobParameters();

jobInstance = new JobInstance(12345L,"execJob");

execution = new JobExecution(jobInstance,new JobParameters());

execution.setStartTime(new Date(System.currentTimeMillis()));

execution.setLastUpdated(new Date(System.currentTimeMillis()));

execution.setEndTime(new Date(System.currentTimeMillis()));

jobExecutionDao = new MarkLogicJobExecutionDao(getClient(),getBatchProperties());

}

@SuppressWarnings("unchecked")

protected void executeInternal(JobExecutionContext context) {

Map<String,Object> jobDataMap = context.getMergedJobDataMap();

String jobName = (String) jobDataMap.get(JOB_NAME);

LOGGER.info("Quartz trigger firing with Spring Batch jobName=" + jobName);

try {

Job job = jobLocator.getJob(jobName);

JobParameters previousJobParameters = null;

List<JobInstance> jobInstances = jobExplorer.getJobInstances(jobName,0,1);

if ((jobInstances != null) && (jobInstances.size() > 0)) {

previousJobParameters = jobInstances.get(0).getJobParameters();

}

JobParameters jobParameters = getJobParametersFromJobMap(jobDataMap,previousJobParameters);

if (job.getJobParametersIncrementer() != null) {

jobParameters = job.getJobParametersIncrementer().getNext(jobParameters);

}

jobLauncher.run(jobLocator.getJob(jobName),jobParameters);

} catch (JobExecutionException e) {

LOGGER.error("Could not execute job.",e);

}

}

@Test

public void startWithCustomStringParametersWithPreviousParameters() throws Exception {

final JobInstance previousInstance = mock(JobInstance.class);

when(jobExplorer.getJobInstances(JOB_NAME,0,1)).thenReturn(Arrays.asList(previousInstance));

final JobParameters previousParams = new JobParameters();

final JobExecution previousExecution = mock(JobExecution.class);

when(previousExecution.getJobParameters()).thenReturn(previousParams);

when(jobExplorer.getJobExecutions(previousInstance)).thenReturn(Arrays.asList(previousExecution));

final JobParameters incremented = new JobParametersBuilder(params).addString("test","test").toJobParameters();

when(jobParametersIncrementer.getNext(previousParams)).thenReturn(incremented);

final JobParameters expected = new JobParametersBuilder(incremented).addString("foo","bar").addLong("answer",42L,false)

.toJobParameters();

when(jobLauncher.run(job,expected)).thenReturn(execution);

final JobParameters parameters = new JobParametersBuilder().addString("foo","bar").addLong("answer",42L,false).toJobParameters();

final long executionId = batchOperator.start(JOB_NAME,parameters);

assertThat("job execution id",executionId,is(1L));

}

public List<JobResult> getJobResults() {

List<JobInstance> jobInstancesByJobName = jobExplorer.findJobInstancesByJobName(AbstractEmployeeJobConfig.EMPLOYEE_JOB,0,Integer.MAX_VALUE);

DateTime currentTime = new DateTime();

List<JobStartParams> months = getJobStartParamsPreviousMonths(currentTime.getYear(),currentTime.getMonthOfYear());

final Map<JobStartParams,JobResult> jobResultMap = getMapOfJobResultsForJobInstances(jobInstancesByJobName);

List<JobResult> collect = months

.stream()

.map(mapJobStartParamsToJobResult(jobResultMap))

.sorted((comparing(onYear).thenComparing(comparing(onMonth))).reversed())

.collect(Collectors.toList());

return collect;

}

@Test

public void testGetFinishedJobResults_SameDates_SortingIsDescOnDate() throws Exception {

//ARRANGE

JobInstance jobInstance1 = new JobInstance(1L,EmployeeJobConfigSingleJvm.EMPLOYEE_JOB);

when(jobExplorer.findJobInstancesByJobName(EmployeeJobConfigSingleJvm.EMPLOYEE_JOB,0,MAX_VALUE))

.thenReturn(asList(jobInstance1));

DateTime dateTime = new DateTime();

JobExecution jobInstance1_jobExecution1 = new JobExecution(jobInstance1,1L,createJobParameters(dateTime.getYear(),dateTime.getMonthOfYear()),null);

jobInstance1_jobExecution1.setEndTime(getDateOfDay(3));

JobExecution jobInstance1_jobExecution2 = new JobExecution(jobInstance1,2L,createJobParameters(dateTime.getYear(),dateTime.getMonthOfYear()),null);

jobInstance1_jobExecution2.setEndTime(getDateOfDay(4));

when(jobExplorer.getJobExecutions(jobInstance1)).thenReturn(asList(jobInstance1_jobExecution1,jobInstance1_jobExecution2));

//ACT

List<JobResult> jobResults = jobResultsService.getJobResults();

assertThat(jobResults.get(0).getJobExecutionResults().get(0).getEndTime()).isAfter(jobResults.get(0).getJobExecutionResults().get(1).getEndTime());

}

/**

* Borrowed from CommandLineJobRunner.

* @param job the job that we need to find the next parameters for

* @return the next job parameters if they can be located

* @throws JobParametersNotFoundException if there is a problem

*/

private JobParameters getNextJobParameters(Job job) throws JobParametersNotFoundException {

String jobIdentifier = job.getName();

JobParameters jobParameters;

List<JobInstance> lastInstances = jobExplorer.getJobInstances(jobIdentifier,0,1);

JobParametersIncrementer incrementer = job.getJobParametersIncrementer();

if (lastInstances.isEmpty()) {

jobParameters = incrementer.getNext(new JobParameters());

if (jobParameters == null) {

throw new JobParametersNotFoundException("No bootstrap parameters found from incrementer for job="

+ jobIdentifier);

}

}

else {

List<JobExecution> lastExecutions = jobExplorer.getJobExecutions(lastInstances.get(0));

jobParameters = incrementer.getNext(lastExecutions.get(0).getJobParameters());

}

return jobParameters;

}
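
The lookup-then-increment pattern of `getNextJobParameters` above can be illustrated without Spring Batch on the classpath. This is only a sketch under stated assumptions: `NextParametersSketch`, the `Map<String, Long>` parameter model and the `run.id` counter are hypothetical stand-ins for `JobParameters` and `JobParametersIncrementer`, and unlike the method above it also rejects a missing incrementer.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical stand-in: parameters are a plain map, the incrementer a function.
final class NextParametersSketch {

    // Assumed incrementer: bumps a "run.id" counter, starting at 1 when absent.
    static final UnaryOperator<Map<String, Long>> RUN_ID_INCREMENTER = previous -> {
        Map<String, Long> next = new HashMap<>(previous);
        next.merge("run.id", 1L, Long::sum);
        return next;
    };

    // lastParameters holds the parameters of the most recent instance, if any.
    static Map<String, Long> nextParameters(List<Map<String, Long>> lastParameters,
                                            UnaryOperator<Map<String, Long>> incrementer) {
        if (incrementer == null) {
            throw new IllegalStateException("No incrementer configured");
        }
        if (lastParameters.isEmpty()) {
            // No previous instance: bootstrap from empty parameters.
            return incrementer.apply(new HashMap<>());
        }
        // Otherwise derive the next parameters from the latest ones.
        return incrementer.apply(lastParameters.get(0));
    }

    public static void main(String[] args) {
        System.out.println(nextParameters(List.of(), RUN_ID_INCREMENTER));
        System.out.println(nextParameters(List.of(Map.of("run.id", 4L)), RUN_ID_INCREMENTER));
    }
}
```

The first call bootstraps the counter, the second continues from the last recorded value.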

@Test

public void createProtocol() throws Exception {

// Given

JobExecution jobExecution = new JobExecution(1L,new JobParametersBuilder().addString("test","value").toJobParameters());

jobExecution.setJobInstance(new JobInstance(1L,"test-job"));

jobExecution.setCreateTime(new Date());

jobExecution.setStartTime(new Date());

jobExecution.setEndTime(new Date());

jobExecution.setExitStatus(new ExitStatus("COMPLETED_WITH_ERRORS","This is a default exit message"));

jobExecution.getExecutionContext().put("jobCounter",1);

StepExecution stepExecution = jobExecution.createStepExecution("test-step-1");

stepExecution.getExecutionContext().put("stepCounter",1);

ProtocolListener protocolListener = new ProtocolListener();

// When

protocolListener.afterJob(jobExecution);

// Then

String output = this.outputCapture.toString();

assertThat(output,containsString("Protocol for test-job"));

assertThat(output,containsString("COMPLETED_WITH_ERRORS"));

}

/**

* Creates the JobParameters for the next run of the Batch Job.

*

* @param job

* @return JobParameters

* @throws JobParametersNotFoundException

*/

private JobParameters getNextJobParameters(Job job) throws JobParametersNotFoundException {

String jobIdentifier = job.getName();

JobParameters jobParameters;

List<JobInstance> lastInstances = jobExplorer.getJobInstances(jobIdentifier,0,1);

JobParametersIncrementer incrementer = job.getJobParametersIncrementer();

if (incrementer == null) {

throw new JobParametersNotFoundException("No job parameters incrementer found for job=" + jobIdentifier);

}

if (lastInstances.isEmpty()) {

jobParameters = incrementer.getNext(new JobParameters());

if (jobParameters == null) {

throw new JobParametersNotFoundException("No bootstrap parameters found from incrementer for job="

+ jobIdentifier);

}

}

else {

jobParameters = incrementer.getNext(lastInstances.get(0).getJobParameters());

}

return jobParameters;

}

private Collection<StepExecution> getStepExecutions() {

JobExplorer jobExplorer = this.applicationContext.getBean(JobExplorer.class);

List<JobInstance> jobInstances = jobExplorer.findJobInstancesByJobName("job",0,1);

assertEquals(1,jobInstances.size());

JobInstance jobInstance = jobInstances.get(0);

List<JobExecution> jobExecutions = jobExplorer.getJobExecutions(jobInstance);

assertEquals(1,jobExecutions.size());

JobExecution jobExecution = jobExecutions.get(0);

return jobExecution.getStepExecutions();

}

private JobParameters getNextJobParameters(Job job,JobParameters additionalParameters) {

String name = job.getName();

JobParameters parameters = new JobParameters();

List<JobInstance> lastInstances = this.jobExplorer.getJobInstances(name,0,1);

JobParametersIncrementer incrementer = job.getJobParametersIncrementer();

Map<String,JobParameter> additionals = additionalParameters.getParameters();

if (lastInstances.isEmpty()) {

// Start from a completely clean sheet

if (incrementer != null) {

parameters = incrementer.getNext(new JobParameters());

}

}

else {

List<JobExecution> previousExecutions = this.jobExplorer

.getJobExecutions(lastInstances.get(0));

JobExecution previousExecution = previousExecutions.get(0);

if (previousExecution == null) {

// normally this will not happen - an instance exists with no executions

if (incrementer != null) {

parameters = incrementer.getNext(new JobParameters());

}

}

else if (isStoppedOrFailed(previousExecution) && job.isRestartable()) {

// Retry a failed or stopped execution

parameters = previousExecution.getJobParameters();

// Non-identifying additional parameters can be removed to a retry

removeNonIdentifying(additionals);

}

else if (incrementer != null) {

// New instance so increment the parameters if we can

parameters = incrementer.getNext(previousExecution.getJobParameters());

}

}

return merge(parameters,additionals);

}
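The `merge(parameters,additionals)` helper called at the end of the method above is not shown in this excerpt. The following is a minimal sketch of what such a merge might look like, assuming the additional parameters simply override computed ones that share a key. It uses plain `Map<String, String>` values instead of Spring Batch's `JobParameters` so that it runs without any dependencies; the class name `MergeSketch` and the sample keys are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class MergeSketch {

    // Hypothetical stand-in for merge(parameters, additionals):
    // entries in 'additionals' replace entries with the same key in 'parameters'.
    static Map<String, String> merge(Map<String, String> parameters, Map<String, String> additionals) {
        Map<String, String> merged = new LinkedHashMap<>(parameters);
        merged.putAll(additionals);
        return merged;
    }

    public static void main(String[] args) {
        // Parameters as they might look after the incrementer has run (assumed values).
        Map<String, String> incremented = new LinkedHashMap<>();
        incremented.put("run.id", "2");
        incremented.put("input", "old.csv");

        // Extra parameters supplied by the caller (assumed values).
        Map<String, String> additionals = new LinkedHashMap<>();
        additionals.put("input", "new.csv");

        // Prints {run.id=2, input=new.csv}
        System.out.println(merge(incremented, additionals));
    }
}
```

Copying into a new map leaves both inputs untouched, which matters when the previous execution's parameters are reused for a restart.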

public JobExecutionResource(TaskJobExecution taskJobExecution,TimeZone timeZone) {

Assert.notNull(taskJobExecution,"taskJobExecution must not be null");

this.taskExecutionId = taskJobExecution.getTaskId();

this.jobExecution = taskJobExecution.getJobExecution();

this.timeZone = timeZone;

this.executionId = jobExecution.getId();

this.jobId = jobExecution.getJobId();

this.stepExecutionCount = jobExecution.getStepExecutions().size();

this.jobParameters = converter.getProperties(jobExecution.getJobParameters());

this.jobParametersString = fromJobParameters(jobExecution.getJobParameters());

this.defined = taskJobExecution.isTaskDefined();

JobInstance jobInstance = jobExecution.getJobInstance();

if (jobInstance != null) {

this.name = jobInstance.getJobName();

this.restartable = JobUtils.isJobExecutionRestartable(jobExecution);

this.abandonable = JobUtils.isJobExecutionAbandonable(jobExecution);

this.stoppable = JobUtils.isJobExecutionStoppable(jobExecution);

}

else {

this.name = "?";

}

// Duration is always in GMT

durationFormat.setTimeZone(TimeUtils.getDefaultTimeZone());

// The others can be localized

timeFormat.setTimeZone(timeZone);

dateFormat.setTimeZone(timeZone);

if (jobExecution.getStartTime() != null) {

this.startDate = dateFormat.format(jobExecution.getStartTime());

this.startTime = timeFormat.format(jobExecution.getStartTime());

Date endTime = jobExecution.getEndTime() != null ? jobExecution.getEndTime() : new Date();

this.duration = durationFormat.format(new Date(endTime.getTime() - jobExecution.getStartTime().getTime()));

}

}
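The constructor above formats the duration by wrapping the elapsed milliseconds in a `Date` and printing it with a formatter fixed to GMT. The sketch below illustrates why the time zone has to be pinned: the elapsed time is treated as an offset from the epoch, so any local offset would shift the result. The pattern `HH:mm:ss` and the class name `DurationSketch` are assumptions for illustration; the original obtains its formatter from `TimeUtils`, which is not shown here.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

public class DurationSketch {

    // Formats (end - start) as HH:mm:ss by treating the difference as an
    // instant relative to the epoch. Only meaningful for durations under 24 hours.
    static String formatDuration(long startMillis, long endMillis) {
        SimpleDateFormat durationFormat = new SimpleDateFormat("HH:mm:ss"); // assumed pattern
        // Pin to GMT so the local zone offset is not added to the elapsed time.
        durationFormat.setTimeZone(TimeZone.getTimeZone("GMT"));
        return durationFormat.format(new Date(endMillis - startMillis));
    }

    public static void main(String[] args) {
        long start = 1_000_000L;
        long end = start + 3_661_000L; // 1 h 1 min 1 s later
        // Prints 01:01:01
        System.out.println(formatDuration(start, end));
    }
}
```

For durations that can exceed a day, `java.time.Duration` is a safer choice than this date-based trick.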

public JobInstanceExecutions(JobInstance jobInstance,List<TaskJobExecution> taskJobExecutions) {

Assert.notNull(jobInstance,"jobInstance must not be null");

this.jobInstance = jobInstance;

if (taskJobExecutions == null) {

this.taskJobExecutions = Collections.emptyList();

}

else {

this.taskJobExecutions = Collections.unmodifiableList(taskJobExecutions);

}

}

/**

* Will augment the provided {@link RestTemplate} with the Jackson Mixins required by

* Spring Cloud Data Flow, specifically:

* <p>

* <ul>

* <li>{@link JobExecutionJacksonMixIn}

* <li>{@link JobParametersJacksonMixIn}

* <li>{@link JobParameterJacksonMixIn}

* <li>{@link JobInstanceJacksonMixIn}

* <li>{@link ExitStatusJacksonMixIn}

* <li>{@link StepExecutionJacksonMixIn}

* <li>{@link ExecutionContextJacksonMixIn}

* <li>{@link StepExecutionHistoryJacksonMixIn}

* </ul>

* <p>

* Furthermore, this method will also register the {@link Jackson2HalModule}

*

* @param restTemplate Can be null. Instantiates a new {@link RestTemplate} if null

* @return RestTemplate with the required Jackson Mixins

*/

public static RestTemplate prepareRestTemplate(RestTemplate restTemplate) {

if (restTemplate == null) {

restTemplate = new RestTemplate();

}

restTemplate.setErrorHandler(new VndErrorResponseErrorHandler(restTemplate.getMessageConverters()));

boolean containsMappingJackson2HttpMessageConverter = false;

for (HttpMessageConverter<?> converter : restTemplate.getMessageConverters()) {

if (converter instanceof MappingJackson2HttpMessageConverter) {

containsMappingJackson2HttpMessageConverter = true;

final MappingJackson2HttpMessageConverter jacksonConverter = (MappingJackson2HttpMessageConverter) converter;

jacksonConverter.getObjectMapper().registerModule(new Jackson2HalModule())

.addMixIn(JobExecution.class,JobExecutionJacksonMixIn.class)

.addMixIn(JobParameters.class,JobParametersJacksonMixIn.class)

.addMixIn(JobParameter.class,JobParameterJacksonMixIn.class)

.addMixIn(JobInstance.class,JobInstanceJacksonMixIn.class)

.addMixIn(ExitStatus.class,ExitStatusJacksonMixIn.class)

.addMixIn(StepExecution.class,StepExecutionJacksonMixIn.class)

.addMixIn(ExecutionContext.class,ExecutionContextJacksonMixIn.class)

.addMixIn(StepExecutionHistory.class,StepExecutionHistoryJacksonMixIn.class);

}

}

if (!containsMappingJackson2HttpMessageConverter) {

throw new IllegalArgumentException(

"The RestTemplate does not contain a required " + "MappingJackson2HttpMessageConverter.");

}

return restTemplate;

}

@Test

public void testDeserializationOfMultipleJobExecutions() throws IOException {

final ObjectMapper objectMapper = new ObjectMapper();

final InputStream inputStream = JobExecutionDeserializationTests.class

.getResourceAsStream("/JobExecutionjson.txt");

final String json = new String(StreamUtils.copyToByteArray(inputStream));

objectMapper.registerModule(new Jackson2HalModule());

objectMapper.addMixIn(JobExecution.class,JobExecutionJacksonMixIn.class);

objectMapper.addMixIn(JobParameters.class,JobParametersJacksonMixIn.class);

objectMapper.addMixIn(JobParameter.class,JobParameterJacksonMixIn.class);

objectMapper.addMixIn(JobInstance.class,JobInstanceJacksonMixIn.class);

objectMapper.addMixIn(StepExecution.class,StepExecutionJacksonMixIn.class);

objectMapper.addMixIn(StepExecutionHistory.class,StepExecutionHistoryJacksonMixIn.class);

objectMapper.addMixIn(ExecutionContext.class,ExecutionContextJacksonMixIn.class);

objectMapper.addMixIn(ExitStatus.class,ExitStatusJacksonMixIn.class);

PagedResources<Resource<JobExecutionResource>> paged = objectMapper.readValue(json,new TypeReference<PagedResources<Resource<JobExecutionResource>>>() {

});

JobExecutionResource jobExecutionResource = paged.getContent().iterator().next().getContent();

Assert.assertEquals("Expect 6 JobExecutionInfoResource",6,paged.getContent().size());

Assert.assertEquals(Long.valueOf(6),jobExecutionResource.getJobId());

Assert.assertEquals("job200616815",jobExecutionResource.getName());

Assert.assertEquals("COMPLETED",jobExecutionResource.getJobExecution().getStatus().name());

}

@Test

public void testDeserializationOfSingleJobExecution() throws IOException {

final ObjectMapper objectMapper = new ObjectMapper();

objectMapper.registerModule(new Jackson2HalModule());

final InputStream inputStream = JobExecutionDeserializationTests.class

.getResourceAsStream("/SingleJobExecutionjson.txt");

final String json = new String(StreamUtils.copyToByteArray(inputStream));

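objectMapper.addMixIn(JobExecution.class,JobExecutionJacksonMixIn.class);

objectMapper.addMixIn(JobParameters.class,JobParametersJacksonMixIn.class);

objectMapper.addMixIn(JobParameter.class,JobParameterJacksonMixIn.class);

objectMapper.addMixIn(JobInstance.class,JobInstanceJacksonMixIn.class);

objectMapper.addMixIn(StepExecution.class,StepExecutionJacksonMixIn.class);

objectMapper.addMixIn(StepExecutionHistory.class,StepExecutionHistoryJacksonMixIn.class);

objectMapper.addMixIn(ExecutionContext.class,ExecutionContextJacksonMixIn.class);

objectMapper.addMixIn(ExitStatus.class,ExitStatusJacksonMixIn.class);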

objectMapper.setDateFormat(new ISO8601DateFormatWithMilliSeconds());

final JobExecutionResource jobExecutionInfoResource = objectMapper.readValue(json,JobExecutionResource.class);

Assert.assertNotNull(jobExecutionInfoResource);

Assert.assertEquals(Long.valueOf(1),jobExecutionInfoResource.getJobId());

Assert.assertEquals("ff.job",jobExecutionInfoResource.getName());

Assert.assertEquals("COMPLETED",jobExecutionInfoResource.getJobExecution().getStatus().name());

}

/**

* Retrieves Pageable list of {@link JobInstanceExecutions} from the JobRepository with a

* specific jobName and matches the data with the associated JobExecutions.

*

* @param pageable enumerates the data to be returned.

* @param jobName the name of the job for which to search.

* @return List containing {@link JobInstanceExecutions}.

*/

@Override

public List<JobInstanceExecutions> listTaskJobInstancesForJobName(Pageable pageable,String jobName)

throws NoSuchJobException {

Assert.notNull(pageable,"pageable must not be null");

Assert.notNull(jobName,"jobName must not be null");

List<JobInstanceExecutions> taskJobInstances = new ArrayList<>();

for (JobInstance jobInstance : jobService.listJobInstances(jobName,pageable.getOffset(),pageable.getPageSize())) {

taskJobInstances.add(getJobInstanceExecution(jobInstance));

}

return taskJobInstances;

}

@Before

public void setupMockMVC() {

this.mockMvc = MockMvcBuilders.webAppContextSetup(wac)

.defaultRequest(get("/").accept(MediaType.APPLICATION_JSON)).build();

if (!initialized) {

this.sampleArgumentList = new LinkedList<String>();

this.sampleArgumentList.add("--password=foo");

this.sampleArgumentList.add("password=bar");

this.sampleArgumentList.add("org.woot.password=baz");

this.sampleArgumentList.add("foo.bar=foo");

this.sampleArgumentList.add("bar.baz = boo");

this.sampleArgumentList.add("foo.credentials.boo=bar");

this.sampleArgumentList.add("spring.datasource.username=dbuser");

this.sampleArgumentList.add("spring.datasource.password=dbpass");

this.sampleCleansedArgumentList = new LinkedList<String>();

this.sampleCleansedArgumentList.add("--password=******");

this.sampleCleansedArgumentList.add("password=******");

this.sampleCleansedArgumentList.add("org.woot.password=******");

this.sampleCleansedArgumentList.add("foo.bar=foo");

this.sampleCleansedArgumentList.add("bar.baz = boo");

this.sampleCleansedArgumentList.add("foo.credentials.boo=******");

this.sampleCleansedArgumentList.add("spring.datasource.username=dbuser");

this.sampleCleansedArgumentList.add("spring.datasource.password=******");

taskDefinitionRepository.save(new TaskDefinition(TASK_NAME_ORIG,"demo"));