
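This article looks at a Greenplum error ("greenplum error!") and then collects notes on several related topics: Concurrency Control in Greenplum Database, what Deepgreen DB is (with Deepgreen and Greenplum download links), common operations SQL for Deepgreen (Greenplum) DBAs, and the steps for removing a node from Deepgreen/Greenplum.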

Contents:

- greenplum error!

- Concurrency Control in Greenplum Database

- What is Deepgreen DB (with Deepgreen and Greenplum download links)

- Common operations SQL for Deepgreen (Greenplum) DBAs

- Steps to remove a node from Deepgreen/Greenplum

greenplum error!

20160923:22:17:32:026503 gpinitsystem:host-192-168-111-19:gpadmin-[INFO]:-Checking Master host

20160923:22:17:32:026503 gpinitsystem:host-192-168-111-19:gpadmin-[WARN]:-Have lock file /tmp/.s.PGSQL.5432.lock but no process running on port 5432

20160923:22:17:32:gpinitsystem:host-192-168-111-19:gpadmin-[FATAL]:-Found indication of postmaster process on port 5432 on Master host Script Exiting!

Solution: remove the stale lock file /tmp/.s.PGSQL.5432.lock
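Before deleting the lock file, it is worth confirming that nothing is really listening on the port, as the WARN line reports. The following is a minimal Python sketch of that check and is not part of Greenplum's tooling. The names `port_in_use` and `remove_stale_lock` are hypothetical, and the sketch assumes that a refused TCP connection on 127.0.0.1 means no postmaster owns the port (a server bound only to another address would not be detected).

```python
import os
import socket


def port_in_use(port, host="127.0.0.1", timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an errno value otherwise.
        return sock.connect_ex((host, port)) == 0


def remove_stale_lock(lock_path, port, is_listening=port_in_use):
    """Delete lock_path only when it exists and the port is idle.

    Returns True if the file was removed, False otherwise.
    is_listening is injectable so the probe can be replaced in tests.
    """
    if not os.path.exists(lock_path):
        return False
    if is_listening(port):
        # A live process may own the lock, so leave the file alone.
        return False
    os.remove(lock_path)
    return True
```

With the path and port from the log above, the call would be `remove_stale_lock("/tmp/.s.PGSQL.5432.lock", 5432)`.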

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total segment instance count from metadata = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:-----------------------------------------------------

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Primary Segment Status

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:-----------------------------------------------------

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total primary segments = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total primary segment valid (at master) = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number of postmaster.pid files found = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number of /tmp lock files found = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Total number postmaster processes found = 4

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:-----------------------------------------------------

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Mirror Segment Status

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:-----------------------------------------------------

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:- Mirrors not configured on this array

20160923:22:35:49:023838 gpstate:host-192-168-111-19:gpadmin-[INFO]:-----------------------------------------------------

[gpadmin@host-192-168-111-19 ~]$ ls

Concurrency Control in Greenplum Database

Unlike traditional database systems which use locks for concurrency control, Greenplum Database (as does PostgreSQL) maintains data consistency by using a multiversion model (Multiversion Concurrency Control, MVCC). This means that while querying a database, each transaction sees a snapshot of data which protects the transaction from viewing inconsistent data that could be caused by (other) concurrent updates on the same data rows. This provides transaction isolation for each database session.

MVCC, by eschewing explicit locking methodologies of traditional database systems, minimizes lock contention in order to allow for reasonable performance in multiuser environments. The main advantage to using the MVCC model of concurrency control rather than locking is that in MVCC locks acquired for querying (reading) data do not conflict with locks acquired for writing data, and so reading never blocks writing and writing never blocks reading.

Greenplum Database provides various lock modes to control concurrent access to data in tables. Most Greenplum Database SQL commands automatically acquire locks of appropriate modes to ensure that referenced tables are not dropped or modified in incompatible ways while the command executes. For applications that cannot adapt easily to MVCC behavior, the LOCK command can be used to acquire explicit locks. However, proper use of MVCC will generally provide better performance than locks.
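The snapshot behavior described above can be illustrated with a toy model. This is a simplified Python sketch, not how Greenplum or PostgreSQL implement MVCC: the class `ToyMVCCStore` is hypothetical, it holds a single value, each transaction takes one snapshot at `begin`, and aborts and write-write conflicts are not modeled. The field names `xmin` and `xmax` are borrowed from PostgreSQL's row-version bookkeeping.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RowVersion:
    value: str
    xmin: int                   # id of the transaction that created this version
    xmax: Optional[int] = None  # id of the transaction that superseded it, if any


class ToyMVCCStore:
    """Single-value store where writers add versions instead of overwriting."""

    def __init__(self):
        self.versions = []
        self.committed = set()
        self.next_xid = 1

    def begin(self):
        """Start a transaction: return (xid, snapshot of committed xids)."""
        xid = self.next_xid
        self.next_xid += 1
        return xid, frozenset(self.committed)

    def write(self, xid, value):
        # Mark the newest live version as superseded, then append a new one.
        if self.versions and self.versions[-1].xmax is None:
            self.versions[-1].xmax = xid
        self.versions.append(RowVersion(value, xmin=xid))

    def commit(self, xid):
        self.committed.add(xid)

    def read(self, xid, snapshot):
        """Return the value visible to this transaction, or None."""
        for v in reversed(self.versions):
            created = v.xmin == xid or v.xmin in snapshot
            deleted = v.xmax is not None and (v.xmax == xid or v.xmax in snapshot)
            if created and not deleted:
                return v.value
        return None
```

In this model a reader never waits for a writer: it skips versions whose creating transaction is not in its snapshot and keeps seeing the older version, which is the "reading never blocks writing" property in miniature.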

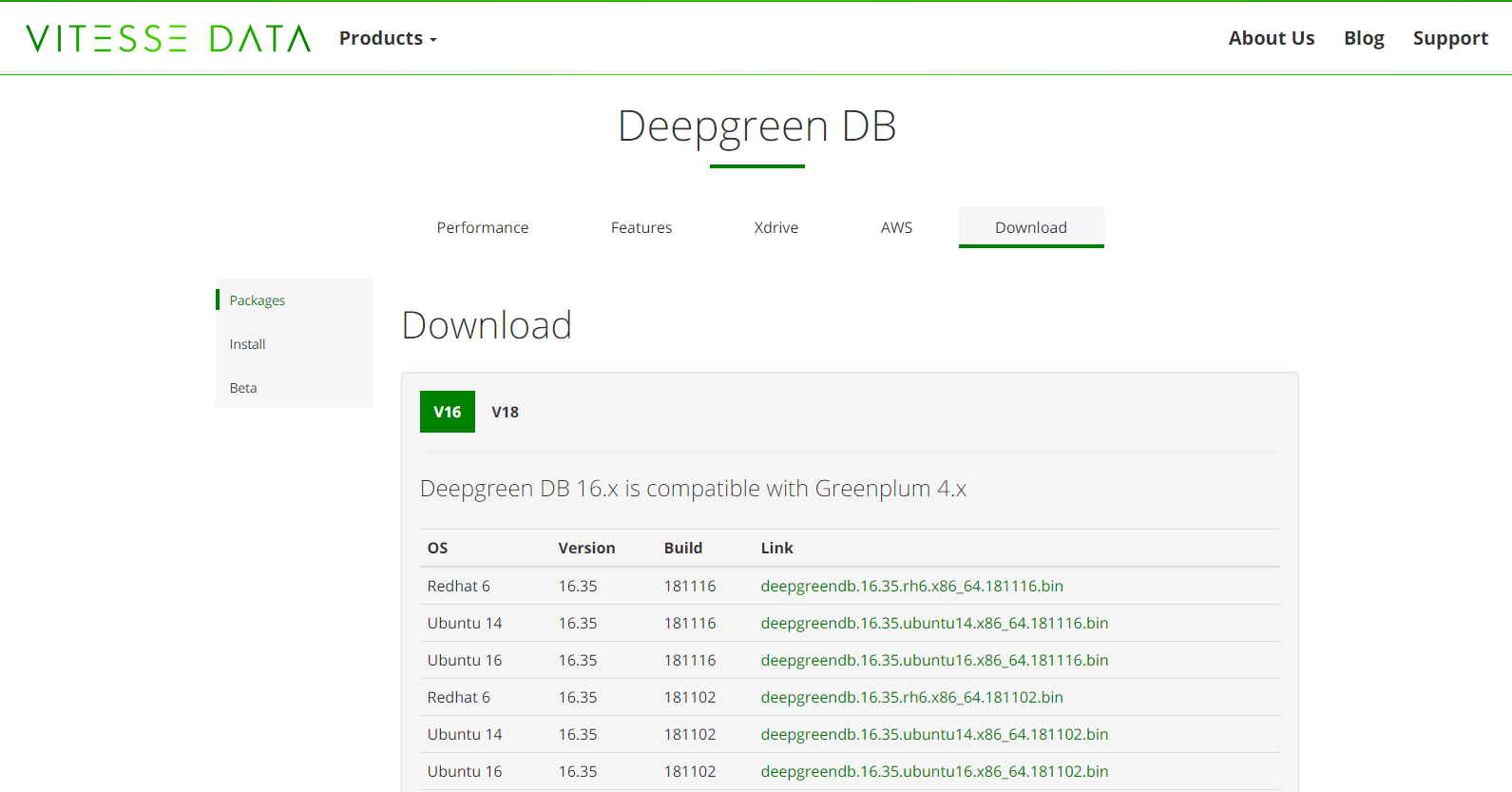

What is Deepgreen DB (with Deepgreen and Greenplum download links)

Deepgreen official download page: http://vitessedata.com/products/deepgreen-db/download/ (no registration required)

Greenplum official download page: https://network.pivotal.io/products/pivotal-gpdb/#/releases/118806/file_groups/1022 (registration required)

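- Superior join and aggregation algorithms

- A new spill-handling subsystem

- JIT-based query optimization, vectorized scans, and data-path optimization

- Data does not need to be reloaded, except for data compressed with quicklz, which must be changed

- DML and DDL statements are unchanged

- UDF (user-defined function) syntax is unchanged

- Stored procedure syntax is unchanged

- Connection and authorization protocols such as JDBC/ODBC are unchanged

- Operational scripts (for example, backup scripts) are unchanged

These two data types (decimal64 and decimal128) must be loaded into each database that needs them, using the following commands, after the database system has been initialized: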

dgadmin@flash:~$ source deepgreendb/greenplum_path.sh

dgadmin@flash:~$ cd $GPHOME/share/postgresql/contrib/

dgadmin@flash:~/deepgreendb/share/postgresql/contrib$ psql postgres -f pg_decimal.sql

Test query: select avg(x), sum(2*x) from table

Data volume: 1,000,000 rows

dgadmin@flash:~$ psql -d postgres

psql (8.2.15)

Type "help" for help.

postgres=# drop table if exists tt;

NOTICE: table "tt" does not exist, skipping

DROP TABLE

postgres=# create table tt(

postgres(# ii bigint,

postgres(# f64 double precision,

postgres(# d64 decimal64,

postgres(# d128 decimal128,

postgres(# n numeric(15, 3))

postgres-# distributed randomly;

CREATE TABLE

postgres=# insert into tt

postgres-# select i,

postgres-# i + 0.123,

postgres-# (i + 0.123)::decimal64,

postgres-# (i + 0.123)::decimal128,

postgres-# i + 0.123

postgres-# from generate_series(1, 1000000) i;

INSERT 0 1000000

postgres=# \timing on

Timing is on.

postgres=# select count(*) from tt;

count

---------

1000000

(1 row)

Time: 161.500 ms

postgres=# set vitesse.enable=1;

SET

Time: 1.695 ms

postgres=# select avg(f64),sum(2*f64) from tt;

avg | sum

------------------+------------------

500000.622996815 | 1000001245993.63

(1 row)

Time: 45.368 ms

postgres=# select avg(d64),sum(2*d64) from tt;

avg | sum

------------+-------------------

500000.623 | 1000001246000.000

(1 row)

Time: 135.693 ms

postgres=# select avg(d128),sum(2*d128) from tt;

avg | sum

------------+-------------------

500000.623 | 1000001246000.000

(1 row)

Time: 148.286 ms

postgres=# set vitesse.enable=1;

SET

Time: 11.691 ms

postgres=# select avg(n),sum(2*n) from tt;

avg | sum

---------------------+-------------------

500000.623000000000 | 1000001246000.000

(1 row)

Time: 154.189 ms

postgres=# set vitesse.enable=0;

SET

Time: 1.426 ms

postgres=# select avg(n),sum(2*n) from tt;

avg | sum

---------------------+-------------------

500000.623000000000 | 1000001246000.000

(1 row)

Time: 296.291 ms

Summary of the timings above:

45ms - 64-bit float

136ms - decimal64

148ms - decimal128

154ms - deepgreen numeric (vitesse.enable=1)

296ms - greenplum numeric (vitesse.enable=0)

dgadmin@flash:~$ psql postgres -f $GPHOME/share/postgresql/contrib/json.sql

dgadmin@flash:~$ psql postgres

psql (8.2.15)

Type "help" for help.

postgres=# select '[1,2,3]'::json->2;

?column?

----------

3

(1 row)

postgres=# create temp table mytab(i int, j json) distributed by (i);

CREATE TABLE

postgres=# insert into mytab values (1, null), (2, '[2,3,4]'), (3, '[3000,4000,5000]');

INSERT 0 3

postgres=#

postgres=# insert into mytab values (1, null), (2, '[2,3,4]'), (3, '[3000,4000,5000]');

INSERT 0 3

postgres=# select i, j->2 from mytab;

i | ?column?

---+----------

2 | 4

2 | 4

1 |

3 | 5000

1 |

3 | 5000

(6 rows)

- zstd home page: http://facebook.github.io/zstd/

- lz4 home page: http://lz4.github.io/lz4/

postgres=# create temp table ttnone (

postgres(# i int,

postgres(# t text,

postgres(# default column encoding (compresstype=none))

postgres-# with (appendonly=true, orientation=column)

postgres-# distributed by (i);

CREATE TABLE

postgres=# \timing on

Timing is on.

postgres=# create temp table ttzlib(

postgres(# i int,

postgres(# t text,

postgres(# default column encoding (compresstype=zlib, compresslevel=1))

postgres-# with (appendonly=true, orientation=column)

postgres-# distributed by (i);

CREATE TABLE

Time: 762.596 ms

postgres=# create temp table ttzstd (

postgres(# i int,

postgres(# t text,

postgres(# default column encoding (compresstype=zstd, compresslevel=1))

postgres-# with (appendonly=true, orientation=column)

postgres-# distributed by (i);

CREATE TABLE

Time: 827.033 ms

postgres=# create temp table ttlz4 (

postgres(# i int,

postgres(# t text,

postgres(# default column encoding (compresstype=lz4))

postgres-# with (appendonly=true, orientation=column)

postgres-# distributed by (i);

CREATE TABLE

Time: 845.728 ms

postgres=# insert into ttnone select i, 'user '||i from generate_series(1, 100000000) i;

INSERT 0 100000000

Time: 104641.369 ms

postgres=# insert into ttzlib select i, 'user '||i from generate_series(1, 100000000) i;

INSERT 0 100000000

Time: 99557.505 ms

postgres=# insert into ttzstd select i, 'user '||i from generate_series(1, 100000000) i;

INSERT 0 100000000

Time: 98800.567 ms

postgres=# insert into ttlz4 select i, 'user '||i from generate_series(1, 100000000) i;

INSERT 0 100000000

Time: 96886.107 ms

postgres=# select pg_size_pretty(pg_relation_size('ttnone'));

pg_size_pretty

----------------

1708 MB

(1 row)

Time: 83.411 ms

postgres=# select pg_size_pretty(pg_relation_size('ttzlib'));

pg_size_pretty

----------------

374 MB

(1 row)

Time: 4.641 ms

postgres=# select pg_size_pretty(pg_relation_size('ttzstd'));

pg_size_pretty

----------------

325 MB

(1 row)

Time: 5.015 ms

postgres=# select pg_size_pretty(pg_relation_size('ttlz4'));

pg_size_pretty

----------------

785 MB

(1 row)

Time: 4.483 ms
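The four sizes reported above are easier to compare as compression ratios relative to the uncompressed table. The short Python sketch below does only that arithmetic; the numbers are copied from the transcript, and the helper name `compression_ratios` is hypothetical.

```python
# Sizes in MB as reported by pg_size_pretty in the transcript above.
sizes_mb = {"none": 1708, "zlib": 374, "zstd": 325, "lz4": 785}


def compression_ratios(sizes, baseline="none"):
    """Map each codec to uncompressed_size / compressed_size."""
    base = sizes[baseline]
    return {name: base / size for name, size in sizes.items()}


ratios = compression_ratios(sizes_mb)
# Print codecs from strongest to weakest compression.
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>4}: {ratio:.2f}x")
```

For this data set the ratios come out at roughly 5.3x for zstd, 4.6x for zlib, and 2.2x for lz4.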

postgres=# select sum(length(t)) from ttnone;

sum

------------

1288888898

(1 row)

Time: 4414.965 ms

postgres=# select sum(length(t)) from ttzlib;

sum

------------

1288888898

(1 row)

Time: 4500.671 ms

postgres=# select sum(length(t)) from ttzstd;

sum

------------

1288888898

(1 row)

Time: 3849.648 ms

postgres=# select sum(length(t)) from ttlz4;

sum

------------

1288888898

(1 row)

Time: 3160.477 ms

- SELECT {select-clauses} LIMIT SAMPLE {n} ROWS;

- SELECT {select-clauses} LIMIT SAMPLE {n} PERCENT;

postgres=# select count(*) from ttlz4;

count

-----------

100000000

(1 row)

Time: 903.661 ms

postgres=# select * from ttlz4 limit sample 0.00001 percent;

i | t

----------+---------------

3442917 | user 3442917

9182620 | user 9182620

9665879 | user 9665879

13791056 | user 13791056

15669131 | user 15669131

16234351 | user 16234351

19592531 | user 19592531

39097955 | user 39097955

48822058 | user 48822058

83021724 | user 83021724

1342299 | user 1342299

20309120 | user 20309120

34448511 | user 34448511

38060122 | user 38060122

69084858 | user 69084858

73307236 | user 73307236

95421406 | user 95421406

(17 rows)

Time: 4208.847 ms

postgres=# select * from ttlz4 limit sample 10 rows;

i | t

----------+---------------

78259144 | user 78259144

85551752 | user 85551752

90848887 | user 90848887

53923527 | user 53923527

46524603 | user 46524603

31635115 | user 31635115

19030885 | user 19030885

97877732 | user 97877732

33238448 | user 33238448

20916240 | user 20916240

(10 rows)

Time: 3578.031 ms

Greenplum TPC-H test procedure and results:

https://blog.csdn.net/xfg0218/article/details/82785187


Common operations SQL for Deepgreen (Greenplum) DBAs

1. View object sizes (tables, indexes, databases, etc.)

select pg_size_pretty(pg_relation_size('$schema.$table'));

Example:

tpch=# select pg_size_pretty(pg_relation_size('public.customer'));

pg_size_pretty

----------------

122 MB

(1 row)

2. View user (non-system) tables and indexes

tpch=# select * from pg_stat_user_tables;

relid | schemaname | relname | seq_scan | seq_tup_read | idx_scan | idx_tup_fetch | n_tup_ins | n_tup_upd | n_tup_del | last_vacuum | last_autovacuum | last_analyze | last_autoanalyze

-------+------------+----------------------+----------+--------------+----------+---------------+-----------+-----------+-----------+-------------+-----------------+--------------+------------------

17327 | public | partsupp | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | | |

17294 | public | customer | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | | |

17158 | public | part | 0 | 0 | | | 0 | 0 | 0 | | | |

17259 | public | supplier | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | | |

16633 | gp_toolkit | gp_disk_free | 0 | 0 | | | 0 | 0 | 0 | | | |

17394 | public | lineitem | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | | |

17361 | public | orders | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | | |

16439 | gp_toolkit | __gp_masterid | 0 | 0 | | | 0 | 0 | 0 | | | |

49164 | public | number_xdrive | 0 | 0 | | | 0 | 0 | 0 | | | |

17193 | public | region | 0 | 0 | | | 0 | 0 | 0 | | | |

49215 | public | number_gpfdist | 0 | 0 | | | 0 | 0 | 0 | | | |

16494 | gp_toolkit | __gp_log_master_ext | 0 | 0 | | | 0 | 0 | 0 | | | |

40972 | public | number | 0 | 0 | | | 0 | 0 | 0 | | | |

16413 | gp_toolkit | __gp_localid | 0 | 0 | | | 0 | 0 | 0 | | | |

16468 | gp_toolkit | __gp_log_segment_ext | 0 | 0 | | | 0 | 0 | 0 | | | |

17226 | public | nation | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | | |

(16 rows)

tpch=# select * from pg_stat_user_indexes;

relid | indexrelid | schemaname | relname | indexrelname | idx_scan | idx_tup_read | idx_tup_fetch

-------+------------+------------+----------+-------------------------+----------+--------------+---------------

17259 | 17602 | public | supplier | idx_supplier_nation_key | 0 | 0 | 0

17327 | 17623 | public | partsupp | idx_partsupp_partkey | 0 | 0 | 0

17327 | 17644 | public | partsupp | idx_partsupp_suppkey | 0 | 0 | 0

17294 | 17663 | public | customer | idx_customer_nationkey | 0 | 0 | 0

17361 | 17684 | public | orders | idx_orders_custkey | 0 | 0 | 0

17394 | 17705 | public | lineitem | idx_lineitem_orderkey | 0 | 0 | 0

17394 | 17726 | public | lineitem | idx_lineitem_part_supp | 0 | 0 | 0

17226 | 17745 | public | nation | idx_nation_regionkey | 0 | 0 | 0

17394 | 17766 | public | lineitem | idx_lineitem_shipdate | 0 | 0 | 0

17361 | 17785 | public | orders | idx_orders_orderdate | 0 | 0 | 0

(10 rows)

3. View table partitions

select b.nspname||'.'||a.relname as tablename, d.parname as partname from pg_class a, pg_namespace b, pg_partition c,pg_partition_rule d where a.relnamespace = b.oid and b.nspname = 'public' and a.relname = 'customer' and a.oid = c.parrelid and c.oid = d.paroid order by parname;

tablename | partname

-----------+----------

(0 rows)

4. View the distribution key

tpch=# select b.attname from pg_class a, pg_attribute b, pg_type c, gp_distribution_policy d, pg_namespace e where d.localoid = a.oid and a.relnamespace = e.oid and e.nspname = 'public' and a.relname= 'customer' and a.oid = b.attrelid and b.atttypid = c.oid and b.attnum > 0 and b.attnum = any(d.attrnums) order by attnum;

attname

-----------

c_custkey

(1 row)

5. View currently active queries

select procpid as pid, sess_id as session, usename as user, current_query as query, waiting,date_trunc('second',query_start) as start_time, client_addr as useraddr from pg_stat_activity where datname ='$PGDATABASE'

and current_query not like '%from pg_stat_activity%where datname =%' order by start_time;

Example:

tpch=# select procpid as pid, sess_id as session, usename as user, current_query as query, waiting,date_trunc('second',query_start) as start_time, client_addr as useraddr from pg_stat_activity where datname ='tpch'

tpch-# and current_query not like '%from pg_stat_activity%where datname =%' order by start_time;

pid | session | user | query | waiting | start_time | useraddr

-----+---------+------+-------+---------+------------+----------

(0 rows)
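The active-query statement above is a template with a database-name placeholder. One way to fill it in from a script is sketched below in Python. This is an illustration only: the helper `active_queries_sql` is hypothetical, the placeholder is spelled `$PGDATABASE` here, and the check that restricts the name to a plain identifier is an added safeguard rather than something the source prescribes.

```python
import re
from string import Template

# Query template from the text, with $PGDATABASE as the placeholder.
ACTIVE_QUERIES = Template(
    "select procpid as pid, sess_id as session, usename as user, "
    "current_query as query, waiting, "
    "date_trunc('second', query_start) as start_time, "
    "client_addr as useraddr "
    "from pg_stat_activity where datname = '$PGDATABASE' "
    "and current_query not like '%from pg_stat_activity%where datname =%' "
    "order by start_time;"
)


def active_queries_sql(database):
    """Fill in the database name after checking it is a plain identifier."""
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", database):
        raise ValueError(f"unexpected database name: {database!r}")
    return ACTIVE_QUERIES.substitute(PGDATABASE=database)
```

Calling `active_queries_sql("tpch")` yields the statement used in the example, which can then be passed to `psql -c` or a database driver.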

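Steps to remove a node from Deepgreen/Greenplum

Neither Greenplum nor Deepgreen officially documents a method or recommendation for removing a node, but in practice a node can be removed. Because the outcome is uncertain, removing a node is quite likely to cause other problems, so take a backup first and proceed with care. The steps are as follows:

1. Check the current state of the database (12 instances)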

[gpadmin@sdw1 ~]$ gpstate

20170816:12:53:25:097578 gpstate:sdw1:gpadmin-[INFO]:-Starting gpstate with args:

20170816:12:53:25:097578 gpstate:sdw1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 4.3.99.00 build Deepgreen DB'

20170816:12:53:25:097578 gpstate:sdw1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.2.15 (Greenplum Database 4.3.99.00 build Deepgreen DB) on x86_64-unknown-linux-gnu, compiled by GCC gcc (GCC) 4.9.2 20150212 (Red Hat 4.9.2-6) compiled on Jul 6 2017 03:04:10'

20170816:12:53:25:097578 gpstate:sdw1:gpadmin-[INFO]:-Obtaining Segment details from master...

20170816:12:53:25:097578 gpstate:sdw1:gpadmin-[INFO]:-Gathering data from segments...

..

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-Greenplum instance status summary

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Master instance = Active

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Master standby = No master standby configured

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total segment instance count from metadata = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Primary Segment Status

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total primary segments = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total primary segment valid (at master) = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number of /tmp lock files found = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total number postmaster processes found = 12

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Mirror Segment Status

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Mirrors not configured on this array

20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:-----------------------------------------------------
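When this check is scripted, the "key = value" lines of the gpstate summary can be parsed and compared before any catalog change is made. The Python sketch below assumes the log line layout shown above (timestamp, PID, `gpstate:host:user-[LEVEL]:-message`); the function names are hypothetical and the regular expression is tailored to this output only.

```python
import re

# Matches lines such as
#   20170816:12:53:27:097578 gpstate:sdw1:gpadmin-[INFO]:- Total primary segments = 12
LINE_RE = re.compile(
    r"^\d{8}:\d{2}:\d{2}:\d{2}:\d+ gpstate:(?P<host>[^:]+):(?P<user>.+?)"
    r"-\[(?P<level>[A-Z]+)\]:-"
    r"\s*(?P<key>[^=]+?)\s*=\s*(?P<value>.+?)\s*$"
)


def parse_gpstate_summary(text):
    """Return {key: value} for every 'key = value' line in gpstate output."""
    summary = {}
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            value = m.group("value")
            # Counts become ints; other values (e.g. 'Active') stay strings.
            summary[m.group("key")] = int(value) if value.isdigit() else value
    return summary


def all_primaries_up(summary):
    """True when every primary segment is valid and none has failed."""
    return (
        summary.get("Total primary segments")
        == summary.get("Total primary segment valid (at master)")
        and summary.get("Total primary segment failures (at master)") == 0
    )
```

For the output above, `parse_gpstate_summary` would report 12 primary segments, 12 valid and 0 failures, so `all_primaries_up` returns True.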

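2. Back up the database in parallel

Use the gpcrondump command to back up the database. The details are not covered here; consult the documentation if needed.

3. Shut down the database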

[gpadmin@sdw1 ~]$ gpstop -M fast

20170816:12:54:10:097793 gpstop:sdw1:gpadmin-[INFO]:-Starting gpstop with args: -M fast

20170816:12:54:10:097793 gpstop:sdw1:gpadmin-[INFO]:-Gathering information and validating the environment...

20170816:12:54:10:097793 gpstop:sdw1:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20170816:12:54:10:097793 gpstop:sdw1:gpadmin-[INFO]:-Obtaining Segment details from master...

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 4.3.99.00 build Deepgreen DB'

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:---------------------------------------------

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:-Master instance parameters

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:---------------------------------------------

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Master Greenplum instance process active PID = 31250

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Database = template1

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Master port = 5432

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Master directory = /hgdata/master/hgdwseg-1

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Shutdown mode = fast

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Timeout = 120

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Shutdown Master standby host = Off

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:---------------------------------------------

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:-Segment instances that will be shutdown:

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:---------------------------------------------

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- Host Datadir Port Status

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg0 25432 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg1 25433 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg2 25434 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg3 25435 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg4 25436 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg5 25437 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg6 25438 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg7 25439 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg8 25440 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg9 25441 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg10 25442 u

20170816:12:54:11:097793 gpstop:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg11 25443 u

Continue with Greenplum instance shutdown Yy|Nn (default=N):

> y

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-There are 0 connections to the database

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='fast'

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-Master host=sdw1

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-Detected 0 connections to database

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-Using standard WAIT mode of 120 seconds

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-Commencing Master instance shutdown with mode=fast

20170816:12:54:12:097793 gpstop:sdw1:gpadmin-[INFO]:-Master segment instance directory=/hgdata/master/hgdwseg-1

20170816:12:54:13:097793 gpstop:sdw1:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process

20170816:12:54:13:097793 gpstop:sdw1:gpadmin-[INFO]:-Terminating processes for segment /hgdata/master/hgdwseg-1

20170816:12:54:13:097793 gpstop:sdw1:gpadmin-[INFO]:-No standby master host configured

20170816:12:54:13:097793 gpstop:sdw1:gpadmin-[INFO]:-Commencing parallel segment instance shutdown, please wait...

20170816:12:54:13:097793 gpstop:sdw1:gpadmin-[INFO]:-0.00% of jobs completed

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-100.00% of jobs completed

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:- Segments stopped successfully = 12

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:- Segments with errors during stop = 0

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-Successfully shutdown 12 of 12 segment instances

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-Database successfully shutdown with no errors reported

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-Cleaning up leftover gpmmon process

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-No leftover gpmmon process found

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-Cleaning up leftover gpsmon processes

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-No leftover gpsmon processes on some hosts. not attempting forceful termination on these hosts

20170816:12:54:23:097793 gpstop:sdw1:gpadmin-[INFO]:-Cleaning up leftover shared memory

4. Start the database in admin (master-only) mode

[gpadmin@sdw1 ~]$ gpstart -m

20170816:12:54:40:098061 gpstart:sdw1:gpadmin-[INFO]:-Starting gpstart with args: -m

20170816:12:54:40:098061 gpstart:sdw1:gpadmin-[INFO]:-Gathering information and validating the environment...

20170816:12:54:40:098061 gpstart:sdw1:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 4.3.99.00 build Deepgreen DB'

20170816:12:54:40:098061 gpstart:sdw1:gpadmin-[INFO]:-Greenplum Catalog Version: '201310150'

20170816:12:54:40:098061 gpstart:sdw1:gpadmin-[INFO]:-Master-only start requested in configuration without a standby master.

Continue with master-only startup Yy|Nn (default=N):

> y

20170816:12:54:41:098061 gpstart:sdw1:gpadmin-[INFO]:-Starting Master instance in admin mode

20170816:12:54:42:098061 gpstart:sdw1:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20170816:12:54:42:098061 gpstart:sdw1:gpadmin-[INFO]:-Obtaining Segment details from master...

20170816:12:54:42:098061 gpstart:sdw1:gpadmin-[INFO]:-Setting new master era

20170816:12:54:42:098061 gpstart:sdw1:gpadmin-[INFO]:-Master Started...

5. Connect to the database in utility mode

[gpadmin@sdw1 ~]$ PGOPTIONS="-c gp_session_role=utility" psql -d postgres

psql (8.2.15)

Type "help" for help.

6. Remove the segment

postgres=# select * from gp_segment_configuration;

dbid | content | role | preferred_role | mode | status | port | hostname | address | replication_port | san_mounts

------+---------+------+----------------+------+--------+-------+----------+---------+------------------+------------

1 | -1 | p | p | s | u | 5432 | sdw1 | sdw1 | |

2 | 0 | p | p | s | u | 25432 | sdw1 | sdw1 | |

3 | 1 | p | p | s | u | 25433 | sdw1 | sdw1 | |

4 | 2 | p | p | s | u | 25434 | sdw1 | sdw1 | |

5 | 3 | p | p | s | u | 25435 | sdw1 | sdw1 | |

6 | 4 | p | p | s | u | 25436 | sdw1 | sdw1 | |

7 | 5 | p | p | s | u | 25437 | sdw1 | sdw1 | |

8 | 6 | p | p | s | u | 25438 | sdw1 | sdw1 | |

9 | 7 | p | p | s | u | 25439 | sdw1 | sdw1 | |

10 | 8 | p | p | s | u | 25440 | sdw1 | sdw1 | |

11 | 9 | p | p | s | u | 25441 | sdw1 | sdw1 | |

12 | 10 | p | p | s | u | 25442 | sdw1 | sdw1 | |

13 | 11 | p | p | s | u | 25443 | sdw1 | sdw1 | |

(13 rows)

postgres=# set allow_system_table_mods='dml';

SET

postgres=# delete from gp_segment_configuration where dbid=13;

DELETE 1

postgres=# select * from gp_segment_configuration;

dbid | content | role | preferred_role | mode | status | port | hostname | address | replication_port | san_mounts

------+---------+------+----------------+------+--------+-------+----------+---------+------------------+------------

1 | -1 | p | p | s | u | 5432 | sdw1 | sdw1 | |

2 | 0 | p | p | s | u | 25432 | sdw1 | sdw1 | |

3 | 1 | p | p | s | u | 25433 | sdw1 | sdw1 | |

4 | 2 | p | p | s | u | 25434 | sdw1 | sdw1 | |

5 | 3 | p | p | s | u | 25435 | sdw1 | sdw1 | |

6 | 4 | p | p | s | u | 25436 | sdw1 | sdw1 | |

7 | 5 | p | p | s | u | 25437 | sdw1 | sdw1 | |

8 | 6 | p | p | s | u | 25438 | sdw1 | sdw1 | |

9 | 7 | p | p | s | u | 25439 | sdw1 | sdw1 | |

10 | 8 | p | p | s | u | 25440 | sdw1 | sdw1 | |

11 | 9 | p | p | s | u | 25441 | sdw1 | sdw1 | |

12 | 10 | p | p | s | u | 25442 | sdw1 | sdw1 | |

(12 rows)

7. Remove the filespace entry

postgres=# select * from pg_filespace_entry;

fsefsoid | fsedbid | fselocation

----------+---------+---------------------------

3052 | 1 | /hgdata/master/hgdwseg-1

3052 | 2 | /hgdata/primary/hgdwseg0

3052 | 3 | /hgdata/primary/hgdwseg1

3052 | 4 | /hgdata/primary/hgdwseg2

3052 | 5 | /hgdata/primary/hgdwseg3

3052 | 6 | /hgdata/primary/hgdwseg4

3052 | 7 | /hgdata/primary/hgdwseg5

3052 | 8 | /hgdata/primary/hgdwseg6

3052 | 9 | /hgdata/primary/hgdwseg7

3052 | 10 | /hgdata/primary/hgdwseg8

3052 | 11 | /hgdata/primary/hgdwseg9

3052 | 12 | /hgdata/primary/hgdwseg10

3052 | 13 | /hgdata/primary/hgdwseg11

(13 rows)

postgres=# delete from pg_filespace_entry where fsedbid=13;

DELETE 1

postgres=# select * from pg_filespace_entry;

fsefsoid | fsedbid | fselocation

----------+---------+---------------------------

3052 | 1 | /hgdata/master/hgdwseg-1

3052 | 2 | /hgdata/primary/hgdwseg0

3052 | 3 | /hgdata/primary/hgdwseg1

3052 | 4 | /hgdata/primary/hgdwseg2

3052 | 5 | /hgdata/primary/hgdwseg3

3052 | 6 | /hgdata/primary/hgdwseg4

3052 | 7 | /hgdata/primary/hgdwseg5

3052 | 8 | /hgdata/primary/hgdwseg6

3052 | 9 | /hgdata/primary/hgdwseg7

3052 | 10 | /hgdata/primary/hgdwseg8

3052 | 11 | /hgdata/primary/hgdwseg9

3052 | 12 | /hgdata/primary/hgdwseg10

(12 rows)
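Before leaving admin mode, it may help to confirm that the two catalog tables edited in steps 6 and 7 list the same set of dbids. The Python sketch below performs that comparison on plain lists of ids. The helper `orphaned_dbids` is hypothetical, and the idea that the two sets should match is an assumption drawn from this single-filespace example rather than a documented rule.

```python
def orphaned_dbids(segment_dbids, filespace_dbids):
    """Compare the dbid sets of the two catalog tables.

    Returns (only_in_segments, only_in_filespace); both lists are
    empty when the tables agree.
    """
    seg, fs = set(segment_dbids), set(filespace_dbids)
    return sorted(seg - fs), sorted(fs - seg)


# dbid values after steps 6 and 7 in the transcript above.
segments = range(1, 13)    # gp_segment_configuration.dbid: 1..12
filespaces = range(1, 13)  # pg_filespace_entry.fsedbid:    1..12
assert orphaned_dbids(segments, filespaces) == ([], [])

# If step 7 were skipped, dbid 13 would linger in pg_filespace_entry.
assert orphaned_dbids(range(1, 13), range(1, 14)) == ([], [13])
```

In practice the two id lists would come from `select dbid from gp_segment_configuration` and `select fsedbid from pg_filespace_entry`.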

8. Exit admin mode and start the database normally

[gpadmin@sdw1 ~]$ gpstop -m

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Starting gpstop with args: -m

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Gathering information and validating the environment...

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Obtaining Segment details from master...

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 4.3.99.00 build Deepgreen DB'

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-There are 0 connections to the database

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Commencing Master instance shutdown with mode='smart'

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Master host=sdw1

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Commencing Master instance shutdown with mode=smart

20170816:12:56:52:098095 gpstop:sdw1:gpadmin-[INFO]:-Master segment instance directory=/hgdata/master/hgdwseg-1

20170816:12:56:53:098095 gpstop:sdw1:gpadmin-[INFO]:-Attempting forceful termination of any leftover master process

20170816:12:56:53:098095 gpstop:sdw1:gpadmin-[INFO]:-Terminating processes for segment /hgdata/master/hgdwseg-1

[gpadmin@sdw1 ~]$ gpstart

20170816:12:57:02:098112 gpstart:sdw1:gpadmin-[INFO]:-Starting gpstart with args:

20170816:12:57:02:098112 gpstart:sdw1:gpadmin-[INFO]:-Gathering information and validating the environment...

20170816:12:57:02:098112 gpstart:sdw1:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 4.3.99.00 build Deepgreen DB'

20170816:12:57:02:098112 gpstart:sdw1:gpadmin-[INFO]:-Greenplum Catalog Version: '201310150'

20170816:12:57:02:098112 gpstart:sdw1:gpadmin-[INFO]:-Starting Master instance in admin mode

20170816:12:57:03:098112 gpstart:sdw1:gpadmin-[INFO]:-Obtaining Greenplum Master catalog information

20170816:12:57:03:098112 gpstart:sdw1:gpadmin-[INFO]:-Obtaining Segment details from master...

20170816:12:57:03:098112 gpstart:sdw1:gpadmin-[INFO]:-Setting new master era

20170816:12:57:03:098112 gpstart:sdw1:gpadmin-[INFO]:-Master Started...

20170816:12:57:03:098112 gpstart:sdw1:gpadmin-[INFO]:-Shutting down master

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:---------------------------

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Master instance parameters

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:---------------------------

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Database = template1

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Master Port = 5432

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Master directory = /hgdata/master/hgdwseg-1

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Timeout = 600 seconds

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Master standby = Off

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:---------------------------------------

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:-Segment instances that will be started

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:---------------------------------------

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- Host Datadir Port

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg0 25432

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg1 25433

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg2 25434

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg3 25435

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg4 25436

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg5 25437

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg6 25438

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg7 25439

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg8 25440

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg9 25441

20170816:12:57:05:098112 gpstart:sdw1:gpadmin-[INFO]:- sdw1 /hgdata/primary/hgdwseg10 25442

Continue with Greenplum instance startup Yy|Nn (default=N):

> y

20170816:12:57:07:098112 gpstart:sdw1:gpadmin-[INFO]:-Commencing parallel segment instance startup, please wait...

.......

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-Process results...

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:- Successful segment starts = 11

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:- Failed segment starts = 0

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:- Skipped segment starts (segments are marked down in configuration) = 0

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-Successfully started 11 of 11 segment instances

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-----------------------------------------------------

20170816:12:57:14:098112 gpstart:sdw1:gpadmin-[INFO]:-Starting Master instance sdw1 directory /hgdata/master/hgdwseg-1

20170816:12:57:15:098112 gpstart:sdw1:gpadmin-[INFO]:-Command pg_ctl reports Master sdw1 instance active

20170816:12:57:15:098112 gpstart:sdw1:gpadmin-[INFO]:-No standby master configured. skipping...

20170816:12:57:15:098112 gpstart:sdw1:gpadmin-[INFO]:-Database successfully started

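9. Restore the removed node's backup file into the current database with psql

psql -d postgres -f xxxx.sql # the restore process is not covered in detail here

Notes:

1) This article restores only the data of the removed node.

2) Running this procedure in reverse adds the removed node back, but restoring the data is time-consuming, about as long as rebuilding the database and restoring everything.

Reposted from: https://www.sypopo.com/post/M95Rm39Or7/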
