This article discusses the Hive error raised when running show databases (return code 1 from org.apa) and Hive executions that end with code 2. It also covers the following related errors: Android Studio Gradle build error: Received status code 400 from server: Bad Request; Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask; Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask; Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask.

Contents:

- Running show databases in Hive fails: return code 1 from org.apa (Hive execution returns code 2)

- Android Studio Gradle build 报错:Received status code 400 from server: Bad Request

- Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

- Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask

- Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

Running show databases in Hive fails: return code 1 from org.apa (Hive execution returns code 2)

hive> show databases;

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

Check the log output:

./hive -hiveconf hive.root.logger=DEBUG,console

(This starts Hive with debug logging printed to the console; on my machine the user is hadoop.)

Solution:

GRANT ALL PRIVILEGES ON *.* TO 'hadoop'@'master' IDENTIFIED BY 'hadoop' WITH GRANT OPTION;

flush privileges;

After that, it worked.
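The GRANT statement above uses MySQL syntax, so the sketch below assumes the metastore is backed by MySQL. MySQL 8.0 no longer accepts IDENTIFIED BY inside GRANT, so on newer servers the account has to be created first. The helper name `grant_statements` and its `mysql8` flag are hypothetical and only illustrate how the statements differ; it builds the SQL text and does not connect to any database.

```python
import re

# Only allow characters that need no SQL escaping; anything else is rejected
# rather than escaped, to keep this sketch simple.
_SAFE = re.compile(r"[A-Za-z0-9_.%-]+")


def grant_statements(user, host, password, mysql8=False):
    """Return the SQL statements that give user@host full privileges."""
    for value in (user, host, password):
        if not _SAFE.fullmatch(value):
            raise ValueError(f"unsupported character in {value!r}")
    account = f"'{user}'@'{host}'"
    if mysql8:
        # MySQL 8.0: create the account first, then grant without a password.
        return [
            f"CREATE USER IF NOT EXISTS {account} IDENTIFIED BY '{password}';",
            f"GRANT ALL PRIVILEGES ON *.* TO {account} WITH GRANT OPTION;",
            "FLUSH PRIVILEGES;",
        ]
    # Older servers: the single statement used in this article.
    return [
        f"GRANT ALL PRIVILEGES ON *.* TO {account} "
        f"IDENTIFIED BY '{password}' WITH GRANT OPTION;",
        "FLUSH PRIVILEGES;",
    ]


if __name__ == "__main__":
    for statement in grant_statements("hadoop", "master", "hadoop"):
        print(statement)
```

Granting ALL PRIVILEGES ON *.* is broad; restricting the grant to the metastore database alone may be preferable, but that is a design choice outside what the original fix describes.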

Android Studio Gradle build error: Received status code 400 from server: Bad Request

The error message is as follows:

Could not GET 'https://dl.google.com/dl/android/maven2/com/android/tools/build/gradle/3.1.2/gradle-3.1.2.pom'. Received status code 400 from server: Bad Request

However, opening this URL directly does download the file, which shows that the network itself is not the problem. So what is?

Solution

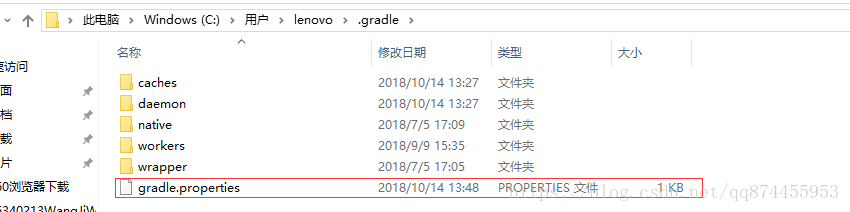

Open the path

C:\Users\<username>\.gradle

Find gradle.properties and edit it.

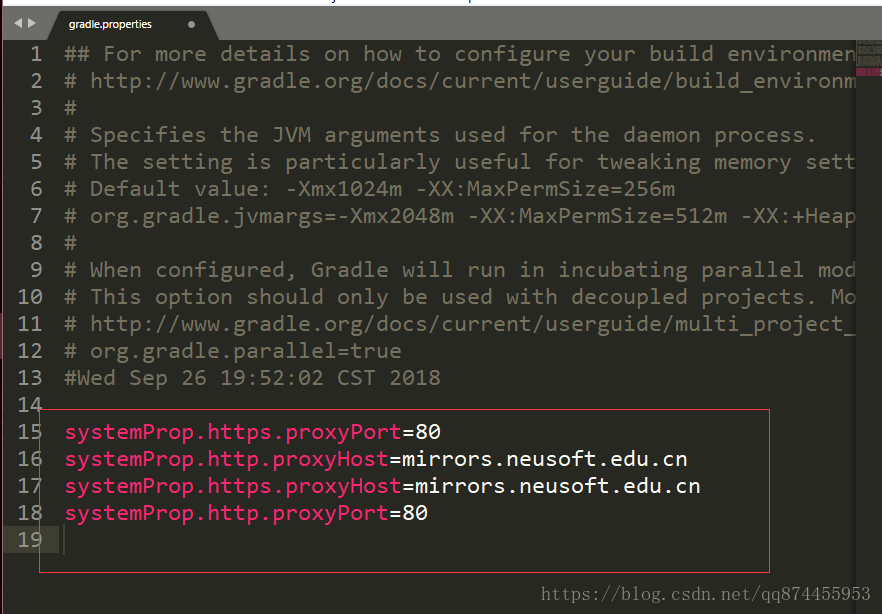

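Then delete the following lines:

systemProp.https.proxyPort=80

systemProp.http.proxyHost=mirrors.neusoft.edu.cn

systemProp.https.proxyHost=mirrors.neusoft.edu.cn

systemProp.http.proxyPort=80

These settings route Gradle's HTTP and HTTPS requests through a proxy at mirrors.neusoft.edu.cn, which would explain why a browser can fetch the file while the build gets a 400. Once they are deleted, the problem is solved.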
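The manual edit can also be scripted. The following is a minimal sketch, assuming the file lives at the default location under the user's home directory; the function names `strip_proxy_settings` and `clean_file` are hypothetical. It drops every systemProp.http.proxy* and systemProp.https.proxy* line and leaves everything else unchanged.

```python
import shutil
from pathlib import Path

# Prefixes of the proxy settings removed in the fix above.
PROXY_PREFIXES = ("systemProp.http.proxy", "systemProp.https.proxy")


def strip_proxy_settings(text):
    """Return gradle.properties content without the proxy lines."""
    kept = [
        line for line in text.splitlines()
        if not line.strip().startswith(PROXY_PREFIXES)
    ]
    return "\n".join(kept) + ("\n" if kept else "")


def clean_file(path):
    """Back up the file next to itself, then rewrite it without proxy lines."""
    path = Path(path)
    shutil.copy2(path, path.with_name(path.name + ".bak"))
    original = path.read_text(encoding="utf-8")
    path.write_text(strip_proxy_settings(original), encoding="utf-8")


if __name__ == "__main__":
    # Assumed default location: <home>/.gradle/gradle.properties
    target = Path.home() / ".gradle" / "gradle.properties"
    if target.exists():
        clean_file(target)
```

The backup copy makes it easy to restore the proxy settings if the machine really does need a proxy for other builds.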

Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

Showing 4096 bytes of 17167 total.

.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:542)

17/01/20 09:39:23 ERROR client.RemoteDriver: Shutting down remote driver due to error: java.lang.InterruptedException

java.lang.InterruptedException

at java.lang.Object.wait(Native Method)

at org.apache.spark.scheduler.TaskSchedulerImpl.waitBackendReady(TaskSchedulerImpl.scala:623)

at org.apache.spark.scheduler.TaskSchedulerImpl.postStartHook(TaskSchedulerImpl.scala:170)

at org.apache.spark.scheduler.cluster.YarnClusterScheduler.postStartHook(YarnClusterScheduler.scala:33)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:595)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:59)

at org.apache.hive.spark.client.RemoteDriver.<init>(RemoteDriver.java:169)

at org.apache.hive.spark.client.RemoteDriver.main(RemoteDriver.java:556)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:542)

17/01/20 09:39:23 INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with FAILED (diag message: Uncaught exception: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested virtual cores < 0, or requested virtual cores > max configured, requestedVirtualCores=4, maxVirtualCores=2

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:258)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndValidateRequest(SchedulerUtils.java:226)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndvalidateRequest(SchedulerUtils.java:233)

at org.apache.hadoop.yarn.server.resourcemanager.RMServerUtils.normalizeAndValidateRequests(RMServerUtils.java:97)

at org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService.allocate(ApplicationMasterService.java:504)

at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationMasterProtocolPBServiceImpl.allocate(ApplicationMasterProtocolPBServiceImpl.java:60)

at org.apache.hadoop.yarn.proto.ApplicationMasterProtocol$ApplicationMasterProtocolService$2.callBlockingMethod(ApplicationMasterProtocol.java:99)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

)

17/01/20 09:39:23 INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

17/01/20 09:39:24 INFO yarn.ApplicationMaster: Deleting staging directory .sparkStaging/application_1484288256809_0021

17/01/20 09:39:24 INFO storage.DiskBlockManager: Shutdown hook called

17/01/20 09:39:24 INFO util.ShutdownHookManager: Shutdown hook called

17/01/20 09:39:24 INFO util.ShutdownHookManager: Deleting directory /yarn/nm/usercache/anonymous/appcache/application_1484288256809_0021/spark-3f3ac5b0-5a46-48d7-929b-81b7820c9e81/userFiles-af94b1af-604f-4423-b1e4-0384e372c1f8

17/01/20 09:39:24 INFO util.ShutdownHookManager: Deleting directory /yarn/nm/usercache/anonymous/appcache/application_1484288256809_0021/spark-3f3ac5b0-5a46-48d7-929b-81b7820c9e81
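The decisive entry in the log above is the InvalidResourceRequestException: the application asked YARN for 4 virtual cores per container while the cluster allows at most 2 (requestedVirtualCores=4, maxVirtualCores=2). The sketch below pulls those two numbers out of a log so the mismatch is easy to spot; the function name `find_vcore_mismatch` is hypothetical. The usual remedy, which is an assumption and not stated in the original log, is either to lower spark.executor.cores for Hive on Spark or to raise yarn.scheduler.maximum-allocation-vcores on the cluster.

```python
import re

# Matches the fragment YARN prints in its diagnostic message.
_VCORES = re.compile(r"requestedVirtualCores=(\d+), maxVirtualCores=(\d+)")


def find_vcore_mismatch(log_text):
    """Return (requested, maximum, exceeds) or None if the log has no such entry."""
    match = _VCORES.search(log_text)
    if match is None:
        return None
    requested = int(match.group(1))
    maximum = int(match.group(2))
    return requested, maximum, requested > maximum


if __name__ == "__main__":
    line = (
        "Invalid resource request, requested virtual cores < 0, or requested "
        "virtual cores > max configured, requestedVirtualCores=4, maxVirtualCores=2"
    )
    print(find_vcore_mismatch(line))
```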

Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask

A problem I ran into with Hive, summarized here:

Query ID = grid_20151208110606_377592ad-5984-4f7b-9cfc-9cb2d6be4b6a

Total jobs = 1

java.io.IOException: Cannot run program "/home/grid/hadoop-2.6.1-64/bin/hadoop" (in directory "/root"): error=13, Permission denied

at java.lang.ProcessBuilder.start(ProcessBuilder.java:1041)

at java.lang.Runtime.exec(Runtime.java:617)

at java.lang.Runtime.exec(Runtime.java:450)

at org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask.executeInChildVM(MapredLocalTask.java:289)

at org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask.execute(MapredLocalTask.java:137)

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:160)

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:85)

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:1604)

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1364)

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1177)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1004)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:994)

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:201)

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:153)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:364)

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:712)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:631)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:570)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.io.IOException: error=13, Permission denied

at java.lang.UNIXProcess.forkAndExec(Native Method)

at java.lang.UNIXProcess.<init>(UNIXProcess.java:135)

at java.lang.ProcessImpl.start(ProcessImpl.java:130)

at java.lang.ProcessBuilder.start(ProcessBuilder.java:1022)

... 23 more

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask

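From the error above and the class named in the failure, MapredLocalTask, the problem is clearly related to a local task.

Since version 0.7, Hive has offered a local mode to speed up computation on small data sets: the data is pulled from HDFS to the HiveServer machine and processed there. The following parameters control this behavior: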

hive.exec.mode.local.auto.input.files.max=4

hive.exec.mode.local.auto.inputbytes.max=134217728
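To make the two thresholds concrete, here is a minimal sketch of the eligibility check as I understand it from the Hive documentation: a job can run locally when its total input size and input file count stay within these limits and it needs at most one reducer. The function name `qualifies_for_local_mode` is hypothetical, the exact handling of values equal to a limit is an assumption, and automatic local mode additionally has to be switched on with hive.exec.mode.local.auto=true.

```python
# Values taken from the two settings listed above.
MAX_INPUT_FILES = 4
MAX_INPUT_BYTES = 134217728  # 128 MB


def qualifies_for_local_mode(total_input_bytes, input_file_count, reducers,
                             max_bytes=MAX_INPUT_BYTES,
                             max_files=MAX_INPUT_FILES):
    """Approximate Hive's automatic local-mode decision for one job."""
    if total_input_bytes > max_bytes:
        return False  # too much data to pull to the local machine
    if input_file_count > max_files:
        return False  # too many input files
    if reducers > 1:
        return False  # local mode handles at most one reducer
    return True


if __name__ == "__main__":
    # A small map-only job like the join later in this section.
    print(qualifies_for_local_mode(16591, 2, 0))
```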

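Listing / shows /root as drwxr-x---, so a non-root user such as grid cannot enter the directory named in the error:

total 162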

drwxr-xr-x 2 root root 4096 09-30 04:07 bin

drwxr-xr-x 4 root root 1024 09-29 11:42 boot

drwxr-xr-x 3 root root 4096 10-16 10:38 data0

drwxr-xr-x 11 root root 4000 11-19 09:42 dev

drwxr-xr-x 105 root root 12288 12-05 04:03 etc

drwxr-xr-x 5 root root 4096 12-03 18:15 home

drwxr-xr-x 11 root root 4096 09-30 04:07 lib

drwxr-xr-x 8 root root 12288 09-30 04:07 lib64

drwx------ 2 root root 16384 2012-09-17 lost+found

drwxr-xr-x 2 root root 4096 11-19 09:39 media

drwxr-xr-x 2 root root 0 11-19 09:39 misc

drwxr-xr-x 2 root root 4096 2011-05-11 mnt

drwxr-xr-x 2 root root 0 11-19 09:39 net

drwxr-xr-x 3 root root 4096 12-03 18:16 opt

dr-xr-xr-x 143 root root 0 11-19 09:37 proc

drwxr-x--- 20 root root 4096 12-08 14:18 root

drwxr-xr-x 2 root root 12288 09-30 04:07 sbin

drwxr-xr-x 4 root root 0 11-19 09:37 selinux

drwxr-xr-x 2 root root 4096 2011-05-11 srv

drwxr-xr-x 11 root root 0 11-19 09:37 sys

drwxrwxrwt 15 root root 4096 12-08 14:19 tmp

drwxr-xr-x 22 root root 4096 11-24 14:16 usr

drwxr-xr-x 24 root root 4096 09-29 11:30 var

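After the permissions on /root were changed with sudo, the listing shows drwxr-xr-x for root, and the query below then runs successfully:

[sudo] password for grid:

[grid@h1 /]$ ls -l

total 162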

drwxr-xr-x 2 root root 4096 09-30 04:07 bin

drwxr-xr-x 4 root root 1024 09-29 11:42 boot

drwxr-xr-x 3 root root 4096 10-16 10:38 data0

drwxr-xr-x 11 root root 4000 11-19 09:42 dev

drwxr-xr-x 105 root root 12288 12-05 04:03 etc

drwxr-xr-x 5 root root 4096 12-03 18:15 home

drwxr-xr-x 11 root root 4096 09-30 04:07 lib

drwxr-xr-x 8 root root 12288 09-30 04:07 lib64

drwx------ 2 root root 16384 2012-09-17 lost+found

drwxr-xr-x 2 root root 4096 11-19 09:39 media

drwxr-xr-x 2 root root 0 11-19 09:39 misc

drwxr-xr-x 2 root root 4096 2011-05-11 mnt

drwxr-xr-x 2 root root 0 11-19 09:39 net

drwxr-xr-x 3 root root 4096 12-03 18:16 opt

dr-xr-xr-x 143 root root 0 11-19 09:37 proc

drwxr-xr-x 20 root root 4096 12-08 14:18 root

drwxr-xr-x 2 root root 12288 09-30 04:07 sbin

drwxr-xr-x 4 root root 0 11-19 09:37 selinux

drwxr-xr-x 2 root root 4096 2011-05-11 srv

drwxr-xr-x 11 root root 0 11-19 09:37 sys

drwxrwxrwt 15 root root 4096 12-08 14:19 tmp

drwxr-xr-x 22 root root 4096 11-24 14:16 usr

drwxr-xr-x 24 root root 4096 09-29 11:30 var

[grid@h1 /]$ hive

15/12/08 14:45:32 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/grid/hadoop-2.6.1-64/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/grid/apache-hive-1.0.1-bin/lib/hive-jdbc-1.0.1-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

hive> select a.UUID, a.TASK_NAME, a.START_TIME, b.ORG_NAME,b.ORG_ID FROM NISMP_E_YEAR_WORK_PLAN_ETL a join NISMP_R_ORG_YEAR_WORK_PLAN_ETL b on (a.UUID = b.TASK_UUID);

Query ID = grid_20151208144545_cf044720-85e5-4c04-9a84-e75d465041a0

Total jobs = 1

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/grid/hadoop-2.6.1-64/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/grid/apache-hive-1.0.1-bin/lib/hive-jdbc-1.0.1-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

15/12/08 14:46:00 WARN conf.HiveConf: DEPRECATED: Configuration property hive.metastore.local no longer has any effect. Make sure to provide a valid value for hive.metastore.uris if you are connecting to a remote metastore.

15/12/08 14:46:00 WARN conf.HiveConf: HiveConf of name hive.metastore.local does not exist

Execution log at: /tmp/grid/grid_20151208144545_cf044720-85e5-4c04-9a84-e75d465041a0.log

2015-12-08 02:46:00 Starting to launch local task to process map join; maximum memory = 477102080

2015-12-08 02:46:02 Dump the side-table for tag: 0 with group count: 11 into file: file:/tmp/hive/local/e4a94925-1b3e-400a-9d0b-5a75cb757c84/hive_2015-12-08_14-45-53_214_6791763111790754109-1/-local-10003/HashTable-Stage-3/MapJoin-mapfile00--.hashtable

2015-12-08 02:46:02 Uploaded 1 File to: file:/tmp/hive/local/e4a94925-1b3e-400a-9d0b-5a75cb757c84/hive_2015-12-08_14-45-53_214_6791763111790754109-1/-local-10003/HashTable-Stage-3/MapJoin-mapfile00--.hashtable (1050 bytes)

2015-12-08 02:46:02 End of local task; Time Taken: 1.641 sec.

Execution completed successfully

MapredLocal task succeeded

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1447925899672_0008, Tracking URL = http://h1:8088/proxy/application_1447925899672_0008/

Kill Command = /home/grid/hadoop-2.6.1-64/bin/hadoop job -kill job_1447925899672_0008

Hadoop job information for Stage-3: number of mappers: 2; number of reducers: 0

2015-12-08 14:46:12,591 Stage-3 map = 0%, reduce = 0%

2015-12-08 14:46:23,123 Stage-3 map = 50%, reduce = 0%, Cumulative CPU 1.73 sec

2015-12-08 14:46:24,174 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 3.72 sec

MapReduce Total cumulative CPU time: 3 seconds 720 msec

Ended Job = job_1447925899672_0008

MapReduce Jobs Launched:

Stage-Stage-3: Map: 2 Cumulative CPU: 3.72 sec HDFS Read: 16591 HDFS Write: 8819 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 720 msec

OK

9368588218954e30b49d125a7c8602cf 测试 2015-11-12 测试部 ceshi

20aa5494ee9248f6b6a6b282729b56d9 大家好 2015-11-13 南通 320600

c8a269c18e6c49229e0a8c10473a0657 你好 2015-11-13 南通 320600

c8a269c18e6c49229e0a8c10473a0657 你好 2015-11-13 扬州 321000

c8a269c18e6c49229e0a8c10473a0657 你好 2015-11-13 淮安 320800
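Comparing the two ls -l listings above, the only entry that differs is root: drwxr-x--- before and drwxr-xr-x after. Since the failing command was launched in directory "/root", a user who cannot enter that directory gets error=13. The sketch below reads an ls -l mode string and reports whether users outside the owner and group may enter the directory; the function name `others_can_enter` is hypothetical, and it assumes the grid user is neither the owner nor a member of the root group.

```python
def others_can_enter(mode_string):
    """True if 'other' users have execute (search) permission on a directory.

    mode_string is the first column of `ls -l`, for example "drwxr-x---".
    """
    if len(mode_string) < 10 or mode_string[0] != "d":
        raise ValueError(f"not a directory mode string: {mode_string!r}")
    # Position 9 holds the execute bit for 'other'; a lowercase 't' means
    # sticky bit plus execute, an uppercase 'T' means sticky without execute.
    return mode_string[9] in ("x", "t")


if __name__ == "__main__":
    for mode in ("drwxr-x---", "drwxr-xr-x"):
        print(mode, others_can_enter(mode))
```

Starting hive from a directory the user can already enter, such as the user's own home directory, would avoid the problem without loosening the permissions on /root.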

Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask
