在本文中,我们将详细介绍mysqlreplication之1594错误的各个方面,并为您提供关于mysql1405错误的相关解答,同时,我们也将为您带来关于MySQL5.6GTIDReplicatio

在本文中,我们将详细介绍mysql replication 之 1594 错误的各个方面,并为您提供关于mysql 1405错误的相关解答,同时,我们也将为您带来关于MySQL 5.6 GTID Replication、MySQL 5.6 Replication、MySQL 5.7 基于组复制(MySQL Group Replication) - 运维小结、MySQL 5.7: 使用组复制(MySQL Group Replication)的有用知识。

本文目录一览:- mysql replication 之 1594 错误(mysql 1405错误)

- MySQL 5.6 GTID Replication

- MySQL 5.6 Replication

- MySQL 5.7 基于组复制(MySQL Group Replication) - 运维小结

- MySQL 5.7: 使用组复制(MySQL Group Replication)

mysql replication 之 1594 错误(mysql 1405错误)

Step 1: 本周末因服务商初始化了 mysql 从库服务器,导致 mysql 主从不同

说明:主从不同步信息如下

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 104.237.146.129

Master_User: rpl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-master.000066

Read_Master_Log_Pos: 924477125

Relay_Log_File: mysqld-relay-bin.000183

Relay_Log_Pos: 805630111

Relay_Master_Log_File: mysql-master.000066

Slave_IO_Running: Yes

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 1594

Last_Error: Relay log read failure: Could not parse relay log event entry. The possible reasons are: the master''s binary log is corrupted (you can check this by running ''mysqlbinlog'' on the binary log), the slave''s relay log is corrupted (you can check this by running ''mysqlbinlog'' on the relay log), a network problem, or a bug in the master''s or slave''s MySQL code. If you want to check the master''s binary log or slave''s relay log, you will be able to know their names by issuing ''SHOW SLAVE STATUS'' on this slave.

Skip_Counter: 0

Exec_Master_Log_Pos: 805629945

Relay_Log_Space: 924482592

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: NULL

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 1594

Last_SQL_Error: Relay log read failure: Could not parse relay log event entry. The possible reasons are: the master''s binary log is corrupted (you can check this by running ''mysqlbinlog'' on the binary log), the slave''s relay log is corrupted (you can check this by running ''mysqlbinlog'' on the relay log), a network problem, or a bug in the master''s or slave''s MySQL code. If you want to check the master''s binary log or slave''s relay log, you will be able to know their names by issuing ''SHOW SLAVE STATUS'' on this slave.

Replicate_Ignore_Server_Ids:

Master_Server_Id: 129

Master_UUID: 47451c14-87e6-11e8-93b3-f23c91cd2190

Master_Info_File: /data/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State:

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp: 190126 13:57:17

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

1 row in set (0.00 sec)

Step 2. 通过上面的信息,我们可以知道

1.Master_Log_File: mysql-master.000066 当前的slave已经读取了master的binlog文件为:mysql-master.000066

2.Read_Master_Log_Pos: 924477125 当前的slave读取的master binlog---mysql-master.000066的位置是924477125

3.Slave_SQL_Running: No 当前slave的sql线程停止,表示,master的变化,可以读取到slave,但是slave不能应用这些变换

4.Last_Errno: 1594 当前slave发生了1594错误

5.Last_Error 1594错误的具体信息在laster_error中显示

6.Relay_Master_Log_File: mysql-master.000066 当前slave应用的最新的master的binlog是:mysql-master.000066,这就意味着,如果我们想重新启用slave,同步的起点应该是从mysql-master.000066这个文件开始

7.Exec_Master_Log_Pos: 236752486 当前slave应用的最新的binlog的位置是:805629945,这就意味着,同步的起点文件的位置是:805629945

Step 3. 解决办法

1. 重新创建 slave。

使用 mysqldump 命令,重新备份数据库。然后在 slave 中导入,然后使用 change master to 命令重建 slave

注:在备份时,加入参数 --single-transaction --master-data=2,减少对线上业务的影响,把当前备份的 binglog 的信息记录到备份中。2. 直接使用 change master to 命令,根据 Relay_Master_Log_File、Exec_Master_Log_Pos 的值,重建 slave.

#先stop slave

mysql> stop slave;

Query OK, 0 rows affected (0.01 sec)

#重建slave

mysql> change master to master_log_file=''mysql-master.000066'',master_log_pos=805629945;

Query OK, 0 rows affected (0.05 sec)

#开启slave

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

#查看同步情况,不能同步

show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 104.237.146.129

Master_User: rpl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-master.000066

Read_Master_Log_Pos: 929646723

Relay_Log_File: mysqld-relay-bin.000002

Relay_Log_Pos: 286

Relay_Master_Log_File: mysql-master.000066

Slave_IO_Running: Yes

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 1062

Last_Error: Error ''Duplicate entry ''25-112-MediaShakers-US-East-2019-01-26 12:23:46-086-6a-051ef24c0'' for key ''PRIMARY'''' on query. Default database: ''gadmobe_dw''. Query: ''INSERT INTO open_rtb_win(exchange_id, dsp_id, endpoint_id, request_id, advertiser_spend, gadmobe_cost, bid_price, bid_floor,advertiser_price,campaign_id,response_id,creative_id,country) VALUES

(

25,112,''MediaShakers-US-East'',''086-6a-051ef24c017f4866'',0.46988000554858445,0.26188,0.2680000055485845,0.1,0.47600001109716894,''1167_21'',''Wi89d4Iu7-w_0'',''1167_29'',''USA''

)''

Skip_Counter: 0

Exec_Master_Log_Pos: 805629945

Relay_Log_Space: 124017238

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: NULL

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 1062

Last_SQL_Error: Error ''Duplicate entry ''25-112-MediaShakers-US-East-2019-01-26 12:23:46-086-6a-051ef24c0'' for key ''PRIMARY'''' on query. Default database: ''gadmobe_dw''. Query: ''INSERT INTO open_rtb_win(exchange_id, dsp_id, endpoint_id, request_id, advertiser_spend, gadmobe_cost, bid_price, bid_floor,advertiser_price,campaign_id,response_id,creative_id,country) VALUES

(

25,112,''MediaShakers-US-East'',''086-6a-051ef24c017f4866'',0.46988000554858445,0.26188,0.2680000055485845,0.1,0.47600001109716894,''1167_21'',''Wi89d4Iu7-w_0'',''1167_29'',''USA''

)''

Replicate_Ignore_Server_Ids:

Master_Server_Id: 129

Master_UUID: 47451c14-87e6-11e8-93b3-f23c91cd2190

Master_Info_File: /data/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State:

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp: 190127 11:02:31

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

1 row in set (0.00 sec)

#尝试跳过这个错误

mysql> stop slave;

Query OK, 0 rows affected (0.01 sec)

mysql> set GLOBAL SQL_SLAVE_SKIP_COUNTER=1;

Query OK, 0 rows affected (0.00 sec)

mysql> start slave;

Query OK, 0 rows affected (0.00 sec)

#仍然不能同步

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 104.237.146.129

Master_User: rpl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-master.000066

Read_Master_Log_Pos: 932450072

Relay_Log_File: mysqld-relay-bin.000002

Relay_Log_Pos: 901

Relay_Master_Log_File: mysql-master.000066

Slave_IO_Running: Yes

Slave_SQL_Running: No

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 1062

Last_Error: Error ''Duplicate entry ''19-112-MediaShakers-US-East-2019-01-26 12:23:48-246-57-1962c2525'' for key ''PRIMARY'''' on query. Default database: ''gadmobe_dw''. Query: ''INSERT INTO open_rtb_win(exchange_id, dsp_id, endpoint_id, request_id, advertiser_spend, gadmobe_cost, bid_price, bid_floor,advertiser_price,campaign_id,response_id,creative_id,country) VALUES

(

19,112,''MediaShakers-US-East'',''246-57-1962c2525479a11c'',0.465220031573686,0.26922,0.280000031573686,0.14,0.47600006314737203,''1167_21'',''pfV2DBMCttI_0'',''1167_29'',''USA''

)''

Skip_Counter: 0

Exec_Master_Log_Pos: 805630560

Relay_Log_Space: 126820927

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: NULL

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 1062

Last_SQL_Error: Error ''Duplicate entry ''19-112-MediaShakers-US-East-2019-01-26 12:23:48-246-57-1962c2525'' for key ''PRIMARY'''' on query. Default database: ''gadmobe_dw''. Query: ''INSERT INTO open_rtb_win(exchange_id, dsp_id, endpoint_id, request_id, advertiser_spend, gadmobe_cost, bid_price, bid_floor,advertiser_price,campaign_id,response_id,creative_id,country) VALUES

(

19,112,''MediaShakers-US-East'',''246-57-1962c2525479a11c'',0.465220031573686,0.26922,0.280000031573686,0.14,0.47600006314737203,''1167_21'',''pfV2DBMCttI_0'',''1167_29'',''USA''

)''

Replicate_Ignore_Server_Ids:

Master_Server_Id: 129

Master_UUID: 47451c14-87e6-11e8-93b3-f23c91cd2190

Master_Info_File: /data/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State:

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp: 190127 11:31:29

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

1 row in set (0.00 sec)

#在配置文件里配置跳过错误

注意:如果不重要的数据可跳过.

$ vim /etc/my.cnf

加入:

slave_skip_errors = 1062

#重启mysql

$ /etc/init.d/mysqld restart

#查看同步情况

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 104.237.146.129

Master_User: rpl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-master.000066

Read_Master_Log_Pos: 933161094

Relay_Log_File: mysqld-relay-bin.000002

Relay_Log_Pos: 28953833

Relay_Master_Log_File: mysql-master.000066

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 834583492

Relay_Log_Space: 127533112

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 60002

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 129

Master_UUID: 47451c14-87e6-11e8-93b3-f23c91cd2190

Master_Info_File: /data/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: update

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

1 row in set (0.00 sec)

MySQL 5.6 GTID Replication

一. mysql 5.6引入了gtid的概念,那么gtid是何方神圣?其实也不复杂,就是一个全局事务标示符。使用gtid时,每次事务提交都会在binlog里生成1个唯一的标示符,它由uuid和事务id组成。首次提交的事务id为1,第二次为2,第三次为3,以此例推。uuid是服务器的身份id,在第一次启动mysql时,会自动生成一个server_uuid, 并且默认写入到数据目录下的auto.cnf文件里。我们一般无需修改,官方也不建议修改。更为详细的可以参考mariadb官方文档

https://mariadb.com/kb/en/mariadb/mariadb-documentation/replication-cluster-multi-master/replication/parallel-replication/

[root@mysql-server-01 data]# pwd/root/sandboxes/msb_5_6_19/data[root@mysql-server-01 data]# cat auto.cnf [auto]server-uuid=9b0147c3-eed0-11e3-9821-000c29e3621e[root@mysql-server-01 data]#

那么基于GTID的Replication有啥好处?好处主要有以下2点:

(1)在传统的复制里面,当发生故障,需要主从切换,需要找到binlog和pos点,然后change master to指向新的master,相对来说比较麻烦,也容易出错。在MySQL 5.6里面,不用再找binlog和pos点,我们只需要知道master的ip,端口,以及账号密码就行,因为复制是自动的,MySQL会通过内部机制GTID自动找点同步。

(2)多线程复制(基于库)。在MySQL 5.6以前的版本,slave的复制是单线程的。一个事件一个事件的读取应用。而master是并发写入的,所以延时是避免不了的。唯一有效的方法是把多个库放在多台slave,这样又有点浪费服务器。在MySQL 5.6里面,我们可以把多个表放在多个库,这样就可以使用多线程复制,当只有1个库,多线程复制是没有用的。

GTID相关特性默认是关闭的(难道官方还觉得不够成熟),如下:

mysql [localhost] {msandbox} ((none)) > show variables like ''%gtid%'';+--------------------------+-----------+| Variable_name| Value |+--------------------------+-----------+| enforce_gtid_consistency | OFF || gtid_executed| || gtid_mode| OFF || gtid_next| AUTOMATIC || gtid_owned | || gtid_purged| |+--------------------------+-----------+6 rows in set (0.01 sec)mysql [localhost] {msandbox} ((none)) >binlog里面也不会有GTID相关的记录,和普通复制时是一样的,如下:

[root@mysql-server-01 data]# mysqlbinlog --no-defaults -v --base64-output=DECODE-ROWS mysql_sandbox5619-bin.000006 /*!50530 SET @@SESSION.PSEUDO_SLAVE_MODE=1*/;/*!40019 SET @@session.max_insert_delayed_threads=0*/;/*!50003 SET @OLD_COMPLETION_TYPE=@@COMPLETION_TYPE,COMPLETION_TYPE=0*/;DELIMITER /*!*/;# at 4#140706 20:53:36 server id 1end_log_pos 120 Start: binlog v 4, server v 5.6.19-log created 140706 20:53:36 at startup# Warning: this binlog is either in use or was not closed properly.ROLLBACK/*!*/;# at 120#140706 21:32:15 server id 1end_log_pos 207 Query thread_id=1 exec_time=0 error_code=0SET TIMESTAMP=1404653535/*!*/;SET @@session.pseudo_thread_id=1/*!*/;SET @@session.foreign_key_checks=1, @@session.sql_auto_is_null=0, @@session.unique_checks=1, @@session.autocommit=1/*!*/;SET @@session.sql_mode=1073741824/*!*/;SET @@session.auto_increment_increment=1, @@session.auto_increment_offset=1/*!*/;/*!/C utf8 *//*!*/;SET @@session.character_set_client=33,@@session.collation_connection=33,@@session.collation_server=8/*!*/;SET @@session.lc_time_names=0/*!*/;SET @@session.collation_database=DEFAULT/*!*/;drop database yayunz/*!*/;DELIMITER ;# End of log fileROLLBACK /* added by mysqlbinlog */;/*!50003 SET COMPLETION_TYPE=@OLD_COMPLETION_TYPE*/;/*!50530 SET @@SESSION.PSEUDO_SLAVE_MODE=0*/;[root@mysql-server-01 data]#

当开启GTID特性以后,binlog记录格式是这样的(具体开启后面介绍)

注意:(当启用GTID以后,binlog格式变化很大,如果继续采用低版本的mysqlbinlog命令查看,将会得到如下错误)。

[root@mysql-server-01 data]# mysqlbinlog --no-defaults -v --base64-output=DECODE-ROWS mysql-bin.000004/*!50530 SET @@SESSION.PSEUDO_SLAVE_MODE=1*/;/*!40019 SET @@session.max_insert_delayed_threads=0*/;/*!50003 SET @OLD_COMPLETION_TYPE=@@COMPLETION_TYPE,COMPLETION_TYPE=0*/;DELIMITER /*!*/;# at 4#140706 22:08:25 server id 1end_log_pos 120 Start: binlog v 4, server v 5.6.19-log created 140706 22:08:25ERROR: Error in Log_event::read_log_event(): ''Sanity check failed'', data_len: 71, event_type: 35ERROR: Could not read entry at offset 120: Error in log format or read error.DELIMITER ;# End of log fileROLLBACK /* added by mysqlbinlog */;/*!50003 SET COMPLETION_TYPE=@OLD_COMPLETION_TYPE*/;/*!50530 SET @@SESSION.PSEUDO_SLAVE_MODE=0*/;[root@mysql-server-01 data]#

下面是使用MySQL 5.6的mysqlbinlog命令查看开启了GTID日志的记录。

[root@mysql-server-01 data]# /data/sandbox_mysql/5.6.19/bin/mysqlbinlog --no-defaults -v --base64-output=DECODE-ROWS mysql-bin.000002 | tail -n 30DELIMITER /*!*/;# at 4#140706 21:58:35 server id 1end_log_pos 120 CRC32 0xcb49c4feStart: binlog v 4, server v 5.6.19-log created 140706 21:58:35# at 120#140706 21:58:35 server id 1end_log_pos 191 CRC32 0x0e40da64Previous-GTIDs# 9b0147c3-eed0-11e3-9821-000c29e3621e:1# at 191#140706 22:00:37 server id 1end_log_pos 239 CRC32 0x52fc16edGTID [commit=yes]SET @@SESSION.GTID_NEXT= ''9b0147c3-eed0-11e3-9821-000c29e3621e:2''/*!*/;# at 239#140706 22:00:37 server id 1end_log_pos 330 CRC32 0xf652f593Query thread_id=3 exec_time=0 error_code=0SET TIMESTAMP=1404655237/*!*/;SET @@session.pseudo_thread_id=3/*!*/;SET @@session.foreign_key_checks=1, @@session.sql_auto_is_null=0, @@session.unique_checks=1, @@session.autocommit=1/*!*/;SET @@session.sql_mode=1073741824/*!*/;SET @@session.auto_increment_increment=1, @@session.auto_increment_offset=1/*!*/;/*!/C utf8 *//*!*/;SET @@session.character_set_client=33,@@session.collation_connection=33,@@session.collation_server=8/*!*/;SET @@session.lc_time_names=0/*!*/;SET @@session.collation_database=DEFAULT/*!*/;create database dyy/*!*/;SET @@SESSION.GTID_NEXT= ''AUTOMATIC'' /* added by mysqlbinlog */ /*!*/;# at 330#140706 22:00:42 server id 1end_log_pos 377 CRC32 0x68ff3fc0Rotate to mysql-bin.000003pos: 4DELIMITER ;# End of log fileROLLBACK /* added by mysqlbinlog */;/*!50003 SET COMPLETION_TYPE=@OLD_COMPLETION_TYPE*/;/*!50530 SET @@SESSION.PSEUDO_SLAVE_MODE=0*/;[root@mysql-server-01 data]#

我们从上面输出可以清楚的看见事务id如下(根据上面提到的,这个是第二个事务):

SET @@SESSION.GTID_NEXT= ''9b0147c3-eed0-11e3-9821-000c29e3621e:2''/*!*/;

GTID的简单工作流程如下:

(1)在master上产生一个事务并且提交,并且写入binlog

(2)master上的binlog发送到slave,slave接收完毕并且写入relay log,slave读取到这个GTID,并设置gtid_next的值,例如:

SET @@SESSION.GTID_NEXT= ''9b0147c3-eed0-11e3-9821-000c29e3621e:2

然后告诉slave接下来的事务必须使用GTID,并写入到它自己的binlog里。 (3)slave检查并确认这个GTID没有被使用,如果没有被使用,那么开始执行这个事务并写入到它自己的binlog里。

(4)由于gtid_next的值不是空的,slave不会尝试去生成一个新的gtid,而是通过主从复制来获取GTID。

二. 基于GTID的Replication的配置如下(最少配置选项,master和slave一样,server-id则需要不一样了,下面的参数只是针对于GTID,对于复制过滤选项和普通复制一样,因为GTID模式的复制也可以转换为普通模式的复制)

binlog_format = rowgtid-mode = ONenforce-gtid-consistency = ONlog-bin=mysql-binlog-slave-updates

少了相关选项,启动则报错,这是其中的报错日志,如下:

2014-07-06 22:47:44 15228 [ERROR] --gtid-mode=ON or UPGRADE_STEP_1 or UPGRADE_STEP_2 requires --log-bin and --log-slave-updates

其中binlog_format = row不是必须的,但是推荐使用ROW格式,具体的参考我前面的文章提到的原因。

上面参数主从都配置以后,下面我们就来完成一个基于GTID的Replication(和普通复制有少量区别)

(1)在master上授权账户;

mysql [localhost] {root} ((none)) > GRANT REPLICATION SLAVE ON *.* TO ''repl''@''192.168.0.10'' IDENTIFIED BY ''123456'';Query OK, 0 rows affected (0.00 sec)mysql [localhost] {root} ((none)) > flush privileges;Query OK, 0 rows affected (0.00 sec)mysql [localhost] {root} ((none)) >(2)在master上备份要同步的库(这里的备份参数大家自行查阅文档,当然有几个参数或许用不到)

[root@mysql-server-01 msb_5_6_19]# mysqldump -uroot -pmsandbox -S /tmp/mysql_sandbox5619.sock -q -R --triggers --opt --single-transaction --flush-logs --master-data=2 dyy > /tmp/dyy.sql[root@mysql-server-01 msb_5_6_19]#

(3)将备份的数据在slave上导入:

[root@mysql-server-02 ~]# mysql -uroot -pmsandbox -S /tmp/mysql_sandbox5619.sock <p>(4)进行change master to操作</p><pre>mysql [localhost] {root} (dyy) > CHANGE MASTER TO MASTER_HOST=''192.168.0.100'',MASTER_PORT=5619,MASTER_USER=''repl'',MASTER_PASSWORD=''123456'',master_auto_position=1;Query OK, 0 rows affected, 2 warnings (0.03 sec)mysql [localhost] {root} (dyy) > show warnings;+-------+------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+| Level | Code | Message|+-------+------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+| Note| 1759 | Sending passwords in plain text without SSL/TLS is extremely insecure. || Note| 1760 | Storing MySQL user name or password information in the master info repository is not secure and is therefore not recommended. Please consider using the USER and PASSWORD connection options for START SLAVE; see the ''START SLAVE Syntax'' in the MySQL Manual for more information. |+-------+------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+2 rows in set (0.00 sec)mysql [localhost] {root} (dyy) >可以看见成功,但是有两个警告,原来在MySQL 5.6里面越来越严格了,说不推荐这种使用方法。

mysql [localhost] {root} (dyy) > start slave;Query OK, 0 rows affected (0.01 sec)mysql [localhost] {root} (dyy) > show slave status/G*************************** 1. row *************************** Slave_IO_State: Waiting for master to send eventMaster_Host: 192.168.0.100Master_User: replMaster_Port: 5619Connect_Retry: 60Master_Log_File: mysql-bin.000001Read_Master_Log_Pos: 151 Relay_Log_File: mysql_sandbox5619-relay-bin.000002Relay_Log_Pos: 361Relay_Master_Log_File: mysql-bin.000001 Slave_IO_Running: YesSlave_SQL_Running: YesReplicate_Do_DB: Replicate_Ignore_DB:Replicate_Do_Table:Replicate_Ignore_Table: Replicate_Wild_Do_Table: Replicate_Wild_Ignore_Table:Last_Errno: 0 Last_Error:Skip_Counter: 0Exec_Master_Log_Pos: 151Relay_Log_Space: 577Until_Condition: None Until_Log_File: Until_Log_Pos: 0 Master_SSL_Allowed: No Master_SSL_CA_File:Master_SSL_CA_Path: Master_SSL_Cert: Master_SSL_Cipher:Master_SSL_Key: Seconds_Behind_Master: 0Master_SSL_Verify_Server_Cert: NoLast_IO_Errno: 0Last_IO_Error:Last_SQL_Errno: 0 Last_SQL_Error: Replicate_Ignore_Server_Ids:Master_Server_Id: 1Master_UUID: 9b0147c3-eed0-11e3-9821-000c29e3621e Master_Info_File: /root/sandboxes/msb_5_6_19/data/master.infoSQL_Delay: 0SQL_Remaining_Delay: NULLSlave_SQL_Running_State: Slave has read all relay log; waiting for the slave I/O thread to update it Master_Retry_Count: 86400Master_Bind: Last_IO_Error_Timestamp:Last_SQL_Error_Timestamp:Master_SSL_Crl:Master_SSL_Crlpath:Retrieved_Gtid_Set: Executed_Gtid_Set: 9b0147c3-eed0-11e3-9821-000c29e3621e:1-13,d3c1aada-fad2-11e3-a66f-000c29ce3f18:1-85Auto_Position: 11 row in set (0.00 sec)mysql [localhost] {root} (dyy) >如果使用GTID模式的复制而change master to还使用普通模式的,则会报错。

三. GTID的局限性(或者说还不够完善的地方)

(1)目前GTID还不支持MyISAM表(GTID是基于事务的)

(2)对CREATE TABLE ..... SELECT语句不支持。

mysql [localhost] {root} (dyy) > create table dyy select * from t1;ERROR 1786 (HY000): CREATE TABLE ... SELECT is forbidden when @@GLOBAL.ENFORCE_GTID_CONSISTENCY = 1.mysql [localhost] {root} (dyy) >(3)必须开启enforce-gtid-consistency参数,否则启动MySQL报错,如下所示:

2014-07-06 23:44:27 17563 [ERROR] --gtid-mode=ON or UPGRADE_STEP_1 requires --enforce-gtid-consistency2014-07-06 23:44:27 17563 [ERROR] Aborting

注:在5.6.9的版本还不支持创建临时表,我测试的是5.6.19的版本,已经可以创建临时表了。

四. 多线程复制(基于库)

多线程复制由参数slave-paralles-workers控制,设定从服务器的SQL线程数;0表示关闭多线程复制功能;默认为0,最大可以设置为1024个线程。在从库的配置文件[mysqld]段落添加配置:

slave_parallel_workers = 6

重启MySQL以后使用show processlist看到如下所示:

mysql [localhost] {msandbox} ((none)) > show processlist;+----+-------------+-----------+------+---------+------+-----------------------------------------------------------------------------+------------------+| Id | User| Host| db | Command | Time | State | Info |+----+-------------+-----------+------+---------+------+-----------------------------------------------------------------------------+------------------+|1 | system user | | NULL | Connect |173 | Slave has read all relay log; waiting for the slave I/O thread to update it | NULL ||2 | system user | | NULL | Connect |173 | Waiting for master to send event| NULL ||3 | system user | | NULL | Connect |173 | Waiting for an event from Coordinator | NULL ||4 | system user | | NULL | Connect |173 | Waiting for an event from Coordinator | NULL ||5 | system user | | NULL | Connect |173 | Waiting for an event from Coordinator | NULL ||6 | system user | | NULL | Connect |173 | Waiting for an event from Coordinator | NULL ||7 | system user | | NULL | Connect |173 | Waiting for an event from Coordinator | NULL ||8 | system user | | NULL | Connect |173 | Waiting for an event from Coordinator | NULL || 10 | msandbox| localhost | NULL | Query |0 | init| show processlist |+----+-------------+-----------+------+---------+------+-----------------------------------------------------------------------------+------------------+9 rows in set (0.00 sec)mysql [localhost] {msandbox} ((none)) >此外用于保证relog信息以及master信息不丢失,需要设置相关参数来保证,可以参考我前面的文章。

总结:

我们尽可能的把一个库中的表按照业务逻辑拆分为多个库,这样在master上写操作时,slave就可以根据我们设置的线程数进行多线程复制,减少了传统复制的问题--延时。比如2个库我们就可以开启2个线程,3个库就可以开启3个线程,以此类推。还有目前GTID好像还不太成熟,官方的版本里面也没有默认开启,所以还需要进行大量的测试。

参考资料

http://dev.mysql.com/doc/refman/5.6/en/replication-options-gtids.html

http://dev.mysql.com/doc/refman/5.5/en/replication-howto.html

MySQL 5.6 Replication

打开

打开mysql主页,满篇介绍mysql5.6版本有多好,多牛。后来浏览了5.6的更新说明,说是强化了replication,还有人测试开启replication对性能影响不大,不像以前,影响性能明显。反而性能更好?那个叫mysql中国的网站测试说的。官网有说多线程啥的进行复制,好吧。我信了。

但是安装网上老的配置方法配置主从模式失败,服务器空间,服务起不来,说找不到pid什么文件,错误已经忘啦~~不好意思。

于是乎,在官方下载最新的安装文档...全英文...一口一口的啃。

终于在1个小时前配置好了,是双主互备模式。master==master.

整理下配置方法。

安装mysql5.6.9(源码下载那个网站没有提供最新的5.6.10版本,而我又不想装RPM包,你懂的)。安装在这里略过,只要看解压后里面的INSTALL文件安装提示来就可以了。

我把mysql安装到了/usr/local/mysql目录,装完之后,有个my.cnf在/usr/local/mysql目录下面。

这个就是配置文件了,打开一看,里面就有一行...

-----------------下面我们开始配置-------------

两台服务器:mysql-m1 192.168.0.140

mysql-m2 192.168.0.141

打开mysql-m1的my.cnf文件,添加如下代码:

binlog-format=ROW

log-slave-updates=true

gtid-mode=on # GTID only

enforce-gtid-consistency=true # GTID only

master-info-repository=TABLE

relay-log-info-repository=TABLE

sync-master-info=1

slave-parallel-workers=2

binlog-checksum=CRC32

master-verify-checksum=1

slave-sql-verify-checksum=1

binlog-rows-query-log_events=1

server-id=1

report-port=3306

port=3306

log-bin=binlog

report-host=192.168.0.140

肯定有人好奇,为啥要加这些代码?

好吧,我也不知道,官方就这么说的。(开玩笑了)。我把个个参数的意思原汁原味的写出来:

• binlog-format: row-based replication is selected in order to test all of the MySQL 5.6

optimisations

• log-slave-updates, gtid-mode, enforce-gtid-consistency, report-port and

report-host: used to enable Global Transaction IDs and meet the associated prerequisites

• master-info-repository and relay-log-info-repository: are turned on to enable

the crash-safe binlog/slave functionality (storing the information in transactional tables rather

than flat files)

• sync-master-info: set to 1 to ensure that no information is lost

• slave-parallel-workers: sets the number of parallel threads to be used for applying

received replication events when this server acts as a slave. A value of 0 would turn off the

multithreaded slave functionality; if the machine has a lot of cores and you are using many

databases within the server then you may want to increase this value in order to better exploit

multi-threaded replication

• binlog-checksum, master-verify-checksum and slave-sql-verify-checksum:

used to enable all of the replication checksum checks

• binlog-rows-query-log-events: enables informational log events (specifically, the

original SQL query) in the binary log when using row-based replication – this makes

troubleshooting simpler

• log-bin: The server cannot act as a replication master unless binary logging is enabled. If

you wish to enable a slave to assume the role of master at some point in the future (i.e. in the

event of a failover or switchover), you also need to configure binary logging. Binary logging

must also be enabled on the slave(s) when using Global Transaction IDs.

• server-id: The server_id variable must be unique amongst all servers in the replication

topology and is represented by a positive integer value from 1 to 2

32

好了,上面的参数都知道什么意思了吧。

接下来,我们同样设置第二台服务器:

binlog-format=ROW

log-slave-updates=true

gtid-mode=on # GTID only

enforce-gtid-consistency=true # GTID only

master-info-repository=TABLE

relay-log-info-repository=TABLE

sync-master-info=1

slave-parallel-workers=2

binlog-checksum=CRC32

master-verify-checksum=1

slave-sql-verify-checksum=1

binlog-rows-query-log_events=1

server-id=2

report-port=3306

port=3306

log-bin=binlog

report-host=192.168.0.141

注意,server-id=2,另外,report-host也改下。

这两个配置文件改好之后重启服务器。

重启完服务器之后,登录第二台服务器mysql-m2

登录mysql

mysql -u root -p

输入完用户名和密码之后:

> CHANGE MASTER TO MASTER_HOST=192.168.0.140, MASTER_USER=''repl_user'',

MASTER_PASSWORD=''billy'';

> START SLAVE;

这样主从模式就做好了主-----》从

-----------------------------------

我们在第一台服务器上设置可远程登录账户:

先登录mysql服务器:

>Grant all privileges on *.* to ''admin''@''%'' identified by ''123456'' with grant option;

红色字体分别为账户和密码。

同样的,第二台服务器也这么操作。

然后,我们在主服务器(mysql-m1)的test数据库下面建立一个表测试同步情况:

登录mysql服务器:mysql -u root -p

>use test;(装好后,mysql默认自带)。

>create table abc(a int,b int,c int);

创建好后插入数据。

>insert into abc values(1,2,3);

多执行几次

然后select * from abc;

查看数据插入进去了没有。(我后面有自己插入了几行)。

mysql> select * from acc;

+------+------+------+

| a | b | c |

+------+------+------+

| 1 | 2 | 3 |

| 1 | 2 | 3 |

| 1 | 2 | 3 |

| 1 | 2 | 3 |

| 1 | 2 | 3 |

| 1 | 2 | 3 |

| 2 | 2 | 2 |

| 2 | 2 | 2 |

| 2 | 2 | 2 |

+------+------+------+

登录mysql-m2,查看是否有数据同步过来。

同步过来了就是ok的了。

-------------------------------------

官方的文档只说了主从模式,我查了一下,要做双主模式,必须开启log-slave-updates=true这个选项。

我看了看两台服务器的配置文件都有这个。

然后呢,我自己试了一下。

登录主服务器---mysql-m1

登录mysql ----mysql -u root -p

输入密码

执行:

> CHANGE MASTER TO MASTER_HOST=192.168.0.141, MASTER_USER=''admin'',

MASTER_PASSWORD=''123456'';

> START SLAVE;

没想到,真的就可以,没报错。

>show slave status\G;

两台服务器都能查询出来信息。

===================总结=================

官方这个文档我是明白了。

它让每个slave都有当master的机会,如果一个master宕机了,

执行:

> CHANGE MASTER TO MASTER_HOST=192.168.0.*, MASTER_USER=''repl_user'',

MASTER_PASSWORD=''billy'';

> START SLAVE;

这个操作,只要换个IP地址,可以把任何一台从机变成主机,当主机启动之后,再执行:

> CHANGE MASTER TO MASTER_HOST=192.168.0.MASTER_IP, MASTER_USER=''repl_user'',

MASTER_PASSWORD=''billy'';

> START SLAVE;

这样主从切换来回自如。

不过,香港虚拟主机,我真的不知道类似于heartbeat的功能有木有~~~~我不像业务中断,香港虚拟主机,难道要在master上面做heartbeat?

本文出自 “勇攀高峰” 博客,谢绝转载!

MySQL 5.7 基于组复制(MySQL Group Replication) - 运维小结

之前介绍了Mysq主从同步的异步复制(默认模式)、半同步复制、基于GTID复制、基于组提交和并行复制 (解决同步延迟),下面简单说下Mysql基于组复制(MySQL Group Replication) 操作。

一、组复制 (MGR)介绍

MySQL Group Replication(简称MGR)是MySQL官方于2016年12月推出的一个全新的高可用与高扩展的解决方案。组复制是MySQL5.7版本出现的新特性,它提供了高可用、高扩展、高可靠的MySQL集群服务。MySQL组复制分单主模式和多主模式,mysql 的复制技术仅解决了数据同步的问题,如果 master 宕机,意味着数据库管理员需要介入,应用系统可能需要修改数据库连接地址或者重启才能实现。(这里也可以使用数据库中间件产品来避免应用系统数据库连接的问题,例如 mycat 和 atlas 等产品)。组复制在数据库层面上做到了,只要集群中大多数主机可用,则服务可用,也就是说3台服务器的集群,允许其中1台宕机。

1.1 组复制的两种模式

- 在单主模式下, 组复制具有自动选主功能,每次只有一个 server成员接受更新;

- 在多主模式下, 所有的 server 成员都可以同时接受更新;

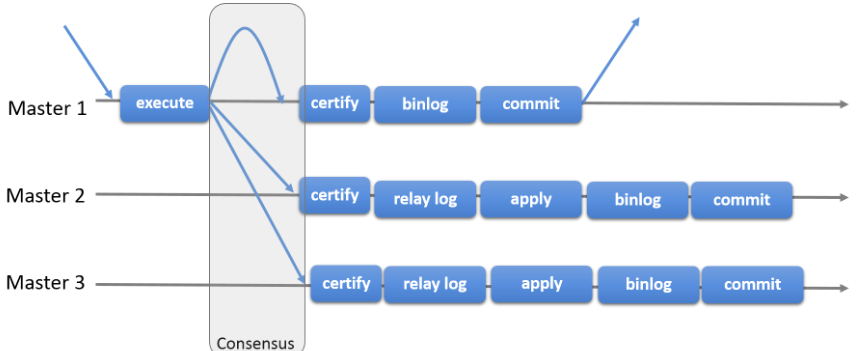

1.2 组复制原理

组复制是一种可用于实现容错系统的技术。 复制组是一个通过消息传递相互交互的server集群。通信层提供了原子消息(atomic message)和完全有序信息交互等保障机制,实现了基于复制协议的多主更新。复制组由多个 server成员构成,并且组中的每个server 成员可以独立地执行事务。但所有读写(RW)事务只有在冲突检测成功后才会提交。只读(RO)事务不需要在冲突检测,可以立即提交。句话说, 对于任何 RW 事务,提交操作并不是由始发 server 单向决定的,而是由组来决定是否提交。准确地说,在始发 server 上,当事务准备好提交时,该 server 会广播写入值(已改变的行)和对应的写入集(已更新的行的唯一标识符)。然后会为该事务建立一个全局的顺序。最终,这意味着所有 server 成员以相同的顺序接收同一组事务。因此, 所有 server 成员以相同的顺序应用相同的更改,以确保组内一致。

基于组的复制(Group-basedReplication)是一种被使用在容错系统中的技术。Replication-group(复制组)是由能够相互通信的多个服务器(节点)组成的。在通信层,Groupreplication实现了一系列的机制:比如原子消息(atomicmessage delivery)和全序化消息(totalorderingof messages)。这些原子化,抽象化的机制,为实现更先进的数据库复制方案提供了强有力的支持。MySQL Group Replication正是基于这些技术和概念,实现了一种多主全更新的复制协议。

简而言之,一个Replication-group就是一组节点,每个节点都可以独立执行事务,而读写事务则会在于group内的其他节点进行协调之后再commit。因此,当一个事务准备提交时,会自动在group内进行原子性的广播,告知其他节点变更了什么内容/执行了什么事务。

这种原子广播的方式,使得这个事务在每一个节点上都保持着同样顺序。这意味着每一个节点都以同样的顺序,接收到了同样的事务日志,所以每一个节点以同样的顺序重演了这些事务日志,最终整个group保持了完全一致的状态。然而,不同的节点上执行的事务之间有可能存在资源争用。这种现象容易出现在两个不同的并发事务上。

假设在不同的节点上有两个并发事务,更新了同一行数据,那么就会发生资源争用。面对这种情况,GroupReplication判定先提交的事务为有效事务,会在整个group里面重演,后提交的事务会直接中断,或者回滚,最后丢弃掉。因此,这也是一个无共享的复制方案,每一个节点都保存了完整的数据副本。看下图描述了具体的工作流程,能够简洁的和其他方案进行对比。这个复制方案,在某种程度上,和数据库状态机(DBSM)的Replication方法比较类似。

MySQL组复制协议工作流程:

需要注意:MySQL组复制是一种 share-nothing 复制方案,其中每个 server 成员都有自己的完整数据副本。

1.3 组复制特点

- 高一致性

基于原生复制及 paxos 协议的组复制技术,并以插件的方式提供,提供一致数据安全保证。确保组内数据最终一致性【重要】(通过分布式协议和分布式recovery机制保证);

- 高容错性

确保组内高可用。只要不是大多数节点坏掉就可以继续工作,有自动检测机制,当不同节点产生资源争用冲突时,不会出现错误,按照先到者优先原则进行处理,并且内置了自动化脑裂防护机制;

- 高扩展性

良好的扩展能力,可动态增删节点,组成员自动管理。节点的新增和移除都是自动的,新节点加入后,会自动从其他节点上同步状态,直到新节点和其他节点保持一致,如果某节点被移除了,其他节点自动更新组信息,自动维护新的组信息;

- 高灵活性

有单主模式和多主模式,单主模式下,会自动选主,所有更新操作都在主上进行;

多主模式下,所有 server 都可以同时处理更新操作。

- 多写,写冲突检测;

1.4 组复制故障检测

故障检测是提供关于哪些 server 可能已死的信息(猜测)的分布式服务。 某个 server 无响应时触发猜测,组中其余成员进行协调决定以排除给定成员。如果某个 server 与组的其余成员隔离,则它会怀疑所有其他 server 都失败了。由于无法与组达成协议(因为它无法确保仲裁成员数),其怀疑不会产生后果。当服务器以此方式与组隔离时,它无法执行任何本地事务。 在线 server 列表通常称为视图,新成员server的加入离开,无论是自愿还是被迫的离开,该组都会动态地重新规划其配置,并触发视图更新。

1.5 组复制的限制

- 存储引擎必须为Innodb,即仅支持InnoDB表,并且每张表一定要有一个主键,用于做write set的冲突检测;

- 每个表必须提供主键;

- 只支持ipv4,网络需求较高;

- 必须打开GTID特性,二进制日志格式必须设置为ROW,用于选主与write set;

- COMMIT可能会导致失败,类似于快照事务隔离级别的失败场景;

- 目前一个MGR集群组最多支持9个节点;

- 不支持外键于save point特性,无法做全局间的约束检测与部分部分回滚;

- 二进制日志binlog不支持Replication event checksums;

- 多主模式(也就是多写模式) 不支持SERIALIZABLE事务隔离级别;

- 多主模式不能完全支持级联外键约束;

- 多主模式不支持在不同节点上对同一个数据库对象并发执行DDL(在不同节点上对同一行并发进行RW事务,后发起的事务会失败);

二、组复制技术实现

2.1 组复制与传统复制的区别和大幅改进

传统复制

主-从复制: 有一个主和不等数量的从。主节点执行的事务会异步发送给从节点,在从节点重新执行。(异步和半同步;半同步相对异步Master会确认Slave是否接到数据,更加安全)

并行复制: 复制->广播->正式复制

组复制相比传统复制的优势在于:

- 弹性复制(高扩展性): server动态添加移除;

- 高可用分片(高扩展性): 分片实现写扩展,每个分片是一个复制组;

- 替代主从复制(高扩展性): 整组写入,避免单点争用;

- 自动化系统: 自动化部署Mysql复制到已有复制协议的自动化系统;

- 故障检测与容错: 自动检测,若服务faild,组内成员大多数达成认为该服务已不正常,则自动隔离;

在MySQL组复制环境中,组内成员会构成一个视图,组内成员主动加入或离开(主动或被动),都会更新组配置,更新视图。成员自愿离开,先更新组配置,然后采用大多数成员(不包含主动脱离的成员)意见是否确认该成员离开更新视图。如果是故障要排除,则需大多数服务确认(包括故障成员意见),然后才会更新组配置和视图。

特别注意:组复制最大允许即时故障数:f=(n-1)/2,多数正常则正常

2.2 组复制优点小结

1) 在master-slave之间实现了强一致性;

对于只读事务,组间实例无需进行通讯,就可以处理事务;对于读写(RW)事务,组内所有节点必须经过通讯,共同决定事务提交与否。

2) 事务冲突处理

在高并发的多写模式下,节点间事务的提交可能会产生冲突,比如,两个不同的事务在两个节点上操作了同一行数据,这个时候就会产生冲突。首先,Group Replication(GR)能够识别到这个冲突,然后对此的处理是,依赖事务提交的时间先后顺序,先发起提交的节点能够正确提交,而后面的提交,会失败

3) 故障检测

MGR自带故障检测机制,可以识别组内成员是否挂掉(组内节点心跳检测)。当一个节点失效,将由其他节点决定是否将这个失效的节点从group里面剔除。

4) 组成员管理

MGR需要维护组内节点的状态(ONLINE,RECOVERING,OFFLINE),对于失效的节点,由其他节点决定是否剔除。对于新加入的节点,需要维护它的视图与其他节点的视图保持一致。

5) 容错能力

MGR基于分布式一致性算法实现,一个组允许部分节点挂掉,只要保证大多数节点仍然存活并且之间的通讯是没有问题的,那么这个组对外仍然能够提供服务!假设一个MGR由2n+1个节点,那么允许n个节点失效,这个MGR仍然能够对外提供服务。比如有3个节点组成的一个GR,可允许1个节点失效,这个GR仍然能够提供服务。

6) 部署方便简单。

7) 最后结论

对比之前的5.6的双主模式,5.7的组复制模式不管从部署还是管理都要方便很多。

2.3 组复制模式介绍

MGR提供了single-primary和multi-primary两种模式。其中,single-primary mode(单写模式) 组内只有一个节点负责写入,读可以从任意一个节点读取,组内数据保持最终一致;multi-primary mode(多写模式),即写会下发到组内所有节点,组内所有节点同时可读,也是能够保证组内数据最终一致性。尤其要注意:一个MGR的所有节点必须配置使用同一种模式,不可混用!

1) 单写模式

单写模式group内只有一台节点可写可读,其他节点只可以读。

对于group的部署,需要先跑起primary节点(即那个可写可读的节点,read_only = 0)然后再跑起其他的节点,并把这些节点一一加进group。其他的节点就会自动同步primary节点上面的变化,然后将自己设置为只读模式(read_only = 1)。当primary节点意外宕机或者下线,在满足大多数节点存活的情况下,group内部发起选举,选出下一个可用的读节点,提升为primary节点。primary选举根据group内剩下存活节点的UUID按字典序升序来选择,即剩余存活的节点按UUID字典序排列,然后选择排在最前的节点作为新的primary节点。

单写模式部署(单机多实例)

在一个节点上运行三个MySQL实例,然后把其中一个实例部署为主,其他两个节点部署为从;主写,从读;这种模式适用于实验和自己练习。

特别重要:在切换primary期间,mysql group不会处理应用重连接到新的主,这需要应用层自己或者由另外的中间件层(proxy or router)去保证!

2) 多写模式

group内的所有机器都是primary节点,同时可以进行读写操作,并且数据是最终一致的。

该模式不好的地方在于: 非rpm包安装,目前使用rpm方式没有配置成功;启动还是处于手动方式,可以编写sys V方式启动脚本;性能上面没有做压测。

多机单写部署

在三个节点上分别部署MySQL实例,然后把其中一个实例部署为主节点,其他两个节点部署为从节点;主写,从读; 当主节点OFFLINE(下线)时,两个从节点会选举出一个注节点,但是在应用中的连接IP是不会随着更换的,需要重新进行配置。这种模式在节点故障率比较高的场景不适用,会导致应用找不到数据库。

多机多写部署

在三个节点上分别部署MySQL实例,每个节点都接收写请求;额外可以加入一个节点,测试节点的动态增加。

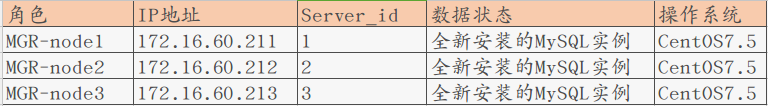

三、基于GTID的组复制分布式集群的环境部署记录

需要清楚知道:MySQL复制组能够以一种自动优先选择的单主模式运行,在某个时间只有一个服务器接受更新 。但是对于更高优先级的用户,组能够以多主模式部署,所有的服务器都能够接受更新,即使它们是同时发生的。组复制中存在着一种内建的组成员关系服务用来保持组的视图一致,并且在任意时间对于组中的所有的服务器都可用。MySQL服务器能够退出或者加入组中,而且视图也会相应的更新。有时服务器可能会意外的退出组(故障),在这种情况下失败检测机制检测这种情况并且告知复制组视图发生了变化,这所有的一切都是自动实现的。

3.1 实验环境

[root@MGR-node1 ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

为了方便实验,关闭所有节点的防火墙

[root@MGR-node1 ~]# systemctl stop firewalld

[root@MGR-node1 ~]# firewall-cmd --state

not running

[root@MGR-node1 ~]# cat /etc/sysconfig/selinux |grep "SELINUX=disabled"

SELINUX=disabled

[root@MGR-node1 ~]# setenforce 0

setenforce: SELinux is disabled

[root@MGR-node1 ~]# getenforce

Disabled

特别要注意一个关键点: 必须保证各个mysql节点的主机名不一致,并且能通过主机名找到各成员!

则必须要在每个节点的/etc/hosts里面做主机名绑定,否则后续将节点加入group组会失败!报错RECOVERING!!

[root@MGR-node1 ~]# cat /etc/hosts

........

172.16.60.211 MGR-node1

172.16.60.212 MGR-node2

172.16.60.213 MGR-node33.2 安装Mysql5.7

在三个mysql节点机上使用yum方式安装Mysql5.7,参考:https://www.cnblogs.com/kevingrace/p/8340690.html

安装MySQL yum资源库

[root@MGR-node1 ~]# yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

安装MySQL 5.7

[root@MGR-node1 ~]# yum install -y mysql-community-server

启动MySQL服务器和MySQL的自动启动

[root@MGR-node1 ~]# systemctl start mysqld.service

[root@MGR-node1 ~]# systemctl enable mysqld.service

设置登录密码

由于MySQL从5.7开始不允许首次安装后使用空密码进行登录!为了加强安全性,系统会随机生成一个密码以供管理员首次登录使用,

这个密码记录在/var/log/mysqld.log文件中,使用下面的命令可以查看此密码:

[root@MGR-node1 ~]# cat /var/log/mysqld.log|grep ''A temporary password''

2019-01-11T05:53:17.824073Z 1 [Note] A temporary password is generated for root@localhost: TaN.k:*Qw2xs

使用上面查看的密码TaN.k:*Qw2xs 登录mysql,并重置密码为123456

[root@MGR-node1 ~]# mysql -p #输入默认的密码:TaN.k:*Qw2xs

.............

mysql> set global validate_password_policy=0;

Query OK, 0 rows affected (0.00 sec)

mysql> set global validate_password_length=1;

Query OK, 0 rows affected (0.00 sec)

mysql> set password=password("123456");

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

查看mysql版本

[root@MGR-node1 ~]# mysql -p123456

........

mysql> select version();

+-----------+

| version() |

+-----------+

| 5.7.24 |

+-----------+

1 row in set (0.00 sec)

=====================================================================

温馨提示

mysql5.7通过上面默认安装后,执行语句可能会报错:

ERROR 1819 (HY000): Your password does not satisfy the current policy requirements

这个报错与Mysql 密码安全策略validate_password_policy的值有关,validate_password_policy可以取0、1、2三个值:

解决办法:

set global validate_password_policy=0;

set global validate_password_length=1;3.3 MGR组复制配置 (本案例采用MGR多写模式)

第一个节点MGR-node1的配置

先给这组MGR起个组名,组名可以随便起,但是不能使用主机的GTID!

通过节点的uuid作为loose-group_replication_group_name的组名,并且每个节点的这个组名必须一样!

这里使用MGR-node1节点mysql里的uuid作为组名

mysql> select uuid();

+--------------------------------------+

| uuid() |

+--------------------------------------+

| 5db40c3c-180c-11e9-afbf-005056ac6820 |

+--------------------------------------+

1 row in set (0.00 sec)

[root@MGR-node1 ~]# cp /etc/my.cnf /etc/my.cnf.bak

[root@MGR-node1 ~]# >/etc/my.cnf

[root@MGR-node1 ~]# vim /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

#GTID:

server_id = 1

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

#binlog

log_bin = mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

#relay log

skip_slave_start = 1

transaction_write_set_extraction=XXHASH64 #以便在server收集写集合的同时将其记录到二进制日志。写集合基于每行的主键,并且是行更改后的唯一标识此标识将用于检测冲突。

loose-group_replication_group_name="5db40c3c-180c-11e9-afbf-005056ac6820" #组的名字可以随便起,但不能用主机的GTID! 所有节点的这个组名必须保持一致!

loose-group_replication_start_on_boot=off #为了避免每次启动自动引导具有相同名称的第二个组,所以设置为OFF。

loose-group_replication_local_address= "172.16.60.211:24901"

loose-group_replication_group_seeds= "172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_single_primary_mode=off #关闭单主模式的参数(本例测试时多主模式,所以关闭该项)

loose-group_replication_enforce_update_everywhere_checks=on #开启多主模式的参数

loose-group_replication_ip_whitelist="172.16.60.0/24,127.0.0.1/8" # 允许加入组复制的客户机来源的ip白名单

===============================================================================

特别提示:

把MGR-node1的 /etc/my.cnf 文件配置好后,直接拷贝给另外的两个节点MGR-node2、MGR-node3

然后再在其他两个节点上修改server_id和loose-group_replication_local_address即可,其他都不动!

[root@MGR-node1 ~]# rsync -e "ssh -p22" -avpgolr /etc/my.cnf root@172.16.60.212:/etc/

[root@MGR-node1 ~]# rsync -e "ssh -p22" -avpgolr /etc/my.cnf root@172.16.60.213:/etc/

===============================================================================

配置完成后,要记得重启mysqld服务 (重启之前,先情况mysqld服务日志)

[root@MGR-node1 ~]# >/var/log/mysqld.log

[root@MGR-node1 ~]# systemctl restart mysqld

查看mysql日志

[root@MGR-node1 ~]# grep password /var/log/mysqld.log

2019-01-14T15:19:02.847387Z 0 [Note] Shutting down plugin ''validate_password''

2019-01-14T15:19:04.121045Z 0 [Note] Shutting down plugin ''sha256_password''

2019-01-14T15:19:04.121048Z 0 [Note] Shutting down plugin ''mysql_native_password''

登录mysql进行相关设置操作

[root@MGR-node1 ~]# mysql -p123456

............

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

mysql> SET SQL_LOG_BIN=0; #即不记录二进制日志

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_slave@''%'' IDENTIFIED BY ''slave@123'';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

mysql> reset master;

Query OK, 0 rows affected (0.11 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER=''rpl_slave'', MASTER_PASSWORD=''slave@123'' FOR CHANNEL ''group_replication_recovery'';

Query OK, 0 rows affected, 2 warnings (0.29 sec)

mysql> INSTALL PLUGIN group_replication SONAME ''group_replication.so''; #安装复制组插件

Query OK, 0 rows affected (0.04 sec)

mysql> SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

| binlog | ACTIVE | STORAGE ENGINE | NULL | GPL |

| mysql_native_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| sha256_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| CSV | ACTIVE | STORAGE ENGINE | NULL | GPL |

| MEMORY | ACTIVE | STORAGE ENGINE | NULL | GPL |

| InnoDB | ACTIVE | STORAGE ENGINE | NULL | GPL |

| INNODB_TRX | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_LOCKS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_LOCK_WAITS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMPMEM | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMPMEM_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_PER_INDEX | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_PER_INDEX_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_PAGE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_PAGE_LRU | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_POOL_STATS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_TEMP_TABLE_INFO | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_METRICS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_DEFAULT_STOPWORD | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_DELETED | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_BEING_DELETED | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_CONFIG | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_INDEX_CACHE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_INDEX_TABLE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLESTATS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_INDEXES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_COLUMNS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FIELDS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FOREIGN | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FOREIGN_COLS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLESPACES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_DATAFILES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_VIRTUAL | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| MyISAM | ACTIVE | STORAGE ENGINE | NULL | GPL |

| MRG_MYISAM | ACTIVE | STORAGE ENGINE | NULL | GPL |

| PERFORMANCE_SCHEMA | ACTIVE | STORAGE ENGINE | NULL | GPL |

| ARCHIVE | ACTIVE | STORAGE ENGINE | NULL | GPL |

| BLACKHOLE | ACTIVE | STORAGE ENGINE | NULL | GPL |

| FEDERATED | DISABLED | STORAGE ENGINE | NULL | GPL |

| partition | ACTIVE | STORAGE ENGINE | NULL | GPL |

| ngram | ACTIVE | FTPARSER | NULL | GPL |

| rpl_semi_sync_master | ACTIVE | REPLICATION | semisync_master.so | GPL |

| validate_password | ACTIVE | VALIDATE PASSWORD | validate_password.so | GPL |

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

47 rows in set (0.00 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=ON; #只在第一个节点执行这个步骤

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

ERROR 3096 (HY000): The START GROUP_REPLICATION command failed as there was an error when initializing the group communication layer.

如上出现如上错误,则需要先stop之后做才可以

mysql> STOP GROUP_REPLICATION;

Query OK, 0 rows affected (0.00 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (2.14 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Query OK, 0 rows affected (0.00 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 317e2aad-1565-11e9-9c2e-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

1 row in set (0.00 sec)

比如要保证上面的group_replication_applier的状态为"ONLINE"才对!

创建一个测试库

mysql> CREATE DATABASE kevin CHARACTER SET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.03 sec)

mysql> use kevin;

Database changed

mysql> create table if not exists haha (id int(10) PRIMARY KEY AUTO_INCREMENT,name varchar(50) NOT NULL);

Query OK, 0 rows affected (0.24 sec)

mysql> insert into kevin.haha values(1,"wangshibo"),(2,"guohuihui"),(3,"yangyang"),(4,"shikui");

Query OK, 4 rows affected (0.07 sec)

Records: 4 Duplicates: 0 Warnings: 0

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)第二个节点MGR-node2的配置

配置MGR-node2节点的my.cnf文件

只需要修改 server_id 和 loose-group_replication_local_address的配置即可 (和MGR-node1的my.cnf文件配置相比较)

[root@MGR-node2 ~]# cat /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

#GTID:

server_id = 2

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

#binlog

log_bin = mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

#relay log

skip_slave_start = 1

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="5db40c3c-180c-11e9-afbf-005056ac6820"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "172.16.60.212:24901"

loose-group_replication_group_seeds= "172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_single_primary_mode=off

loose-group_replication_enforce_update_everywhere_checks=on

loose-group_replication_ip_whitelist="172.16.60.0/24,127.0.0.1/8"

配置完成后,要记得重启mysqld服务 (重启之前,先情况mysqld服务日志)

[root@MGR-node2 ~]# >/var/log/mysqld.log

[root@MGR-node2 ~]# systemctl restart mysqld

查看mysql日志

[root@MGR-node2 ~]# grep password /var/log/mysqld.log

2019-01-14T15:53:14.293490Z 0 [Note] Shutting down plugin ''validate_password''

2019-01-14T15:53:16.144077Z 0 [Note] Shutting down plugin ''sha256_password''

2019-01-14T15:53:16.144080Z 0 [Note] Shutting down plugin ''mysql_native_password''

登录mysql进行相关设置操作

[root@MGR-node2 ~]# mysql -p123456

.........

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_slave@''%'' IDENTIFIED BY ''slave@123'';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

mysql> reset master;

Query OK, 0 rows affected (0.10 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER=''rpl_slave'', MASTER_PASSWORD=''slave@123'' FOR CHANNEL ''group_replication_recovery'';

Query OK, 0 rows affected, 2 warnings (0.21 sec)

mysql> INSTALL PLUGIN group_replication SONAME ''group_replication.so'';

Query OK, 0 rows affected (0.04 sec)

mysql> SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

| binlog | ACTIVE | STORAGE ENGINE | NULL | GPL |

| mysql_native_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| sha256_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| CSV | ACTIVE | STORAGE ENGINE | NULL | GPL |

| MEMORY | ACTIVE | STORAGE ENGINE | NULL | GPL |

| InnoDB | ACTIVE | STORAGE ENGINE | NULL | GPL |

| INNODB_TRX | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_LOCKS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_LOCK_WAITS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMPMEM | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMPMEM_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_PER_INDEX | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_PER_INDEX_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_PAGE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_PAGE_LRU | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_POOL_STATS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_TEMP_TABLE_INFO | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_METRICS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_DEFAULT_STOPWORD | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_DELETED | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_BEING_DELETED | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_CONFIG | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_INDEX_CACHE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_INDEX_TABLE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLESTATS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_INDEXES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_COLUMNS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FIELDS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FOREIGN | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FOREIGN_COLS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLESPACES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_DATAFILES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_VIRTUAL | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| MyISAM | ACTIVE | STORAGE ENGINE | NULL | GPL |

| MRG_MYISAM | ACTIVE | STORAGE ENGINE | NULL | GPL |

| PERFORMANCE_SCHEMA | ACTIVE | STORAGE ENGINE | NULL | GPL |

| ARCHIVE | ACTIVE | STORAGE ENGINE | NULL | GPL |

| BLACKHOLE | ACTIVE | STORAGE ENGINE | NULL | GPL |

| FEDERATED | DISABLED | STORAGE ENGINE | NULL | GPL |

| partition | ACTIVE | STORAGE ENGINE | NULL | GPL |

| ngram | ACTIVE | FTPARSER | NULL | GPL |

| rpl_semi_sync_slave | ACTIVE | REPLICATION | semisync_slave.so | GPL |

| validate_password | ACTIVE | VALIDATE PASSWORD | validate_password.so | GPL |

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

47 rows in set (0.00 sec)

这里只需要执行这一步即可!

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (4.58 sec)

查看组内情况,发现MGR-node2已经成功加入这个组内了。

注意:这里一定要将三个节点的/etc/hosts文件里绑定主机名,否则这里添加组的时候会一直报错:RECOVERING (必须要是ONLINE才行)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 317e2aad-1565-11e9-9c2e-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

2 rows in set (0.00 sec)

查看下,发现已经将MGR-node1节点添加的数据同步过来了

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| kevin |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)第三个节点MGR-node3的配置

配置MGR-node3节点的my.cnf文件

只需要修改 server_id 和 loose-group_replication_local_address的配置即可 (和MGR-node1的my.cnf文件配置相比较)

[root@MGR-node3 ~]# vim /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

#GTID:

server_id = 3

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

#binlog

log_bin = mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

#relay log

skip_slave_start = 1

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="5db40c3c-180c-11e9-afbf-005056ac6820"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "172.16.60.213:24901"

loose-group_replication_group_seeds= "172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_single_primary_mode=off

loose-group_replication_enforce_update_everywhere_checks=on

loose-group_replication_ip_whitelist="172.16.60.0/24,127.0.0.1/8"

配置完成后,要记得重启mysqld服务 (重启之前,先情况mysqld服务日志)

[root@MGR-node3 ~]# >/var/log/mysqld.log

[root@MGR-node3 ~]# systemctl restart mysqld

查看mysql日志

[root@MGR-node3 ~]# grep password /var/log/mysqld.log

2019-01-14T16:40:15.251663Z 0 [Note] Shutting down plugin ''validate_password''

2019-01-14T16:40:16.997360Z 0 [Note] Shutting down plugin ''sha256_password''

2019-01-14T16:40:16.997363Z 0 [Note] Shutting down plugin ''mysql_native_password''

登录mysql进行相关设置操作

[root@MGR-node3 ~]# mysql -p123456

..........

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_slave@''%'' IDENTIFIED BY ''slave@123'';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

mysql> reset master;

Query OK, 0 rows affected (0.12 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER=''rpl_slave'', MASTER_PASSWORD=''slave@123'' FOR CHANNEL ''group_replication_recovery'';

Query OK, 0 rows affected, 2 warnings (0.29 sec)

mysql> INSTALL PLUGIN group_replication SONAME ''group_replication.so'';

Query OK, 0 rows affected (0.03 sec)

mysql> SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

| binlog | ACTIVE | STORAGE ENGINE | NULL | GPL |

| mysql_native_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| sha256_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| CSV | ACTIVE | STORAGE ENGINE | NULL | GPL |

| MEMORY | ACTIVE | STORAGE ENGINE | NULL | GPL |

| InnoDB | ACTIVE | STORAGE ENGINE | NULL | GPL |

| INNODB_TRX | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_LOCKS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_LOCK_WAITS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMPMEM | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMPMEM_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_PER_INDEX | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_CMP_PER_INDEX_RESET | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_PAGE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_PAGE_LRU | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_BUFFER_POOL_STATS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_TEMP_TABLE_INFO | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_METRICS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_DEFAULT_STOPWORD | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_DELETED | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_BEING_DELETED | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_CONFIG | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_INDEX_CACHE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_FT_INDEX_TABLE | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLESTATS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_INDEXES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_COLUMNS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FIELDS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FOREIGN | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_FOREIGN_COLS | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_TABLESPACES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_DATAFILES | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| INNODB_SYS_VIRTUAL | ACTIVE | INFORMATION SCHEMA | NULL | GPL |

| MyISAM | ACTIVE | STORAGE ENGINE | NULL | GPL |

| MRG_MYISAM | ACTIVE | STORAGE ENGINE | NULL | GPL |

| PERFORMANCE_SCHEMA | ACTIVE | STORAGE ENGINE | NULL | GPL |

| ARCHIVE | ACTIVE | STORAGE ENGINE | NULL | GPL |

| BLACKHOLE | ACTIVE | STORAGE ENGINE | NULL | GPL |

| FEDERATED | DISABLED | STORAGE ENGINE | NULL | GPL |

| partition | ACTIVE | STORAGE ENGINE | NULL | GPL |

| ngram | ACTIVE | FTPARSER | NULL | GPL |

| rpl_semi_sync_slave | ACTIVE | REPLICATION | semisync_slave.so | GPL |

| validate_password | ACTIVE | VALIDATE PASSWORD | validate_password.so | GPL |

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

47 rows in set (0.00 sec)

这里只需要执行这一步即可!

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (3.45 sec)

查看组内情况,发现MGR-node3已经成功加入这个组内了。

注意:这里一定要将三个节点的/etc/hosts文件里绑定主机名,否则这里添加组的时候会一直报错:RECOVERING (必须要是ONLINE才行)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2658b203-1565-11e9-9f8b-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 317e2aad-1565-11e9-9c2e-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

查看下,发现已经将在其他节点上添加的数据同步过来了

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| kevin |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)组复制数据同步测试

在任意一个节点上执行

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2658b203-1565-11e9-9f8b-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 317e2aad-1565-11e9-9c2e-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

如上,说明已经在MGR-node1、MGR-node2、MGR-node3 三个节点上成功部署了基于GTID的组复制同步环境。

现在在三个节点中的任意一个上面更新数据,那么其他两个节点的数据库都会将新数据同步过去的!

1)在MGR-node1节点数据库更新数据

mysql> delete from kevin.haha where id>2;

Query OK, 2 rows affected (0.14 sec)

接着在MGR-node2、MGR-node3节点数据库查看,发现更新后数据已经同步过来了!

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

+----+-----------+

2 rows in set (0.00 sec)

2)在MGR-node2节点数据库更新数据

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

Query OK, 3 rows affected (0.06 sec)

Records: 3 Duplicates: 0 Warnings: 0

接着在MGR-node1、MGR-node3节点数据库查看,发现更新后数据已经同步过来了!

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 11 | beijing |

| 12 | shanghai |

| 13 | anhui |

+----+-----------+

5 rows in set (0.00 sec)

3)在MGR-node3节点数据库更新数据

mysql> update kevin.haha set id=100 where name="anhui";

Query OK, 1 row affected (0.16 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> delete from kevin.haha where id=12;

Query OK, 1 row affected (0.22 sec)

接着在MGR-node1、MGR-node2节点数据库查看,发现更新后数据已经同步过来了!

mysql> select * from kevin.haha;

+-----+-----------+

| id | name |

+-----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 11 | beijing |

| 100 | anhui |

+-----+-----------+

4 rows in set (0.00 sec)组复制故障测试

当组内的某个节点发生故障时,会自动从将该节点从组内踢出,与其他节点隔离。剩余的节点之间保持主从复制的正常同步关系。当该节点的故障恢复后,只需手动激活组复制即可(即执行"START GROUP_REPLICATION;");

1)比如 MGR-node1的mysql发生故障,比如关闭mysql服务(或者该节点网络故障,其他节点与之通信失败等)

[root@MGR-node1 mysql]# systemctl stop mysqld

[root@MGR-node1 mysql]# ps -ef|grep mysql

root 4662 26047 0 01:02 pts/0 00:00:00 grep --color=auto mysql

[root@MGR-node1 mysql]#

在剩余的两个节点中的任意一个查看

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2658b203-1565-11e9-9f8b-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

2 rows in set (0.00 sec)

如上,在MGR-node1节点的mysql发生故障后,会自动从这个组内踢出,剩余的两个节点的组复制同步关系正常!

在MGR-node3节点更新数据

mysql> update kevin.haha set id=3 where name="anhui";

Query OK, 1 row affected (0.11 sec)

Rows matched: 1 Changed: 1 Warnings: 0

接在在另一个节点MGR-node2上查看,发现更新数据已同步过来

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | anhui |

| 11 | beijing |

+----+-----------+

4 rows in set (0.00 sec)

2)当MGR-node1节点的mysql服务恢复后,是什么情况呢?

[root@MGR-node1 mysql]# systemctl start mysqld

[root@MGR-node1 mysql]# ps -ef|grep mysqld

mysql 4846 1 0 01:04 ? 00:00:00 /usr/sbin/mysqld --daemonize --pid-file=/var/run/mysqld/mysqld.pid

root 4965 26047 0 01:05 pts/0 00:00:00 grep --color=auto mysqld

在剩余的两个节点中的任意一个查看

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2658b203-1565-11e9-9f8b-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

2 rows in set (0.00 sec)

如上发现,MGR-node1节点恢复后,不会自动添加到组内,需要手动激活下该节点的组复制功能

[root@MGR-node1 mysql]# mysql -p123456

...........

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (2.89 sec)

再次查看,就会发现MGR-node1节点已经重新添加到组内了

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2658b203-1565-11e9-9f8b-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 317e2aad-1565-11e9-9c2e-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.01 sec)

MGR-node1节点恢复后,并重新添加到组内后,其他节点更新的数据也会及时同步过来!

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | anhui |

| 11 | beijing |

+----+-----------+

4 rows in set (0.00 sec)

===========================================================================

要是三个节点都发生故障的话,在节点的故障都恢复后,需要手动重新做组复制,操作流程如下:

第一个节点

mysql> reset master;

mysql> SET SQL_LOG_BIN=1;

mysql> CHANGE MASTER TO MASTER_USER=''rpl_slave'', MASTER_PASSWORD=''slave@123'' FOR CHANNEL ''group_replication_recovery'';

mysql> STOP GROUP_REPLICATION;

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

mysql> START GROUP_REPLICATION;

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

mysql> SELECT * FROM performance_schema.replication_group_members;

第二个节点

mysql> reset master;

mysql> SET SQL_LOG_BIN=1;

mysql> CHANGE MASTER TO MASTER_USER=''rpl_slave'', MASTER_PASSWORD=''slave@123'' FOR CHANNEL ''group_replication_recovery'';

mysql> START GROUP_REPLICATION;

mysql> SELECT * FROM performance_schema.replication_group_members;

第三个节点

mysql> reset master;

mysql> SET SQL_LOG_BIN=1;

mysql> CHANGE MASTER TO MASTER_USER=''rpl_slave'', MASTER_PASSWORD=''slave@123'' FOR CHANNEL ''group_replication_recovery'';

mysql> START GROUP_REPLICATION;

mysql> SELECT * FROM performance_schema.replication_group_members;MGR节点由于网络出现异常导致的错误解决方法

MGR组内本来有三个节点,但是MGR-node3节点突然从复制组内踢出去了。

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 3fee1651-1eeb-11e9-a2f2-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 40ecddaf-1eeb-11e9-94bf-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

查看MGR-node3节点日志发现:

[root@MGR-node3 ~]# tail -f /var/log/mysqld.log

.........

2019-01-11T11:17:09.890375Z 0 [ERROR] Plugin group_replication reported: ''Member was expelled from the group due to network failures, changing member status to ERROR.''

错误日志写了是由于网络出现异常导致节点被踢出的组复制,试着重新加入群组:

mysql> start group_replication;

ERROR 3093 (HY000): The START GROUP_REPLICATION command failed since the group is already running.

解决办法:先停掉这个MGR-node3节点的复制重新打开试下

mysql> stop group_replication;

Query OK, 0 rows affected (7.18 sec)

mysql> start group_replication;

Query OK, 0 rows affected (3.32 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 340ed31a-1eeb-11e9-8221-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 3fee1651-1eeb-11e9-a2f2-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 40ecddaf-1eeb-11e9-94bf-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

MGR-node3节点重新恢复到复制组内,查看该节点的数据,发现数据也会同步过来的。

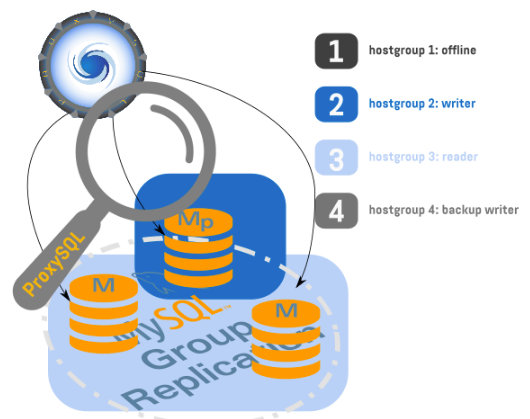

如果是多个节点是因为这个原因被踢出复制组,则采用上面方法进行恢复,至此所有节点都恢复正常!MGR可以使用ProxySQL代理插件,比如三个MGR写节点,一个为当前写节点,另外两个为备份写节点。当前写节点发生故障后,会自会切换到其他正常的备份写节点上。

ProxySQL在MySQL的代理和负载均衡中一直处于领先地位,对MGR提供了很好的原生支持,其中包含了诸如缓存查询,多路复用,流量镜像,读写分离,路由等等的强力功能。在最新的功能性增强中,包含了对MGR的原生支持,不在需要使用第三方脚本进行适配。

最新的增强中,提供了对单写和多写集群组的支持,甚至可以在多写组上指定只由某个成员进行写入操作。

在新版本的ProxySQL中,比如在一个七个节点的多写集群中,指定2组写节点,2组备用写节点,3个只读节点的操作。即ProxySQL虽然识别出来所有的节点皆为写节点,但只路由写操作到选定的两个写节点(通过Hostgroup的方式),同时将另外两个写节点添加到备用写节点组中,最后三个读节点加入读组。(本案例中的组皆为ProxySQL中的hostgroup含义)。除此之外,ProxySQL还可以限制连接访问集群中超出最大设定落后事务值的慢节点(应该是通过mysql_servers.max_replication_lag来控制)。

基于以上部署的MGR节点环境 (即 3 个master节点的多写集群),以下记录ProxySQL + MGR (多写模式) 配置过程:

在172.16.60.211/212/213 三个节点中的任意一个节点的mysql上执行:

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | a2078290-1f25-11e9-b50e-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | aaf67b46-1f25-11e9-a9a1-005056ac6820 | MGR-node1 | 3306 | ONLINE |

| group_replication_applier | b5df6e60-1f25-11e9-b1bd-005056880888 | MGR-node3 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 11 | beijing |

| 12 | shanghai |

+----+-----------+

4 rows in set (0.00 sec)

可以看出,基于上述部署的MGR环境,3个master节点的多写模式,现在MGR组复制内的三个节点都是ONLINE在线同步状态。现在追加一台节点172.16.60.220作为ProxySQL代理节点,操作记录如下:

1) 准备工作

[root@mysql-proxy ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

[root@mysql-proxy ~]# hostnamectl set-hostname mysql-proxy

[root@mysql-proxy ~]# hostname

mysql-proxy

[root@mysql-proxy ~]# systemctl stop firewalld

[root@mysql-proxy ~]# firewall-cmd --state

not running

[root@mysql-proxy ~]# setenforce 0

[root@mysql-proxy ~]# cat /etc/sysconfig/selinux |grep "SELINUX=disabled"

SELINUX=disabled

[root@mysql-proxy ~]# hostname -I

172.16.60.220- 安装mysql客户端,用于在本机连接到ProxySQL的管理接口

[root@mysql-proxy ~]# vim /etc/yum.repos.d/mariadb.repo

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/10.3.5/centos6-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1

安装mysql-clinet客户端

[root@mysql-proxy ~]# yum install -y MariaDB-client

============================================================================

如果遇到报错:

Error: MariaDB-compat conflicts with 1:mariadb-libs-5.5.60-1.el7_5.x86_64